What is Cronbach's Alpha? - Explained Simply

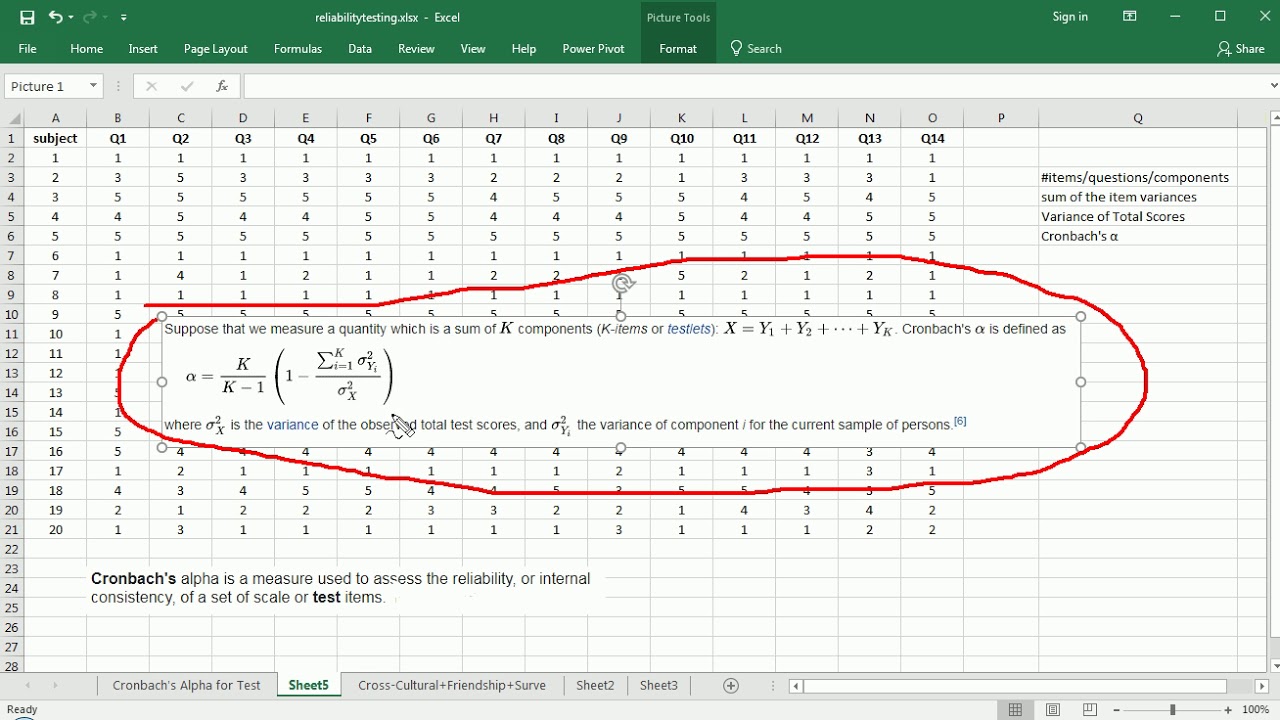

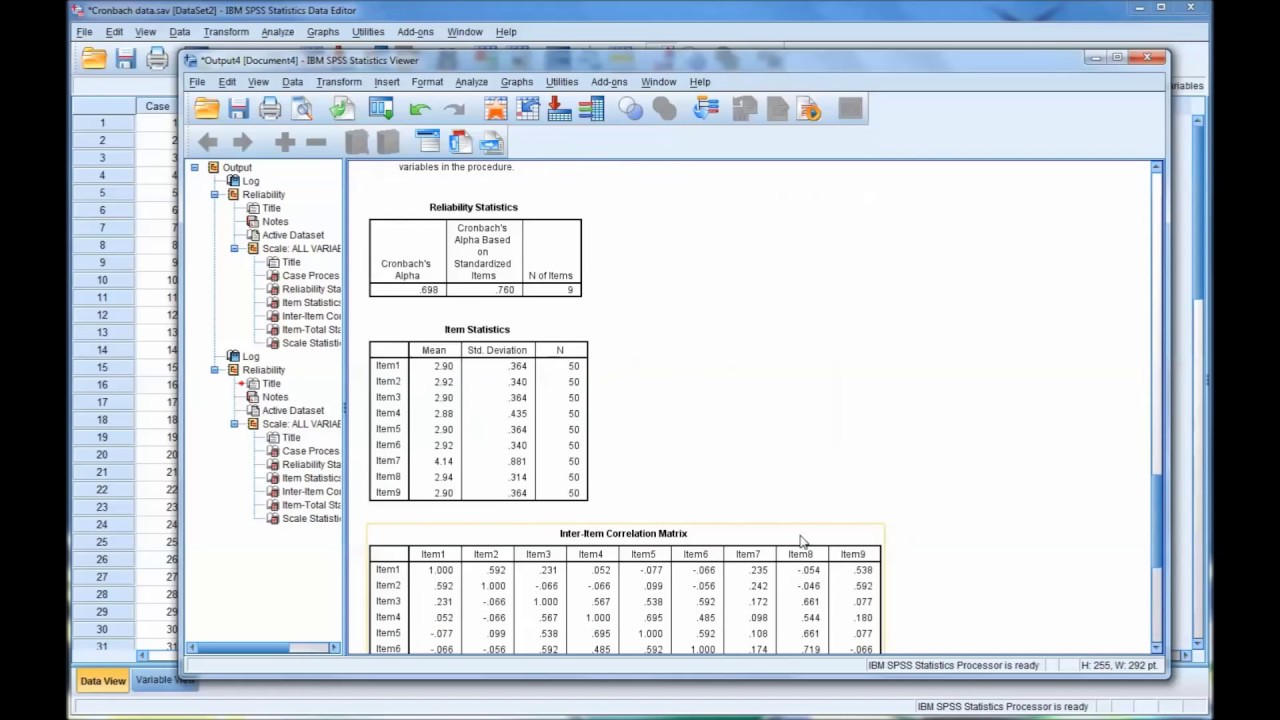

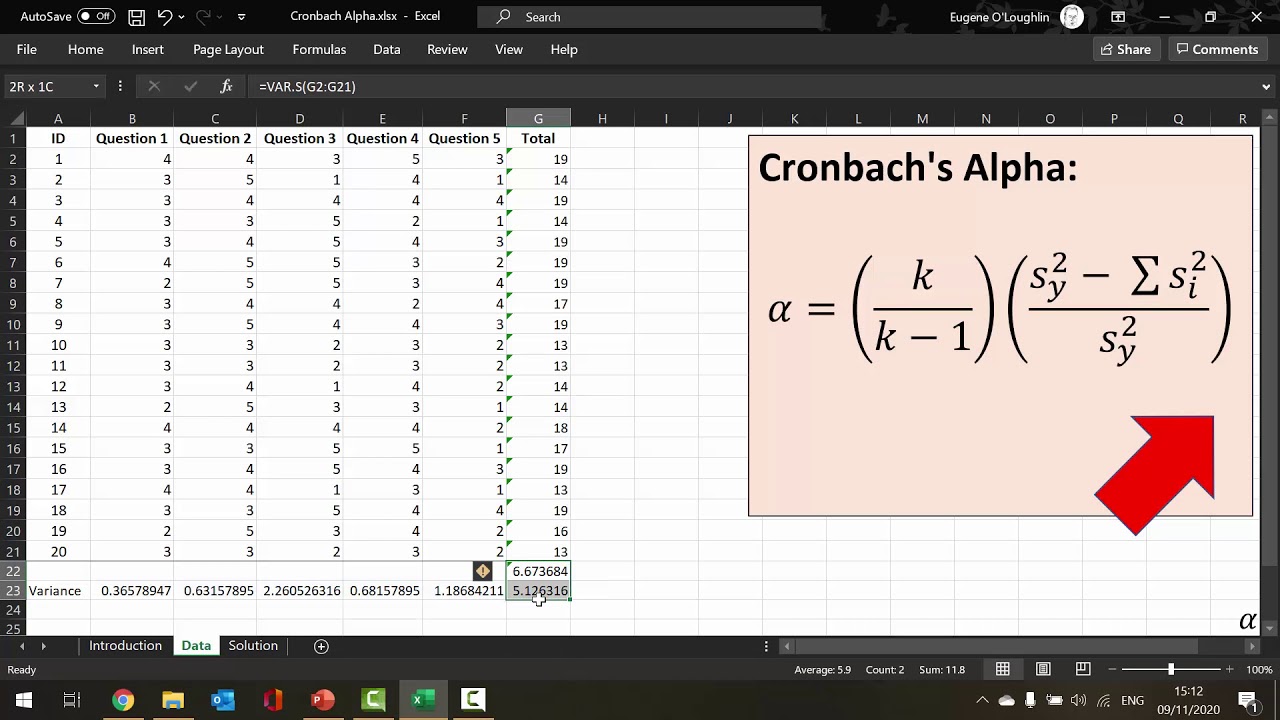

TLDR

Cronbach's alpha, introduced by Lee J. Cronbach in 1951, is a psychometric statistic that estimates the internal consistency reliability of a set of items or variables within a test or questionnaire. It is more general than earlier methods, such as split-half reliability and the Kuder-Richardson statistic, because it can be applied to both dichotomous and continuously scored data, and because it represents the average of all possible split halves rather than one arbitrary split. Cronbach himself preferred the term 'coefficient alpha', but the name 'Cronbach's alpha' has stuck. The statistic normally ranges from 0.0 to 1.0, where 0.0 indicates no consistency and 1.0 indicates perfect consistency. An alpha of 0.70, for example, suggests that 70% of the variance in the scores is reliable, with the remaining 30% being error variance. Cronbach's alpha applies to composite scores, which are sums or averages of two or more scores, rather than to individual item scores.

Takeaways

- 📊 **Cronbach's Alpha Introduction**: Cronbach's alpha, introduced by Lee J. Cronbach in 1951, is a psychometric statistic used to estimate the internal consistency reliability of a set of items or variables.

- 📈 **Generality Over Previous Methods**: Cronbach's alpha is more general than previous methods like split-half reliability and the Kuder-Richardson statistic, as it can be applied to both dichotomous and continuously scored data.

- 🔄 **Average of Split Halves**: It represents the average of all possible split halves, which makes it a more robust measure of internal consistency than choosing a specific split half.

- 📚 **Terminology**: While commonly referred to as 'Cronbach's alpha', the term 'coefficient alpha' is also used and was actually preferred by Cronbach himself.

- 🔍 **Estimate of Reliability**: Cronbach's alpha provides an estimate of reliability, specifically internal consistency reliability, which is crucial for composite scores.

- 📋 **Consistency Over Homogeneity**: It measures how consistently a set of items measures a construct; it is not itself a measure of homogeneity or unidimensionality.

- 🔢 **Range and Interpretation**: The coefficient normally ranges from 0.0 to 1.0, where 0.0 indicates no consistency and 1.0 indicates perfect consistency; an alpha of 0.70 suggests 70% of the variance in scores is reliable.

- ⚖️ **Error Variance**: A higher alpha indicates lower error variance, which is desirable in research as it implies more reliable and consistent measurements.

- 📝 **Relevance to Composite Scores**: Cronbach's alpha is relevant to composite scores, which are sums or averages of two or more scores, rather than individual item scores.

- 🔬 **Research Application**: In research, a high Cronbach's alpha is sought after to ensure good reliability and consistency in the data collected.

- ❗ **Negative Reliability**: A negative reliability estimate is possible but unusual, and it indicates a serious problem with measurement consistency that needs to be addressed.
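
The takeaways above can be made concrete with a short calculation. The following is a minimal sketch, assuming the usual variance form of coefficient alpha: k / (k - 1) times one minus the ratio of summed item variances to the variance of the composite (sum) score. The function name `cronbach_alpha` and the `scores` data are made up for illustration and do not come from the video.

```python
from statistics import variance

def cronbach_alpha(rows):
    """Estimate coefficient alpha.

    rows: one list of item scores per respondent (hypothetical layout).
    """
    k = len(rows[0])                                # number of items
    items = list(zip(*rows))                        # one tuple of scores per item
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(r) for r in rows])    # variance of the composite score
    return (k / (k - 1)) * (1 - item_var_sum / total_var)

# Illustrative data: 6 respondents answering 4 items on a 1-5 scale.
scores = [
    [4, 5, 4, 5],
    [2, 3, 2, 2],
    [5, 5, 4, 4],
    [1, 2, 1, 2],
    [3, 3, 4, 3],
    [4, 4, 5, 5],
]

alpha = cronbach_alpha(scores)
print(f"alpha = {alpha:.3f}")
print(f"share of composite variance treated as error = {1 - alpha:.3f}")
```

Note that the calculation needs the composite (sum) score, which is why alpha describes a set of items taken together rather than any single item.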

Q & A

What is Cronbach's alpha?

-Cronbach's alpha is a psychometric statistic that estimates the internal consistency reliability of a set of items or variables within a test or questionnaire. It was introduced by Lee J. Cronbach in 1951.

Why was Cronbach's alpha considered an improvement over split half reliability?

-Cronbach's alpha is an improvement because it represents the average of all possible split halves, not just one, and it can be used for both dichotomous and continuously scored data, making it more general and versatile.
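
The "average of all possible split halves" idea can be checked numerically. The sketch below assumes an even number of items split into equal halves, and it uses the variance-based split-half coefficient, 2 × (1 − (var A + var B) / var total), rather than a Spearman-Brown-corrected correlation (the two agree only when the halves have equal variances). The helper names and the data are hypothetical.

```python
from itertools import combinations
from statistics import mean, variance

def cronbach_alpha(rows):
    k = len(rows[0])
    item_var_sum = sum(variance(col) for col in zip(*rows))
    total_var = variance([sum(r) for r in rows])
    return (k / (k - 1)) * (1 - item_var_sum / total_var)

def split_half_coefficients(rows):
    """Variance-based split-half coefficient for every equal split (even k only)."""
    k = len(rows[0])
    total_var = variance([sum(r) for r in rows])
    coeffs = []
    for half_a in combinations(range(k), k // 2):
        if 0 not in half_a:
            continue  # keep item 0 in half A so each split is counted once
        half_b = [i for i in range(k) if i not in half_a]
        a = [sum(r[i] for i in half_a) for r in rows]  # half-test A scores
        b = [sum(r[i] for i in half_b) for r in rows]  # half-test B scores
        coeffs.append(2 * (1 - (variance(a) + variance(b)) / total_var))
    return coeffs

# Illustrative data: 6 respondents, 4 items.
scores = [
    [4, 5, 4, 5],
    [2, 3, 2, 2],
    [5, 5, 4, 4],
    [1, 2, 1, 2],
    [3, 3, 4, 3],
    [4, 4, 5, 5],
]

halves = split_half_coefficients(scores)
print("split-half coefficients:", [round(c, 3) for c in halves])
print("mean of split halves:   ", round(mean(halves), 6))
print("Cronbach's alpha:       ", round(cronbach_alpha(scores), 6))
```

Each individual split gives a slightly different answer, which is the limitation of picking one split; their mean matches alpha.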

What is the difference between Cronbach's alpha and coefficient alpha?

-There is no difference; they both refer to the same psychometric statistic. However, Cronbach himself preferred the term 'coefficient alpha' to acknowledge the contributions of previous works on reliability.

What does a Cronbach's alpha value of 0.0 signify?

-A Cronbach's alpha value of 0.0 signifies that there is no consistency in the measurement, indicating that the items or variables in the test do not correlate well with each other.

What does a Cronbach's alpha value of 1.0 mean?

-A Cronbach's alpha value of 1.0 means there is perfect consistency in the measurement, suggesting that all items or variables in the test are highly correlated with each other.

What does it mean if 70% of the variance in the scores is reliable?

-It means that 70% of the variation in the scores can be attributed to true score variance, and the remaining 30% is considered error variance, which is undesirable in research.

Why is internal consistency important in measurement?

-Internal consistency is important because it indicates how well a set of items or variables measures a single, unified concept or trait. High internal consistency suggests that the items are all measuring the same thing.

What are composite scores?

-Composite scores are the sum or average of two or more scores. They are used when analyzing data based on combined items or variables within a test or questionnaire.

Why is Cronbach's alpha more relevant to composite scores than individual item scores?

-Cronbach's alpha is more relevant to composite scores because it assesses the internal consistency of a set of items as a whole, rather than the reliability of each individual item.

Can Cronbach's alpha be negative?

-Technically, Cronbach's alpha can be negative, but it is rare and indicates very poor internal consistency, suggesting that the items or variables are not measuring the same concept.
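
A small numeric illustration of a negative alpha follows. It is a sketch built on one assumed scenario, a two-item scale where the second item runs in the opposite direction and was not reverse-scored; this is only one possible cause, and the data and the names `raw`, `recoded`, and `cronbach_alpha` are invented for the example.

```python
from statistics import variance

def cronbach_alpha(rows):
    k = len(rows[0])
    item_var_sum = sum(variance(col) for col in zip(*rows))
    total_var = variance([sum(r) for r in rows])
    return (k / (k - 1)) * (1 - item_var_sum / total_var)

# Hypothetical 1-5 scale: item 2 is worded in the opposite direction to item 1.
raw = [
    [1, 5],
    [2, 3],
    [3, 4],
    [4, 1],
    [5, 2],
]

# Reverse-score item 2 (6 - x on a 1-5 scale) so both items point the same way.
recoded = [[a, 6 - b] for a, b in raw]

alpha_raw = cronbach_alpha(raw)
alpha_recoded = cronbach_alpha(recoded)
print(f"alpha before recoding: {alpha_raw:.3f}")
print(f"alpha after recoding:  {alpha_recoded:.3f}")
```

When items correlate negatively, the summed item variances exceed the variance of the composite score, which pushes the estimate below zero.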

What is the fundamental concept behind all reliability estimates?

-The fundamental concept behind all reliability estimates is consistency. Reliability is about how consistently a measure reflects the true score or the underlying construct it is intended to measure.

Why do researchers prefer lower error variance in their data?

-Researchers prefer lower error variance because it means less of the observed variation in scores is measurement error, so the scores are more reliable and the conclusions drawn from them are more accurate.

Outlines

📊 Introduction to Cronbach's Alpha

The video begins with an introduction to Cronbach's Alpha, a psychometric statistic developed by Lee J. Cronbach in 1951. It is explained as an estimate of internal consistency reliability and as an improvement over previous methods like split-half reliability and the Kuder-Richardson statistic. Unlike these predecessors, Cronbach's Alpha can be applied to both dichotomous and continuously scored data. Cronbach himself preferred the term 'coefficient alpha', but 'Cronbach's Alpha' is the more commonly used term. The statistic is used to estimate the consistency of a set of items, with a score close to 1.0 indicating high consistency and a score of 0.0 indicating no consistency at all. The video emphasizes that Cronbach's Alpha is not itself a measure of homogeneity or unidimensionality; it is a measure of how consistently the items in a test or questionnaire measure a construct.

Keywords

💡Cronbach's Alpha

💡Internal Consistency

💡Split Half Reliability

💡Coefficient Alpha

💡Dichotomous Items

💡Continuously Scored Data

💡Composite Scores

💡Reliability

💡Validity

💡Error Variance

💡Unidimensionality

Highlights

Cronbach's alpha is a psychometric statistic introduced by Lee J. Cronbach in 1951.

Prior to Cronbach's alpha, split half reliability estimates were limited by the choice of how to split the data.

Cronbach's alpha is more general than previous methods as it represents the average of all possible split halves.

It can be used for both dichotomous and continuously scored data or variables.
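
For dichotomous (0/1) items, alpha reduces to the Kuder-Richardson formula commonly labelled KR-20, where each item's variance is p × (1 − p) and p is the proportion of respondents scoring 1. The sketch below checks that equivalence on made-up data. It assumes population variances (`pvariance`) throughout, because p × (1 − p) is a population variance; alpha itself comes out the same with sample variances as long as items and totals use the same kind. The function names and the `answers` data are hypothetical.

```python
from statistics import pvariance

def cronbach_alpha(rows):
    """Coefficient alpha using population variances for items and totals."""
    k = len(rows[0])
    item_var_sum = sum(pvariance(col) for col in zip(*rows))
    total_var = pvariance([sum(r) for r in rows])
    return (k / (k - 1)) * (1 - item_var_sum / total_var)

def kr20(rows):
    """Kuder-Richardson formula 20 for items scored 0 or 1."""
    n, k = len(rows), len(rows[0])
    p = [sum(col) / n for col in zip(*rows)]   # proportion scoring 1 per item
    pq_sum = sum(pi * (1 - pi) for pi in p)    # summed item variances p * q
    total_var = pvariance([sum(r) for r in rows])
    return (k / (k - 1)) * (1 - pq_sum / total_var)

# Illustrative right/wrong answers: 6 respondents, 4 items.
answers = [
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [0, 0, 0, 0],
    [1, 1, 1, 1],
    [1, 0, 1, 1],
    [0, 1, 0, 0],
]

print("KR-20:", round(kr20(answers), 6))
print("alpha:", round(cronbach_alpha(answers), 6))
```

The same `cronbach_alpha` function also accepts continuously scored items, which KR-20 cannot handle.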

Cronbach's alpha is often used interchangeably with the term 'coefficient alpha'.

Cronbach himself preferred the term 'coefficient alpha', but 'Cronbach's alpha' is more commonly used.

Cronbach's alpha is an estimate of reliability, specifically internal consistency reliability.

The concept of consistency is central to understanding Cronbach's alpha.

Cronbach's alpha is not a measure of homogeneity or unidimensionality.

A Cronbach's alpha coefficient normally ranges from 0.0 to 1.0, with 1.0 indicating perfect consistency.

A negative reliability estimate is theoretically possible but indicates serious issues with the measurement.

An alpha of 0.70 suggests that 70% of the variance in the scores is reliable.

Research aims for high reliability and low error variance in data.

Cronbach's alpha is relevant to composite scores, which are sums or averages of two or more scores.

Internal consistency reliability is applicable to composite scores rather than individual item scores.

The video provides an example to illustrate the meaning of consistency in the context of Cronbach's alpha.
