Cronbach's alpha or Coefficient alpha in simple language - DU Professor

TLDR

The video script discusses Cronbach's Alpha, a statistical measure used to assess the reliability of a set of items or test questions designed to measure the same concept or latent variable. It is crucial for ensuring the quality of research, as it indicates the consistency of a measurement method. The video explains that reliability and validity, while related, are distinct concepts: reliability focuses on consistency, whereas validity concerns accuracy. Cronbach's Alpha is particularly useful for Likert scale surveys and assumes unidimensionality of the items. The formula for calculating Cronbach's Alpha is presented, along with the interpretation of its values: an alpha greater than 0.7 is generally acceptable, 0.8 to 0.9 is good, and 0.9 or above is excellent. The video also addresses the sensitivity of Cronbach's Alpha to the number of items and recommends factor analysis to check unidimensionality. It concludes with an invitation for viewers to engage with the content through likes, shares, and comments.

Takeaways

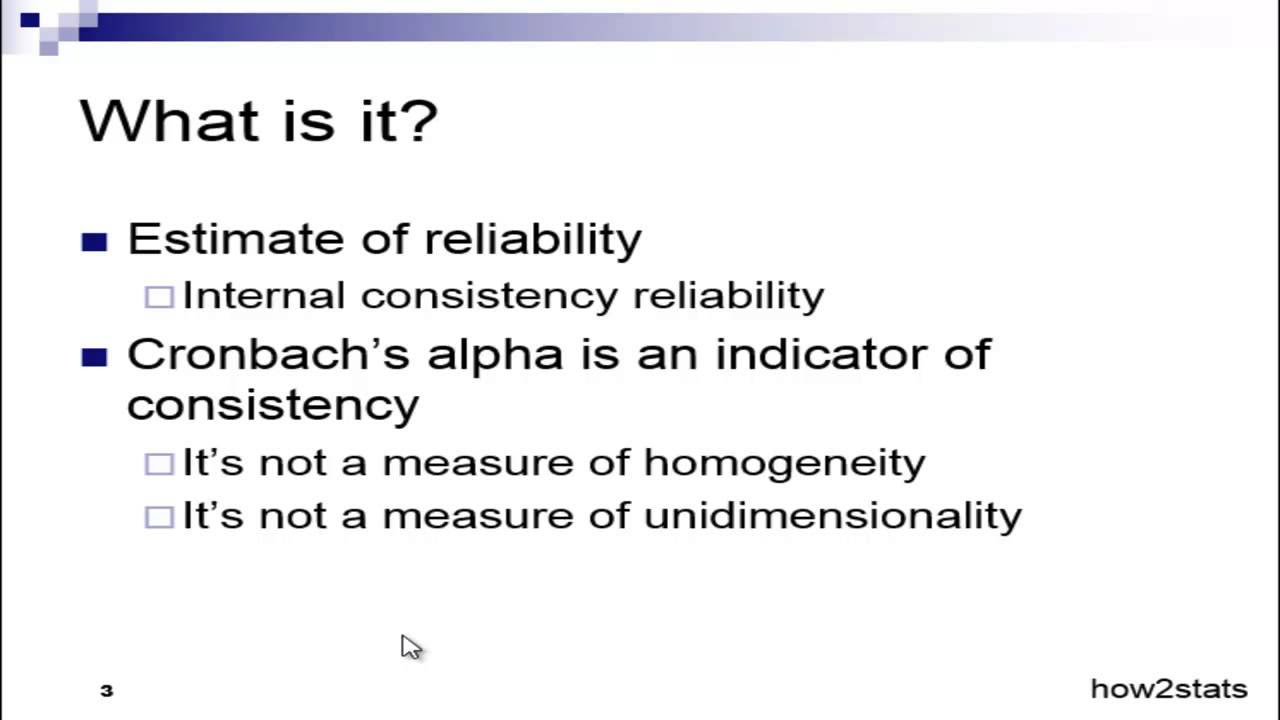

- 📊 **Cronbach's Alpha Importance**: Cronbach's alpha is a measure of internal consistency reliability, widely used in research to assess the quality of a set of scales or test items.

- 🔍 **Reliability & Validity**: Reliability is about consistency in measurement, while validity concerns accuracy. They are related but distinct concepts, with reliability not guaranteeing validity.

- 📚 **Types of Reliability**: Three common types of reliability are test-retest, inter-rater, and internal consistency, each with its own method of calculation and potential limitations.

- ⏱️ **Test-Retest Limitations**: Test-retest reliability can be affected by participant fatigue, boredom, or changes in perception due to events occurring between measurements.

- 👥 **Inter-Rater Reliability**: This type of reliability involves different observers measuring the same subject, so it can be undermined by the observers' subjective judgments.

- 📝 **Internal Consistency**: Internal consistency checks if different parts of a test designed to measure the same thing yield consistent results.

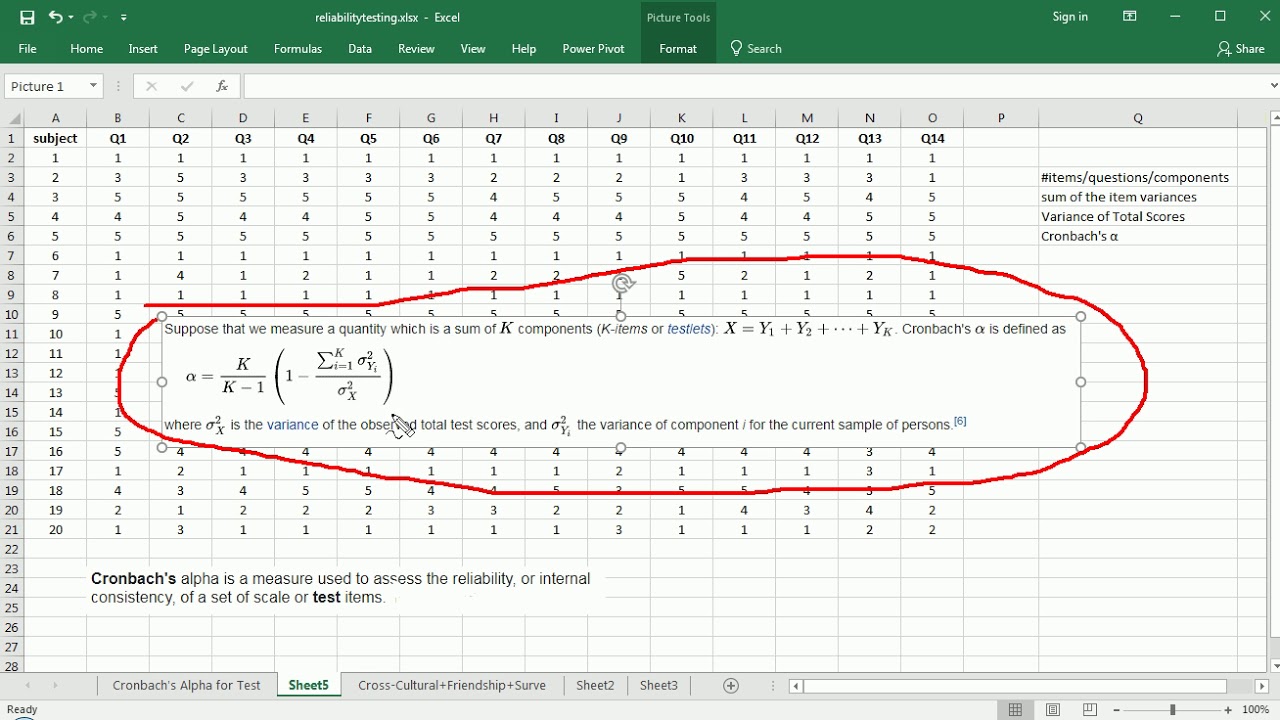

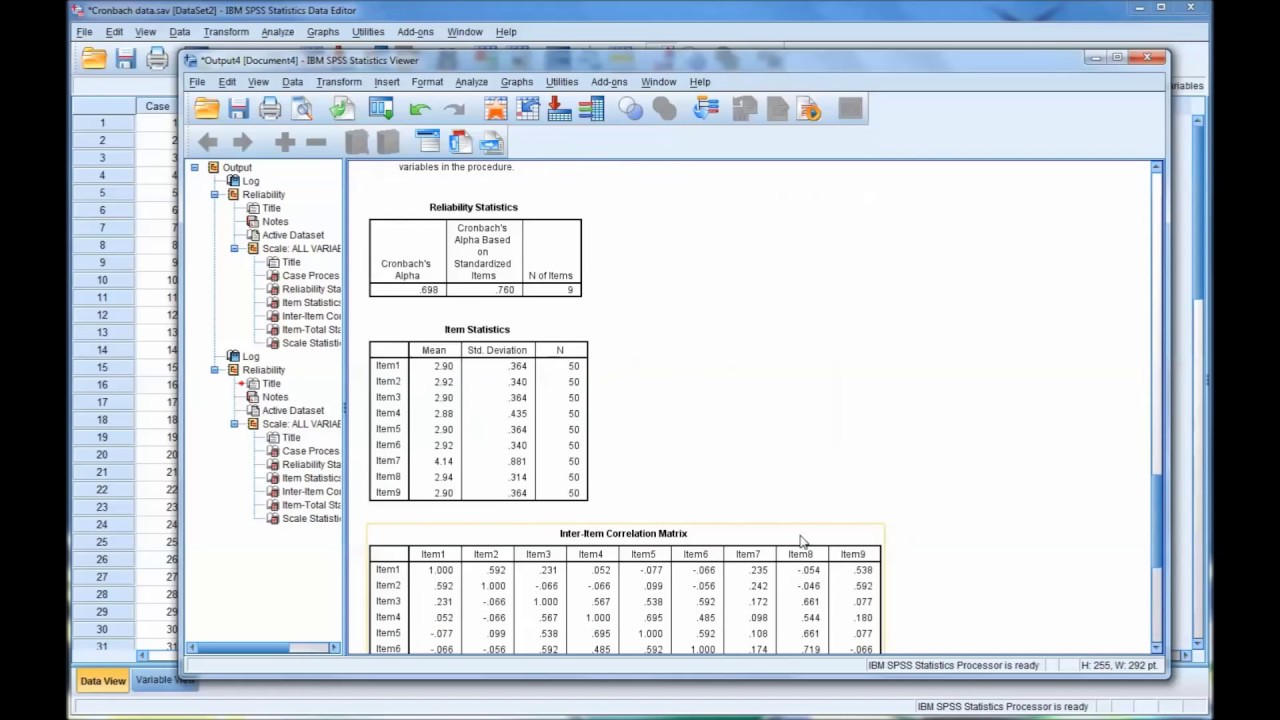

- 📊 **Cronbach's Alpha Formula**: The formula for Cronbach's alpha is based on the average covariance between item pairs and the average item variance, and it assumes a unidimensional construct.

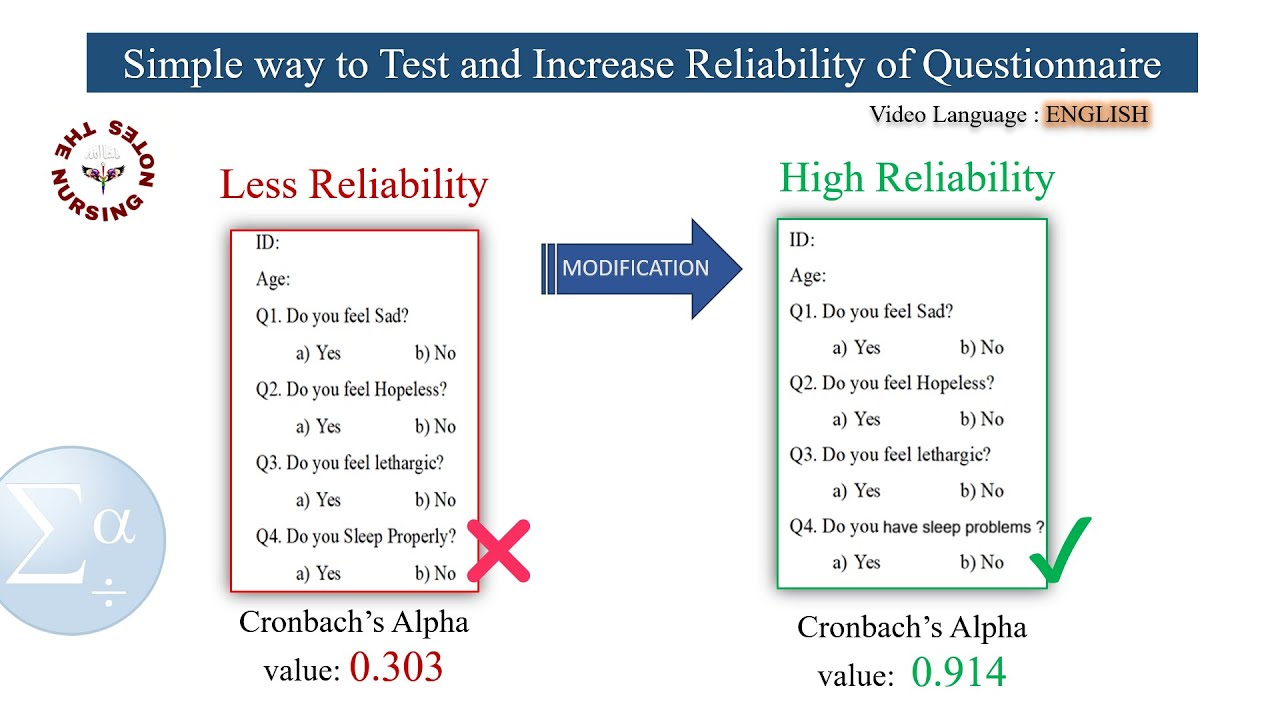

- 🔢 **Interpreting Alpha Values**: A Cronbach's alpha value above 0.7 is generally acceptable, above 0.8 is good, and 0.9 or above is excellent, though these benchmarks should be considered with caution.

- ⚖️ **Sensitivity to Item Count**: Cronbach's alpha is sensitive to the number of items; a larger number can result in a higher alpha, which doesn't necessarily indicate a better scale.

- 🧐 **Unidimensionality Assumption**: Cronbach's alpha assumes that all items in a test measure a single latent variable. Factor analysis can be used to verify this assumption.

- ✂️ **Breaking Down Tests**: If a test measures more than one dimension, it should be split into parts, each measuring a different latent variable.

- 📈 **Improving Reliability**: Understanding internal consistency and unidimensionality is crucial for using Cronbach's alpha effectively and improving the reliability of a scale.

Q & A

What is Cronbach's alpha?

-Cronbach's alpha, also known as coefficient alpha, is a measure used to assess the internal consistency or reliability of a set of items or scales within a questionnaire or test. It was developed by Lee Cronbach in 1951 and is widely used when multiple questions or Likert scale surveys are involved to measure latent variables such as attitudes or personality traits.

What is the significance of reliability in research?

-Reliability is a key factor in evaluating the quality of research. It indicates how well a method, technique, or test measures a particular variable consistently. A reliable measurement method will yield the same results when used under the same circumstances, ensuring that the research findings are consistent and dependable.

How is reliability different from validity in research?

-While both reliability and validity are crucial for assessing the quality of research, they address different aspects. Reliability concerns the consistency of a measure, ensuring that it yields the same results under the same conditions. Validity, on the other hand, pertains to the accuracy of the measure, ensuring that it actually measures what it is intended to measure. A measurement can be reliable without being valid if it consistently measures the wrong thing.
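
The video illustrates this with a thermometer that consistently gives incorrect readings. A minimal Python sketch of that idea, where the true temperature and the five readings are hypothetical: a small spread across repeated readings signals consistency (reliability), while a large offset from the true value signals inaccuracy (lack of validity).

```python
from statistics import mean, pstdev

# Hypothetical data: a room known to be at 20.0 °C, measured five times
# with a thermometer that is miscalibrated by roughly +3 °C.
TRUE_TEMP = 20.0
readings = [23.1, 22.9, 23.0, 23.0, 23.1]

spread = pstdev(readings)          # small spread -> consistent (reliable)
bias = mean(readings) - TRUE_TEMP  # large offset -> inaccurate (not valid)

print(f"spread = {spread:.2f} °C, bias = {bias:+.2f} °C")
```

The readings barely vary, yet every one of them is about 3 °C too high: the instrument is reliable but not valid.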

What are the three types of reliability?

-The three types of reliability are test-retest reliability, inter-rater reliability, and internal consistency. Test-retest reliability checks if the same results are obtained over time. Inter-rater reliability ensures that different observers or raters give the same assessment. Internal consistency verifies that different parts of a test designed to measure the same thing yield consistent results.
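
Test-retest reliability is commonly summarized as the correlation between scores from two administrations of the same test. A minimal Python sketch, assuming hypothetical total scores for six participants and using the Pearson correlation (one common choice; an intraclass correlation is another):

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equally long lists of scores."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

# Hypothetical total scores for the same six participants, tested twice.
time1 = [12, 18, 25, 30, 22, 15]
time2 = [14, 17, 26, 29, 21, 16]

r = pearson_r(time1, time2)
print(f"test-retest r = {r:.3f}")
```

A value close to 1 means participants keep roughly the same rank order between sessions; the limitations discussed below (fatigue, events between sessions) would show up as a lower correlation.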

What is the formula for calculating Cronbach's alpha?

-The formula for Cronbach's alpha is $\alpha = \dfrac{N\,\bar{c}}{\bar{v} + (N-1)\,\bar{c}}$, where $N$ is the number of items, $\bar{c}$ is the average covariance between all pairs of distinct items, and $\bar{v}$ is the average item variance.
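
A minimal Python sketch of the calculation, using hypothetical responses from five people to a four-item Likert scale. It computes alpha from the average inter-item covariance $\bar{c}$ and the average item variance $\bar{v}$ as $N\bar{c}/(\bar{v} + (N-1)\bar{c})$ and, as a cross-check, from the equivalent total-score form $\frac{N}{N-1}\left(1 - \frac{\sum_i \sigma_i^2}{\sigma_X^2}\right)$, where $\sigma_i^2$ is the variance of item $i$ and $\sigma_X^2$ is the variance of respondents' total scores. Sample ($n-1$) variances and covariances are used throughout; the two forms agree as long as the same convention is used in both.

```python
from itertools import combinations

def sample_cov(x, y):
    """Sample covariance (n - 1 denominator); sample_cov(x, x) is the variance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / (n - 1)

def cronbach_alpha(items):
    """items: one list of respondent scores per item (columns, not rows)."""
    n_items = len(items)
    v_bar = sum(sample_cov(col, col) for col in items) / n_items
    pair_covs = [sample_cov(a, b) for a, b in combinations(items, 2)]
    c_bar = sum(pair_covs) / len(pair_covs)
    return n_items * c_bar / (v_bar + (n_items - 1) * c_bar)

def cronbach_alpha_total_variance(items):
    """Equivalent form: N/(N-1) * (1 - sum of item variances / total-score variance)."""
    n_items = len(items)
    totals = [sum(person) for person in zip(*items)]
    item_var_sum = sum(sample_cov(col, col) for col in items)
    return n_items / (n_items - 1) * (1 - item_var_sum / sample_cov(totals, totals))

# Hypothetical 5-point Likert responses; each row is one item, each column one respondent.
items = [
    [4, 5, 3, 2, 4],  # item 1
    [4, 4, 3, 2, 5],  # item 2
    [5, 5, 2, 1, 4],  # item 3
    [3, 4, 3, 2, 4],  # item 4
]

print(f"{cronbach_alpha(items):.3f}")
print(f"{cronbach_alpha_total_variance(items):.3f}")
```

Both functions return the same value for this data, about 0.93.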

What does a Cronbach's alpha value of 0.9 indicate?

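-A Cronbach's alpha value of 0.9 indicates excellent internal consistency: the items are strongly intercorrelated, so the total score measures consistently. On its own it does not show that the scale measures the construct it is intended to measure (that is a question of validity), and a value near the top of the range can also signal redundant items that repeat the same question.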

What are the limitations of test-retest reliability?

-Test-retest reliability can be affected by changes in the participants' conditions or attitudes over time, leading to different responses. Additionally, unexpected events occurring between measurements can alter participants' perceptions, thus affecting the reliability of the results.

How can the subjectivity of raters affect inter-rater reliability?

-Inter-rater reliability can be low if different raters provide different assessments of the same subject or object. This can be due to the subjective criteria used by the raters, which can introduce bias and inconsistency in the measurement process.
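
A simple way to quantify rater agreement is the share of cases on which two raters give the same label. Cohen's kappa, which the video does not discuss but which is widely used, additionally corrects for the agreement expected by chance. A minimal sketch with hypothetical yes/no ratings from two raters:

```python
from collections import Counter

def percent_agreement(r1, r2):
    """Share of cases on which both raters gave the same label."""
    return sum(a == b for a, b in zip(r1, r2)) / len(r1)

def cohens_kappa(r1, r2):
    """Agreement corrected for chance: (p_o - p_e) / (1 - p_e)."""
    n = len(r1)
    p_o = percent_agreement(r1, r2)
    c1, c2 = Counter(r1), Counter(r2)
    # Chance agreement if each rater labelled independently at their own base rates.
    p_e = sum(c1[label] * c2[label] for label in set(c1) | set(c2)) / n ** 2
    return (p_o - p_e) / (1 - p_e)

# Hypothetical judgments of the same eight subjects by two raters.
rater_a = ["yes", "yes", "no", "no", "yes", "no", "yes", "yes"]
rater_b = ["yes", "no", "no", "no", "yes", "no", "yes", "yes"]

agreement = percent_agreement(rater_a, rater_b)
kappa = cohens_kappa(rater_a, rater_b)
print(f"percent agreement = {agreement:.3f}, Cohen's kappa = {kappa:.3f}")
```

Here the raters agree on 7 of 8 cases (0.875), but because half of that agreement would be expected by chance, kappa is lower (0.75).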

What is the assumption underlying Cronbach's alpha?

-Cronbach's alpha assumes that all items in the scale measure the same underlying construct, a property known as unidimensionality. If the scale measures more than one dimension, the alpha value can be misleading and may not accurately reflect the reliability of the scale.
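
A small numeric illustration, assuming a hypothetical correlation matrix in which six items form two clusters of three: items correlate at 0.6 within a cluster and 0 across clusters. For standardized items, alpha depends only on the number of items $N$ and the average inter-item correlation $\bar{r}$, as $\alpha = N\bar{r}/(1 + (N-1)\bar{r})$. The sketch compares the combined six-item scale with one three-item cluster.

```python
from itertools import combinations

def standardized_alpha(corr):
    """Alpha for standardized items: N * r_bar / (1 + (N - 1) * r_bar)."""
    n = len(corr)
    pairs = list(combinations(range(n), 2))
    r_bar = sum(corr[i][j] for i, j in pairs) / len(pairs)  # average inter-item correlation
    return n * r_bar / (1 + (n - 1) * r_bar)

def two_factor_corr(k, within, between):
    """Hypothetical 2k x 2k correlation matrix: two clusters of k items each."""
    n = 2 * k
    return [[1.0 if i == j else (within if (i < k) == (j < k) else between)
             for j in range(n)] for i in range(n)]

corr = two_factor_corr(3, within=0.6, between=0.0)
alpha_all = standardized_alpha(corr)
alpha_sub = standardized_alpha([row[:3] for row in corr[:3]])  # first cluster only

print(f"all six items:        {alpha_all:.3f}")
print(f"one 3-item subscale:  {alpha_sub:.3f}")
```

Here the combined scale gives about 0.65 while each cluster on its own gives about 0.82. The reverse problem also occurs: with ten items per cluster (`two_factor_corr(10, 0.6, 0.0)`), the combined alpha is about 0.89, so a high alpha by itself is not evidence that the items are unidimensional.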

How should one interpret a Cronbach's alpha value?

-Cronbach's alpha usually falls between 0 and 1, although it can be negative when items correlate negatively on average (for example, when reverse-worded items have not been recoded). Values above 0.7 are generally acceptable, values between 0.8 and 0.9 are considered good, and values of 0.9 or above are considered excellent. However, the number of items in the scale matters, because adding items can inflate alpha without improving the scale.
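
The rule of thumb above can be written as a small lookup. A minimal sketch: the thresholds follow the video, how exact boundary values such as 0.8 are classified is an assumption, and the labels are rough guides rather than hard cut-offs.

```python
def interpret_alpha(alpha):
    """Rough rule-of-thumb label for a Cronbach's alpha value (not a hard cut-off)."""
    if alpha < 0:
        # One common cause: reverse-worded items that were not recoded.
        return "negative: check item coding"
    if alpha >= 0.9:
        return "excellent"
    if alpha >= 0.8:
        return "good"
    if alpha >= 0.7:
        return "acceptable"
    return "below the usual 0.7 threshold"

for value in (0.95, 0.84, 0.72, 0.55, -0.2):
    print(value, "->", interpret_alpha(value))
```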

What is the relationship between the number of items in a test and Cronbach's alpha?

-The number of items in a test influences Cronbach's alpha. Adding items tends to raise alpha even when the average correlation between items stays the same, so a higher value does not necessarily mean a better scale, particularly if the extra items redundantly restate the same question. Conversely, a short scale may yield a lower alpha; this does not necessarily indicate poor reliability, but it could suggest that more items are needed to measure the construct effectively.
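
For standardized items, alpha depends only on the number of items $N$ and the average inter-item correlation $\bar{r}$: $\alpha = N\bar{r}/(1 + (N-1)\bar{r})$. The sketch below holds a hypothetical $\bar{r} = 0.3$ fixed and varies only $N$, so any rise in alpha comes purely from adding items.

```python
def alpha_from_mean_r(n_items, r_bar):
    """Standardized alpha for n_items whose average inter-item correlation is r_bar."""
    return n_items * r_bar / (1 + (n_items - 1) * r_bar)

# Same hypothetical average inter-item correlation, more and more items.
for n in (3, 5, 10, 20, 40):
    print(f"{n:>2} items, r_bar = 0.3 -> alpha = {alpha_from_mean_r(n, 0.3):.3f}")
```

With these inputs alpha climbs from about 0.56 with 3 items to about 0.94 with 40 items, even though the items are no more strongly related to each other.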

How can one ensure that a test is unidimensional before using Cronbach's alpha?

-To ensure unidimensionality, one can conduct a factor analysis to identify the underlying dimensions in the test. If more than one dimension is found, the test can be split into parts, each measuring a different latent variable or dimension. This helps maintain the assumption of unidimensionality required for accurate Cronbach's alpha interpretation.
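
Factor analysis itself is usually run in a statistics package. As a rough stand-in, the sketch below applies a common quick screen: compute the eigenvalues of the item correlation matrix and count how many exceed 1 (the Kaiser criterion). This is a principal-components heuristic, not a full factor analysis, and both correlation matrices are hypothetical. The eigenvalues come from a small hand-written Jacobi rotation routine so the example needs only the standard library.

```python
import math

def jacobi_eigenvalues(matrix, tol=1e-12, max_sweeps=100):
    """Eigenvalues of a symmetric matrix via cyclic Jacobi rotations (largest first)."""
    a = [row[:] for row in matrix]  # work on a copy
    n = len(a)
    for _ in range(max_sweeps):
        off_diag = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off_diag < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if abs(a[p][q]) < 1e-15:
                    continue
                # Rotation angle chosen so that the new a[p][q] is zero.
                theta = (a[q][q] - a[p][p]) / (2 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1))
                c = 1 / math.sqrt(t * t + 1)
                s = t * c
                for k in range(n):  # A <- A J (columns p and q)
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):  # A <- J^T A (rows p and q)
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
    return sorted((a[i][i] for i in range(n)), reverse=True)

def two_factor_corr(k, within, between):
    """Hypothetical 2k x 2k correlation matrix: two clusters of k items each."""
    n = 2 * k
    return [[1.0 if i == j else (within if (i < k) == (j < k) else between)
             for j in range(n)] for i in range(n)]

one_dim = [[1.0 if i == j else 0.5 for j in range(6)] for i in range(6)]
two_dim = two_factor_corr(3, within=0.6, between=0.0)

one_dim_eigs = jacobi_eigenvalues(one_dim)
two_dim_eigs = jacobi_eigenvalues(two_dim)
for name, eigs in (("one cluster", one_dim_eigs), ("two clusters", two_dim_eigs)):
    print(f"{name}: {[round(e, 2) for e in eigs]}, eigenvalues > 1: {sum(e > 1 for e in eigs)}")
```

With one cluster, a single eigenvalue exceeds 1; with two unrelated clusters, two do, which would suggest splitting the test into two parts and computing alpha for each.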

Outlines

📊 Introduction to Cronbach's Alpha and Reliability

The first paragraph introduces the topic of Cronbach's Alpha, a measure of internal consistency used to assess the reliability of a set of test items or scales. It explains the concept of reliability as a key factor in evaluating the quality of research, which is about the consistency of measurement methods. The paragraph also distinguishes between reliability and validity, two crucial concepts in research quality assessment. It further explains different types of reliability, including test-retest, inter-rater, and internal consistency, and touches upon the limitations associated with test-retest reliability.

🔍 Limitations of Inter-Rater Reliability and Methods for Internal Consistency

The second paragraph examines the disadvantages of inter-rater reliability, which stem from subjective criteria that can affect observers' judgments. It then turns to internal consistency, emphasizing that different parts of a test designed to measure the same construct should yield consistent results. The paragraph outlines three methods for measuring internal consistency: split-half correlation, Kuder-Richardson, and Cronbach's Alpha. It highlights Cronbach's Alpha as the most objective of these because it averages over all possible split-half coefficients, so the result does not depend on a subjective choice of how to split the test.
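
The link between split-half reliability and Cronbach's Alpha can be checked numerically. For one split of the items into halves A and B, the Flanagan-Rulon split-half coefficient is $2\left(1 - \frac{\sigma_A^2 + \sigma_B^2}{\sigma_X^2}\right)$, where $\sigma_X^2$ is the variance of the total score; averaging it over every split into two equal halves reproduces alpha exactly. A Spearman-Brown-corrected correlation between halves matches this coefficient only when the two halves have equal variances. A minimal Python sketch with hypothetical Likert responses:

```python
from itertools import combinations

def sample_var(x):
    """Sample variance (n - 1 denominator)."""
    n = len(x)
    m = sum(x) / n
    return sum((v - m) ** 2 for v in x) / (n - 1)

def cronbach_alpha(items):
    """items: one list of respondent scores per item."""
    k = len(items)
    totals = [sum(person) for person in zip(*items)]
    return k / (k - 1) * (1 - sum(sample_var(col) for col in items) / sample_var(totals))

def flanagan_rulon(items, half):
    """Split-half coefficient for one split; `half` holds the item indices in half A."""
    people = list(zip(*items))
    a_scores = [sum(p[i] for i in half) for p in people]
    b_scores = [sum(p[i] for i in range(len(items)) if i not in half) for p in people]
    totals = [a + b for a, b in zip(a_scores, b_scores)]
    return 2 * (1 - (sample_var(a_scores) + sample_var(b_scores)) / sample_var(totals))

def mean_split_half(items):
    """Average Flanagan-Rulon coefficient over every split into two equal halves."""
    k = len(items)
    assert k % 2 == 0, "equal halves need an even number of items"
    # Each split appears twice (A/B and B/A); by symmetry the average is unchanged.
    halves = [set(h) for h in combinations(range(k), k // 2)]
    return sum(flanagan_rulon(items, h) for h in halves) / len(halves)

# Hypothetical responses: six items (rows) answered by six people (columns).
items = [
    [4, 5, 3, 2, 4, 3],
    [4, 4, 3, 2, 5, 3],
    [5, 5, 2, 1, 4, 2],
    [3, 4, 3, 2, 4, 4],
    [4, 5, 2, 2, 3, 3],
    [3, 4, 3, 1, 4, 2],
]

print(f"one split (items 1-3 vs 4-6): {flanagan_rulon(items, {0, 1, 2}):.3f}")
print(f"mean over all splits:         {mean_split_half(items):.3f}")
print(f"Cronbach's alpha:             {cronbach_alpha(items):.3f}")
```

Any single split gives its own value, which is why choosing one split is somewhat arbitrary; the average over all splits equals alpha.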

📐 Understanding and Applying Cronbach's Alpha Formula

The third paragraph explains Cronbach's Alpha in detail, including its formula and interpretation. It discusses the assumption of unidimensionality, meaning that the test items should measure only one latent variable. The paragraph also offers a rule of thumb for interpreting Cronbach's Alpha values: values above 0.7 are acceptable, while values of 0.9 and above are considered excellent. It cautions that Alpha tends to rise with the number of items in a test, so a value should not be interpreted without considering test length, and it stresses that understanding internal consistency and unidimensionality is key to using Cronbach's Alpha reliably.

Keywords

💡Cronbach's Alpha

💡Reliability

💡Validity

💡Test-Retest Reliability

💡Inter-Rater Reliability

💡Internal Consistency

💡Split-Half Reliability

💡Likert Scale

💡Latent Variable

💡Unidimensionality

💡Coefficient Alpha Interpretation

Highlights

Cronbach's alpha, also known as coefficient alpha, is widely used to measure the reliability of a set of scales or test items.

Reliability is a key factor in evaluating the quality of research, indicating how consistently a method measures a specific variable.

Reliability is about consistency, meaning that the same results should be obtained under the same circumstances using the same method.

Cronbach's alpha is used when a questionnaire contains multiple questions measuring the same construct, such as in Likert scale surveys, to check the questionnaire's reliability.

The concept of validity is closely related to reliability but focuses on accuracy rather than consistency.

A measurement can be reliable without being valid, as demonstrated by the example of a thermometer that consistently gives incorrect readings.

There are three types of reliability: test-retest, inter-rater, and internal consistency.

Test-retest reliability involves repeating measurements over time to check for consistency.

Inter-rater reliability assesses whether different observers or raters give the same results when measuring the same subject.

Internal consistency checks if different parts of a test designed to measure the same thing yield the same results.

Cronbach's alpha equals the mean of all possible split-half coefficients for a set of items, so it removes the subjective choice of how to split the test.

The formula for Cronbach's alpha involves the average covariance between item pairs and the average variance.

A Cronbach's alpha value above 0.7 is generally acceptable, with values above 0.9 indicating excellent reliability.

The number of items in a test can influence the alpha value, with larger numbers potentially leading to higher alphas.

Cronbach's alpha assumes unidimensionality, meaning that the items measure a single underlying construct.

Factor analysis can be used to determine if a test is unidimensional and to identify different dimensions in the test.

Cronbach's alpha is sensitive to the number of items, so a high alpha does not necessarily indicate a good scale.

A low alpha value does not necessarily mean the scale should be rejected; it could indicate a need for more items or a check for unidimensionality.

The video provides a comprehensive guide on understanding and interpreting Cronbach's alpha for assessing the reliability of research instruments.
