Lecture 5: Law of Large Numbers & Central Limit Theorem

TLDR

This lecture covers the Law of Large Numbers and the Central Limit Theorem, key results in probability theory about repeated experiments and random sampling. It explains the importance of independent and identically distributed (iid) random variables and shows how they are used to estimate population parameters from samples. The Strong Law of Large Numbers shows that as the sample size increases, the sample average converges to the population average with probability 1. The Central Limit Theorem goes further: as the sample size grows, the distribution of the standardized sample average approaches a normal distribution, highlighting the link between discrete and continuous random variables.

Takeaways

- 🔍 The script introduces the Law of Large Numbers and Central Limit Theorem, which are fundamental concepts in probability theory and statistics.

- 📊 The Law of Large Numbers, particularly the strong version, states that as the size of a random sample increases, the sample average converges to the population average with probability 1.

- 🎲 The concept of random sampling is crucial for understanding the Law of Large Numbers, as it allows for estimation of population parameters without examining the entire population.

- 👉 The script explains the notion of iid (independent and identically distributed) random variables, which are essential for parametric estimation in statistics.

- 📚 IID variables all share the same distribution and are mutually independent; this is a key assumption in many statistical methods.

- 🎯 The strong law of large numbers is linked to the idea of repeated random experiments, where the outcome of each experiment is random but follows a specific distribution.

- 📉 The script discusses the use of Chebyshev's inequality and the Borel-Cantelli lemma in proving the strong law of large numbers, indicating the mathematical depth of the theorem.

- 🃏 Bernoulli variables, which take the value 1 (success) or 0 (failure), are introduced as a specific type of random variable that is fundamental to understanding both theorems.

- 📈 The de Moivre-Laplace theorem is presented as a precursor to the Central Limit Theorem; it describes the limiting behavior of the sum of iid Bernoulli variables.

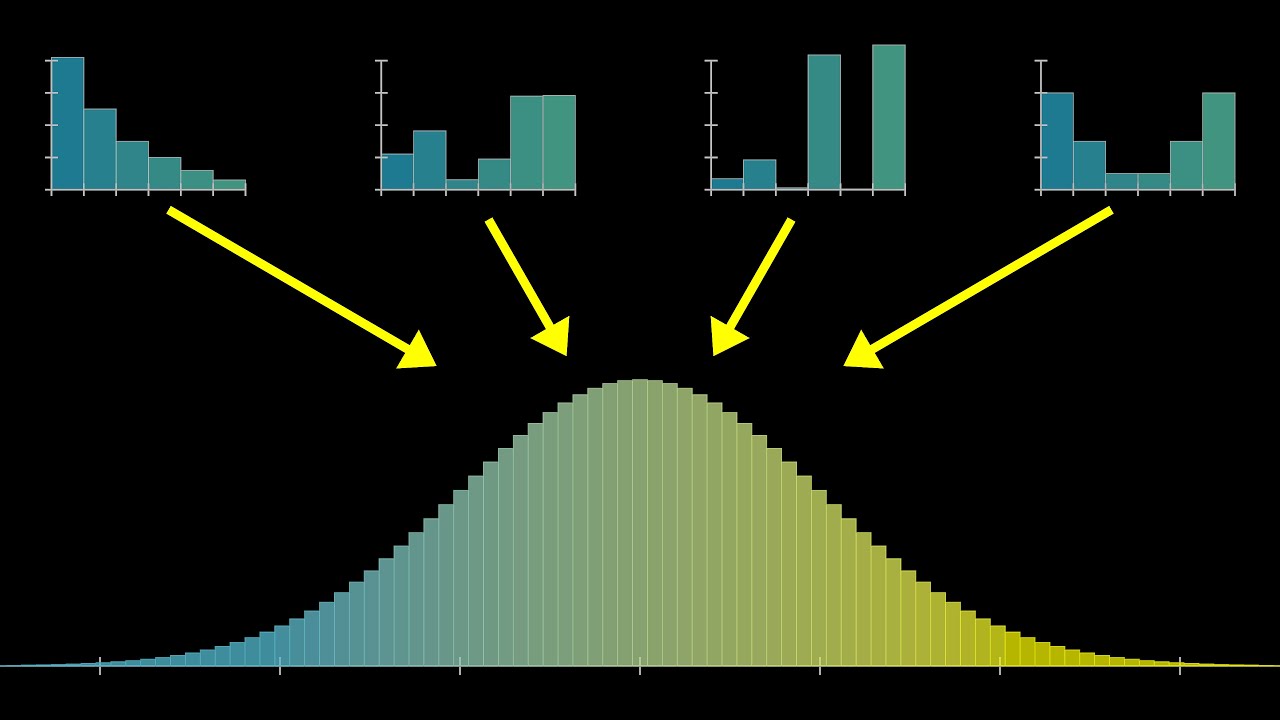

- 📊 The Central Limit Theorem (CLT) generalizes beyond Bernoulli variables: for a large number of iid random variables with finite variance, the sum (after subtracting its mean and dividing by its standard deviation) is approximately standard normal, regardless of the variables' original distribution.

- 📚 The script emphasizes the importance of understanding the theoretical underpinnings of statistical methods, such as the Law of Large Numbers and CLT, for effective application in real-world scenarios.
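For reference, here is one standard formal statement of the Central Limit Theorem. The notation (μ, σ², S_n, Φ) is introduced here for illustration rather than taken from the lecture; note that the finite, nonzero variance is the hypothesis that "regardless of their original distribution" relies on.

```latex
\begin{aligned}
&X_1, X_2, \dots \text{ iid}, \quad E[X_1] = \mu, \quad 0 < \operatorname{Var}(X_1) = \sigma^2 < \infty, \quad S_n = X_1 + \cdots + X_n \\
&\Longrightarrow\quad \lim_{n \to \infty} P\!\left( \frac{S_n - n\mu}{\sigma\sqrt{n}} \le z \right) = \Phi(z) = \int_{-\infty}^{z} \frac{1}{\sqrt{2\pi}}\, e^{-t^2/2}\, dt \quad \text{for every } z \in \mathbb{R}.
\end{aligned}
```

The de Moivre-Laplace theorem is the special case X_i ~ Bernoulli(p), where μ = p and σ² = p(1 − p).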

Q & A

What are the two key theorems discussed in the script related to probability theory?

-The two key theorems discussed in the script are the Law of Large Numbers and the Central Limit Theorem.

What is the concept of a random sample in the context of studying a population?

-A random sample is a subset of individuals from a larger population that is used to make inferences about the entire population without studying every single member.

What does the acronym 'iid' stand for in the context of random variables?

-In the context of random variables, 'iid' stands for independent and identically distributed.

Why are iid random variables important in parametric estimation in statistics?

-IID random variables are important in parametric estimation because many statistical methods and formulas assume them: independence lets the joint distribution of the sample factor into a product of the individual distributions, and identical distribution means every observation carries information about the same unknown parameters.

What is the Strong Law of Large Numbers and what does it imply?

-The Strong Law of Large Numbers states that as the size of a random sample increases, the sample average tends towards the population average with probability 1, meaning it is almost certain to converge on the true population mean as the sample size grows.

What are Bernoulli variables and how do they relate to success and failure outcomes?

-Bernoulli variables are random variables that take the value 1 (representing success) or 0 (representing failure). They are used to model events with two possible outcomes, such as a coin toss.

Outlines

🔍 Introduction to Probability Theory Concepts

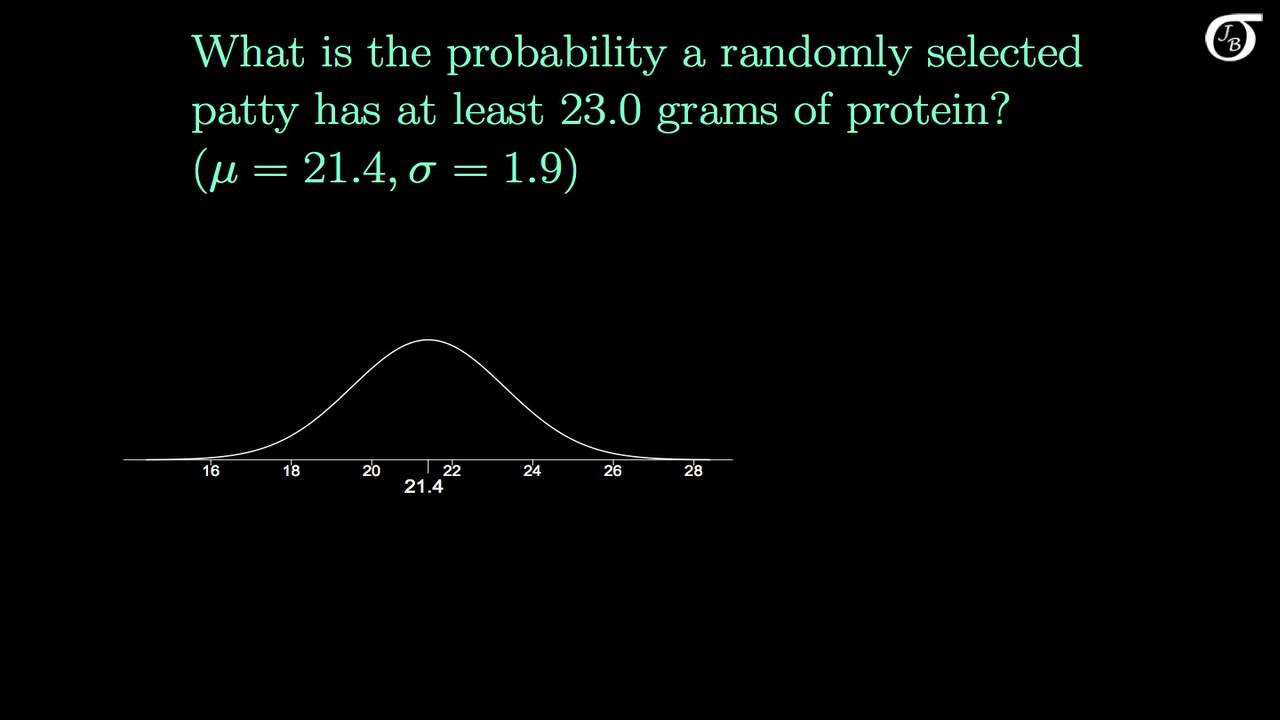

The script introduces the Law of Large Numbers and the Central Limit Theorem, which are fundamental to probability theory. It explains the importance of random sampling in statistical analysis, using the example of measuring the average height of the male residents of a city. Drawing a random sample is likened to conducting repeated experiments, which emphasizes the role of independent and identically distributed (iid) random variables in parametric estimation.
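As a reference point, the iid assumption behind a random sample can be written as below. The symbols F (the common distribution function), θ (an unknown parameter) and f_θ (the corresponding density or mass function) are notation introduced here for illustration, not taken from the lecture.

```latex
\begin{aligned}
&X_1, \dots, X_n \text{ iid with distribution } F
  \iff P(X_1 \le x_1, \dots, X_n \le x_n) = \prod_{i=1}^{n} F(x_i)
  \quad \text{for all } x_1, \dots, x_n \in \mathbb{R}, \\
&\text{so if } F = F_\theta \text{ has density (or mass function) } f_\theta:\quad
  L(\theta) = \prod_{i=1}^{n} f_\theta(x_i), \qquad
  \log L(\theta) = \sum_{i=1}^{n} \log f_\theta(x_i).
\end{aligned}
```

Because the likelihood becomes a product (a sum on the log scale), estimators such as maximum likelihood reduce to optimizing a sum of per-observation terms, which is one concrete reason the iid assumption is so convenient in parametric estimation.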

📏 Understanding Random Sampling and the Strong Law

This paragraph delves into the specifics of random sampling, illustrating how it can be used to estimate the average height of a population without measuring every individual. It introduces the strong law of large numbers, which states that as the size of a random sample increases, the sample average converges to the population average with probability 1. The explanation includes a technical discussion of iid random variables and their role in defining the strong law.
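The height example can be simulated to watch the strong law along a single sample path. This is a minimal sketch under loudly stated assumptions: the "city" is a hypothetical population of 100,000 heights drawn from a normal distribution with mean 175 cm and standard deviation 7 cm (invented values), and sampling with replacement is used so that the draws are iid.

```python
import random
import statistics

# Hypothetical city: 100,000 male heights in cm, drawn from a normal
# distribution with mean 175 and standard deviation 7 (illustrative values only).
rng = random.Random(2024)
population = [rng.gauss(175.0, 7.0) for _ in range(100_000)]
population_mean = statistics.fmean(population)

# Random sampling with replacement yields iid draws X_1, X_2, ... from the population.
draws = rng.choices(population, k=100_000)

# Follow one sample path: the running average (X_1 + ... + X_n) / n.
running_total = 0.0
for n, height in enumerate(draws, start=1):
    running_total += height
    if n in (10, 100, 1_000, 10_000, 100_000):
        average = running_total / n
        print(f"n={n:>7}: sample average = {average:.3f} cm, "
              f"population average = {population_mean:.3f} cm")

final_average = running_total / len(draws)
```

In a typical run the gap shrinks roughly like 7/√n, although along a single path it need not decrease at every checkpoint; the strong law only guarantees eventual convergence with probability 1.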

🎲 Bernoulli Trials and the de Moivre-Laplace Theorem

The script discusses Bernoulli variables, which represent binary outcomes such as heads or tails in a coin toss, and explains how they model success and failure in experiments. The de Moivre-Laplace theorem is introduced as a precursor to the Central Limit Theorem: it concerns the sum of iid Bernoulli random variables and shows that, after standardization (subtracting np and dividing by √(np(1 − p))), this sum approaches a standard normal distribution as the number of trials increases.
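To see the de Moivre-Laplace theorem numerically, the sketch below compares the exact binomial probability P(Z_n ≤ z), with Z_n = (S_n − np)/√(np(1 − p)), against the standard normal value Φ(z). The helper names are hypothetical; only Python's standard `math` module is used, and n is kept at or below 1000 so that the binomial coefficients still fit in a float.

```python
import math


def binomial_cdf(k: int, n: int, p: float) -> float:
    """Exact P(S_n <= k) for S_n ~ Binomial(n, p), i.e. a sum of n iid Bernoulli(p)."""
    return sum(math.comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(k + 1))


def standard_normal_cdf(z: float) -> float:
    """Phi(z), written via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def compare(n: int, p: float, z: float) -> tuple[float, float]:
    """Return (exact P(Z_n <= z), Phi(z)) where Z_n = (S_n - n p) / sqrt(n p (1 - p))."""
    mean, sd = n * p, math.sqrt(n * p * (1 - p))
    k = math.floor(mean + z * sd)  # largest integer k with (k - mean) / sd <= z
    return binomial_cdf(k, n, p), standard_normal_cdf(z)


for n in (10, 100, 1_000):
    exact, approx = compare(n, p=0.5, z=1.0)
    print(f"n={n:>5}: P(Z_n <= 1) = {exact:.4f}   Phi(1) = {approx:.4f}")
```

Because S_n is integer-valued, the gap does not necessarily shrink monotonically in n (lattice effects), but it tends to zero as n grows, which is what the theorem asserts.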

📉 Binomial Variables and Their Properties

The paragraph explains binomial random variables, which count the number of successes in a fixed number n of independent trials, each with success probability p. It relates these variables to the binomial distribution and highlights their mean, np, and variance, np(1 − p), which are key to understanding their behavior in large samples.
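The mean np and variance np(1 − p) can be checked directly from the probability mass function. In this sketch n = 20 and p = 0.3 are arbitrary illustrative values and the helper names are hypothetical. Setting n = 1 recovers the Bernoulli case, with mean p and variance p(1 − p); the binomial variance is n times this because the variance of a sum of independent variables is the sum of their variances.

```python
import math


def binomial_pmf(n: int, p: float) -> list[float]:
    """P(S_n = k) for k = 0..n, where S_n counts successes in n iid Bernoulli(p) trials."""
    return [math.comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]


def mean_and_variance(pmf: list[float]) -> tuple[float, float]:
    """Mean and variance of a distribution on {0, 1, ..., len(pmf) - 1}."""
    mean = sum(k * q for k, q in enumerate(pmf))
    second_moment = sum(k * k * q for k, q in enumerate(pmf))
    return mean, second_moment - mean**2


n, p = 20, 0.3
mean, var = mean_and_variance(binomial_pmf(n, p))
print(f"mean     = {mean:.6f}  (n*p       = {n * p:.6f})")
print(f"variance = {var:.6f}  (n*p*(1-p) = {n * p * (1 - p):.6f})")
```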

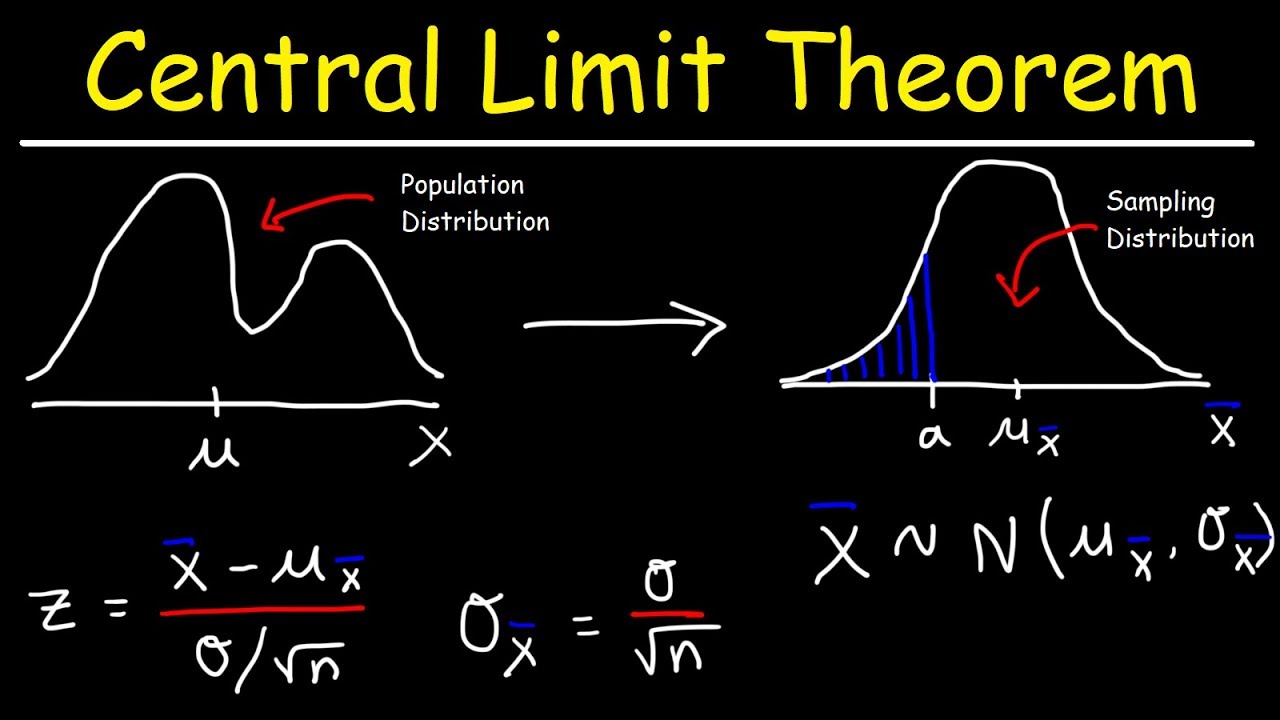

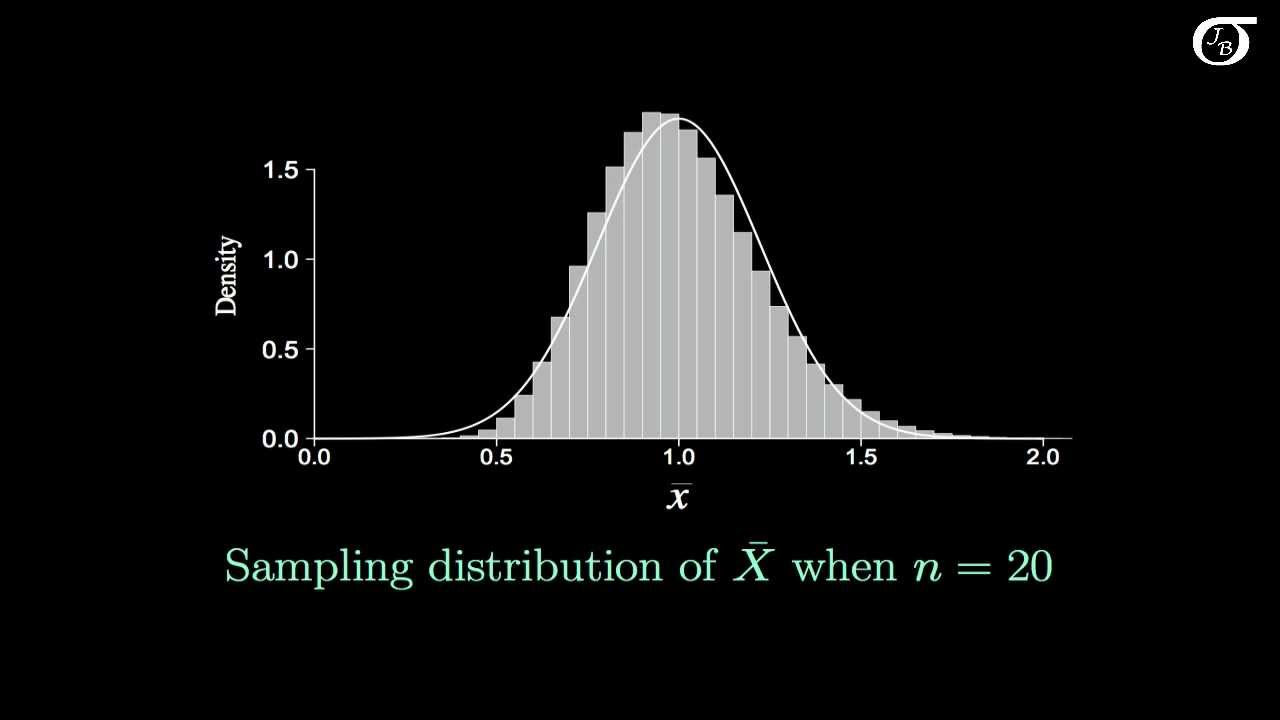

📊 Transition from Discrete to Continuous: The Central Limit Theorem

This section builds on the previous discussion to introduce the Central Limit Theorem (CLT), a fundamental theorem in statistics. The CLT states that the sum of a large number of iid random variables with finite variance, regardless of their original distribution, is approximately normally distributed; more precisely, the standardized sum converges in distribution to the standard normal. The explanation highlights the transition from discrete binomial variables to the continuous standard normal distribution.
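A small simulation can illustrate the "regardless of their original distribution" part. This sketch assumes Uniform(0, 1) summands (mean 1/2, variance 1/12), chosen only because they are clearly not normal, and measures how often the standardized sum lands in [−1.96, 1.96], which should be about 0.95 once the normal approximation is good. The function names, trial count, and seed are illustrative choices.

```python
import math
import random


def standardized_sum(n: int, rng: random.Random) -> float:
    """(S_n - n*mu) / (sigma*sqrt(n)) for S_n a sum of n iid Uniform(0, 1) variables."""
    mu, sigma = 0.5, math.sqrt(1.0 / 12.0)  # mean and standard deviation of Uniform(0, 1)
    s = sum(rng.random() for _ in range(n))
    return (s - n * mu) / (sigma * math.sqrt(n))


def coverage(n: int, trials: int = 20_000, seed: int = 0) -> float:
    """Fraction of standardized sums in [-1.96, 1.96]; about 0.95 if the CLT approximation is good."""
    rng = random.Random(seed)
    hits = sum(abs(standardized_sum(n, rng)) <= 1.96 for _ in range(trials))
    return hits / trials


for n in (1, 2, 30):
    print(f"n={n:>3}: fraction in [-1.96, 1.96] = {coverage(n):.3f}")
```

With n = 1 every standardized value lies in [−√3, √3], so the fraction is exactly 1, far from the normal 0.95; by n = 30 it is close to 0.95.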


Keywords

💡Law of Large Numbers

💡Central Limit Theorem (CLT)

💡Random Sample

💡IID (Independent and Identically Distributed)

💡Bernoulli Variables

💡Binomial Random Variable

💡Chebyshev's Inequality

💡Borel-Cantelli Lemma

💡De Moivre-Laplace Theorem

💡Standard Normal Distribution

Highlights

Introduction to the Law of Large Numbers and Central Limit Theorem as fundamental concepts in probability theory.

Explanation of random sampling as a method to infer population characteristics without examining the entire population.

The concept of repeated experiments in the context of random sampling.

Definition and importance of iid (independent and identically distributed) random variables in statistics.

The role of iid variables in parametric estimation within statistical analysis.

The notion of sample paths and numerical observations in the context of random variables.

The practical application of random sampling in estimating the average height of a city's male population.

The Strong Law of Large Numbers and its implication for sample averages converging to the population average.

The formal definition of the Strong Law of Large Numbers involving a sequence of iid random variables.

The use of Chebyshev's inequality and the Borel-Cantelli lemma in proving the Strong Law of Large Numbers.

Introduction to Bernoulli variables as a type of random variable representing success or failure.

The properties of Bernoulli variables and their representation of binary outcomes.

The explanation of binomial random variables as a sum of Bernoulli trials and their relevance to the binomial distribution.

The de Moivre-Laplace theorem as a precursor to the Central Limit Theorem, specifically for Bernoulli random variables.

The Central Limit Theorem and its importance in statistical inference.
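The highlights mention Chebyshev's inequality and the Borel-Cantelli lemma. One standard textbook route is sketched below under the extra assumption E[X_1^4] < ∞; the lecture's own proof may use weaker assumptions or a different argument. It applies a Chebyshev-type (Markov) bound to the fourth power of the centered sum and then Borel-Cantelli. Here μ = E[X_1], σ² = Var(X_1), Y_i = X_i − μ (so E[Y_i] = 0) and T_n = Y_1 + ⋯ + Y_n are notation introduced for this sketch.

```latex
\begin{aligned}
E[T_n^4] &= n\,E[Y_1^4] + 3n(n-1)\,\sigma^4 \le C n^2,
  \qquad C = E[Y_1^4] + 3\sigma^4, \\
P\!\left(\left|\tfrac{T_n}{n}\right| \ge \varepsilon\right)
  &= P\!\left(T_n^4 \ge n^4 \varepsilon^4\right)
  \le \frac{E[T_n^4]}{n^4 \varepsilon^4}
  \le \frac{C}{\varepsilon^4 n^2}, \\
\sum_{n=1}^{\infty} \frac{C}{\varepsilon^4 n^2} < \infty
  &\;\Longrightarrow\; P\!\left(\left|\tfrac{T_n}{n}\right| \ge \varepsilon \text{ infinitely often}\right) = 0
  \quad \text{(Borel-Cantelli)}.
\end{aligned}
```

The first line uses independence and E[Y_i] = 0: every term of the expansion containing some Y_i to the first power has zero expectation, leaving n fourth-power terms and 3n(n − 1) terms of the form Y_i²Y_j². Taking ε = 1/k for k = 1, 2, … and combining these countably many null events gives T_n/n → 0, that is, (X_1 + ⋯ + X_n)/n → μ with probability 1.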
