Tensor Calculus 4e: Decomposition by Dot Product in Tensor Notation

TLDR

This script delves into the concept of vector decomposition in linear algebra, particularly with respect to an orthogonal basis. It illustrates the method of determining coefficients by evaluating inner products, simplifying the process in the case of an orthonormal basis. The script further explores the application of these principles in tensor notation, demonstrating how to find contravariant components of a vector by dotting with a contravariant basis element. The elegance of this approach is highlighted, emphasizing the power and beauty of mathematical notation in simplifying complex calculations.

Takeaways

- 📚 The script discusses a method in linear algebra for decomposing a vector with respect to an orthogonal basis using inner products.

- 🔍 It introduces the concept of using the inner product to find coefficients (alpha 1, alpha 2, alpha 3) for a vector V represented as a linear combination of basis vectors (e1, e2, e3).

- 📐 The inner product's symmetry is highlighted: the order of the two vectors in a dot product does not matter, so either side of the decomposition can be dotted with a basis vector.

- 🎯 By dotting both sides of the decomposition with e1, the script simplifies the expression to isolate alpha 1, using the orthogonality of the basis vectors to eliminate the other terms.

- 🌐 The script explores the simplification of the formula when dealing with an orthonormal basis, where the vectors are orthogonal and of unit length.

- 🔢 It explains that for an orthonormal basis, the coefficients can be directly obtained by dotting the vector with the corresponding basis vector.

- 🤔 The script also considers the scenario where the basis is not orthogonal, leading to a system of equations that must be solved to find the coefficients.

- 📉 The effectiveness of the decomposition method is somewhat reduced when the basis is not orthogonal, but the method still applies with additional complexity.

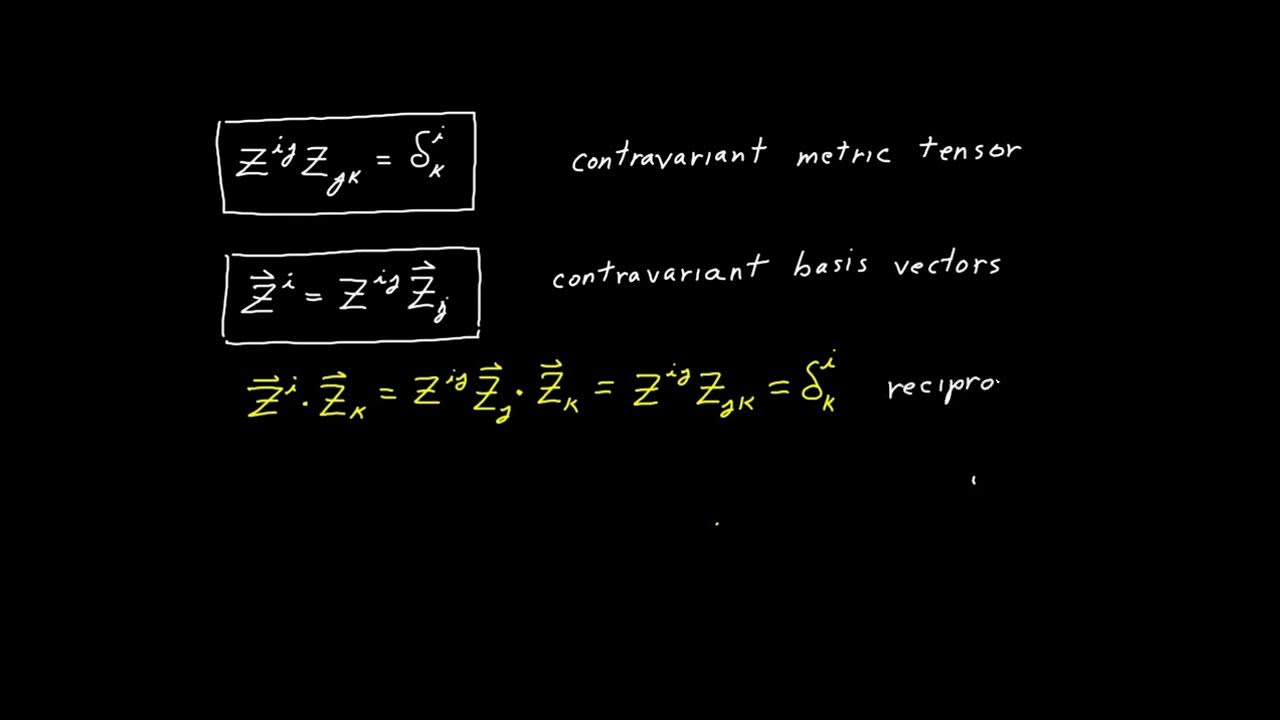

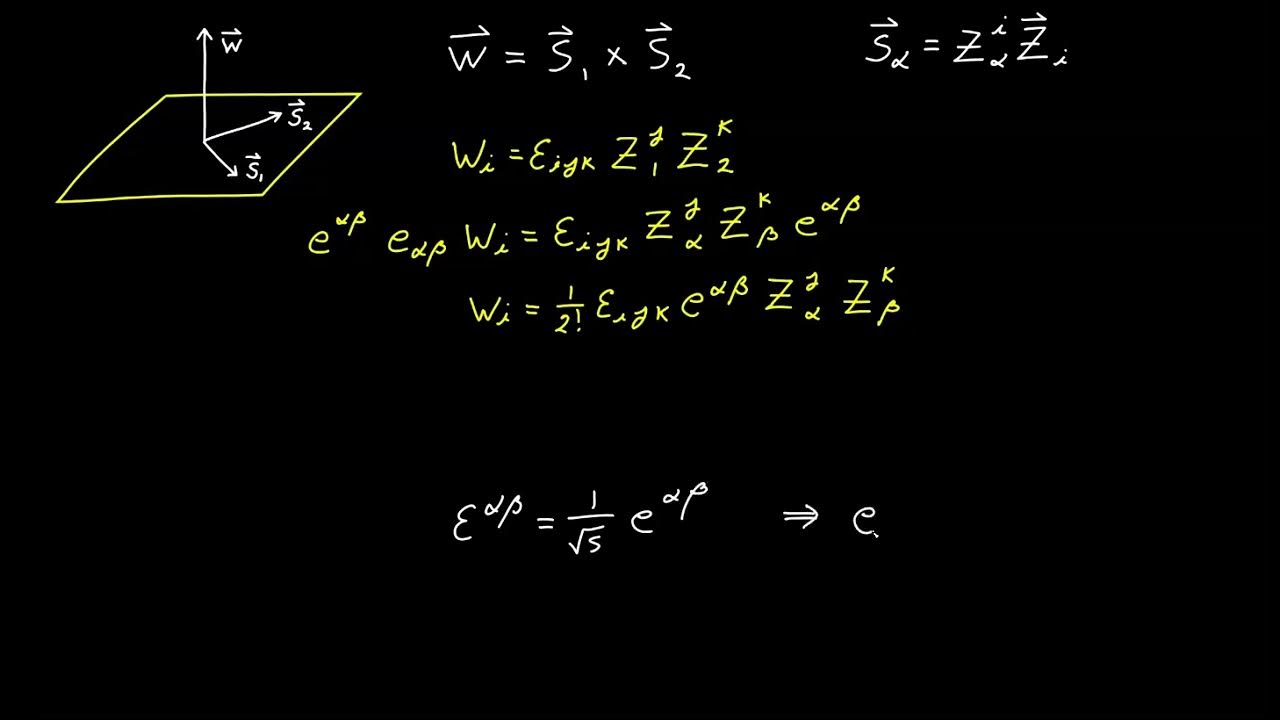

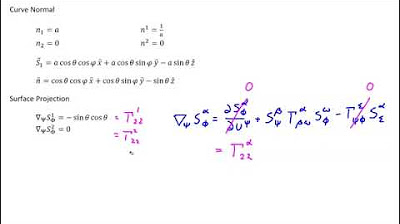

- 📚 The script transitions to tensor terms, showing how the decomposition method can be applied in a geometric space with covariant and contravariant bases.

- 📏 It demonstrates that the contravariant components of a vector can be found by dotting with a corresponding contravariant basis element, even if the basis is not orthogonal.

- 🧩 The script emphasizes the elegance and simplicity of the tensor notation, which encapsulates the same calculations as in linear algebra but in a more compact form.

- 🔄 The appearance of the metric tensor in tensor notation is discussed, showing that the inversion of this tensor is already built into the contravariant basis, as signaled by the raised index in the tensor expression.

Q & A

What is the basic concept of decomposing a vector with respect to an orthogonal basis?

-The basic concept involves expressing a vector V as a linear combination of basis vectors e1, e2, e3, with coefficients alpha1, alpha2, alpha3, respectively. This is done by evaluating the inner product of the vector with each basis vector to determine the coefficients.
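
The step from the linear combination to a coefficient formula can be written out explicitly. The sketch below uses the symbols named in the answer above and relies only on the orthogonality assumption, e_i dot e_j = 0 for i ≠ j:

```latex
\begin{aligned}
V &= \alpha_1 e_1 + \alpha_2 e_2 + \alpha_3 e_3 \\
V \cdot e_1 &= \alpha_1 (e_1 \cdot e_1) + \alpha_2 (e_2 \cdot e_1) + \alpha_3 (e_3 \cdot e_1)
             = \alpha_1 (e_1 \cdot e_1) \\
\alpha_1 &= \frac{V \cdot e_1}{e_1 \cdot e_1}
\end{aligned}
```

The same argument with e2 and e3 gives alpha2 and alpha3.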

Why is the inner product symmetric when decomposing a vector?

-The inner product is symmetric because the order of the two vectors does not affect the result: V dot e1 equals e1 dot V. This lets either side of the decomposition be dotted with a basis vector without worrying about order. The vanishing of the cross terms is a separate property: it comes from the orthogonality of the basis, not from symmetry.

How does the decomposition formula simplify when the basis is orthonormal?

-In an orthonormal basis, the vectors are orthogonal and have unit length. This simplifies the formula for the coefficients, as the denominator of the original formula becomes 1, and the coefficients can be directly obtained by taking the dot product of the vector with the corresponding basis vector.
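
As a minimal numerical sketch of this rule, the Python below decomposes a vector with respect to a basis that is orthogonal but not normalized, so the division by the squared length is still needed. The helper names (`dot`, `decompose_orthogonal`) and the sample vectors are illustrative assumptions, not taken from the video.

```python
def dot(u, v):
    """Standard Euclidean dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def decompose_orthogonal(v, basis):
    """Coefficients of v in an ORTHOGONAL basis: alpha_i = (v . e_i) / (e_i . e_i).

    For an orthonormal basis every denominator equals 1, so the rule
    reduces to alpha_i = v . e_i.
    """
    return [dot(v, e) / dot(e, e) for e in basis]


# Illustrative basis: mutually orthogonal, but not unit length.
basis = [(1.0, 1.0, 0.0), (1.0, -1.0, 0.0), (0.0, 0.0, 2.0)]
v = (3.0, 1.0, 4.0)

alphas = decompose_orthogonal(v, basis)  # [2.0, 1.0, 2.0]

# Rebuild v from the coefficients as a sanity check.
rebuilt = tuple(sum(a * e[k] for a, e in zip(alphas, basis)) for k in range(3))
```

Here `rebuilt` equals `v`, which confirms that the coefficients were recovered correctly.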

What happens to the decomposition process if the basis is not orthogonal?

-If the basis is not orthogonal, the simplicity of the decomposition formula is lost. However, you can still find the coefficients by evaluating all possible inner products and solving a system of linear equations whose matrix is the matrix of pairwise inner products of the basis vectors (the metric tensor), which now has nonzero off-diagonal elements.
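
The system of equations can be sketched as follows. Dotting the decomposition with each basis vector gives one equation per vector, and the coefficient matrix is the matrix of pairwise inner products. The basis, the vector, and the small `solve` helper are assumptions chosen for illustration; exact fractions are used so the result has no rounding error.

```python
from fractions import Fraction


def dot(u, v):
    """Standard Euclidean dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gauss-Jordan elimination with exact fractions.

    Assumes the matrix is nonsingular, which holds for the matrix of
    pairwise inner products of any linearly independent basis.
    """
    n = len(rhs)
    aug = [[Fraction(x) for x in row] + [Fraction(b)] for row, b in zip(matrix, rhs)]
    for col in range(n):
        # Find a row with a nonzero entry in this column and move it up.
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [x / p for x in aug[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [row[n] for row in aug]


# Illustrative non-orthogonal basis and vector.
basis = [(1, 0, 0), (1, 1, 0), (1, 1, 1)]
v = (6, 5, 3)

# Matrix of pairwise inner products e_i . e_j and right-hand side v . e_i.
gram = [[dot(ei, ej) for ej in basis] for ei in basis]
rhs = [dot(v, ei) for ei in basis]

alphas = solve(gram, rhs)  # equals [1, 2, 3]
```

The matrix `gram` has nonzero off-diagonal entries here, which is exactly what prevents each coefficient from being read off with a single dot product.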

How does the decomposition process differ in geometric space with covariant and contravariant basis?

-In geometric space, the decomposition process involves dotting both sides of the vector equation with a contravariant basis element to find the contravariant components of the vector. This process leverages the Kronecker Delta property, which simplifies the expression to a direct dot product with the corresponding contravariant basis element.

What is the Kronecker Delta property mentioned in the script?

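-The Kronecker Delta is the symbol that equals 1 when its two indices match and 0 otherwise. The property used in the script is that the dot product of a contravariant basis vector with a covariant basis vector equals the Kronecker Delta: it is 1 when the two vectors carry the same index and 0 when the indices differ. This property simplifies the expression for finding the contravariant components of a vector.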
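
In index notation the argument takes one line. The sketch below assumes the covariant basis is written Z_i, the contravariant basis Z^i, and the contravariant components V^i, with summation over repeated indices; this summary does not name the symbols, so they are a notational assumption.

```latex
\begin{aligned}
Z^i \cdot Z_j &= \delta^i_j \\
V &= V^j Z_j \\
V \cdot Z^i &= V^j \, (Z_j \cdot Z^i) = V^j \, \delta^i_j = V^i
\end{aligned}
```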

Why is the metric tensor important in the decomposition process in tensor notation?

-The metric tensor is important because it encodes the inner products of the basis vectors: it is the matrix of pairwise inner products. In a non-orthonormal basis, finding the coefficients requires inverting this matrix, and the inverse is the contravariant metric tensor.

How does the placement of indices in tensor notation simplify the decomposition process?

-The placement of indices in tensor notation simplifies the decomposition process by implicitly inverting the metric tensor and performing the necessary multiplications. This results in a more compact and appealing expression for finding the contravariant components of a vector.
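
To make the hidden inversion concrete, the sketch below builds a contravariant basis from a covariant one by applying the inverse of the matrix of pairwise inner products, then recovers the components with plain dot products. The basis, the vector, and the helper names are illustrative assumptions rather than anything shown in the video.

```python
from fractions import Fraction


def dot(u, v):
    """Standard Euclidean dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def inverse(matrix):
    """Invert a nonsingular square matrix by Gauss-Jordan elimination."""
    n = len(matrix)
    aug = [
        [Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
        for i, row in enumerate(matrix)
    ]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [x / p for x in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


# Illustrative non-orthogonal covariant basis.
covariant = [(1, 0, 0), (1, 1, 0), (1, 1, 1)]

# Covariant metric: pairwise inner products of the covariant basis.
metric = [[dot(zi, zj) for zj in covariant] for zi in covariant]

# Contravariant metric: the matrix inverse of the covariant metric.
inv_metric = inverse(metric)

# Contravariant basis: each element is a combination of covariant
# elements weighted by a row of the inverse metric.
contravariant = [
    tuple(sum(inv_metric[i][j] * covariant[j][k] for j in range(3)) for k in range(3))
    for i in range(3)
]

v = (6, 5, 3)

# Contravariant components by a single dot product each.
components = [dot(v, zi) for zi in contravariant]  # equals [1, 2, 3]
```

The matrix inversion happens once, when the contravariant basis is constructed. After that, each component costs a single dot product, which is the compactness the raised index expresses.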

What is the significance of the rule for finding the contravariant components of a vector?

-The rule for finding the contravariant components of a vector is significant because it provides a powerful and compact method for determining these components by simply dotting the vector with the corresponding contravariant basis element, regardless of whether the basis is orthogonal or not.

Why is the method of decomposition by inner product effective even with a non-orthogonal basis?

-The method of decomposition by inner product is effective even with a non-orthogonal basis because it allows for the evaluation of all possible inner products, which can then be used to solve a system of linear equations. This process can still recover the coefficients alpha1, alpha2, and alpha3, despite the complexity introduced by the non-orthogonality of the basis.

Outlines

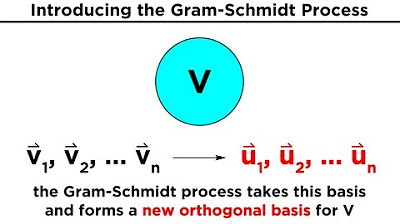

📚 Vector Decomposition in Linear Algebra

The first paragraph discusses the process of decomposing a vector with respect to an orthogonal basis in linear algebra. It explains how to determine the coefficients of a vector represented as a linear combination of basis vectors by evaluating inner products. The method simplifies when dealing with an orthonormal basis, where the coefficients can be directly obtained by dotting the vector with the corresponding basis vector. The paragraph also touches on the scenario where the basis is not orthogonal, requiring the solution of a system of linear equations involving the inner products of the basis vectors.

🔍 Tensor Notation and Vector Decomposition

The second paragraph extends the concept of vector decomposition to tensor notation, specifically in geometric spaces. It illustrates how to find the contravariant components of a vector by dotting with a contravariant basis element. The process is shown to be effective even with an arbitrary basis, not necessarily orthogonal, and highlights the simplicity and elegance of the tensor approach compared to the more complex matrix inversion required in linear algebra for non-orthogonal bases.

🌐 The Power of Tensor Notation in Simplifying Calculations

The third paragraph delves deeper into the advantages of tensor notation over traditional linear algebra methods, especially when dealing with non-orthogonal bases. It emphasizes the 'magic' of tensor notation that simplifies the process of finding vector components by directly dotting with a contravariant basis element, obviating the need for matrix inversion. The paragraph concludes by reinforcing the importance of understanding and applying this powerful and compact rule throughout one's academic and professional life in the field.

Keywords

💡Linear Algebra

💡Orthogonal Basis

💡Inner Product

💡Linear Combination

💡Coefficients

💡Orthonormal Basis

💡Tensor

💡Covariant and Contravariant Basis

💡Metric Tensor

💡Kronecker Delta

Highlights

Decomposing a vector with respect to an orthogonal basis by evaluating inner products.

Using the inner product to find coefficients in the linear combination of basis vectors.

Dotting both sides of the decomposition with E1 to isolate coefficients.

Orthogonality simplifies the inner product to zero, leaving only the relevant term.

Determining coefficients by the formula: alpha1 = (V dot E1) / (E1 dot E1).

Simplification of the formula for an orthonormal basis where denominators are one.

The rule for finding coefficients with respect to an orthonormal basis: dot the vector with the corresponding basis vector.

Adjusting the formula for bases that are orthogonal but not normalized by dividing by the length squared of the basis element.

Exploring the decomposition method's effectiveness with arbitrary, non-orthogonal bases.

Solving a system of linear equations to find coefficients in non-orthogonal bases.

The appearance of the metric tensor in tensor notation and its role in the decomposition.

The Kronecker Delta property simplifying the expression for contravariant components.

The rule for finding contravariant components by dotting with a contravariant basis element.

The method's applicability even when the basis is not orthogonal.

The elegance of tensor notation in expressing the decomposition rule.

Comparing the simplicity of tensor notation to the traditional linear algebra approach.

The importance of recognizing the metric tensor's role in the decomposition process.

The practicality of the decomposition rule for the rest of one's life in the field.
