Watching Neural Networks Learn

TLDR: This video explores how neural networks learn by approximating functions. It presents them as universal function approximators that can model the world through mathematical relationships between inputs and outputs. The presenter visualizes networks as they learn intricate shapes and patterns, and discusses their challenges and limitations. The video also introduces two alternative approximation methods, the Taylor series and the Fourier series, and weighs their strengths and weaknesses. It closes with a challenge to the audience: how precisely can the Mandelbrot set be approximated from only a random sample of its points?

Takeaways

- 🧠 Neural networks are universal function approximators: given enough neurons, they can approximate any continuous function (any continuous relationship between inputs and outputs) to arbitrary precision.

- 📐 Functions are fundamental to describing and understanding the world around us, from physics to mathematics to computer science.

- 🔄 Neural networks learn functions through backpropagation, which computes how the error between predicted and true outputs changes with each weight and bias; an optimizer such as gradient descent then adjusts those parameters to reduce the error.

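- 🌀 Neural networks can approximate complex, high-dimensional functions, but struggle with infinitely detailed fractals like the Mandelbrot set, because a network with a finite number of neurons, trained on a finite sample of points, can capture only a finite amount of detail.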

- 🌊 Incorporating Fourier features (sine and cosine terms) as additional inputs can significantly improve neural network performance, especially for low-dimensional problems.

- ⚠️ The curse of dimensionality poses challenges for certain function approximation methods, as the computational complexity grows exponentially with the number of input dimensions.

- 🧪 Experimentation and empirical evaluation are crucial in machine learning, as theoretical guarantees may not always translate to practical performance.

- 🔁 Different architectures, models, and methods excel at different tasks, and no single approach is universally optimal for all problems.

- 🎯 The Mandelbrot set approximation problem serves as an open challenge to explore novel techniques and potentially discover better solutions for function approximation.

- 🌐 Advances in function approximation can have far-reaching applications in various domains, as understanding and modeling relationships is fundamental to many real-world problems.
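
The learning loop described in these takeaways can be sketched with NumPy. This is a minimal illustration under stated assumptions, not the video's code: the target function (a sine wave), the single hidden layer of 32 tanh neurons, the learning rate, and the step count are all chosen here only so the example runs quickly. Backpropagation computes the gradients, and plain gradient descent applies them.

```python
import numpy as np

def train_mlp(steps=5000, hidden=32, lr=0.02, seed=0):
    """Fit y = sin(x) on [-pi, pi] with a 1 -> hidden -> 1 tanh network.

    Assumed setup for illustration only. Returns the mean squared error
    before and after training.
    """
    rng = np.random.default_rng(seed)
    x = np.linspace(-np.pi, np.pi, 200).reshape(-1, 1)   # inputs, shape (200, 1)
    y = np.sin(x)                                         # true outputs

    # Weights and biases of a fully connected network with one hidden layer.
    w1 = rng.normal(0.0, 1.0, (1, hidden))
    b1 = np.zeros(hidden)
    w2 = rng.normal(0.0, 1.0 / np.sqrt(hidden), (hidden, 1))
    b2 = np.zeros(1)

    def forward():
        h = np.tanh(x @ w1 + b1)        # hidden-layer activations
        return h, h @ w2 + b2           # linear output layer

    _, pred = forward()
    loss_before = float(np.mean((pred - y) ** 2))

    for _ in range(steps):
        h, pred = forward()
        # Backpropagation: apply the chain rule from the MSE loss to each parameter.
        d_pred = 2.0 * (pred - y) / len(x)
        d_w2 = h.T @ d_pred
        d_b2 = d_pred.sum(axis=0)
        d_z = (d_pred @ w2.T) * (1.0 - h ** 2)   # tanh'(z) = 1 - tanh(z)^2
        d_w1 = x.T @ d_z
        d_b1 = d_z.sum(axis=0)
        # Gradient descent: step each parameter against its gradient.
        w1 -= lr * d_w1
        b1 -= lr * d_b1
        w2 -= lr * d_w2
        b2 -= lr * d_b2

    _, pred = forward()
    return loss_before, float(np.mean((pred - y) ** 2))

before, after = train_mlp()
print(f"MSE before training: {before:.4f}, after: {after:.4f}")
```

Plain full-batch gradient descent keeps the update rule visible; practical training usually adds mini-batches and an adaptive optimizer.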

Q & A

What is the main goal of artificial intelligence discussed in the video?

-The main goal of artificial intelligence discussed in the video is to write programs that can understand, model, and predict the world around us, or, better, to have programs write themselves by building their own functions. This process is called function approximation.

What is a neural network, and what makes it a universal function approximator?

-A neural network is a function-building machine: it approximates a target function by adjusting its internal parameters (weights and biases) during training. As the number of neurons grows without bound, a neural network can provably approximate any continuous function arbitrarily well, which makes it a universal function approximator.
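
A standard constructive argument (a textbook illustration, not taken from the video) makes the universal-approximation claim concrete for one input: a single hidden layer of ReLU neurons, one per segment, reproduces the piecewise-linear curve through any set of sample points, and adding neurons shrinks the error. The target function `math.sin` on [0, 2π] and all helper names are hypothetical choices for this sketch.

```python
import math

def relu(z):
    return max(0.0, z)

def build_relu_net(xs, ys):
    """Hidden biases and output weights for a 1-hidden-layer ReLU network that
    passes exactly through (xs[k], ys[k]) for xs[0] <= x <= xs[-1].

    Neuron k computes relu(x - xs[k]); its output weight is the change in
    slope at that knot, so the slopes add up to the correct segment slope.
    """
    slopes = [(ys[k + 1] - ys[k]) / (xs[k + 1] - xs[k]) for k in range(len(xs) - 1)]
    weights = [slopes[0]] + [slopes[k] - slopes[k - 1] for k in range(1, len(slopes))]
    biases = [-x for x in xs[:-1]]          # neuron k "switches on" at xs[k]
    return ys[0], biases, weights

def net(x, y0, biases, weights):
    # Output = constant offset + weighted sum of hidden ReLU activations.
    return y0 + sum(w * relu(x + b) for w, b in zip(weights, biases))

def max_error(n_neurons, f=math.sin, lo=0.0, hi=2 * math.pi):
    # Assumed: evenly spaced knots, error measured on a 1001-point grid.
    xs = [lo + (hi - lo) * k / n_neurons for k in range(n_neurons + 1)]
    y0, biases, weights = build_relu_net(xs, [f(x) for x in xs])
    grid = [lo + (hi - lo) * k / 1000 for k in range(1001)]
    return max(abs(net(x, y0, biases, weights) - f(x)) for x in grid)

for n in (4, 16, 64):
    print(f"{n:>2} neurons: max error {max_error(n):.5f}")
```

Quadrupling the neurons shrinks the segment width by four, and for a smooth target the piecewise-linear error shrinks roughly sixteenfold.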

How do neural networks handle higher-dimensional problems compared to other function approximation methods?

-Neural networks handle higher-dimensional problems relatively well, because adding a dimension only means adding an input or output neuron. Other methods, like the Fourier series, suffer from the curse of dimensionality: the number of terms, and with it the computational cost, explodes as the dimensionality increases.
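
A rough way to see the difference is to count parameters. The sketch below assumes a first hidden layer of 100 neurons and a full tensor-product Fourier series truncated at order 4 per axis; both numbers are illustrative, and 784 corresponds to the 28x28 pixels of an MNIST image.

```python
import math

def mlp_first_layer_params(d, width=100):
    """Weights plus biases of a dense layer mapping d inputs to `width` neurons
    (width=100 is an assumed example size)."""
    return d * width + width

def fourier_terms(d, order):
    """Frequency vectors in a full d-dimensional Fourier series truncated at
    `order`: each axis independently takes a frequency in -order..order."""
    return (2 * order + 1) ** d

for d in (1, 2, 3, 10, 784):
    terms = fourier_terms(d, order=4)
    print(f"d={d:>3}: first layer {mlp_first_layer_params(d):>6} params, "
          f"Fourier series about 10^{math.log10(terms):.0f} terms")
```

The dense layer grows linearly with the input dimension, while the Fourier term count grows exponentially.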

What are Fourier features, and how do they improve function approximation?

-Fourier features are additional inputs computed from the terms of a Fourier series: sines and cosines of each input at several different frequencies. Feeding them into a neural network can significantly improve approximation quality, especially for low-dimensional problems.
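
A minimal sketch of computing Fourier features, assuming inputs already normalized to [-1, 1] and frequencies k·π for k = 1..order applied to each input dimension separately. Computing them per axis, rather than over all combinations of axes, is an assumption made here to keep the feature count linear in the number of dimensions; the video's exact scheme may differ.

```python
import numpy as np

def fourier_features(x, order):
    """Append sin(k*pi*x) and cos(k*pi*x), k = 1..order, to each input column.

    Assumed: x has shape (n_samples, n_dims) and is normalized to [-1, 1].
    Returns shape (n_samples, n_dims * (1 + 2 * order)): raw inputs first,
    then a sin block and a cos block for each frequency.
    """
    x = np.asarray(x, dtype=float)
    feats = [x]
    for k in range(1, order + 1):
        feats.append(np.sin(k * np.pi * x))
        feats.append(np.cos(k * np.pi * x))
    return np.concatenate(feats, axis=1)

coords = np.array([[0.0, 0.5], [-1.0, 1.0]])   # two (x, y) pixel coordinates
print(fourier_features(coords, order=3).shape)  # (2, 14)
```

The resulting matrix simply replaces the raw coordinates as the network's input; nothing else in the network changes.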

Why do Fourier features sometimes perform poorly for high-dimensional problems?

-For high-dimensional problems, Fourier features can lead to overfitting: the approximation fits the training data too closely and fails to generalize to the underlying function. A likely contributor is the large number of additional features computed for high-dimensional inputs.
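
The overfitting effect can be reproduced on a toy 1-D problem. This sketch swaps the neural network for linear least squares on Fourier features so that it runs instantly; the noisy sine data, the 20 training samples, and the orders 1, 3, and 9 are all assumptions. Because each higher order contains the lower orders' features, training error can only fall as the order grows, while on a typical run the error against the true function rises once there are as many features as samples.

```python
import numpy as np

rng = np.random.default_rng(0)

def features(x, order):
    # Bias, raw input, then sin/cos pairs up to `order` (1-D input in [-1, 1]).
    cols = [np.ones_like(x), x]
    for k in range(1, order + 1):
        cols += [np.sin(k * np.pi * x), np.cos(k * np.pi * x)]
    return np.stack(cols, axis=1)

def fit_and_score(order, x_tr, y_tr, x_te, y_te):
    # Least squares stands in for training a network (assumed simplification).
    coef, *_ = np.linalg.lstsq(features(x_tr, order), y_tr, rcond=None)
    mse = lambda x, y: float(np.mean((features(x, order) @ coef - y) ** 2))
    return mse(x_tr, y_tr), mse(x_te, y_te)

true_f = lambda x: np.sin(np.pi * x)
x_tr = rng.uniform(-1, 1, 20)
y_tr = true_f(x_tr) + rng.normal(0, 0.2, 20)   # noisy training samples
x_te = np.linspace(-1, 1, 200)
y_te = true_f(x_te)                             # noise-free test targets

results = {o: fit_and_score(o, x_tr, y_tr, x_te, y_te) for o in (1, 3, 9)}
for o, (tr, te) in results.items():
    print(f"order {o}: train MSE {tr:.4f}, test MSE {te:.4f}")
```

At order 9 there are 20 features for 20 samples, so the fit can pass through every noisy point.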

What is the challenge presented at the end of the video, and why is it important?

-The challenge presented at the end of the video is to approximate the Mandelbrot set as precisely and efficiently as possible, given only a random sample of points. It matters because methods that succeed on this toy problem could turn out to be better function approximators with real-world applications.
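
Setting up the challenge amounts to building a labeled dataset. This sketch assumes the common escape-time test with a cap of 100 iterations, a sampling window of [-2, 1] x [-1.5, 1.5], and labels of 1.0 (inside) and 0.0 (outside); the video's exact sampling setup is not specified here, and the function names are hypothetical.

```python
import random

def in_mandelbrot(c, max_iter=100):
    """Escape-time test: c counts as inside if |z| stays <= 2 for max_iter
    steps of z -> z*z + c (a finite-iteration approximation of membership)."""
    z = 0j
    for _ in range(max_iter):
        z = z * z + c
        if abs(z) > 2:
            return 0.0
    return 1.0

def sample_dataset(n, seed=0):
    """Random ((x, y), label) pairs over the assumed window [-2, 1] x [-1.5, 1.5]."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x, y = rng.uniform(-2.0, 1.0), rng.uniform(-1.5, 1.5)
        data.append(((x, y), in_mandelbrot(complex(x, y))))
    return data

data = sample_dataset(10_000)
print(sum(label for _, label in data) / len(data))  # fraction of samples inside
```

A function approximator is then trained to map each (x, y) to its label and judged on how well it reproduces the boundary, especially when zoomed in.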

What is the curse of dimensionality, and how does it affect function approximation methods?

-The curse of dimensionality refers to the problem where computational complexity and resource requirements grow exponentially as the dimensionality of the input/output space increases. Many function approximation methods break down or become impractical for high-dimensional problems due to the curse of dimensionality.

What is the role of activation functions in neural networks?

-Activation functions define the mathematical shape of each neuron's output. They introduce non-linearity, which lets the network learn complex, non-linear functions; without them, any stack of layers would collapse into a single linear function. The choice of activation function, such as ReLU, leaky ReLU, sigmoid, or tanh, affects how well the network learns.
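
The activation functions mentioned in the video (ReLU, leaky ReLU, sigmoid, and tanh) can be written as plain Python functions. The leaky-ReLU negative slope of 0.01 is an assumed, commonly used default, and the sigmoid is written in a numerically stable form that avoids overflowing `math.exp` for large negative inputs.

```python
import math

def relu(z):
    return max(0.0, z)

def leaky_relu(z, slope=0.01):
    # Assumed slope; a small negative-side slope keeps a nonzero gradient.
    return z if z > 0 else slope * z

def sigmoid(z):
    # Stable form: only ever exponentiates a non-positive number.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def tanh(z):
    return math.tanh(z)

for f in (relu, leaky_relu, sigmoid, tanh):
    print(f.__name__, [round(f(z), 3) for z in (-2.0, 0.0, 2.0)])
```

ReLU and leaky ReLU produce piecewise-linear networks, while sigmoid and tanh produce smooth curves.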

Why is it important to normalize the input and output values for neural networks?

-Normalizing the input and output values to a small range, such as -1 to 1 (centered on zero) or 0 to 1, improves the learning process for neural networks. It keeps values where activation functions are most responsive and keeps gradients on a similar scale across parameters, which typically makes training faster and more stable.
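
A minimal min-max normalization sketch, assuming a target range of [-1, 1]; it also returns the original minimum and maximum so that network outputs can be mapped back to the original scale. The function names are hypothetical.

```python
def normalize(values, lo=-1.0, hi=1.0):
    """Linearly rescale values so min -> lo and max -> hi (assumed [-1, 1]).
    Returns the scaled list plus (vmin, vmax) to undo the mapping later."""
    vmin, vmax = min(values), max(values)
    span = vmax - vmin or 1.0   # avoid division by zero for constant input
    scaled = [lo + (v - vmin) * (hi - lo) / span for v in values]
    return scaled, (vmin, vmax)

def denormalize(scaled, vmin, vmax, lo=-1.0, hi=1.0):
    # Inverse of normalize: map [lo, hi] back to [vmin, vmax].
    span = vmax - vmin or 1.0
    return [vmin + (s - lo) * span / (hi - lo) for s in scaled]

pixels = [0, 64, 128, 255]                # raw 8-bit pixel values
scaled, (vmin, vmax) = normalize(pixels)
print(scaled)                             # first and last map to -1.0 and 1.0
print(denormalize(scaled, vmin, vmax))    # recovers the originals (up to rounding)
```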

What is the significance of the Taylor series in function approximation?

-The Taylor series is an infinite sum of polynomial terms that approximates a function around a specific point. Computing the powers x, x^2, x^3, ... as input features and feeding them to a single linear layer turns that layer into a learnable polynomial, whose coefficients are found through backpropagation. However, a truncated Taylor series is accurate mainly near its expansion point and struggles to approximate functions over a wider range of inputs.
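
The sketch below illustrates both points under stated assumptions. Part 1 evaluates the truncated Taylor series of sin around 0 (degree 7, chosen arbitrarily) and shows the error exploding away from the expansion point. Part 2 feeds the same powers of x to a single linear layer fitted over the assumed interval [-6, 6]; it solves for the weights with least squares instead of backpropagation, which reaches the optimum that gradient descent would converge toward for this linear model.

```python
import math
import numpy as np

def taylor_features(x, degree):
    """Columns x^0, x^1, ..., x^degree (the inputs a Taylor-style layer sees)."""
    return np.stack([x ** k for k in range(degree + 1)], axis=1)

# Part 1: truncated Taylor series of sin around 0; accurate near 0, poor far away.
def sin_taylor(x, degree=7):
    return sum((-1) ** (k // 2) * x ** k / math.factorial(k)
               for k in range(1, degree + 1, 2))

for x0 in (0.5, 3.0, 6.0):
    print(f"x={x0}: Taylor error {abs(sin_taylor(x0) - math.sin(x0)):.2e}")

# Part 2: a linear layer on the same features, fit over an interval, not at a point.
grid = np.linspace(-6, 6, 400)
A = taylor_features(grid, 7)
w, *_ = np.linalg.lstsq(A, np.sin(grid), rcond=None)
print("max error of fitted degree-7 polynomial:", np.abs(A @ w - np.sin(grid)).max())
```

Fitting over the whole interval spreads the error evenly instead of concentrating accuracy at a single point.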

Outlines

🧠 Introduction to Neural Networks and Function Approximation

This paragraph introduces the concept of neural networks as universal function approximators. It explains how neural networks learn by approximating functions from input-output data points. It describes the architecture of a fully connected feed-forward network: neurons, weights, biases, and activation functions. It emphasizes that, given enough neurons, neural networks can approximate any continuous function, making them powerful tools for modeling and understanding the world.
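
A forward pass through such a network can be sketched in a few lines of NumPy. The layer sizes, the tanh activation, and the random initialization are assumptions for illustration; each hidden neuron applies the activation to a weighted sum of its inputs plus a bias, and the final layer is left linear.

```python
import numpy as np

def forward(x, layers, activation=np.tanh):
    """Forward pass of a fully connected feed-forward network.

    layers: list of (W, b) pairs; W has shape (n_in, n_out), b has shape (n_out,).
    Hidden layers apply `activation` (tanh assumed); the last layer stays linear
    so outputs are not squashed into the activation's range.
    """
    h = np.asarray(x, dtype=float)
    for i, (W, b) in enumerate(layers):
        z = h @ W + b                       # weighted sums plus biases
        h = z if i == len(layers) - 1 else activation(z)
    return h

rng = np.random.default_rng(0)
sizes = [2, 16, 16, 1]   # assumed: (x, y) in, one value out
layers = [(rng.normal(0, 1, (m, n)), np.zeros(n)) for m, n in zip(sizes, sizes[1:])]
print(forward([[0.1, -0.3], [0.5, 0.5]], layers).shape)   # (2, 1)
```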

🖼️ Learning Curves and Higher-Dimensional Problems

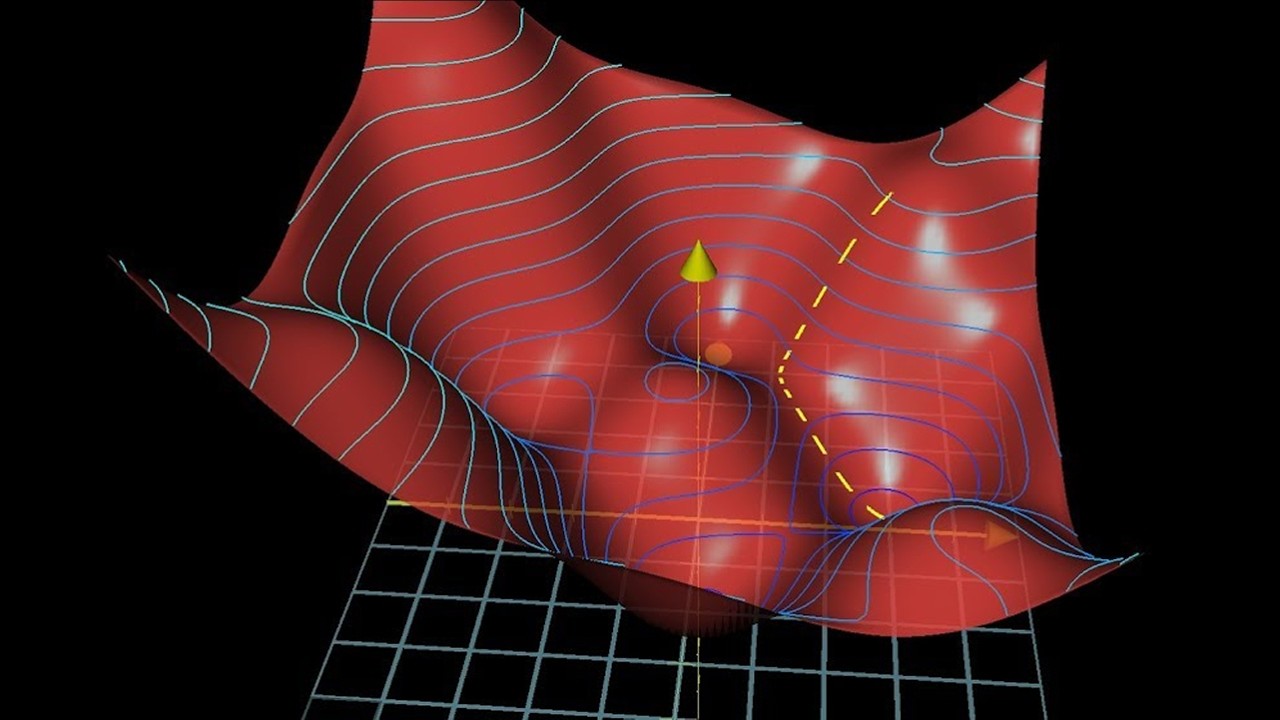

This paragraph explores training neural networks on higher-dimensional problems, such as approximating images and 3D surfaces. It discusses techniques like normalization, the choice of activation function (ReLU, leaky ReLU, sigmoid, and tanh), and visualizing the learning process. It highlights the difficulty of learning complex shapes like spiral shells and introduces the curse of dimensionality: for many methods, the cost of approximation grows exponentially with the number of dimensions.
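
Treating an image as a function means turning every pixel into one training example. This minimal sketch assumes a grayscale image stored as a 2-D array of 0-255 values, and maps pixel coordinates to [-1, 1] and brightness to [0, 1]; the helper name and ranges are hypothetical choices.

```python
import numpy as np

def image_to_dataset(img):
    """Turn a grayscale image (2-D array, values 0..255) into training pairs.

    Inputs: pixel (row, col) coordinates scaled to [-1, 1] (assumed range).
    Outputs: pixel brightness scaled to [0, 1] (assumed range).
    """
    img = np.asarray(img, dtype=float)
    h, w = img.shape
    rows, cols = np.meshgrid(np.linspace(-1, 1, h), np.linspace(-1, 1, w), indexing="ij")
    inputs = np.stack([rows.ravel(), cols.ravel()], axis=1)   # shape (h*w, 2)
    targets = (img / 255.0).reshape(-1, 1)                    # shape (h*w, 1)
    return inputs, targets

img = np.array([[0, 255, 0],
                [255, 0, 255]])       # a tiny 2x3 test "image"
X, y = image_to_dataset(img)
print(X.shape, y.shape)               # (6, 2) (6, 1)
```

After training, querying the network on a denser coordinate grid renders its learned version of the image.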

📐 Alternative Methods for Function Approximation

This paragraph introduces alternative mathematical methods for function approximation, namely the Taylor series and the Fourier series. It explains how these series can be represented as neural network layers, and how their terms can be computed as additional input features (Taylor features and Fourier features) to improve approximation. It demonstrates the benefits of Fourier features for image approximation and discusses the curse of dimensionality for higher-dimensional Fourier series.

🌀 Tackling the Mandelbrot Set Approximation

This paragraph focuses on the challenging task of approximating the Mandelbrot set, an infinitely complex fractal, using neural networks and Fourier features. It showcases the improved performance of a large neural network with high-order Fourier features in capturing more detail of the Mandelbrot set. However, the limitations of these approximations in capturing infinite detail are also highlighted.

🔢 Real-World Application: MNIST Digit Classification

The paragraph transitions to a real-world problem, the MNIST handwritten digit classification task, to evaluate the effectiveness of neural networks and Fourier features on high-dimensional inputs. It demonstrates that while Fourier features provide marginal improvement for low orders, they can lead to overfitting and degraded performance for higher orders. The importance of selecting appropriate methods for different tasks is emphasized.

🏆 Conclusion and Open Challenge

The final paragraph concludes the video by reflecting on the power of function approximation and neural networks as mathematical tools. It poses an open challenge to the audience: use universal function approximators to approximate the Mandelbrot set more precisely, preserving more of its detail at deeper zoom levels, and suggests that improved solutions could have real-world applications. The video encourages exploration and emphasizes that better methods may be waiting to be discovered.

Keywords

💡Neural Network

💡Function Approximation

💡Dimensionality

💡Activation Function

💡Backpropagation

💡Taylor Series

💡Fourier Series

💡Overfitting

💡Mandelbrot Set

💡Curse of Dimensionality

Highlights

Neural networks are universal function approximators, meaning they can learn to approximate any continuous function by fitting a curve to data points.

The goal of artificial intelligence is to write programs that can understand, model, and predict the world, ideally by building their own functions; this is the purpose of function approximation.

Neural networks are function-building machines that approximate unknown target functions from data samples of inputs and outputs.

The learning process is visualized, showing how a network bends and shapes its curve to fit the data points.

Neural networks can learn higher-dimensional problems, such as approximating images by treating pixel coordinates as inputs and pixel values as outputs.

Practical techniques such as normalization, choosing different activation functions, and adjusting the learning rate can improve the approximation and speed up learning.

The Taylor series and Fourier series are alternative mathematical tools for function approximation, which can be computed as additional input features for neural networks.

Fourier features, based on sines and cosines of different frequencies, significantly improve the approximation quality for low-dimensional problems.

The curse of dimensionality appears: many function approximation methods break down as the input dimensionality increases.

Neural networks handle higher dimensionality comparatively well, since adding an input dimension only requires adding an input neuron.

Fourier features help approximate the complex, infinitely detailed Mandelbrot set, although the approximation still cannot capture its infinite detail.

The MNIST handwritten digit recognition problem serves as a real-world example, showing that Fourier features provide only minor improvements for high-dimensional inputs.

No single architecture, model, or method is the best fit for all tasks, and different approaches are required for different problems.

The Mandelbrot set approximation problem is presented as a fun challenge to find better solutions for precisely and deeply approximating it from a random sample of points.

Solutions to this toy problem could potentially have uses in the real world, and there may be far better solutions waiting to be discovered.
