MIT 6.S191 (2023): Recurrent Neural Networks, Transformers, and Attention

TLDR

In this lecture, Ava covers sequence modeling with neural networks, building on Alexander's opening lecture on neural network fundamentals. She begins by showing how sequential data informs predictions, such as forecasting the trajectory of a moving ball, and extends the idea to real-world applications like audio processing, language, and financial markets. The lecture traces the progression from simple perceptrons to recurrent neural networks (RNNs) and the Transformer architecture, highlighting the need to handle variable sequence lengths and long-term dependencies. Ava also discusses the limitations of RNNs, such as the encoding bottleneck and the exploding and vanishing gradient problems, and introduces remedies like gradient clipping for exploding gradients and the LSTM (Long Short-Term Memory) network for vanishing gradients. The lecture closes with self-attention, the mechanism underpinning Transformers, which are capable of advanced tasks in language, biology, and computer vision.

Takeaways

- 📈 The lecture introduces sequence modeling, focusing on building neural networks capable of handling sequential data, which is crucial for various applications like language processing and time series analysis.

- 🌟 Sequential data is pervasive, from sound waves in audio to characters in text, and is fundamental in fields ranging from finance to biology.

- 🔍 The concept of recurrence is pivotal in defining Recurrent Neural Networks (RNNs), which maintain an internal state or memory term that is updated with each time step.

- 🔧 RNNs are designed to process variable-length sequences and capture long-term dependencies, which are essential for tasks like language modeling and machine translation.

- 🧠 Embeddings are used to numerically represent textual data, allowing neural networks to understand and process language through vector representations.

- 🔁 The backpropagation through time algorithm is used to train RNNs, which involves backpropagating errors across all time steps of the sequence.

- 🚀 LSTM (Long Short-Term Memory) networks are introduced as an advanced type of RNN that can better handle long-term dependencies through gated information flow.

- 🚨 Exploding and vanishing gradients are challenges in training RNNs, especially on long sequences. Exploding gradients can be mitigated with gradient clipping; vanishing gradients call for a careful choice of activation function, parameter initialization, or gated units such as LSTMs.

- 🎶 The application of RNNs is showcased through music generation, where the model predicts the next note in a sequence, creating original compositions.

- 🔍 The Transformer architecture is highlighted as a powerful alternative to RNNs for sequence modeling, using self-attention mechanisms to focus on important parts of the input data.

- 🛠 Positional encoding is a technique used in conjunction with self-attention models to maintain the order of sequence data without processing it step by step.

- ⚙️ Multiple self-attention heads allow a network to focus on different aspects of the input data simultaneously, creating a richer representation for complex tasks.
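The positional-encoding takeaway can be made concrete with a small sketch. The summary does not say which encoding scheme the lecture uses, so the sinusoidal scheme below is an assumption chosen for illustration, and the function name `sinusoidal_positional_encoding` is hypothetical. The idea is that each position gets a distinct vector that is added to the token embeddings, so order information survives even though the whole sequence is processed at once.

```python
import numpy as np

def sinusoidal_positional_encoding(seq_len, d_model):
    """Return a (seq_len, d_model) matrix of position vectors.

    Assumes d_model is even. Even columns hold sines, odd columns hold
    cosines, each pair at a different frequency.
    """
    positions = np.arange(seq_len)[:, None]            # shape (seq_len, 1)
    dims = np.arange(0, d_model, 2)[None, :]           # shape (1, d_model / 2)
    angles = positions / np.power(10000.0, dims / d_model)
    encoding = np.zeros((seq_len, d_model))
    encoding[:, 0::2] = np.sin(angles)
    encoding[:, 1::2] = np.cos(angles)
    return encoding

# Illustrative use: add position information to (random) token embeddings.
rng = np.random.default_rng(0)
token_embeddings = rng.normal(size=(10, 16))
pos_encoding = sinusoidal_positional_encoding(10, 16)
inputs_with_position = token_embeddings + pos_encoding
```

Because every row of the encoding differs, two identical tokens at different positions end up with different input vectors.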

Q & A

What is the main focus of Lecture 2 presented by Ava?

-Lecture 2 focuses on sequence modeling, specifically how to build neural networks that can handle and learn from sequential data.

What is the basic concept behind sequential data?

-Sequential data refers to information that is ordered and dependent on its position in a sequence, such as time series data, audio, text, or any other form of data that has a temporal or ordered component.

How does the recurrent neural network (RNN) differ from a feedforward neural network?

-Unlike feedforward neural networks that process information in a static, one-to-one input-output manner, RNNs are designed to handle sequential data by maintaining an internal state that captures information from previous time steps.

What is the issue with treating sequential data as a series of independent instances?

-Treating sequential data as independent instances fails to capture the temporal dependencies between data points. This can lead to less accurate predictions, as the model would not be able to leverage the context provided by past data points.

How does an RNN use its internal state to process sequential data?

-An RNN uses a recurrence relation that links the network's computations at a particular time step to its prior history. This internal state, or memory term, is maintained and updated as the network processes the sequence, allowing it to incorporate past information into its predictions.
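A minimal NumPy sketch of this recurrence follows. The tanh update and linear readout are the common textbook form of a simple RNN; the names `rnn_step`, `run_rnn`, `W_xh`, `W_hh`, and `W_hy`, as well as the random initialization, are illustrative assumptions rather than code from the lecture.

```python
import numpy as np

def rnn_step(x_t, h_prev, W_xh, W_hh, W_hy):
    """One recurrence step: update the hidden state, then read out a prediction."""
    h_t = np.tanh(W_hh @ h_prev + W_xh @ x_t)  # new state mixes old state and current input
    y_t = W_hy @ h_t                           # output is a linear readout of the state
    return y_t, h_t

def run_rnn(xs, hidden_dim, output_dim, seed=0):
    """Apply the same weights at every time step of xs, which has shape (T, input_dim)."""
    rng = np.random.default_rng(seed)
    input_dim = xs.shape[1]
    W_xh = rng.normal(scale=0.1, size=(hidden_dim, input_dim))
    W_hh = rng.normal(scale=0.1, size=(hidden_dim, hidden_dim))
    W_hy = rng.normal(scale=0.1, size=(output_dim, hidden_dim))
    h = np.zeros(hidden_dim)  # initial state: no history yet
    ys = []
    for x_t in xs:
        y_t, h = rnn_step(x_t, h, W_xh, W_hh, W_hy)
        ys.append(y_t)
    return np.stack(ys), h

xs = np.ones((5, 3))  # a toy sequence of 5 time steps with 3 features each
ys, h_final = run_rnn(xs, hidden_dim=4, output_dim=2)
```

The loop works for any sequence length because the weights are shared across time steps and only the state `h` changes.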

What are the key design criteria for an RNN to effectively handle sequential data?

-The key design criteria include the ability to handle sequences of variable lengths, track and learn dependencies over time, maintain the order of observations, and perform parameter sharing across different time steps.

How does the concept of embedding help in representing textual data for neural networks?

-Embedding transforms textual data into a numerical format by mapping words to vectors of fixed size. This allows neural networks to process language data by treating words as numerical inputs, capturing semantic relationships between words through their vector representations.
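As a rough illustration, the sketch below contrasts a one-hot encoding with an embedding lookup on a toy vocabulary. The vocabulary, the dimension, and the names `one_hot`, `embed`, and `embedding_matrix` are made up for the example, and the embedding values are random here, whereas in a real model they would be learned during training.

```python
import numpy as np

vocab = ["i", "love", "deep", "learning"]  # toy vocabulary (illustrative)
word_to_index = {word: i for i, word in enumerate(vocab)}

def one_hot(word):
    """Sparse encoding: a vector of zeros with a single 1 at the word's index."""
    vec = np.zeros(len(vocab))
    vec[word_to_index[word]] = 1.0
    return vec

embedding_dim = 3
rng = np.random.default_rng(0)
# Random values stand in for learned parameters.
embedding_matrix = rng.normal(size=(len(vocab), embedding_dim))

def embed(word):
    """Dense encoding: look up the row of the embedding matrix for this word."""
    return embedding_matrix[word_to_index[word]]

sentence = ["i", "love", "deep", "learning"]
embedded = np.stack([embed(word) for word in sentence])  # shape (4, 3)
```

The lookup is equivalent to multiplying the one-hot vector by the embedding matrix, which is why the two encodings are often presented together.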

What is the backpropagation through time (BPTT) algorithm?

-Backpropagation through time (BPTT) is the variant of backpropagation used to train RNNs. The loss is computed at each time step and summed over the sequence; gradients of that total loss are then propagated backward through every time step, from the end of the sequence to its beginning, to update the weights shared across all steps.

What are the limitations of RNNs in terms of processing long-term dependencies?

-RNNs can struggle with long-term dependencies due to issues like the vanishing gradient problem, where the gradients used for updating weights become increasingly small over many time steps, making it difficult to learn from data points that are far apart in the sequence.
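The effect can be seen in a small numerical experiment. The sketch below is a simplification: it ignores the activation function's derivative and models backpropagation through time as repeated multiplication by the transpose of the recurrent weight matrix. The function name `backprop_norms` and the use of a scaled orthogonal matrix are assumptions made so the growth or decay rate is easy to control.

```python
import numpy as np

def backprop_norms(weight_scale, steps=50, hidden_dim=8, seed=0):
    """Track the gradient norm as it is pushed back through `steps` time steps."""
    rng = np.random.default_rng(seed)
    # An orthogonal matrix preserves norms, so weight_scale alone sets the rate.
    Q, _ = np.linalg.qr(rng.normal(size=(hidden_dim, hidden_dim)))
    W_hh = weight_scale * Q
    grad = np.ones(hidden_dim)
    norms = []
    for _ in range(steps):
        grad = W_hh.T @ grad  # one step further back in time
        norms.append(np.linalg.norm(grad))
    return norms

shrinking = backprop_norms(weight_scale=0.5)  # norm falls toward zero (vanishing)
growing = backprop_norms(weight_scale=1.5)    # norm blows up (exploding)
```

With a scale below 1 the signal from distant time steps is effectively lost after a few dozen steps, and with a scale above 1 it grows without bound.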

How do LSTM networks address the vanishing gradient problem?

-LSTM (Long Short-Term Memory) networks introduce a gated mechanism that controls the flow of information. This gating allows the network to maintain a cell state that can capture long-term dependencies more effectively than standard RNNs.
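A single LSTM step can be sketched as below. This follows the commonly used formulation with forget, input, and output gates; the summary does not spell out the lecture's exact equations, so the gate layout, the stacked weight matrix `W`, and the name `lstm_step` are assumptions for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM step. W has shape (4 * hidden, hidden + input); b has shape (4 * hidden,)."""
    hidden = h_prev.shape[0]
    z = W @ np.concatenate([h_prev, x_t]) + b
    f = sigmoid(z[0:hidden])                 # forget gate: how much old cell state to keep
    i = sigmoid(z[hidden:2 * hidden])        # input gate: how much new information to store
    o = sigmoid(z[2 * hidden:3 * hidden])    # output gate: how much of the cell to expose
    g = np.tanh(z[3 * hidden:4 * hidden])    # candidate values for the cell state
    c_t = f * c_prev + i * g                 # additive update of the cell state
    h_t = o * np.tanh(c_t)
    return h_t, c_t

hidden_dim, input_dim = 4, 3
rng = np.random.default_rng(0)
W = rng.normal(scale=0.1, size=(4 * hidden_dim, hidden_dim + input_dim))
b = np.zeros(4 * hidden_dim)
h_t, c_t = lstm_step(np.ones(input_dim), np.zeros(hidden_dim), np.zeros(hidden_dim), W, b)
```

When the forget gate is close to 1 and the input gate close to 0, the cell state passes through almost unchanged, which is what lets information persist over many time steps.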

What is the Transformer architecture and how does it improve upon RNNs?

-The Transformer architecture is a more recent development in deep learning models for sequence modeling that uses self-attention mechanisms to process sequences. It allows for parallel processing and does not suffer from the same bottlenecks as RNNs, such as the need for sequential processing and the issues with long-term dependencies.

How does the self-attention mechanism in Transformers enable the network to focus on important parts of the input data?

-The self-attention mechanism computes a set of query, key, and value vectors from the input data. It then calculates attention scores that determine the importance of each part of the input, and uses these scores to weight and combine the values, resulting in a representation that focuses more on the most relevant features.
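The query, key, and value computation can be sketched in a few lines of NumPy. The version below is standard scaled dot-product attention for a single head; the projection matrices are random stand-ins for learned weights, and the names `self_attention`, `W_q`, `W_k`, and `W_v` are illustrative.

```python
import numpy as np

def softmax(scores):
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    shifted = scores - scores.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """X has shape (seq_len, d_model); returns the attended output and the weights."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    scores = Q @ K.T / np.sqrt(K.shape[-1])  # similarity of every query to every key
    weights = softmax(scores)                # each row sums to 1
    return weights @ V, weights              # weighted combination of the values

rng = np.random.default_rng(0)
X = rng.normal(size=(5, 8))    # 5 positions with 8 features each
W_q = rng.normal(size=(8, 4))  # random stand-ins for learned projections
W_k = rng.normal(size=(8, 4))
W_v = rng.normal(size=(8, 4))
output, attention_weights = self_attention(X, W_q, W_k, W_v)
```

All positions are handled in one matrix product, with no loop over time steps, which is the source of the parallelism mentioned above.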

Outlines

📈 Introduction to Sequence Modeling

Ava introduces Lecture 2, focusing on sequence modeling, which is about building neural networks to handle sequential data. She builds upon Alexander's first lecture by discussing how neural networks can be adapted to process data that has a temporal or sequential aspect. The lecture aims to demystify potentially confusing components and establish a strong foundation in understanding the math and operations behind these networks. Ava uses the example of predicting the trajectory of a ball to illustrate the concept of sequential data and its importance in various fields.

🔄 Understanding Recurrent Neural Networks (RNNs)

The lecture delves into the concept of recurrence and the definition of RNNs. Ava explains that unlike perceptrons and feed-forward models, RNNs can handle sequential information by maintaining a state that captures memory from previous time steps. She describes the process of how RNNs update their internal state and make predictions at each time step, highlighting the importance of the recurrence relation that links the network's computations across different time steps.

🤖 Working of RNNs and Computational Graphs

Ava outlines the operational details of RNNs, including how they generate output predictions and update their hidden states. She discusses the computational graph of an RNN, which can be visualized as unrolling the recurrence over time. The lecture also touches on how RNNs are trained, introducing the concept of loss at each time step and the summation of these losses to form the total loss for the network.
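The summation of per-step losses can be written out directly. The sketch below assumes a classification-style output at each time step scored with cross-entropy, which is one common choice; the summary does not say which loss the lecture uses, and the name `sequence_loss` is hypothetical.

```python
import numpy as np

def softmax(z):
    z = z - z.max()  # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()

def sequence_loss(logits_per_step, targets):
    """Total loss = sum over time steps of the cross-entropy at each step."""
    step_losses = [
        -np.log(softmax(logits)[target])
        for logits, target in zip(logits_per_step, targets)
    ]
    return sum(step_losses), step_losses

# Toy example: 3 time steps, 4 classes, uniform predictions at every step.
logits_per_step = np.zeros((3, 4))
targets = [0, 1, 2]
total_loss, step_losses = sequence_loss(logits_per_step, targets)
```

Training then differentiates this single total with respect to the shared weights, so every time step contributes to the same update.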

🧠 RNN Implementation and Design Criteria

The lecture moves on to the practical aspects of RNN implementation, including coding in Python or using high-level APIs like TensorFlow. Ava emphasizes design criteria for robust RNNs, such as handling variable sequence lengths, capturing long-term dependencies, maintaining order, and parameter sharing. She also discusses the challenge of representing text-based data for neural network processing through numerical encoding and embeddings.

🔢 Encoding Language Data and Embeddings

Ava explains the process of transforming language data into numerical encodings that can be processed by neural networks. She introduces the concept of embeddings, which map words to numerical vectors, and discusses one-hot encoding as well as learned embeddings that capture semantic meaning. The lecture also covers how these embeddings are foundational for sequence modeling networks.

📉 Training RNNs and Addressing Vanishing Gradients

The lecture addresses the training of RNNs through the backpropagation algorithm, with a focus on handling sequential information. Ava discusses the challenges of backpropagation through time, including the exploding and vanishing gradient problems. She outlines solutions such as gradient clipping, choosing the right activation functions, parameter initialization, and the introduction of more complex RNN units like LSTMs to better handle long-term dependencies.
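Gradient clipping in particular is simple enough to sketch. The version below rescales all gradients together when their combined norm exceeds a threshold; the function name `clip_by_global_norm` and the threshold value are illustrative choices, and deep learning frameworks ship their own utilities for this.

```python
import numpy as np

def clip_by_global_norm(grads, max_norm):
    """Rescale a list of gradient arrays so their combined L2 norm is at most max_norm."""
    total_norm = np.sqrt(sum(float(np.sum(g ** 2)) for g in grads))
    if total_norm <= max_norm:
        return [g.copy() for g in grads], total_norm  # small gradients pass through
    scale = max_norm / total_norm
    return [g * scale for g in grads], total_norm

# Toy gradients whose combined norm is 13 (3-4-12 triple).
grads = [np.array([3.0, 4.0]), np.array([12.0])]
clipped, norm_before = clip_by_global_norm(grads, max_norm=1.0)
norm_after = np.sqrt(sum(float(np.sum(g ** 2)) for g in clipped))
```

Clipping changes only the size of the update and keeps its direction, which is why it addresses exploding gradients but does nothing for vanishing ones.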

🎵 Practical Application: RNNs in Music Generation

Ava then presents a practical example of RNNs in music generation: the model predicts the next musical note in a sequence and so generates new compositions. She mentions a historical example in which an RNN was trained to complete Schubert's Unfinished Symphony, offering a glimpse of the creative potential of RNNs.

🚧 Limitations of RNNs and the Need for Advanced Models

The lecture acknowledges the limitations of RNNs, such as encoding bottlenecks, slow processing speeds, and difficulties with long memory dependencies. Ava discusses the need to move beyond step-by-step recurrent processing to more powerful architectures that can handle sequential data more effectively, setting the stage for self-attention and Transformers in the remainder of the lecture.

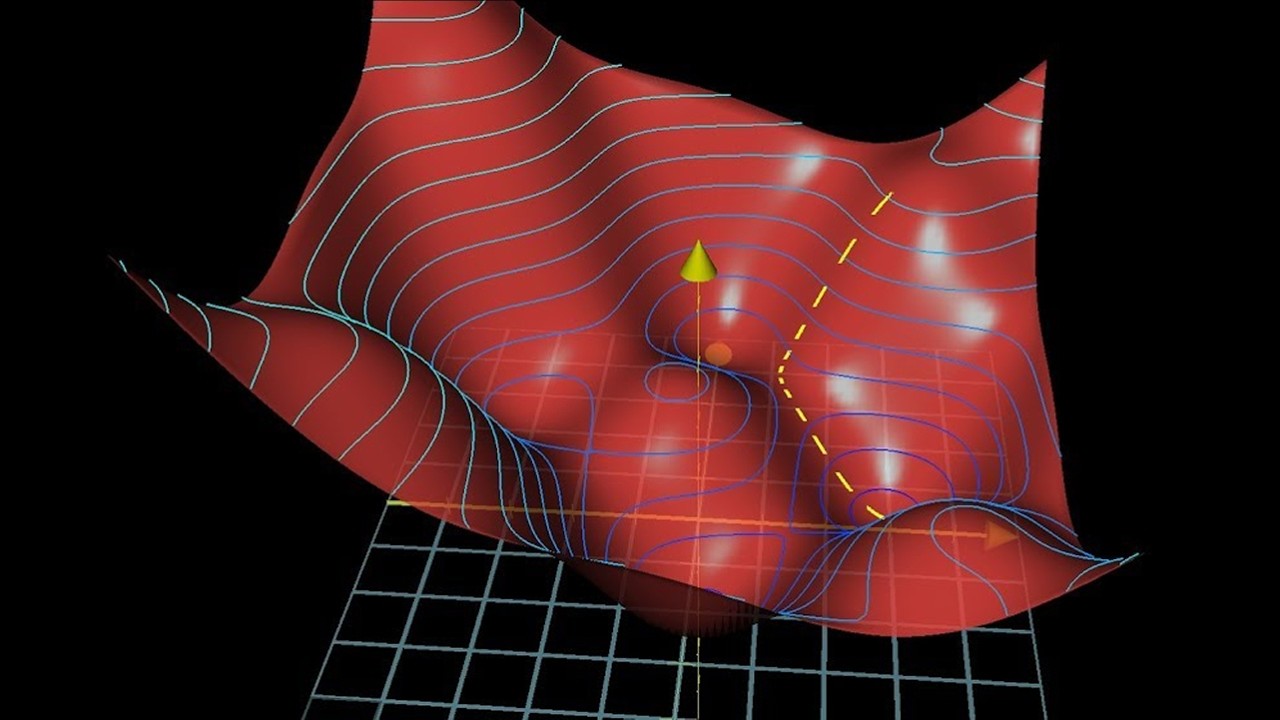

🔍 Introducing Self-Attention Mechanisms

Ava introduces the concept of self-attention as a mechanism to overcome the limitations of RNNs. She explains how self-attention allows models to identify and focus on important parts of the input data without the need for recurrence. The lecture covers the intuition behind self-attention, comparing it to human attention and the process of search, and how it can be used to build more powerful neural networks for deep learning in sequence modeling.

🌟 The Impact and Future of Self-Attention Models

The lecture concludes with a discussion on the impact of self-attention models, such as GPT-3 and AlphaFold 2, across various fields including natural language processing, biology, and medicine. Ava emphasizes the transformative effect of attention mechanisms on computer vision and deep learning, and encourages students to explore these models further during the lab portion and open office hours.

Keywords

💡Sequence Modeling

💡Recurrent Neural Networks (RNNs)

💡Backpropagation Through Time

💡Vanishing Gradients

💡Exploding Gradients

💡Long Short-Term Memory (LSTM)

💡Self-Attention

💡Transformer Architecture

💡Embeddings

💡Positional Encoding

💡Gating Mechanism

Highlights

Introduction to sequence modeling, focusing on building neural networks that can handle sequential data.

Motivation for sequential data through a simple example of predicting a ball's trajectory.

Discussion on the prevalence of sequential data in various fields such as audio, text, medical signals, and climate patterns.

Exploration of different problem definitions in sequential modeling, including classification, regression, and generation tasks.

Explanation of the limitations of perceptrons and feed-forward models in handling sequential data.

Introduction to recurrent neural networks (RNNs) and their ability to maintain a state that captures memory from previous time steps.

Mathematical definition of RNN operations and how they are implemented in code.

Challenges associated with training RNNs, such as exploding and vanishing gradient problems.

Solution to gradient issues using gradient clipping and modifications to activation functions and network architecture.

Introduction to Long Short-Term Memory (LSTM) networks as a solution for tracking long-term dependencies.

Overview of the design criteria for robust sequential modeling, including handling variable sequence lengths and parameter sharing.

Discussion on the encoding bottleneck and the challenges of processing long sequences with RNNs.

Introduction to the concept of self-attention as an alternative to recurrence for handling sequential data.

Explanation of how self-attention mechanisms allow for parallel processing and overcoming the limitations of RNNs.

Overview of the Transformer architecture and its use of self-attention to process a sequence without step-by-step recurrence.

Practical example of using RNNs for music generation, highlighting the potential for creative applications.

Summary of the lecture, emphasizing the foundational concepts of neural networks for sequence modeling and the transition to self-attention models.
