Neural Networks: Crash Course Statistics #41

TLDR

This video explains neural networks, which are computing systems modeled on the human brain that can analyze complex data to detect patterns and make predictions. They have applications in image and speech recognition, data analytics, and more. The video describes how neural networks have 'input' nodes that take in data, 'output' nodes that produce results, and layers of 'hidden' nodes that transform the data. Key concepts covered include deep learning, convolutional neural networks for image processing, and recurrent neural networks for sequence prediction. Overall, the script conveys how neural networks help make sense of big, messy data in creative ways across many real-world domains.

Takeaways

- 😀 Neural networks are used for complex tasks like image recognition, natural language processing, and data modeling

- 👉 They work by examining input data and figuring out the calculations to produce a desired output

- 📊 The nodes and layers transform the input data into useful new features

- 🔁 Recurrent neural networks can process sequential data like text by remembering previous inputs

- 🖼 Convolutional neural networks are used for image processing by examining windows of pixels

- 😎 Generative adversarial networks can create new data that resembles real data

- 🤖 Neural networks allow us to find patterns in complex data that humans can't easily see

- 🌟 Deep learning uses neural networks with multiple layers to perform very complex processing

- ✏️ Neural networks learn by tweaking their calculations when they make incorrect predictions

- 💡 Applications of neural networks range from self-driving cars to art generation
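
The takeaway about learning by tweaking calculations after incorrect predictions can be sketched with a single linear node trained by gradient descent. This is a minimal illustration, not the method shown in the video: the function name `train`, the learning rate, the epoch count, and the toy data (which follows y = 2x + 1) are all assumptions made for the example.

```python
def train(data, learning_rate=0.05, epochs=2000):
    """Fit prediction = weight * x + bias by repeatedly correcting mistakes."""
    weight, bias = 0.0, 0.0
    for _ in range(epochs):
        for x, target in data:
            prediction = weight * x + bias
            error = prediction - target  # how wrong the node was on this example
            # Nudge each value against the gradient of 0.5 * error**2.
            weight -= learning_rate * error * x
            bias -= learning_rate * error
    return weight, bias


# Hypothetical toy data generated from y = 2x + 1.
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
weight, bias = train(data)
print(weight, bias)  # both values should end up close to 2 and 1
```

Each pass makes a small correction proportional to the error, so large mistakes cause large adjustments and correct predictions leave the values almost unchanged.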

Q & A

What are neural networks analogous to in terms of learning?

-Neural networks are analogous to robots that learn to make toy cars not by following human instructions, but by studying existing toy cars and working out how to turn inputs like metal and plastic into finished toy cars.

What is an activation function and how does it help neural networks?

-An activation function transforms the weighted sum of inputs in a neural network before returning an output. Activation functions like the Rectified Linear Unit (ReLU) improve the way neural networks learn and give them more flexibility to model complex relationships.
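
As a rough sketch of the answer above, a single node can be written as a weighted sum followed by ReLU. The names `relu` and `neuron`, and the example weights and bias, are hypothetical values chosen for illustration.

```python
def relu(x):
    """Rectified Linear Unit: negative values become 0, positive values pass through."""
    return max(0.0, x)


def neuron(inputs, weights, bias):
    """Compute the weighted sum of the inputs, then apply the activation function."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return relu(weighted_sum)


# Weighted sum is 0.5 - 2.0 + 0.25 = -1.25, so ReLU returns 0.0.
print(neuron([1.0, 2.0], [0.5, -1.0], 0.25))
# Weighted sum is 0.5 + 2.0 + 0.25 = 2.75, so ReLU passes it through unchanged.
print(neuron([1.0, 2.0], [0.5, 1.0], 0.25))
```

Without the activation function, stacking nodes would only ever produce another weighted sum. The bend at zero is what lets a network model relationships that are not straight lines.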

What are some examples of how neural networks have been used?

-Neural networks have been used for handwritten digit and x-ray image recognition, spelling correction, music generation, and more. They are behind many modern applications like self-driving cars, translation apps, and AI assistants.

What is the difference between feedforward and recurrent neural networks?

-Feedforward neural networks pass data in one direction only, from input to output. Recurrent neural networks add connections that feed a node's output from an earlier step back in as an input, which lets them 'remember' what came before.
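
The difference can be sketched with one node of each kind. This is a simplified illustration under assumed values: the weights, the `tanh` activation, and the function names are hypothetical and not taken from the video.

```python
import math


def feedforward(x, w_input=0.5, bias=0.0):
    """A feedforward node sees only the current input."""
    return math.tanh(w_input * x + bias)


def rnn_step(x, hidden, w_input=0.5, w_hidden=0.8, bias=0.0):
    """A recurrent node also receives its own output from the previous step."""
    return math.tanh(w_input * x + w_hidden * hidden + bias)


def run_rnn(sequence):
    """Process a sequence one element at a time, carrying the hidden state forward."""
    hidden = 0.0  # nothing has been seen before the first element
    states = []
    for x in sequence:
        hidden = rnn_step(x, hidden)
        states.append(hidden)
    return states


states = run_rnn([1.0, 0.0, 1.0])
# The first and third inputs are both 1.0, but the recurrent states differ
# because the third step also carries information about the earlier inputs.
print(states[0], states[2])
# The feedforward node gives the same answer for 1.0 regardless of position.
print(feedforward(1.0))
```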

How do convolutional neural networks work with images?

-Convolutional neural networks look at windows of pixels in images and apply filters to create features. Through steps like pooling, these networks can take a high-resolution image and extract smaller sets of key features for tasks like object recognition.
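
A small sketch of the two steps described above: sliding a filter over windows of pixels, then pooling the result. The 5x5 image, the 2x2 edge filter, and the function names are assumptions for illustration. Real convolutional networks learn their filter values during training rather than having them written by hand.

```python
def convolve2d(image, kernel):
    """Slide a square filter over every window of pixels (no padding, stride 1).

    As in most deep learning libraries, the filter is not flipped, so this is
    technically cross-correlation.
    """
    k = len(kernel)
    output = []
    for r in range(len(image) - k + 1):
        row = []
        for c in range(len(image[0]) - k + 1):
            total = sum(image[r + i][c + j] * kernel[i][j]
                        for i in range(k) for j in range(k))
            row.append(total)
        output.append(row)
    return output


def max_pool(feature_map, size=2):
    """Keep only the largest value in each non-overlapping size x size block."""
    pooled = []
    for r in range(0, len(feature_map) - size + 1, size):
        row = []
        for c in range(0, len(feature_map[0]) - size + 1, size):
            row.append(max(feature_map[r + i][c + j]
                           for i in range(size) for j in range(size)))
        pooled.append(row)
    return pooled


# Hypothetical 5x5 image: dark on the left, bright on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hypothetical filter that responds where brightness increases from left to right.
edge_filter = [[-1, 1],
               [-1, 1]]

features = convolve2d(image, edge_filter)  # 4x4 map, each row is [0, 2, 0, 0]
print(max_pool(features))                  # [[2, 0], [2, 0]]
```

The 25 pixels shrink to 4 values that still record where the edge is, which is the sense in which pooling extracts a smaller set of key features.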

What are some limitations of neural networks?

-One key limitation of neural networks is that they require large amounts of quality data for training, especially for complex deep learning models. They also tend to be computationally intensive and, like any AI system, can perpetuate biases in the data.

What are generative adversarial networks and how do they work?

-Generative adversarial networks (GANs) use two neural networks: a generator that creates new simulated data, and a discriminator that tries to tell real data from fake. As they compete, both networks improve at their jobs, and the generator produces increasingly realistic simulated data.

Can neural networks write creative fiction like Harry Potter chapters?

-While neural networks can mimic styles and structures of existing text, what they generate lacks meaning and narrative coherence. The Harry Potter example shows current limits, as it has correct grammar but nonsense content.

How might neural networks be applied to search and rescue missions?

-Neural networks could potentially be used in image recognition systems on search and rescue drones to identify people, objects, vehicles, buildings, terrain features, and more to help locate missing people faster.

Why are neural networks becoming more popular?

-As data continues growing in size and complexity, neural networks are critical tools to understand patterns, make predictions, and generate new data. They allow us to make use of data that might otherwise be too large and overwhelming to leverage.

Outlines

📺 What are neural networks and how do they work

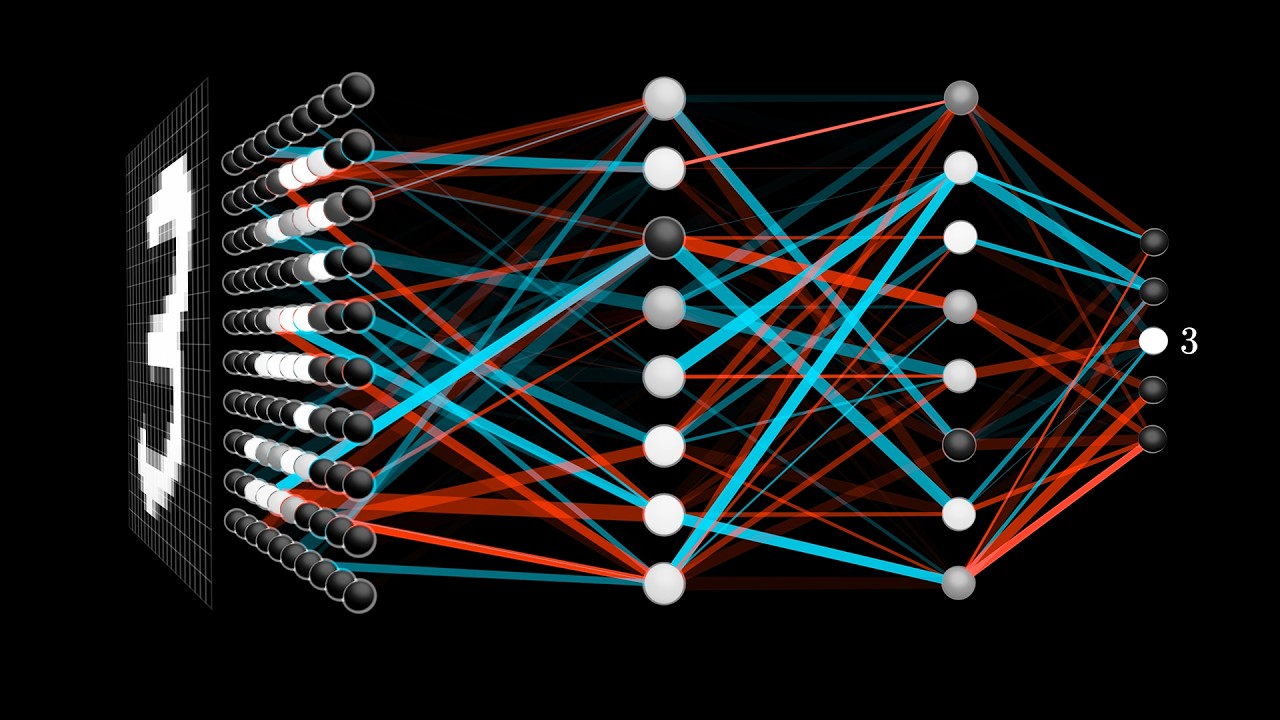

This paragraph provides an introduction to neural networks. It explains that neural networks can take in data and output useful information like predictions. It gives examples like predicting likelihood of hospital infections, generating Harry Potter text, and creating annoying Twitter bots. The paragraph states that we will look at what neural networks are and how they are able to do these things.

❓ Understanding the components and workings of a neural network

This paragraph explains the basics of how a neural network works. It has input nodes that hold values for variables. These feed into layers of other nodes that do calculations to eventually output a prediction. It explains concepts like activation functions, feature generation, and deep learning with multiple layers. The paragraph tries to demystify what the middle layers represent.
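
The flow from input nodes through a hidden layer to an output can be sketched as below. Everything specific here is an assumption for illustration: the three input values, the hand-picked weights and biases, and the choice of a sigmoid on the output so the result reads as a probability. A trained network would learn these numbers from data.

```python
import math


def relu(x):
    return max(0.0, x)


def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense_layer(inputs, weights, biases, activation):
    """Each node takes a weighted sum of all inputs, adds a bias, and applies the activation."""
    return [activation(sum(x * w for x, w in zip(inputs, node_weights)) + b)
            for node_weights, b in zip(weights, biases)]


def predict(inputs):
    # Hypothetical weights: 3 input nodes -> 2 hidden nodes -> 1 output node.
    hidden_weights = [[0.5, -0.2, 0.1],
                      [-0.3, 0.8, 0.4]]
    hidden_biases = [0.0, 0.1]
    output_weights = [[1.0, -1.0]]
    output_biases = [0.0]

    # The hidden layer turns the raw inputs into new features.
    hidden = dense_layer(inputs, hidden_weights, hidden_biases, relu)
    # The output layer combines those features into a single prediction.
    output = dense_layer(hidden, output_weights, output_biases, sigmoid)
    return hidden, output[0]


hidden_features, probability = predict([1.0, 2.0, 3.0])
print(hidden_features, probability)
```

Adding more calls to `dense_layer` between the input and the output gives a deeper network, which is the "multiple layers" that deep learning refers to.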

🤖 Applications and variations of neural networks

This paragraph discusses applications and types of neural networks. It covers recurrent neural networks which can model sequential data and learn patterns. It shows an example of using them for spell checking. The paragraph also introduces convolutional neural networks which are commonly used for image recognition. Finally, it explains generative adversarial networks which can create synthetic data.

Keywords

💡Neural Network

💡Deep Learning

💡Training

💡Features

💡Convolutional Neural Network

💡Generative Adversarial Network (GAN)

💡Recurrent Neural Network (RNN)

💡Natural Language Processing (NLP)

💡Big Data

💡Image Recognition

Highlights

Neural networks can output everything from the probability of someone getting a nasty strain of MRSA to new chapters of Harry Potter

Neural networks feed weighted inputs through activation functions which give them flexibility to model complex relationships

When we have large, complex data, neural networks save time by figuring out which variables are important

Deep learning uses neural networks with multiple layers to find patterns humans can't see

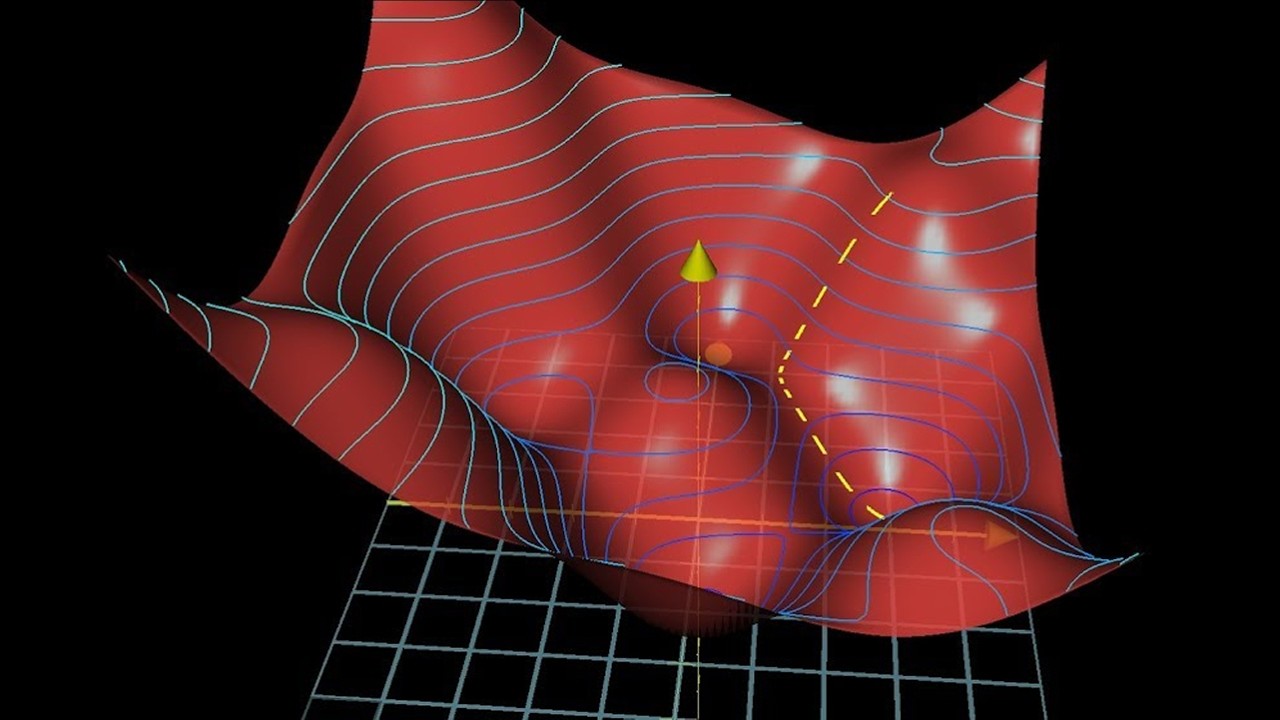

Neural networks learn by figuring out what they predicted wrong and tweaking values to be more accurate next time

Recurrent neural networks can learn sequences like words in a sentence or notes in a melody

Convolutional neural networks transform image pixels into features to classify images

Neural networks power translation, image recognition, and CAPTCHAs

Generative adversarial networks create synthetic data to train other networks

Neural networks detect patterns in big, messy data that humans can't see

As data grows, neural networks will become more common for understanding it

Image recognition with neural networks could help search-and-rescue drones

Natural language processing with neural networks powers voice assistants

Understanding neural networks allows creative applications

Neural networks help us make use of overwhelming data
