What do my results mean? Effect size is not the same as statistical significance. With Tom Reader.

TLDR

In this video, Tom Reader explains the relationship between statistical significance and effect size. He challenges the common misconception that a low p-value alone is enough to declare a study's findings meaningful. Using two hypothetical baldness treatments, he shows why both the statistical significance and the practical impact of a result must be considered. A p-value indicates how likely a result at least as extreme as the one observed would be if there were no real effect; it does not convey the magnitude or real-world relevance of that effect. By comparing one treatment with a small but statistically significant effect and another with a larger but unproven effect, Reader shows that both the size and the significance of an effect must be assessed when interpreting research findings. He concludes by advising researchers to report not only the p-value but also a clear description of the effect size, so that readers understand what the study actually implies.

Takeaways

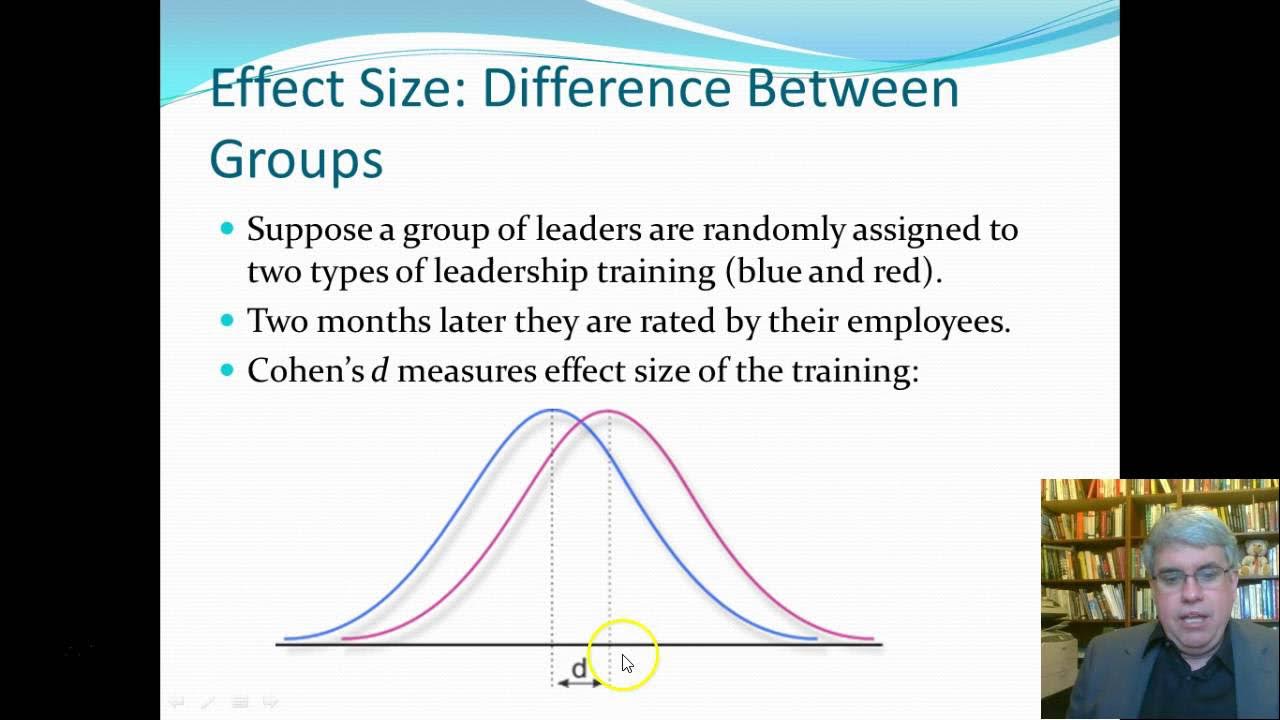

- 🧐 **Effect Size vs. Significance**: Understanding the difference between effect size and statistical significance is crucial for interpreting study results.

- 📉 **P-Value Limitations**: A p-value alone is insufficient to determine the practical significance of a study's findings.

- 🧑‍🔬 **Sample Size Importance**: Larger samples can detect smaller effects, which is why sample size matters when judging a study's results.

- 📏 **Measurement Precision**: The precision of measurements and the methods used can greatly affect the reliability of study outcomes.

- 🔍 **Statistical Tests**: Conducting and reporting a proper statistical test is essential to assess whether an observed effect could plausibly be due to chance alone.

- 📈 **Effect Size Reporting**: When reporting results, it's important to describe the size of the effect detected, not just its statistical significance.

- 📊 **Meaningful Change**: Even statistically significant effects may be too small to be meaningful or practical in real-world applications.

- 🧮 **Baseline Measurements**: It's important to measure and account for baseline differences between groups at the start of a study.

- 🧬 **Long-Term Impact**: Consideration of the long-term impact and practicality of a treatment or intervention is necessary for evaluating its true value.

- 🧲 **Marketing vs. Evidence**: Be wary of marketing claims and seek out hard evidence from scientific reports to assess the validity of a treatment.

- 📝 **Transparent Reporting**: Researchers should aim to provide clear and transparent information about their study's findings and their implications.

- 🔑 **Understanding Results**: The responsibility lies with researchers to ensure that the audience understands both the presence and the magnitude of the effects they are studying.
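The baseline takeaway can be illustrated with a small sketch. Assuming each volunteer is measured at the start and at the end of a study, one simple option (not necessarily the one discussed in the video) is to compare each group's mean change from baseline rather than its final mean. The helper name `mean_change_from_baseline` and all the data below are hypothetical.

```python
from statistics import fmean


def mean_change_from_baseline(baseline: list[float], follow_up: list[float]) -> float:
    """Average per-volunteer change between a baseline and a follow-up measurement.

    baseline[i] and follow_up[i] must belong to the same volunteer.
    """
    if len(baseline) != len(follow_up) or not baseline:
        raise ValueError("need paired, non-empty baseline and follow-up lists")
    return fmean([after - before for before, after in zip(baseline, follow_up)])


# Hypothetical hair lengths (mm): the treated group simply started with longer hair.
control_before, control_after = [10.0, 12.0, 14.0], [13.0, 15.0, 17.0]
treated_before, treated_after = [20.0, 22.0, 24.0], [23.0, 25.0, 27.0]

# Comparing final means alone suggests a 10 mm "effect"...
print(fmean(treated_after) - fmean(control_after))
# ...but both groups changed by the same amount, so the difference in change is 0.
print(mean_change_from_baseline(treated_before, treated_after)
      - mean_change_from_baseline(control_before, control_after))
```

Change scores are only one simple approach; randomly assigning volunteers to groups also helps make large baseline differences unlikely in the first place.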

Q & A

What is the main topic of the video by Tom Reader?

-The video explores the difference between effect size and statistical significance and what each means when interpreting study results.

Why is it insufficient to only report the p-value when sharing study results?

-Reporting only the p-value is insufficient because it indicates only whether the observed effect is unlikely to have arisen by chance, not how large or important that effect is.

What is the hypothetical scenario presented in the video to illustrate the concept of effect size and statistical significance?

-The hypothetical scenario involves Tom Reader imagining himself as bald and looking for a cure, comparing two hair growth treatments, Furball Express and Toupee Away, to illustrate the importance of considering both effect size and statistical significance.

What are the two hair growth treatments mentioned in the video?

-The two hair growth treatments mentioned are Furball Express and Toupee Away.

What is the issue with the study results presented for Furball Express?

-The issue with the study results for Furball Express is the small sample size, lack of precision in measurement tools, and absence of a statistical test to evaluate the observed effect.

How many volunteers were used in the Furball Express study and what was the treatment duration?

-Four volunteers were used in the Furball Express study, and the treatment duration was two weeks.

What was the sample size and treatment duration for the Toupee Away study?

-The sample size for the Toupee Away study was 1000 volunteers, and the treatment duration was six months.

What statistical test was used in the Toupee Away study and what did it reveal?

-A t-test was used in the Toupee Away study, which showed that the average hair length increase of 5 microns was statistically significant (p < 0.01).
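To see why a 5-micron difference can reach p < 0.01 in a large study, the sketch below holds the difference fixed and varies only the sample size. It assumes two equal-sized groups with a common, hypothetical standard deviation of 20 microns, and it uses a normal (z) approximation to the two-sample t-test, which is reasonable only for large samples. The function name `approx_two_sided_p` is hypothetical, not from the video.

```python
from math import sqrt
from statistics import NormalDist


def approx_two_sided_p(mean_diff: float, sd: float, n_per_group: int) -> float:
    """Approximate two-sided p-value for a difference between two group means.

    Normal (z) approximation to the two-sample t-test, assuming both groups
    have size n_per_group and share the standard deviation sd.
    Only reasonable for large samples.
    """
    standard_error = sd * sqrt(2 / n_per_group)
    z = mean_diff / standard_error
    return 2 * NormalDist().cdf(-abs(z))


EFFECT_MICRONS = 5.0  # fixed difference between group means
SD_MICRONS = 20.0     # hypothetical spread of hair growth within each group

for n in (10, 100, 500, 2000):
    p = approx_two_sided_p(EFFECT_MICRONS, SD_MICRONS, n)
    print(f"n per group = {n:>4}: difference = {EFFECT_MICRONS} microns, p ≈ {p:.2g}")
```

The difference between the groups never changes; only the p-value does. A small p-value says the effect is unlikely to be zero, not that it is large.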

What is the problem with the statistically significant result of the Toupee Away study?

-The problem with the statistically significant result of the Toupee Away study is that the effect size is very small (5 microns increase in hair length), making it essentially irrelevant for practical purposes.

What should be reported along with the p-value when sharing study results according to the video?

-Along with the p-value, one should report the size of any detected effect, for example the difference between group means as a percentage or proportion of the control-group mean, or, for linear relationships, a measure such as r-squared.
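As a minimal sketch of the first suggestion, the function below (a hypothetical helper, not from the video) expresses the difference between group means as a percentage of the control-group mean. The numbers in the example are hypothetical.

```python
def percent_of_control(treatment_mean: float, control_mean: float) -> float:
    """Difference between group means as a percentage of the control-group mean."""
    if control_mean == 0:
        raise ValueError("control mean must be non-zero")
    return 100 * (treatment_mean - control_mean) / control_mean


# Hypothetical: control volunteers grew 1000 microns of hair, treated ones 1005.
print(percent_of_control(1005.0, 1000.0))  # → 0.5 (a 0.5% difference)
```

Reported next to the p-value, a figure like this makes it obvious whether a "significant" difference is big enough to matter.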

What is the conclusion Tom Reader draws about the two 'miracle cures' based on the presented data?

-Tom Reader concludes that one should steer clear of both 'miracle cures' because there is no certainty about the benefits of Furball Express, and Toupee Away does not meaningfully improve hair growth despite being statistically significant.

Outlines

🔍 Understanding Effect Size and Statistical Significance

In this segment, Tom Reader introduces effect size and statistical significance, explaining that a p-value alone cannot tell you whether a study's findings matter. He uses a humorous example of seeking a cure for baldness, comparing two hypothetical treatments, Furball Express and Toupee Away, to show why one must look beyond the p-value to the practical significance of a result. He criticizes the Furball Express study for its small sample size, imprecise measurements, and lack of a statistical test, and points out that although Toupee Away's effect is statistically significant, it is minuscule, making the treatment practically ineffective.

📊 The Importance of Reporting Effect Size Alongside P-Value

In this segment, Tom explains why both the p-value and the effect size should be reported when presenting study results. As the Toupee Away example shows, a significant effect is not necessarily a meaningful one. He suggests that researchers describe the effect size clearly, for example as the difference between group means expressed as a percentage, or, for a linear relationship, as the proportion of variation explained (r-squared). He closes by stressing that researchers are responsible for making sure their audience understands both the statistical significance and the practical relevance of their findings.
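For a linear relationship, r-squared is the proportion of the variation in the response that a least-squares straight line explains. A minimal standard-library sketch, using made-up toy data, might look like this (`r_squared` is a hypothetical helper name):

```python
from statistics import fmean


def r_squared(x: list[float], y: list[float]) -> float:
    """Proportion of variation in y explained by a least-squares line on x.

    For simple linear regression this equals the squared Pearson correlation.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least two points")
    mean_x, mean_y = fmean(x), fmean(y)
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    if sxx == 0 or syy == 0:
        raise ValueError("r-squared is undefined when x or y is constant")
    return sxy * sxy / (sxx * syy)


dose = [1.0, 2.0, 3.0, 4.0, 5.0]    # toy data
growth = [1.0, 3.0, 2.0, 5.0, 4.0]  # toy data
print(r_squared(dose, growth))  # → 0.64: the line explains 64% of the variation
```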


Keywords

💡Effect Size

💡Statistical Significance

💡P-value

💡Null Hypothesis

💡Sample Size

💡Clinical Trials

💡Control Group

💡Randomization

💡Measurement Tools

💡r-squared

💡Hypothesis Testing

Highlights

Exploring the difference between effect size and statistical significance.

Importance of interpreting study results beyond just the p-value.

The hypothetical scenario of searching for a cure for baldness.

Evaluating two potential baldness 'miracle cures': Furball Express and Toupee Away.

The limitations of the Furball Express study: a small sample size and imprecise measurements.

The absence of a statistical test in the Furball Express report.

The methodological strengths of the Toupee Away study: a large sample and precise measurements.

The statistically significant but practically insignificant effect of Toupee Away.

The necessity to report both p-value and effect size in study results.

Understanding the difference between a significant effect and a meaningful one.

The example of a 2% reduction in malaria deaths illustrating the importance of effect size.

How to properly report study results including hypothesis testing and effect size description.

Describing effect size through percentages, proportions, or 'r-squared' for linear relationships.

Ensuring the audience understands the implications of study results.

The conclusion to avoid the 'miracle cures' based on the presented evidence.
