What is Effect Size? Statistical significance vs. Practical significance

TLDR

The video script discusses the concept of 'effect size' and how it differs from 'statistical significance'. Statistical significance indicates that a result is unlikely to be due to chance alone, and it is usually assessed with a p-value. However, it does not convey the practical importance or magnitude of a difference. Effect size measures the magnitude of a difference, and it is calculated differently depending on the test used, for example Cohen's d or eta squared. These measures compare the difference between groups to the variability within groups. A common guideline for interpreting Cohen's d is that a value below 0.2 is negligible, 0.2 is small, 0.5 is medium, and 0.8 or above is large. The script emphasizes that while statistical significance is important, it is the effect size that tells us whether a result has practical implications. Modern scientific papers often report both statistical significance and effect size to give a more complete picture of a study's findings.

Takeaways

- 📉 Statistical significance indicates a result is unlikely due to chance, often represented by a p-value.

- 🔍 Statistical significance does not convey the size or practical importance of a result.

- 🎢 With enough data, even very small differences can be statistically significant.

- 🤔 Practical significance is about whether the result matters in the real world, which is a judgment call.

- 📊 Effect size is a measure used to quantify the magnitude of a statistical difference.

- 📈 Different statistical tests have their own effect size measures like Cohen's d, Glass's Δ, or eta squared.

- 📐 Effect size involves comparing the difference between groups to the variability within groups.

- 🔑 A common guideline for interpreting effect sizes is that a Cohen's d below 0.2 is negligible, 0.2 is small, 0.5 is medium, and 0.8 or above is large.

- 📝 Modern scientific papers often report both statistical significance and effect size measures.

- 📚 The importance of effect size has grown among scientists, leading to its inclusion in many research papers.

- 📈 Effect size helps determine the practical significance of a result, beyond just statistical significance.
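The takeaway that effect size compares the difference between groups to the variability within groups can be made concrete with Cohen's d. The sketch below is a minimal illustration, not the video's own calculation: it assumes two independent groups and divides the mean difference by the pooled standard deviation (one common choice; the video does not give a formula), and the scores are made up.

```python
import math
import statistics


def cohens_d(group_a: list[float], group_b: list[float]) -> float:
    """Cohen's d for two independent groups, using the pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    # Numerator: the difference between the group means
    mean_diff = statistics.mean(group_a) - statistics.mean(group_b)
    # statistics.variance uses the n - 1 (sample) denominator
    var_a = statistics.variance(group_a)
    var_b = statistics.variance(group_b)
    # Denominator: pool the two variances, weighting each by its degrees of freedom
    pooled_sd = math.sqrt(((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2))
    return mean_diff / pooled_sd


# Hypothetical scores for two groups
treatment = [5.0, 6.0, 7.0, 8.0, 9.0]
control = [4.0, 5.0, 6.0, 7.0, 8.0]
print(round(cohens_d(treatment, control), 3))
```

Here the means differ by 1 point and the pooled standard deviation is about 1.58, so d is about 0.63: the difference is a bit more than half of the within-group spread.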

Q & A

What does statistical significance imply in the context of a study?

-Statistical significance implies that the observed result is unlikely to have occurred by chance alone. It is often represented by a p-value, which indicates how likely a result at least this extreme would be if there were no real difference, that is, whether it could simply be due to random variation.

What is the limitation of statistical significance in determining the importance of a result?

-Statistical significance only tells us if there is a detectable difference, but it does not inform us about the size or practical importance of that difference. Even very small differences can be statistically significant if enough data is collected.
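To see why a tiny difference can still become significant, the sketch below holds a small effect fixed (d = 0.05) and increases the sample size. It assumes two equal-sized independent groups, an observed Cohen's d exactly equal to 0.05, and a large-sample normal approximation in place of the exact t distribution; the helper names are hypothetical.

```python
import math
from statistics import NormalDist


def t_from_d(d: float, n_per_group: int) -> float:
    """Two-sample t statistic implied by Cohen's d when both groups have n observations."""
    # t = d / sqrt(1/n_a + 1/n_b), which simplifies to d * sqrt(n / 2) when n_a = n_b = n
    return d * math.sqrt(n_per_group / 2)


def approx_two_sided_p(t: float) -> float:
    """Two-sided p-value from a normal approximation (reasonable only for large samples)."""
    return 2 * (1 - NormalDist().cdf(abs(t)))


d = 0.05  # hypothetical effect, "negligible" by the usual benchmarks
for n in (100, 1_000, 10_000, 100_000):
    t = t_from_d(d, n)
    p = approx_two_sided_p(t)
    print(f"n per group = {n:>7}: t = {t:6.2f}, p ≈ {p:.4f}")
```

Under these assumptions the p-value drops below 0.05 once each group has roughly 3,100 observations, even though the effect itself never changes.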

How does the concept of effect size differ from statistical significance?

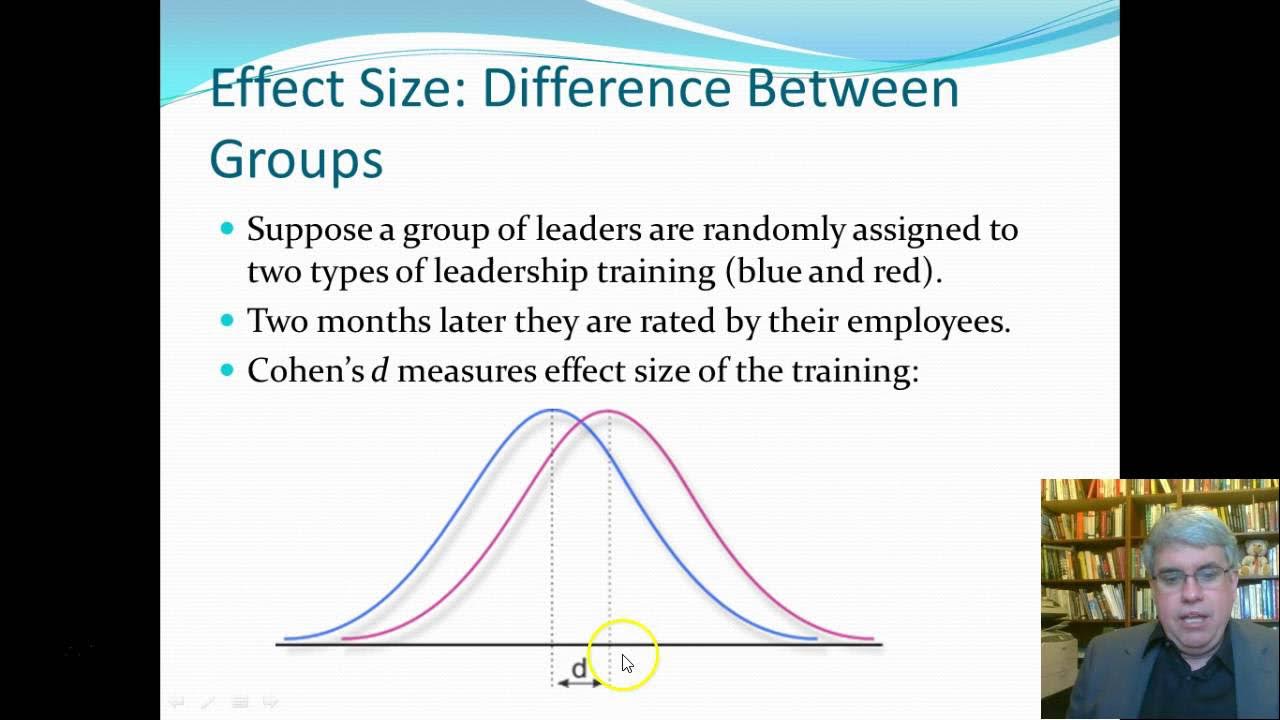

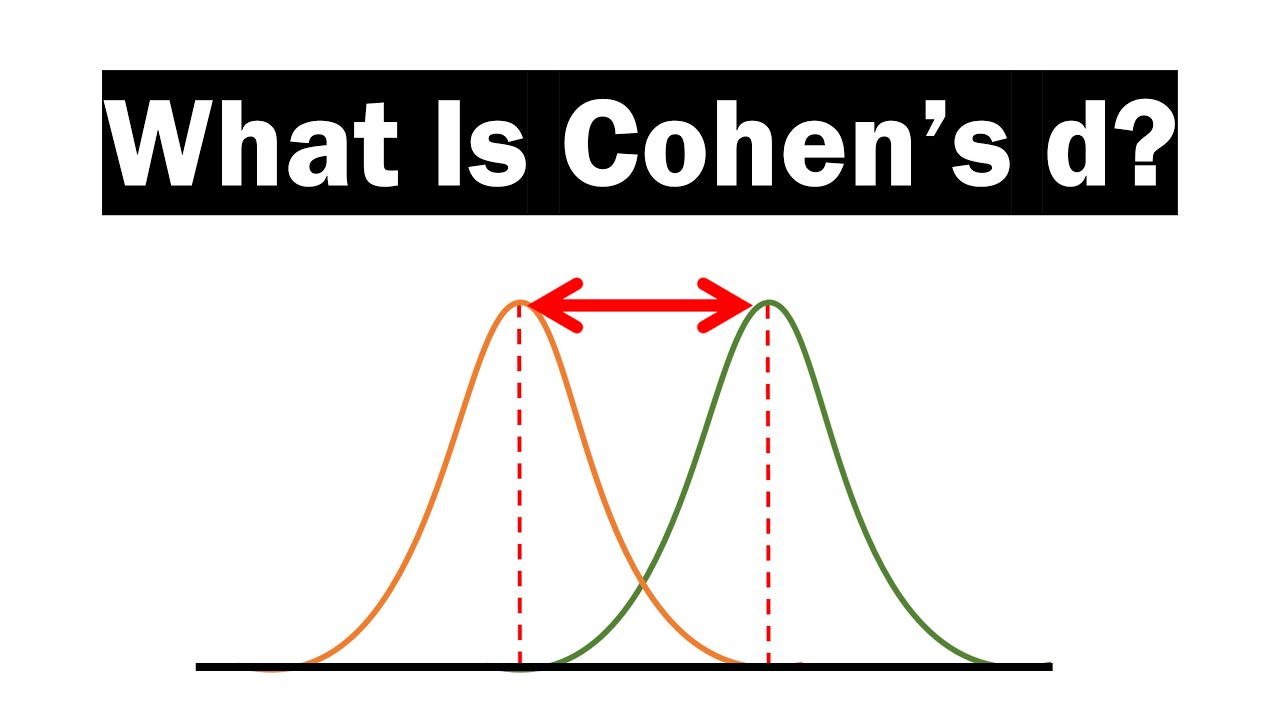

-Effect size provides a measure of the magnitude of the difference between groups in a study, which gives an idea of how meaningful the difference is. It is a quantifiable way to assess the practical significance of a result, whereas statistical significance only indicates if a result is unlikely to be due to chance.

What are some common measures of effect size used in statistical tests?

-Common measures of effect size include Cohen's d, Glass's Δ (delta), and eta squared. These measures are calculated differently depending on the statistical test used and involve comparing the difference between groups to the variability within the groups.
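A minimal sketch of the two other measures named here, using Python's standard library. Glass's Δ scales the mean difference by the control group's standard deviation alone, and eta squared (for a one-way ANOVA) is the share of the total sum of squares that lies between the groups. The function names and example data are illustrative assumptions.

```python
import statistics


def glass_delta(treatment: list[float], control: list[float]) -> float:
    """Glass's delta: mean difference divided by the control group's sample SD."""
    return (statistics.mean(treatment) - statistics.mean(control)) / statistics.stdev(control)


def eta_squared(*groups: list[float]) -> float:
    """Eta squared for a one-way ANOVA: SS_between / SS_total."""
    all_values = [x for g in groups for x in g]
    grand_mean = statistics.mean(all_values)
    # Between-group sum of squares: how far each group mean sits from the grand mean
    ss_between = sum(len(g) * (statistics.mean(g) - grand_mean) ** 2 for g in groups)
    # Total sum of squares: spread of every observation around the grand mean
    ss_total = sum((x - grand_mean) ** 2 for x in all_values)
    return ss_between / ss_total


print(round(glass_delta([5, 6, 7, 8, 9], [4, 5, 6, 7, 8]), 3))
print(eta_squared([1, 2, 3], [2, 3, 4], [3, 4, 5]))
```

In the second call the group means (2, 3, 4) account for 6 of the 12 units of total variation, so η² = 0.5.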

How is the practical significance of a result determined?

-Practical significance is partly a judgment call and interpretation of whether a result is meaningful in a real-world context. It can also be quantified through effect size measures, which provide numerical values that can be interpreted using published guidelines to assess the magnitude of the effect.

What is the general guideline for interpreting the size of Cohen's d in the context of a t-test?

-In the context of a t-test, a Cohen's d below 0.2 is considered negligible, 0.2 is considered small, 0.5 is medium, and 0.8 or larger is considered large.
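The guideline can be written as a small helper. This is a sketch under one assumption: the guideline names cut points rather than bands, so each value (0.2, 0.5, 0.8) is treated as the lower edge of its category. The sign of d is ignored because the labels describe magnitude only, and the cut points remain rules of thumb rather than fixed thresholds.

```python
def interpret_cohens_d(d: float) -> str:
    """Label |d| with the rule-of-thumb benchmarks 0.2 / 0.5 / 0.8 (hypothetical helper)."""
    size = abs(d)  # direction does not matter for the magnitude label
    if size < 0.2:
        return "negligible"
    if size < 0.5:
        return "small"
    if size < 0.8:
        return "medium"
    return "large"


for d in (0.05, 0.3, 0.63, -0.9):
    print(d, interpret_cohens_d(d))
```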

Why are both statistical significance and effect size important in scientific papers?

-Both statistical significance and effect size are important because together they give a more complete picture of the results. Statistical significance tells us whether a difference is unlikely to be due to chance, while effect size tells us how large, and therefore how meaningful, that difference is, which is crucial for determining the practical implications of the study.

Why might a statistically significant result not have practical significance?

-A statistically significant result might not have practical significance if the effect size is very small, indicating that the difference, while detectable, is not large enough to be meaningful or impactful in real-world applications.

What is the role of journals and reviewers in promoting the understanding of effect size?

-Journals and reviewers play a significant role in promoting the understanding of effect size by insisting that researchers include effect size measures in their papers. This helps to ensure that the practical significance of the results is communicated along with the statistical significance.

How does the example of a coin with a slight bias illustrate the concept of practical significance?

-The example of a coin with a slight bias illustrates that even if a coin is statistically biased, the practical significance of that bias may be negligible. In everyday situations, such as deciding who gets the last piece of pizza, a small bias might not matter and thus does not have practical significance.
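The coin example can be sketched numerically. The assumptions here are a hypothetical coin that lands heads 51% of the time, an observed heads rate exactly equal to that, and a normal-approximation z-test against a fair coin. The video does not name an effect size for proportions, so Cohen's h, the standard arcsine-based measure for comparing two proportions, is swapped in; Cohen proposed the same 0.2 / 0.5 / 0.8 benchmarks for h as for d.

```python
import math
from statistics import NormalDist


def cohens_h(p1: float, p2: float) -> float:
    """Cohen's h: difference between two proportions on the arcsine scale."""
    return 2 * math.asin(math.sqrt(p1)) - 2 * math.asin(math.sqrt(p2))


def coin_z(p_heads: float, flips: int) -> float:
    """z statistic for testing fairness, assuming the observed heads rate equals p_heads."""
    # Standard error of a proportion under the null hypothesis p = 0.5
    se = math.sqrt(0.5 * 0.5 / flips)
    return (p_heads - 0.5) / se


p_heads = 0.51  # hypothetical, slightly biased coin
print(f"Cohen's h = {cohens_h(p_heads, 0.5):.3f}")
for flips in (100, 10_000, 1_000_000):
    z = coin_z(p_heads, flips)
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    print(f"{flips:>9} flips: z = {z:5.1f}, two-sided p ≈ {p:.4f}")
```

With 10,000 flips the bias just reaches p < 0.05 (z = 2.0), and with a million flips it is overwhelming, yet h stays at about 0.02, far below the 0.2 "small" benchmark. In pizza terms, the coin would win you about one extra slice per hundred tosses.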

What is the commitment of the channel when it comes to calculating and reporting statistical tests?

-The channel commits to including effect size measures along with the calculation of statistical tests in their videos. This approach ensures that viewers are not only informed about the statistical significance but also understand the practical implications of the results.

Outlines

📊 Understanding Effect Size and Significance

This paragraph explains the concept of effect size and distinguishes between statistical and practical significance. Statistical significance indicates that a result is unlikely to be due to chance, and it is often represented by a p-value. However, it does not convey the magnitude of the difference or its importance. Practical significance, on the other hand, is about whether the result matters in a real-world context. Effect size measures, such as Cohen's d or eta squared, quantify the magnitude of the difference between groups relative to their variability. Common benchmarks for Cohen's d are provided: values below 0.2 are considered negligible, 0.2 small, 0.5 medium, and 0.8 or above large. The paragraph emphasizes the importance of reporting effect size in scientific papers, which has become standard practice as the scientific community recognizes its relevance.

🔄 Engaging with the Audience

The second paragraph serves as a call to action for the viewers, encouraging them to like, subscribe, and engage with the content by leaving comments. This helps grow the channel's audience and reach, allowing the creators to disseminate their educational message more widely. The paragraph uses a friendly and informal tone, reminding viewers of the fun aspect of interacting with digital media and supporting the channel's mission to inform and assist as many people as possible.

Keywords

💡Effect Size

💡Practical Significance

💡Statistical Significance

💡P-value

💡Bias

💡Cohen's d

💡Eta Squared

💡Variability

💡Guidelines

💡Scientific Papers

Highlights

Statistical significance indicates a result is unlikely to be due to chance, often represented by a p-value.

Statistical significance does not convey the size or importance of a difference.

With enough data, even very small differences can be statistically significant.

Practical significance is about whether a result matters in the real world, which is separate from statistical significance.

Effect size is a measure to quantify the magnitude of a difference and its practical significance.

Different statistical tests have their own measures of effect size, such as Cohen's d, Glass's Δ, or eta squared.

Effect size measures typically involve comparing the difference between groups to the variability within groups.

A larger effect size number indicates a greater magnitude of difference.

Effect sizes are often interpreted using published guidelines to determine small, medium, and large effects.

In a t-test, a Cohen's d below 0.2 is negligible, 0.2 is small, 0.5 is medium, and 0.8 or above is large.

Statistically significant results with a small effect size (<0.2) may not be practically significant.

Modern scientific papers often report both statistical significance and effect size measures.

The importance of effect size has grown among scientists, with many journals and reviewers requiring its inclusion.

P-values represent statistical significance but do not indicate the practical significance of a result.

Effect size measurements help estimate how meaningful a result is in practical terms.

The channel promises to include effect size measures in future statistical test calculations.

Upcoming videos will cover basic statistical tests practical for use in psychology.

The presenter encourages viewers to like, subscribe, and comment to support the channel's growth and outreach.
