Matrices to solve a system of equations | Matrices | Precalculus | Khan Academy

TLDR: The video script discusses the concept and application of matrices in solving systems of linear equations. It explains how matrices represent data and how the rules of matrix operations, though human-created, prove useful in various applications. The script revisits linear equations from Algebra, demonstrating how they can be represented as matrices and solved using matrix inversion. The process of finding the inverse of a matrix and using it to solve for the variables in a system of equations is detailed, highlighting the efficiency of this method, especially for larger systems or when the right-hand side vector changes frequently.

Takeaways

- 📊 A matrix is a way of representing data, and the rules for matrix operations are human-created but have been defined to be useful in applications.

- 🔍 The traditional method of solving linear equations in Algebra can be translated into the 'matrix world' for problem-solving.

- 📚 Linear equations can be viewed as finding the intersection point of lines represented by different equations.

- 🤝 In matrix form, a system of linear equations can be represented as Ax = b, where A is the matrix of coefficients, x is the column vector of variables, and b is the column vector of constants.

- 🔄 Matrix multiplication allows us to represent and solve systems of linear equations without the need for plus signs and equals signs.

- 🎭 Visualizing the matrix representation of linear equations can help build intuition for how these concepts map onto one another.

- 🔧 Gauss-Jordan elimination, a method learned in algebra, is essentially the same as solving systems of linear equations through matrix operations.

- 🔁 When dealing with multiple linear equations with the same coefficient matrix, finding the inverse of the matrix can simplify the process of finding solutions for different right-hand side vectors.

- 📈 For a 2x2 matrix, the inverse (A^-1) is calculated as 1/determinant(A) * adjugate(A), where the adjugate is obtained by swapping the two main-diagonal elements and changing the sign of the two off-diagonal elements. The inverse exists only when the determinant is nonzero.

- 🛠️ Once the inverse of a matrix is found, solving for x in the equation Ax = b becomes a matter of multiplying the inverse matrix by the right-hand side vector.

- 📌 The process of solving linear equations using matrices and their inverses can be more efficient with larger systems or when the same matrix is used repeatedly with different right-hand side vectors.

Q & A

What is the primary purpose of a matrix?

-The primary purpose of a matrix is to represent data in a structured way, allowing for efficient manipulation and solution of systems of linear equations.

How do the rules for matrix operations relate to their applications?

-While the rules for matrix operations are human-created, they have been defined in a way that proves to be quite useful in various applications, especially as we progress into solving real-world problems.

What is the significance of linear equations in the context of matrices?

-Linear equations are significant in the context of matrices because they can be represented as matrix equations, allowing for the use of matrix operations to solve systems of linear equations efficiently.

How does the matrix representation of a system of linear equations eliminate the need for plus signs and equals signs?

-In the matrix representation, the system of linear equations is written as a single matrix equation of the form Ax = b, where A is the matrix of coefficients, x is the column vector of variables, and b is the column vector of constants. The additions are implied by the row-times-column rule of matrix multiplication, and one equals sign covers every equation at once, so neither has to be written out for each equation.
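As a worked illustration of this form, consider a 2x2 system. The specific equations below are an assumption chosen for illustration; the summary does not state them, though they are consistent with the solution (x=1, y=2) listed in the Highlights.

$$
\begin{aligned}
3x + 2y &= 7 \\
-6x + 6y &= 6
\end{aligned}
\quad\Longleftrightarrow\quad
\underbrace{\begin{bmatrix} 3 & 2 \\ -6 & 6 \end{bmatrix}}_{A}
\underbrace{\begin{bmatrix} x \\ y \end{bmatrix}}_{\mathbf{x}}
=
\underbrace{\begin{bmatrix} 7 \\ 6 \end{bmatrix}}_{\mathbf{b}}
$$

Multiplying the first row of A by the column vector gives 3x + 2y, and the second row gives -6x + 6y, which reproduces the left-hand sides of the two equations.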

What is the role of the matrix 'A' in the context of solving a system of linear equations?

-In the context of solving a system of linear equations, 'A' represents the matrix of coefficients from the system. It is used to form the matrix equation Ax = b, and its inverse plays a crucial role in finding the solution to the system.

What is the general notation for a matrix versus a vector?

-In the general notation, a matrix is represented by an uppercase letter, while a vector, which is a one-dimensional array of numbers, is represented by a lowercase letter. Bold formatting is often used in textbooks to distinguish matrices and vectors from other variables.

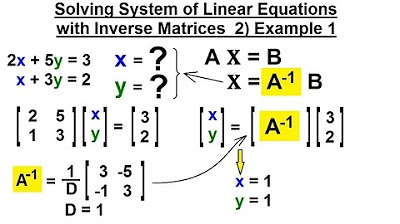

How does one find the inverse of a 2x2 matrix?

-To find the inverse of a 2x2 matrix, you first calculate the determinant of the matrix. Then, you swap the positions of the two elements in the main diagonal, change the signs of the off-diagonal elements, and finally, divide each element of the resulting matrix by the determinant to get the inverse.
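The steps above can be sketched in a few lines of Python. This is a minimal sketch, not the video's own code: the function name `inverse_2x2` and the example matrix are assumptions made for illustration, and exact fractions are used so the result is not blurred by floating-point rounding.

```python
from fractions import Fraction

def inverse_2x2(m):
    """Return the inverse of a 2x2 matrix given as [[a, b], [c, d]]."""
    (a, b), (c, d) = m
    # Step 1: the determinant is ad - bc.
    det = Fraction(a) * d - Fraction(b) * c
    if det == 0:
        raise ValueError("determinant is 0, so the matrix has no inverse")
    # Steps 2-4: swap the main-diagonal entries, negate the off-diagonal
    # entries, then divide every entry by the determinant.
    return [[d / det, -b / det],
            [-c / det, a / det]]

# Hypothetical example matrix (not taken from the summary).
A = [[3, 2], [-6, 6]]
A_inv = inverse_2x2(A)
print(A_inv)
```

For this example the determinant is 3*6 - 2*(-6) = 30, so the entries of the inverse are 6/30, -2/30, 6/30 and 3/30, reduced to lowest terms.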

What is the advantage of using matrix inversion to solve a system of linear equations?

-Using matrix inversion to solve a system of linear equations is advantageous when dealing with larger systems or when the right-hand side vector (b) changes frequently. Once the inverse of the matrix is calculated, solving for different right-hand side vectors becomes a matter of simple multiplication, which can save time and computational effort.
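A small sketch of this reuse pattern follows. The coefficient matrix, the right-hand side vectors, and the helper names `inverse_2x2` and `apply` are all hypothetical, chosen only to show that the inverse is computed once and each new b then costs a single matrix-vector multiplication.

```python
from fractions import Fraction

def inverse_2x2(m):
    """Inverse of [[a, b], [c, d]] via the determinant and adjugate."""
    (a, b), (c, d) = m
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("singular matrix: no inverse exists")
    return [[d / det, -b / det], [-c / det, a / det]]

def apply(m, v):
    """Multiply a 2x2 matrix by a length-2 column vector."""
    return [m[0][0] * v[0] + m[0][1] * v[1],
            m[1][0] * v[0] + m[1][1] * v[1]]

A = [[3, 2], [-6, 6]]        # hypothetical coefficient matrix
A_inv = inverse_2x2(A)       # computed once, reused below

# Hypothetical right-hand sides that share the same coefficient matrix.
right_hand_sides = [[7, 6], [5, 0], [0, 30]]
solutions = [apply(A_inv, b) for b in right_hand_sides]

for b, (x, y) in zip(right_hand_sides, solutions):
    print(f"b = {b}: x = {x}, y = {y}")
```

Each solution can be checked by substituting it back into the original equations; for b = [7, 6] the result is x = 1, y = 2.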

Why might finding the inverse of a matrix not be practical for small systems of linear equations?

-For small systems of linear equations, such as 2x2 systems, the process of finding the inverse and then multiplying by the right-hand side vector can be more cumbersome than traditional methods of solving the system, such as substitution or elimination. The overhead of calculating the inverse may not be worth the effort for such simple systems.

What is the matrix analogy to division?

-The matrix analogy to division is multiplication by the inverse of the matrix. Instead of dividing by a scalar as in traditional algebra, in matrix operations, you multiply both sides of an equation by the inverse of the matrix to isolate the variable vector.
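Written out as a short derivation, assuming A is square and has an inverse, the steps look like this:

$$
\begin{aligned}
A\mathbf{x} &= \mathbf{b} \\
A^{-1}A\mathbf{x} &= A^{-1}\mathbf{b} \\
I\mathbf{x} &= A^{-1}\mathbf{b} \\
\mathbf{x} &= A^{-1}\mathbf{b}
\end{aligned}
$$

Both sides are multiplied by the inverse on the left. Matrix multiplication is not commutative in general, so the side on which the inverse is applied matters.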

How does the visual representation of linear equations as lines help in understanding the matrix world?

-The visual representation of linear equations as lines helps in understanding the matrix world by providing an intuitive way to visualize the intersection point of the lines, which corresponds to the solution of the system of equations. This visual approach aids in mapping the concepts of linear equations to their matrix representations.

Outlines

📚 Introduction to Matrices and Their Applications

This paragraph introduces the concept of matrices and their practical applications beyond the learned rules of multiplication, addition, subtraction, and inversion. It emphasizes that matrices are essentially a way of representing data and that the defined operations on matrices prove quite useful in real-world applications. The discussion transitions into a review of linear equations and systems of linear equations, using the example of finding the intersection point of two lines represented by equations. The visual representation of these lines and their intersection point is used to draw an analogy to the matrix world, setting the stage for representing this problem using matrices in the subsequent paragraphs.

🔢 Matrix Representation of Linear Equations

This paragraph delves into the representation of the previously discussed linear equations as matrices. It explains how the coefficients of the equations can be arranged into a matrix and combined with column vectors to form a system of equations in matrix form. The process of matrix multiplication is used to demonstrate that the same results obtained from traditional algebraic methods can be achieved through matrix operations. The paragraph introduces the notation convention in which bolded lowercase letters represent vectors and capital letters represent matrices. It also presents the general form of a linear system as Ax = b and discusses the usefulness of knowing the inverse of a matrix for solving such systems efficiently.
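The claim that matrix multiplication reproduces the original equations can be checked with a short sketch. The system used here is an assumption for illustration (the outline does not state the equations), and `mat_vec` is a hypothetical helper name.

```python
def mat_vec(matrix, vector):
    """Multiply a matrix (list of rows) by a column vector (list of numbers)."""
    # Each output entry is one row of coefficients times the variables,
    # summed: exactly the left-hand side of one equation.
    return [sum(coef * value for coef, value in zip(row, vector))
            for row in matrix]

A = [[3, 2], [-6, 6]]   # coefficients of the hypothetical system
b = [7, 6]              # constants on the right-hand side
x = [1, 2]              # candidate solution: x = 1, y = 2

left_hand_sides = mat_vec(A, x)
print(left_hand_sides, left_hand_sides == b)
```

If the product equals b, the candidate vector satisfies every equation in the system at once.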

🧠 Solving Linear Equations Using Matrix Inversion

This paragraph explains the process of solving linear equations using matrix inversion. It describes how the inverse of a matrix can be used to simplify the solution of a system of linear equations, particularly when dealing with larger systems or multiple sets of equations with the same left-hand side. The concept of the identity matrix and its role in the solution process is introduced. The paragraph then provides a step-by-step guide on how to calculate the inverse of a 2x2 matrix, using the determinant and the adjugate (also called the classical adjoint) of the matrix. The method for solving for x and y using the inverse is outlined, with an example calculation that leads to the intersection point of the lines from the previous discussion.

🎓 Conclusion and Preview of Future Topics

In this final paragraph, the speaker concludes the discussion on matrix representation and solving linear equations, acknowledging that while the method may seem laborious for simple 2x2 systems, it becomes more advantageous for larger systems or when the right-hand side of the equation changes frequently. The speaker encourages the audience to practice the method as an exercise and teases the topic of the next video, where the same problem will be approached from a different perspective, hinting at further applications and insights into the power of matrices in data representation and problem-solving.

Keywords

💡Matrix

💡Linear Equations

💡Gauss-Jordan Elimination

💡Matrix Multiplication

💡Inverse Matrix

💡Determinant

💡Vector

💡Identity Matrix

💡Systems of Equations

💡Algebraic Concepts

💡Visual Representation

Highlights

The core concept of matrices as a representation of data is introduced.

Matrix operations are described as human-defined rules rather than fundamental laws of nature.

The usefulness of matrix operations is validated through their applications.

A connection is drawn between matrices and linear equations from Algebra 1 or 2.

The concept of systems of linear equations and their graphical representation is discussed.

The process of finding the intersection point of two lines is analogous to solving a system of equations.

Matrix representation of a system of linear equations is demonstrated using a 2x2 matrix.

The equivalence between matrix multiplication and the system of equations is established.

The Gauss-Jordan elimination method is related to solving systems of equations algebraically.

The general form of a linear equation system is presented as Ax = b, where A is the matrix of coefficients, x is the column vector of variables, and b is the column vector of constants.

The concept of matrix inversion is introduced as a tool for solving linear equations.

The method of multiplying both sides of a linear equation by the inverse of the matrix is explained.

The practical advantage of using matrix inversion is discussed, especially for larger systems or changing right-hand side values.

The process of finding the inverse of a 2x2 matrix is outlined, including the determinant and the adjugate matrix.

The solution to the given system of equations is calculated using matrix inversion, yielding the intersection point (x=1, y=2).

The efficiency of using matrix inversion for solving systems of equations is emphasized.

The video concludes with a teaser for the next video, where the same problem will be approached from a different perspective.
