Linear Regression, Clearly Explained!!!

TLDR

The video script introduces linear regression, a statistical method to model relationships between variables. It explains the process of fitting a line to data using least squares, calculating the R-squared value to assess the model's goodness of fit, and determining the p-value to evaluate the statistical significance of the relationship. The script uses the example of predicting mouse size based on weight to illustrate these concepts, emphasizing the importance of both R-squared and p-value in interpreting the results of a linear regression analysis.

Takeaways

- 🚀 Linear regression is a powerful statistical tool used to fit a line to data points and understand relationships.

- 📈 The least squares method is employed to minimize the sum of squared residuals (the vertical distances from the data points to the fitted line).

- 🔄 Calculating R-squared quantifies the proportion of variance in the dependent variable that's explained by the independent variable(s).

- 📊 A high R-squared value indicates a better fit of the model, meaning the independent variable(s) explain a larger portion of the variance.

- 🎯 The p-value is used to assess the statistical significance of the R-squared value, indicating whether the observed relationship could plausibly be due to random chance.

- 🔢 Fitting a line (or plane in more dimensions) involves estimating parameters such as the y-intercept and slope based on the data.

- 🔍 The concept of residuals is crucial in linear regression, representing the differences between observed and predicted values.

- 📊 Sum of squares (SS) is calculated for both the mean and the fit, providing insight into the variation explained by the model.

- 🔧 An adjusted R-squared is used for models with more parameters; it accounts for the number of parameters so that extra parameters that merely fit noise are not rewarded.

- 🎲 The p-value for R-squared is derived from the F-distribution, which considers the variation explained and unexplained by the model.

- 🔍 Understanding the relationship between degrees of freedom, sample size, and the fit parameters is essential for proper interpretation of regression results.

Q & A

What is the primary focus of the video?

-The primary focus of the video is to explain the concept of linear regression, also known as general linear models, and its key components such as least squares, R-squared, and p-value calculations.

How does least squares fit a line to data?

-Least squares fits a line to data by minimizing the sum of the squares of the residuals, which are the distances from the data points to the line. It involves rotating the line to find the position that results in the least sum of squared residuals.
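The fitting step described above can be sketched in a few lines of Python. This is a minimal illustration rather than the video's own procedure: instead of literally rotating the line, it uses the standard closed-form solution for a one-predictor least squares fit. The function names and the sample data are assumptions made up for this example.

```python
def fit_line(xs, ys):
    """Return (intercept, slope) of the least squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = co-variation of x and y divided by the variation of x.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    # The least squares line always passes through (mean_x, mean_y).
    intercept = mean_y - slope * mean_x
    return intercept, slope


def sum_squared_residuals(xs, ys, intercept, slope):
    """Sum of squared vertical distances from each point to the line."""
    return sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))


# Hypothetical mouse weights and sizes, invented for illustration only.
weights = [1.0, 2.0, 3.0, 4.0, 5.0]
sizes = [1.1, 1.4, 2.6, 3.0, 4.1]
intercept, slope = fit_line(weights, sizes)
print(intercept, slope, sum_squared_residuals(weights, sizes, intercept, slope))
```

Nudging the slope or intercept away from the fitted values and recomputing `sum_squared_residuals` gives a larger sum, which is the property the "rotate the line" picture is meant to convey.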

What is R-squared and how is it calculated?

-R-squared is a measure of how well the fitted line or model explains the variability of the data. It is calculated as 1 minus the ratio of the variance around the fit to the variance around the mean. A higher R-squared value indicates a better fit of the model to the data.
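As a rough sketch of that calculation, the function below computes R-squared from observed values and the values predicted by a fitted line. The function name and the numbers are hypothetical; the variances are taken as sums of squares divided by the sample size, following the definition used in this summary.

```python
def r_squared(observed, predicted):
    """R-squared = 1 - Var(fit) / Var(mean)."""
    n = len(observed)
    mean_y = sum(observed) / n
    # Variation around the mean: squared distances from each value to the mean.
    ss_mean = sum((y - mean_y) ** 2 for y in observed)
    # Variation around the fit: squared residuals from the fitted line.
    ss_fit = sum((y - p) ** 2 for y, p in zip(observed, predicted))
    var_mean = ss_mean / n
    var_fit = ss_fit / n
    return 1 - var_fit / var_mean


# Hypothetical observed sizes and predictions from some fitted line.
observed = [1.1, 1.4, 2.6, 3.0, 4.1]
predicted = [1.0, 1.7, 2.4, 3.2, 3.9]
print(r_squared(observed, predicted))
```

Because both variances are divided by the same sample size, the result is the same as computing `1 - ss_fit / ss_mean` directly.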

What is the significance of calculating a p-value for R-squared?

-Calculating a p-value for R-squared helps to determine if the observed relationship between variables is statistically significant or if it could be due to random chance. A low p-value suggests that the relationship is statistically significant.

How does the video illustrate the concept of residuals?

-The video illustrates the concept of residuals by showing the distances from individual data points to the fitted line. It explains that residuals are the differences between the observed values and the values predicted by the model.

What is the role of the F distribution in calculating p-values for R-squared?

-The F statistic is the variation explained by the fit divided by the variation left unexplained, each scaled by its degrees of freedom. The F distribution approximates how this statistic behaves for random data with no real relationship; the p-value is the probability, under that distribution, of an F value at least as large as the one observed.
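A minimal sketch of the F statistic follows, assuming the usual form: explained variation per extra parameter, divided by unexplained variation per remaining degree of freedom. The parameter names (`p_fit`, `p_mean`) and the example numbers are chosen here for illustration.

```python
def f_statistic(ss_mean, ss_fit, n, p_fit, p_mean=1):
    """F = [(SS(mean) - SS(fit)) / (p_fit - p_mean)] / [SS(fit) / (n - p_fit)].

    p_fit  : number of parameters in the fitted model (2 for a line:
             intercept and slope)
    p_mean : number of parameters in the mean-only model (just the mean)
    """
    # Variation explained by the extra parameters, per extra parameter.
    explained = (ss_mean - ss_fit) / (p_fit - p_mean)
    # Variation left unexplained, per remaining degree of freedom.
    unexplained = ss_fit / (n - p_fit)
    return explained / unexplained


# Hypothetical sums of squares for 12 data points and a straight-line fit.
print(f_statistic(ss_mean=100.0, ss_fit=40.0, n=12, p_fit=2))  # 15.0
```

Turning the F value into a p-value requires the upper tail of an F distribution with `p_fit - p_mean` and `n - p_fit` degrees of freedom, which a statistics library can supply.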

How does the number of parameters in a model affect the calculation of R-squared and p-value?

-The number of parameters in a model affects both R-squared and the p-value. More parameters can explain more of the variance in the data, so R-squared tends to rise even when the extra parameters capture only noise. The F statistic behind the p-value accounts for this through its degrees of freedom: the explained variation is divided by the number of extra parameters in the fit, and the unexplained variation by the sample size minus the number of fit parameters. This guards against a model looking significant simply because it has many parameters.

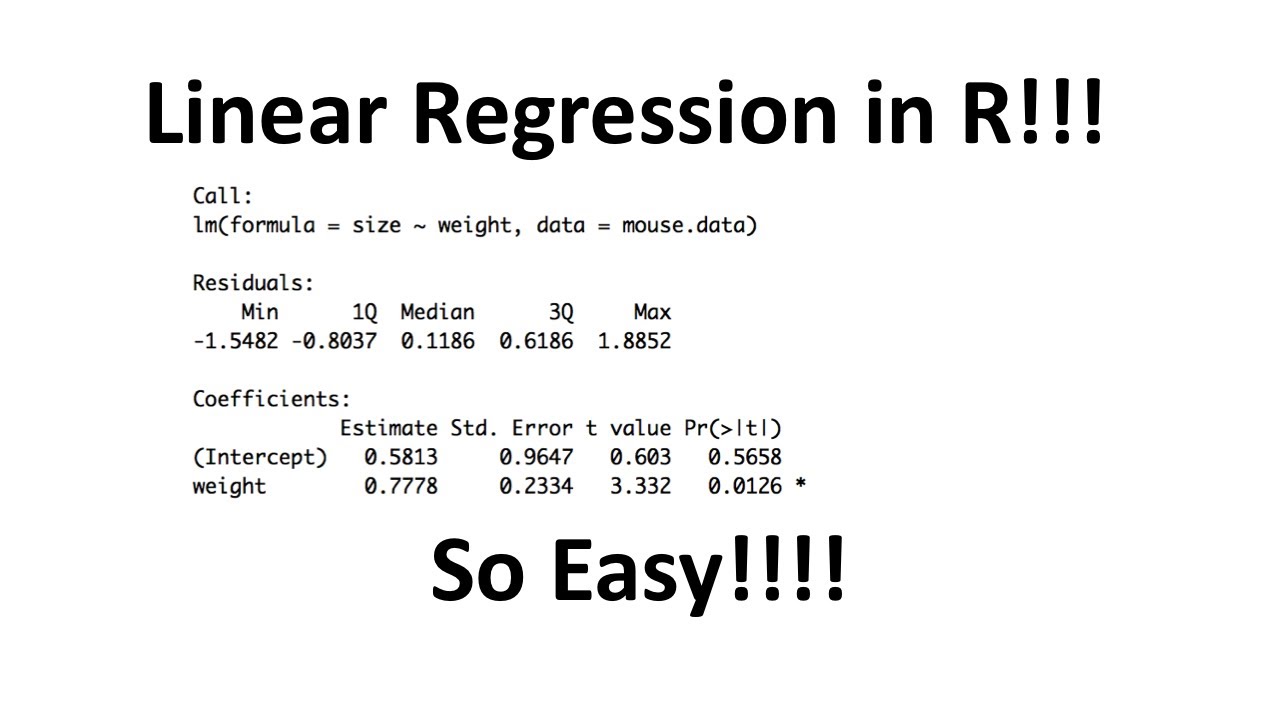

What is an example of a simple linear model discussed in the video?

-An example of a simple linear model discussed in the video is predicting mouse size based on mouse weight. The model is represented as Y = 0.1 + 0.78 * X, where Y is the mouse size and X is the mouse weight.

How does the video explain the concept of variance in the context of linear regression?

-The video explains variance as the sum of the squares of the residuals (differences between the observed values and the predicted values) divided by the sample size. It distinguishes between variance around the mean and variance around the fit, using these to calculate R-squared and the F statistic for determining significance.

What is the importance of understanding degrees of freedom in linear regression?

-Understanding degrees of freedom is important in linear regression because it relates to the number of independent observations that can vary freely in the data set. It is used to adjust the sums of squares into variances, which are then used in the calculation of R-squared and the F statistic for determining statistical significance.

What is the adjusted R-squared and why is it used?

-The adjusted R-squared is a modified version of R-squared that takes into account the number of parameters in the model. It is used because adding more parameters to a model never decreases R-squared, even when the new parameters do not improve its predictive power. The adjusted R-squared compensates by scaling R-squared by the number of parameters, giving a fairer measure of the model's explanatory power.
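The summary does not give a formula for the adjustment. One common textbook definition, assumed here and not necessarily the exact one used in the video, is 1 - (1 - R²)(n - 1)/(n - k - 1), where n is the sample size and k is the number of predictors (excluding the intercept). A small sketch with hypothetical inputs:

```python
def adjusted_r_squared(r2, n, num_predictors):
    """Adjusted R-squared under the common textbook definition (an assumption).

    r2             : ordinary R-squared of the fitted model
    n              : number of observations
    num_predictors : number of predictors, not counting the intercept
    """
    return 1 - (1 - r2) * (n - 1) / (n - num_predictors - 1)


# Same R-squared and sample size, but more predictors gives a lower adjusted value.
print(adjusted_r_squared(0.6, 10, 1))
print(adjusted_r_squared(0.6, 10, 3))
```

With the raw R-squared held fixed, each extra predictor lowers the adjusted value, so a new parameter has to explain enough additional variation to pay for itself.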

Outlines

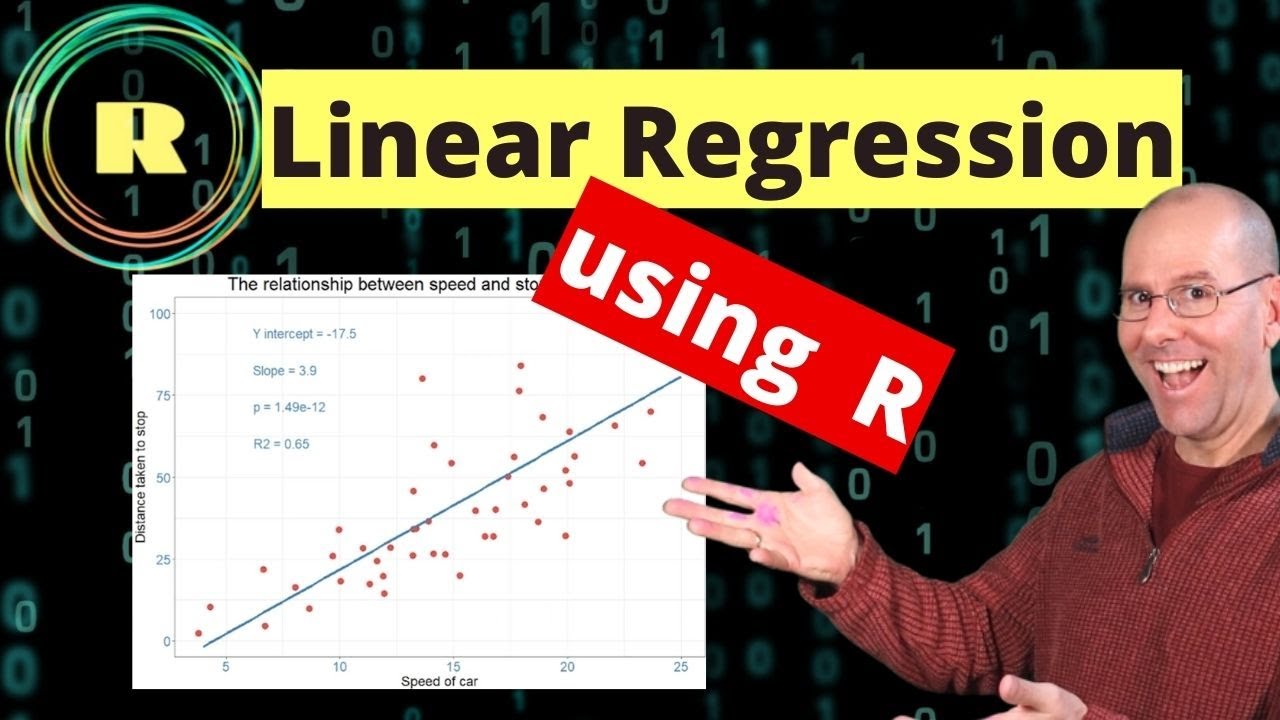

🚢 Introduction to Linear Regression

The video begins with an introduction to linear regression, also known as general linear models. The speaker explains that linear regression involves using least squares to fit a line to data points, calculating the R-squared value, and determining a p-value for the R-squared. The main concepts are discussed using the example of predicting mouse size based on weight, emphasizing the importance of understanding the details behind linear regression.

📊 Understanding Residuals and Variance

This paragraph delves into the terminology and concepts of residuals and variance in the context of linear regression. The speaker explains how to calculate the sum of squares around the mean (SS_mean) and the sum of squares around the least squares fit (SS_fit), and how these relate to the variation in the data. The R-squared value is further clarified as a measure of how much variation in the dependent variable (mouse size) can be explained by the independent variable (mouse weight).

📈 R-Squared and Its Interpretation

The speaker continues to discuss R-squared, providing examples to illustrate its interpretation. It is explained that R-squared can range from 0 to 1, with higher values indicating a better fit of the model to the data. The paragraph highlights how R-squared can be used to determine the proportion of variance explained by the model and how it applies to more complex equations beyond simple linear relationships.

🔢 Fitting a Plane in Three-Dimensional Space

This section introduces a more complex example of fitting a plane in three-dimensional space, using mouse weight and tail length to predict body length. The speaker explains the process of least squares fitting in a 3D context and how to interpret the resulting equation. The concept of R-squared is extended to this scenario, emphasizing that adding parameters to a model never makes the least squares fit worse: if an extra parameter does not help, least squares can set its coefficient to zero, so the sum of squares around the fit cannot increase.
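Fitting a plane works the same way as fitting a line, just with one more coefficient. The sketch below solves the least squares normal equations with a small Gaussian elimination routine so that it needs no external libraries. The helper names, the data, and the coefficients used to generate it are all hypothetical.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Augmented matrix so that row operations apply to both sides.
    m = [row[:] + [b_i] for row, b_i in zip(a, b)]
    for col in range(n):
        # Swap in the row with the largest pivot for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution from the last row upward.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        known = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - known) / m[r][r]
    return x


def fit_plane(x1s, x2s, ys):
    """Return [intercept, coef_x1, coef_x2] minimizing the squared residuals."""
    rows = [[1.0, x1, x2] for x1, x2 in zip(x1s, x2s)]
    # Normal equations: (X^T X) beta = X^T y.
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(3)]
    return solve_linear_system(xtx, xty)


# Hypothetical weights, tail lengths, and body lengths lying on a known plane.
mouse_weights = [1.0, 2.0, 3.0, 4.0, 5.0]
tail_lengths = [2.0, 1.0, 4.0, 3.0, 6.0]
body_lengths = [0.1 + 0.7 * w + 0.5 * t for w, t in zip(mouse_weights, tail_lengths)]
print(fit_plane(mouse_weights, tail_lengths, body_lengths))
```

If tail length carried no information about body length, the fitted coefficient for it would come out near zero and the plane would reduce to the simpler line fit.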

🎯 Adjusted R-Squared and Statistical Significance

The paragraph discusses the limitations of R-squared when more parameters are added to a model and introduces adjusted R-squared as a way to account for this. The speaker also explains the need for a p-value to determine the statistical significance of the R-squared value. The process of calculating the F-statistic and using it to find the p-value is outlined, emphasizing the importance of ensuring that the relationship found in the data is not due to random chance.

🎓 Summary of Linear Regression Concepts

In the concluding paragraph, the speaker summarizes the main ideas behind linear regression, R-squared, and the significance of the p-value. The importance of both a large R-squared value and a small p-value for establishing an interesting and reliable result is reiterated. The speaker encourages viewers to subscribe for more content and invites suggestions for future videos.

Keywords

💡Linear Regression

💡Least Squares

💡R-Squared

💡P-Value

💡Residuals

💡Variance

💡Fitting a Line to Data

💡General Linear Models

💡Sum of Squares

💡Degrees of Freedom

💡Statistical Significance

Highlights

Linear regression, also known as General Linear Models, is introduced as a powerful concept in statistics.

The least squares method is used to fit a line to the data, which is the first step in linear regression.

The concept of 'residuals' is introduced as the distance from the line to the data point.

The process of rotating the line to minimize the sum of squared residuals is explained, leading to the best fit line.

The equation for the line estimated by least squares, including the y-axis intercept and slope, is discussed.

R-squared is introduced as a measure to determine how good the guess (prediction) is based on the line fit.

The formula for calculating R-squared is provided: R-squared = (Var(mean) - Var(fit)) / Var(mean), that is, the variation around the mean minus the variation around the fit, all divided by the variation around the mean.

An example is given where 60% of the variation in mouse size can be explained by mouse weight, showing the practical application of R-squared.

The concept of 'sum of squares' is used to calculate R-squared, relating to the variation around the mean and around the fitted line.

The importance of the slope not being zero in indicating a relationship between variables is highlighted.

The video introduces the concept of adjusting R-squared for the number of parameters in the model to prevent overfitting.

The calculation of p-value for R-squared is explained, providing a measure of statistical significance.

The F distribution is used to approximate the p-value, with the degrees of freedom playing a crucial role in its shape.

The process of generating random data sets to calculate the p-value is outlined, emphasizing the role of random chance.
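The random-data idea can be sketched as a small simulation. This is a simplified illustration under stated assumptions: it draws unrelated normally distributed x and y values, and it compares R-squared values rather than F values. For a one-predictor fit with a fixed sample size, F increases whenever R-squared does, so the comparison gives the same answer. All names and default settings are hypothetical.

```python
import random


def r_squared_simple(xs, ys):
    """R-squared of a one-predictor least squares fit (the squared correlation)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return (sxy * sxy) / (sxx * syy)


def simulated_p_value(observed_r2, n, num_sims=2000, seed=0):
    """Fraction of random, unrelated data sets that fit at least as well."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    at_least_as_large = 0
    for _ in range(num_sims):
        # x and y are drawn independently, so any apparent fit is pure chance.
        xs = [rng.gauss(0.0, 1.0) for _ in range(n)]
        ys = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if r_squared_simple(xs, ys) >= observed_r2:
            at_least_as_large += 1
    return at_least_as_large / num_sims


# Hypothetical case: an observed R-squared of 0.6 from 9 data points.
print(simulated_p_value(0.6, n=9))
```

In practice the F distribution replaces this simulation, since it approximates the histogram that the random data sets would produce without having to generate them.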

The importance of both a large R-squared value and a small p-value in determining an interesting and reliable result is stressed.

The transcript provides a comprehensive overview of linear regression, its methodology, and its application in understanding data relationships.
