What are Generative AI models?

TLDR

Kate Soule of IBM Research discusses the emergence of large language models (LLMs) like ChatGPT, which are trained on massive amounts of data to generate text. She explains how LLMs transfer what they have learned to multiple tasks through tuning and prompting, which brings performance gains, but notes disadvantages such as high compute costs and trust issues. IBM is working to improve the efficiency and reliability of LLMs for business uses across domains like language, vision, code, chemistry, and climate change.

Takeaways

- 😲Large language models like ChatGPT are showing dramatic improvements in AI capabilities

- 📈Foundation models represent a new paradigm in AI where one model can perform many different tasks

- 🧠Foundation models are trained on huge amounts of unstructured data to develop strong generative capabilities

- 👍Key advantages of foundation models are high performance and productivity gains

- 💰High compute cost and trust issues are current disadvantages of foundation models

- 🔬IBM is researching ways to make foundation models more efficient, trustworthy and business-ready

- 🖼Foundation models are being developed for vision, code, chemistry and other domains beyond language

- 🤖IBM is productizing foundation models into offerings like Watson Assistant and Maximo Visual Inspection

- 🌍IBM is building foundation models for climate change research and other domains

- 📚There are links below to learn more about IBM's work on improving foundation models

Q & A

What are large language models (LLMs) and how are they impacting various fields?

-Large language models (LLMs), like ChatGPT, are AI systems that have demonstrated significant capabilities in tasks such as writing poetry or helping to plan vacations. Their emergence represents a step change in AI performance and in its potential to drive enterprise value across different sectors.

Who introduced the term 'foundation models' and what was the reasoning behind it?

-The term 'foundation models' was first coined by a team from Stanford, recognizing a shift in AI towards a new paradigm where a single model could support multiple applications and tasks, moving away from the traditional approach of using different models for different specific tasks.

What distinguishes foundation models from other AI models?

-Foundation models are distinguished by their ability to be applied to a wide range of tasks without needing to be retrained from scratch for each new task. This versatility is due to their training on massive amounts of data in an unsupervised manner, allowing them to generate or predict outcomes based on the input they receive.

How do foundation models gain their generative capabilities?

-Foundation models gain their generative capabilities through training on vast amounts of unstructured data in an unsupervised manner. This training enables them to predict or generate the next word in a sentence, for example, based on the context provided by the preceding words.
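
The next-word objective described above can be illustrated with a deliberately tiny sketch. It assumes a bigram counter in place of a neural network, and the corpus string and function names are invented for illustration; real foundation models learn far richer context than the single preceding word used here.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it in the raw text.

    No labels are needed: the "label" for each word is simply the next word.
    """
    words = text.lower().split()
    following = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        following[current_word][next_word] += 1
    return following


def predict_next_word(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical unlabeled corpus, invented for this example.
corpus = (
    "no use crying over spilled milk . "
    "no use crying over spilled milk . "
    "the cat drank the milk"
)
model = train_bigram_model(corpus)
print(predict_next_word(model, "spilled"))
```

The point of the sketch is only that the training signal comes from the text itself, which is why this style of training scales to very large unlabeled datasets.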

What is 'tuning' in the context of foundation models?

-Tuning refers to the process of adapting a foundation model to perform specific tasks by introducing a small amount of labeled data. This process updates the model's parameters to specialize it for tasks such as classification or named-entity recognition.
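
As a rough sketch of the idea, the snippet below starts from a set of "pre-trained" parameters and updates them with a handful of labeled examples. It assumes a two-weight logistic classifier as a stand-in for a foundation model; the starting weights, the data, and the function names are all made up for illustration and do not reflect how any particular model is tuned in practice.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, features):
    """Probability that `features` belongs to class 1."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


def tune(weights, bias, labeled_data, learning_rate=0.5, epochs=200):
    """Update existing parameters with gradient steps on a small labeled set."""
    weights = list(weights)  # copy so the original parameters are left untouched
    for _ in range(epochs):
        for features, label in labeled_data:
            error = predict(weights, bias, features) - label
            weights = [w - learning_rate * error * x for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


# Hypothetical starting parameters standing in for a pre-trained model.
pretrained_weights = [0.1, -0.2]
pretrained_bias = 0.0

# A small amount of labeled data for the downstream classification task.
labeled_data = [
    ([1.0, 0.0], 1),
    ([0.9, 0.1], 1),
    ([0.0, 1.0], 0),
    ([0.1, 0.9], 0),
]

tuned_weights, tuned_bias = tune(pretrained_weights, pretrained_bias, labeled_data)
print(predict(tuned_weights, tuned_bias, [1.0, 0.0]))
print(predict(tuned_weights, tuned_bias, [0.0, 1.0]))
```

The structure mirrors the description above: the parameters are not trained from scratch, only adjusted using a few labeled examples.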

How do foundation models perform in domains with low amounts of labeled data?

-Foundation models perform well in domains with little labeled data. Because they are pre-trained on large, diverse datasets, techniques such as prompting (prompt engineering) can apply them to tasks that traditionally required substantial labeled datasets.
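
One common form of prompting is a few-shot prompt, where a couple of worked examples are placed in front of the new input. The sketch below only builds the prompt text; the sentiment task, the example sentences, and the `build_few_shot_prompt` helper are assumptions made for illustration, and sending the prompt to a model is left out because that depends on the service being used.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble an instruction, a few labeled examples, and an unlabeled query."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Sentence: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The final entry is left unlabeled so the model completes it.
    lines.append(f"Sentence: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


# Hypothetical examples, invented for this sketch.
examples = [
    ("The support team resolved my issue quickly.", "positive"),
    ("The app crashes every time I open it.", "negative"),
]
prompt = build_few_shot_prompt(
    "Classify the sentiment of each sentence as positive or negative.",
    examples,
    "The new dashboard is easy to use.",
)
print(prompt)
```

No model parameters change here: the task is specified entirely through the text the model is asked to continue.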

What are the primary advantages of foundation models?

-The primary advantages of foundation models include their high performance on a wide range of tasks due to extensive pre-training on large datasets, and productivity gains from needing less labeled data for task-specific applications, leveraging their pre-trained knowledge.

What are the main disadvantages of foundation models?

-The main disadvantages include high compute costs for training and running inference, making them less accessible for smaller enterprises, and issues related to trustworthiness and bias due to the vast, unvetted datasets they are trained on.

How is IBM addressing the disadvantages of foundation models?

-IBM Research is working on innovations to improve the efficiency, trustworthiness, and reliability of foundation models, aiming to make them more suitable and relevant for business applications by addressing issues such as computational costs and data bias.

What domains beyond language are foundation models being applied to?

-Foundation models are being applied to various domains beyond language, including vision with models like DALL-E 2 for image generation, code completion with tools like Copilot, molecule discovery, and climate research, showcasing their versatility across fields.

Outlines

😊 Overview of large language models and foundation models

Paragraph 1 introduces large language models (LLMs) like ChatGPT, which have recently taken the world by storm. It explains that LLMs are a type of foundation model, a new paradigm in AI in which a single model can be transferred to many different tasks. Foundation models are trained on huge unlabeled datasets to perform generative prediction tasks, such as predicting the next word in a sentence. This allows them to be tuned or prompted to perform well on downstream NLP tasks using very little labeled data.

😀 Advantages and disadvantages of foundation models

Paragraph 2 discusses key advantages and disadvantages of foundation models. Advantages include superior performance due to seeing abundant data, and productivity gains from needing less labeled data to complete tasks. Disadvantages are high compute costs for training and inference, and trust issues from training on unlabeled web data that may contain toxic content.

Keywords

💡large language models (LLMs)

💡foundation models

💡generative AI

💡tuning

💡prompting

💡trustworthiness

💡multimodal

💡climate modeling

💡enterprise applications

💡regulated industries

Highlights

The speaker discusses using computational methods to analyze complex biological systems.

Machine learning techniques like neural networks can help predict protein structures and interactions.

Mapping out cell signaling pathways is key to understanding disease mechanisms and drug targets.

Integrating diverse datasets from genomics, proteomics, metabolomics provides a systems view of biology.

The speaker explains how modeling gene regulatory networks reveals emergent properties.

Insights into robustness, modularity and feedback controls in biological systems.

Synthetic biology approaches allow rational re-design of biological systems.

Microfluidics and organ-on-a-chip technology enables cell biology studies in controlled environments.

Single cell analysis provides unprecedented resolution into cell heterogeneity.

The speaker emphasizes the need for interdisciplinary collaboration between biologists and mathematicians.

Physics-based modeling provides insight into morphogenesis and developmental processes.

Success stories in systems biology include modeling oscillations in circadian rhythms.

Challenges remain in modeling complex phenomena like spatial patterning and stochastic dynamics.

The potential for systems biology to advance biomedicine and biotechnology is discussed.

Overall, the talk highlights the power of computational approaches in modern biology.

Transcripts

Browse More Related Video

How Chatbots and Large Language Models Work

Exploring foundation models - Session 1

You CAN use ChatGPT for genealogy (with accuracy)! Here's how

What is generative AI and how does it work? – The Turing Lectures with Mirella Lapata

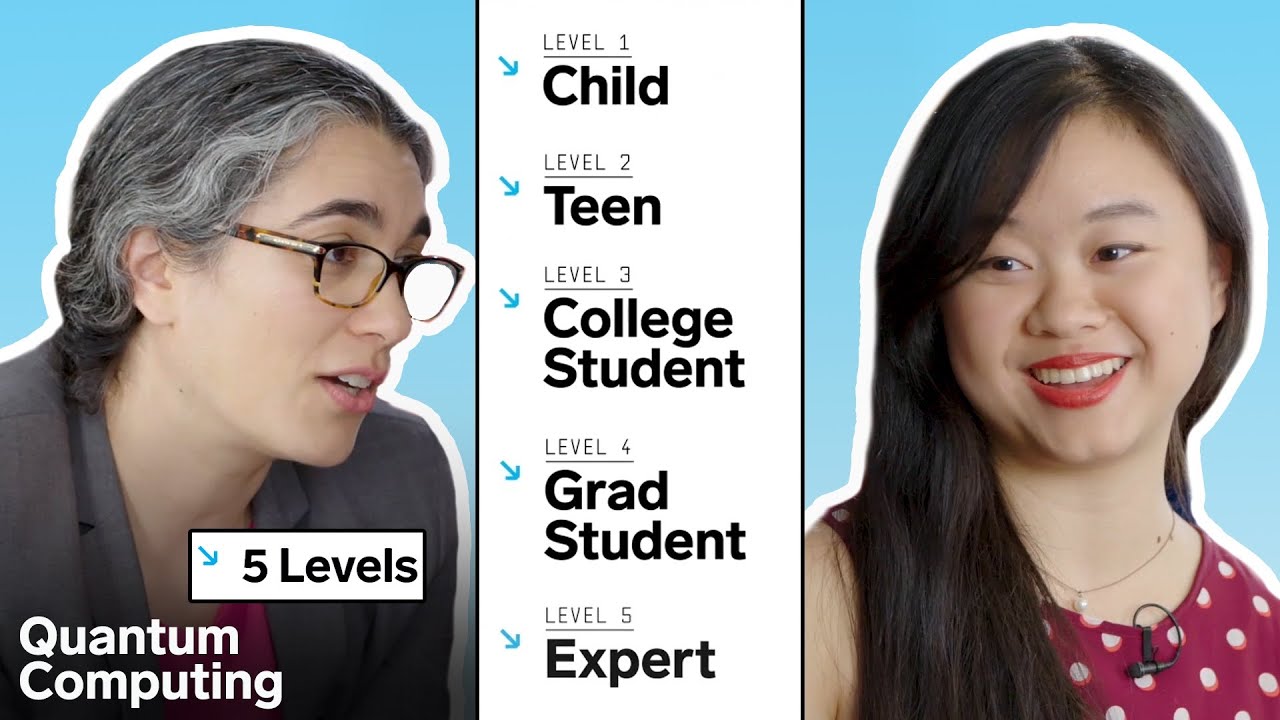

Quantum Computing Expert Explains One Concept in 5 Levels of Difficulty | WIRED

Environmental Econ: Crash Course Economics #22

5.0 / 5 (0 votes)

Thanks for rating: