But what is a neural network? | Chapter 1, Deep learning

TLDR

This video explains what a neural network is at its core, using the example of building one that can recognize handwritten digits. It visualizes the network as layers of artificial neurons, each holding a number called its activation. Each connection between neurons carries a weight and each neuron has a bias; these are knobs that can be tweaked so the network can solve problems. It presents a neural network as just a function, albeit a very complex one with thousands of parameters that can potentially pick up on visual patterns. The next video details how such a network learns appropriate weights and biases by looking at data.

Takeaways

- 😲 Our brains can easily recognize handwritten digits even when they are sloppily written or rendered at very low resolution.

- 😎 The goal is to create a neural network that can learn to recognize handwritten digits given input images.

- 👀 A neural network has layers of "neurons", each holding a number called its activation. Neurons in adjacent layers are connected and influence each other.

- 💡 Each connection has a weight and each neuron has a bias. These are the key parameters that can be tweaked so the network can learn.

- 🧠 The structure of the network is inspired by brains and has an input layer, hidden layers, and an output layer.

- 🔢 The hope is that the hidden layers can pick up on subcomponents like lines and loops to eventually recognize digits.

- 🤯 There are around 13,000 weights and biases in total, which would be crazy to set by hand.

- 📈 The activations of one layer depend on and determine the activations of the next layer.

- 🎛 The network as a whole is just a complex function that transforms input image data into output digits.

- 🧮 The network learns appropriate weights and biases by looking at training data.
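The "around 13,000" figure can be checked with a little arithmetic. The sketch below assumes the layer sizes used in the video, which this summary does not state: 28 × 28 = 784 input pixels, two hidden layers of 16 neurons, and 10 outputs. Each pair of adjacent layers contributes one weight per connection, and each non-input neuron contributes one bias. The function name `count_parameters` is hypothetical.

```python
def count_parameters(layer_sizes):
    """Return (weights, biases) for a fully connected layered network.

    Weights: one per connection between every pair of adjacent layers.
    Biases: one per neuron outside the input layer.
    """
    weights = sum(n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))
    biases = sum(layer_sizes[1:])
    return weights, biases

# Assumed layout: 784 inputs, two hidden layers of 16, 10 outputs.
sizes = [28 * 28, 16, 16, 10]
weights, biases = count_parameters(sizes)
print(weights, biases, weights + biases)  # 12960 42 13002
```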

Q & A

What is the main goal of the neural network described in the video?

-The goal is to create a neural network that can learn to recognize handwritten digits.

What does the term 'activation' refer to in the context of neurons?

-The activation refers to the number between 0 and 1 that is held inside each neuron. It represents things like the grayscale value of a pixel or the network's confidence that an image depicts a certain digit.

What are weights and biases in a neural network?

-Weights are the numbers assigned to the connections between neurons in adjacent layers. Each neuron also has a bias, an extra number added to its weighted sum before the sigmoid function is applied. The bias controls how large the weighted sum must be before the neuron becomes meaningfully active.
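Here is a minimal sketch of what a single neuron computes before any squashing. It multiplies each incoming activation by the weight on its connection, adds the products, and then adds the neuron's bias. The weights, activations, and bias below are made-up numbers for illustration, and the function name `weighted_sum` is hypothetical.

```python
def weighted_sum(weights, activations, bias):
    """Pre-activation of one neuron: sum of weight * activation, plus the bias."""
    if len(weights) != len(activations):
        raise ValueError("need exactly one weight per incoming activation")
    return sum(w * a for w, a in zip(weights, activations)) + bias

# Hypothetical neuron with three incoming connections.
weights = [2.0, -1.0, 0.5]
activations = [1.0, 0.0, 0.5]
print(weighted_sum(weights, activations, bias=-1.0))  # 2.0 + 0.0 + 0.25 - 1.0 = 1.25
```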

What is the purpose of the sigmoid function?

-The sigmoid function squashes the weighted sum (plus bias) into the range between 0 and 1. The result is the activation of a neuron in the next layer.
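The sigmoid is the logistic function σ(x) = 1 / (1 + e^(-x)). The sketch below evaluates it at a few points. It then shows the bias at work: with a hypothetical bias of -10, the neuron only becomes meaningfully active once its weighted sum is well above 10. The two-branch form is a choice made here to avoid overflow in `math.exp` for large negative inputs.

```python
import math

def sigmoid(x):
    """Logistic sigmoid 1 / (1 + e^-x), squashing any real number into (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # for negative x, use the equivalent form e^x / (1 + e^x)
    return z / (1.0 + z)

# Very negative inputs land near 0, very positive ones near 1, and 0 maps to 0.5.
for z in (-10.0, -1.0, 0.0, 1.0, 10.0):
    print(f"sigmoid({z:+.0f}) = {sigmoid(z):.4f}")

# Hypothetical bias of -10: the neuron is only meaningfully active once its
# weighted sum is well above 10.
bias = -10.0
for weighted in (5.0, 10.0, 15.0):
    print(f"weighted sum {weighted:4.1f} -> activation {sigmoid(weighted + bias):.4f}")
```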

What does it mean for a neural network to 'learn'?

-When a neural network learns, it is finding appropriate values for all of its weights and biases so that it can accurately map input images to output categories.

What advantage does ReLU have over sigmoid, according to the video?

-The video mentions that networks using ReLU (rectified linear unit) are much easier to train than those using sigmoid activation functions.
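For comparison, ReLU is simply max(0, x). The sketch below puts it next to the sigmoid and prints each function's slope. It illustrates one property only: the sigmoid flattens out for large inputs, while ReLU keeps a slope of 1 for any positive input. This is not offered as the video's explanation of why ReLU trains more easily, and all helper names are hypothetical.

```python
import math

def sigmoid(x):
    """Logistic sigmoid, written to avoid overflow for large negative x."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def sigmoid_slope(x):
    """Derivative of the sigmoid, sigma(x) * (1 - sigma(x)); it never exceeds 0.25."""
    s = sigmoid(x)
    return s * (1.0 - s)

def relu(x):
    """Rectified linear unit: 0 for negative inputs, x itself otherwise."""
    return max(0.0, x)

def relu_slope(x):
    """Slope of ReLU: 0 for negative inputs, 1 for positive ones (0 chosen at x = 0)."""
    return 1.0 if x > 0 else 0.0

for x in (-5.0, -1.0, 0.0, 1.0, 5.0, 10.0):
    print(f"x={x:+5.1f}  sigmoid={sigmoid(x):.4f} (slope {sigmoid_slope(x):.4f})"
          f"  relu={relu(x):.1f} (slope {relu_slope(x):.0f})")
```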

What is the layered structure meant to achieve?

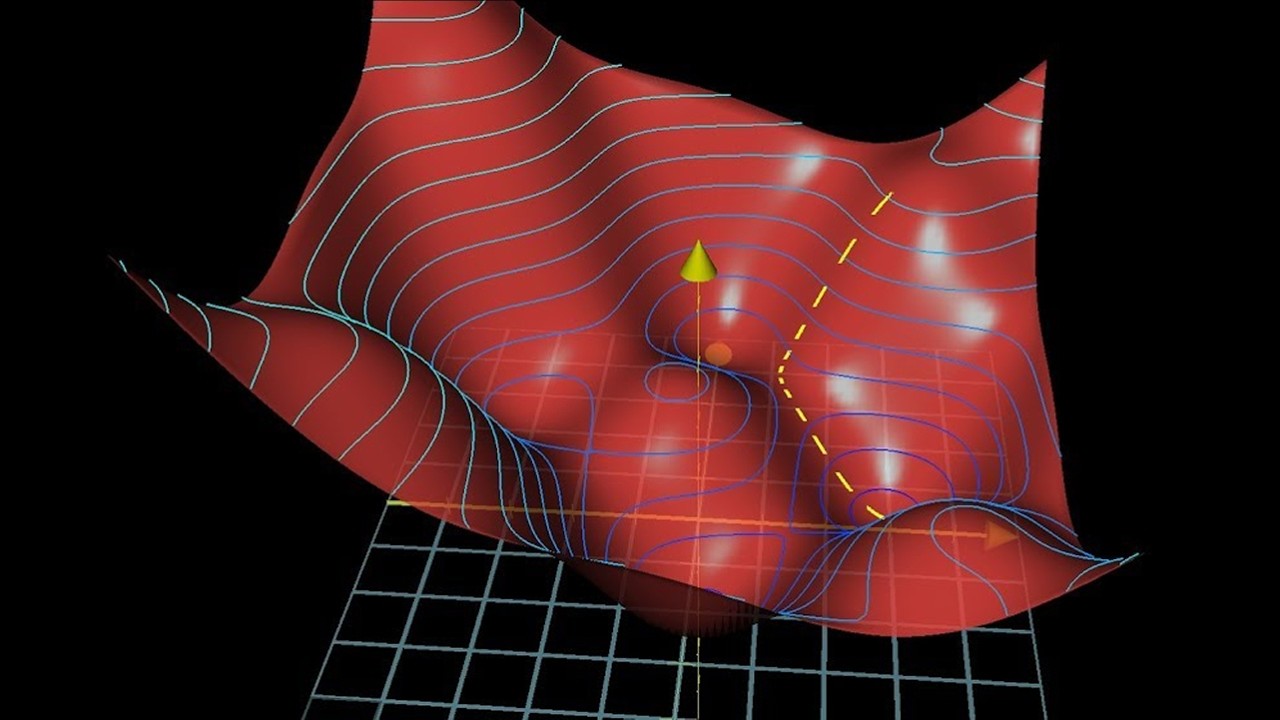

-The hope is that the layered structure can break down complex recognition tasks into simpler sub-problems, with lower layers detecting basic patterns like edges and higher layers detecting more complex shapes and full digits.
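The video makes "lower layers detecting edges" concrete with a weighting scheme: positive weights on the pixels of the pattern a neuron looks for, and negative weights on the pixels around it. The sketch below hand-picks such weights for a hypothetical 5 × 5 image, looking for a short horizontal bar in the middle row. The weights are illustrative rather than learned, and a trained network need not end up with anything like them.

```python
SIZE = 5  # hypothetical tiny image, SIZE x SIZE pixels

def bar_detector_weights(size=SIZE):
    """Hand-picked weights: +1 on a short horizontal bar in the middle row,
    -1 on the pixels directly above and below it, 0 everywhere else."""
    mid = size // 2
    weights = [[0.0] * size for _ in range(size)]
    for col in range(1, size - 1):
        weights[mid][col] = 1.0
        weights[mid - 1][col] = -1.0
        weights[mid + 1][col] = -1.0
    return weights

def image_with(bright_pixels, size=SIZE):
    """Black image (all 0.0) with the given (row, col) pixels set to 1.0."""
    image = [[0.0] * size for _ in range(size)]
    for row, col in bright_pixels:
        image[row][col] = 1.0
    return image

def weighted_sum(weights, image):
    """Sum of weight * brightness over every pixel."""
    return sum(w * p
               for w_row, p_row in zip(weights, image)
               for w, p in zip(w_row, p_row))

weights = bar_detector_weights()
bar = image_with([(2, c) for c in range(1, 4)])                         # the bar itself
block = image_with([(r, c) for r in range(1, 4) for c in range(1, 4)])  # solid 3x3 blob
vertical = image_with([(r, 2) for r in range(1, 4)])                    # a vertical bar

for name, img in (("bar", bar), ("block", block), ("vertical", vertical)):
    print(name, weighted_sum(weights, img))
# prints: bar 3.0 / block -3.0 / vertical -1.0
```

Only the pattern the weights were designed for produces a large positive sum. Brightness spilling into the surrounding rows is penalized.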

How are connections between neurons represented mathematically?

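-The weights connecting two layers are organized into a weight matrix, where each row holds the weights feeding into one neuron of the next layer. Multiplying this matrix by the vector of the previous layer's activations computes all the weighted sums at once. Adding the bias vector and applying the sigmoid to each entry then gives the next layer's activations.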
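In symbols, take W as the weight matrix, a0 as the previous layer's activation vector, and b as the bias vector. The next layer is then a1 = σ(W·a0 + b), with σ applied to each entry. The sketch below computes this with plain Python lists to stay self-contained; in practice a numerical library would normally do the matrix product. The function name `layer` and the numbers are hypothetical.

```python
import math

def sigmoid(x):
    """Logistic sigmoid, written to avoid overflow for large negative x."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def layer(W, b, a):
    """Next-layer activations sigma(W a + b).

    Row j of W holds the weights from every neuron in the previous layer
    into neuron j of the next layer, so W has one row per next-layer neuron.
    """
    if len(W) != len(b) or any(len(row) != len(a) for row in W):
        raise ValueError("W must be len(b) x len(a)")
    return [sigmoid(sum(w * x for w, x in zip(row, a)) + bias)
            for row, bias in zip(W, b)]

# Hypothetical layer: 3 neurons feeding 2 neurons.
W = [[1.0, -1.0, 0.0],
     [0.5, 0.5, 0.5]]
b = [0.0, -1.5]
a0 = [1.0, 1.0, 1.0]
print(layer(W, b, a0))  # both weighted sums plus bias are 0, so this prints [0.5, 0.5]
```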

Why is it useful to understand what the weights represent?

-Understanding what the learned weights represent gives intuition about which patterns the network is responding to. This can help diagnose why the network underperforms.

What is meant by thinking of the full network as just a function?

-At its core, the network is just a mapping from the pixel values of an input image to ten output values, one per digit. That mapping is a complex mathematical function.
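Chaining the layer computation shows the whole network as one function. The sketch below assumes the video's 784-16-16-10 layout. It fills the weights and biases with uniform random numbers in [-1, 1], an arbitrary choice made here. The network is therefore untrained and its "predicted digit" is meaningless; the code only shows the shape of the function, 784 numbers in and 10 numbers out. All names are hypothetical.

```python
import math
import random

def sigmoid(x):
    """Logistic sigmoid, written to avoid overflow for large negative x."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def make_network(layer_sizes, seed=0):
    """Random (untrained) weight matrix and bias vector for each pair of adjacent layers."""
    rng = random.Random(seed)
    params = []
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        W = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
        b = [rng.uniform(-1.0, 1.0) for _ in range(n_out)]
        params.append((W, b))
    return params

def network(params, pixels):
    """The whole network as one function: apply sigma(W a + b) layer after layer."""
    a = pixels
    for W, b in params:
        a = [sigmoid(sum(w * x for w, x in zip(row, a)) + bias)
             for row, bias in zip(W, b)]
    return a

params = make_network([784, 16, 16, 10])
img_rng = random.Random(1)
image = [img_rng.random() for _ in range(784)]  # stand-in for 28x28 grayscale pixels in [0, 1)
output = network(params, image)
guess = max(range(10), key=lambda d: output[d])  # brightest output neuron
print(len(output), guess)
```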

Outlines

🧠 Introducing the concept of neural networks

This paragraph introduces the concept of neural networks, motivating their relevance and importance. It outlines the goal of showing what a neural network is and helping to visualize how it works, using a classic example of recognizing handwritten digits. The structure of a simple neural network with input, output and hidden layers is described.

🔢 Illustrating how a neural network recognizes digits

This paragraph provides an example of how a trained neural network recognizes handwritten digits. It explains how passing an input image activates neurons across layers based on learned weights and biases, ultimately lighting up an output neuron representing the predicted digit.

❓ Wondering what the hidden layers might represent

This paragraph speculates on what the hidden layers in the neural network might be doing, raising hopes that they could detect meaningful subcomponents like edges and loops that combine to form digit patterns. It acknowledges the challenge of learning appropriate subcomponents.

🤝 Understanding connections between neurons

This paragraph dives into the details of how activations from one layer of neurons determine the activations of the next layer. Key concepts like weights, biases and the sigmoid activation function are introduced. The weighted sums are written out and then expressed compactly as a matrix multiplication.

Keywords

💡Neural network

💡Neuron

💡Weight

💡Bias

💡Sigmoid function

💡Matrix

💡Feature extraction

💡Backpropagation

💡ReLU

💡Deep learning

Highlights

Neural networks are inspired by the brain, but their neurons are just things that hold a number between 0 and 1, called an activation.

The network has layers - an input layer, hidden layers, and an output layer. Activations flow from one layer to the next.

The heart of a neural network is how activations in one layer bring about activations in the next layer.

Weights and biases are the knobs and dials - the parameters of the network that can be tweaked to make it behave differently.

The hope is that hidden layers can detect edges, patterns, loops, lines - subcomponents that make up digits.

The weighted sums of inputs and nonlinear squishing make the network expressive enough to potentially capture patterns.

The network has around 13,000 weights and biases - parameters that can be tweaked to make it recognize patterns.

Setting all those parameters by hand would be satisfying but horrifying.

Matrices and vectors neatly capture the math of weights and activations flowing through layers.

The network is just a function - an absurdly complicated one with over 13,000 parameters.

Its complexity is actually reassuring: a function that complicated has a chance of taking on the challenge of recognizing digits.

The next video covers how this network can learn appropriate weights and biases from data.

Modern networks often use the ReLU activation function rather than sigmoid.

At some point, switching to ReLU was found to make deep networks much easier to train.

When people tried ReLU in deep networks, it simply happened to work very well.
