How to Create a Neural Network (and Train it to Identify Doodles)

TLDR

This script documents an in-depth exploration of training neural networks to recognize images. Starting with basic experiments, the journey progresses to harder tasks such as identifying doodles and color images. It covers network design, training challenges, and techniques such as backpropagation and gradient descent. The presenter tests the network's accuracy on several datasets, including handwritten digits and doodles, highlights both its successes and its limitations, and suggests convolutional neural networks as a future improvement for better performance.

Takeaways

- 🧠 The script discusses the process of teaching a computer to recognize various images using Neural Networks, starting with simple experiments and gradually increasing complexity.

- 👶 The journey begins with the presenter's first experiments with neural networks about a decade ago, animating stick creatures, and the problems those experiments ran into.

- 🚗 An experiment with a 2D car using sensors and a neural network to steer is described, emphasizing the iterative process of learning through competition and mutation.

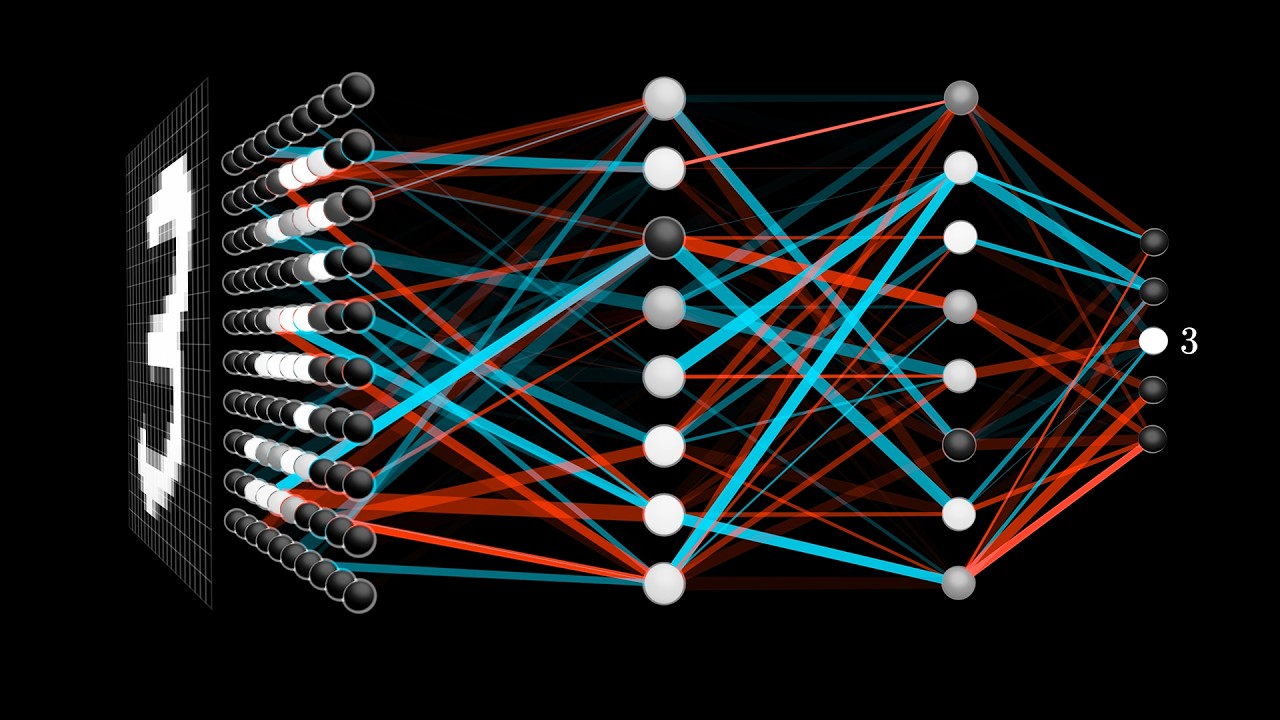

- 🔢 The script covers training a neural network to identify handwritten digits, explaining the steps and processes involved in creating and training the network.

- 👗 It also explores the idea of training the network to recognize images of clothing and fashion accessories, and the challenges of scaling up to more complex tasks.

- 🎨 The presenter attempts to train the network to recognize doodles of various objects, such as helicopters and umbrellas, and notes that more complex image recognition may require convolutional neural networks.

- 🍎 A hypothetical scenario involving a new fruit with poisonous and non-poisonous variants is used to illustrate the concept of decision boundaries in a neural network.

- 🔄 The importance of activation functions in introducing non-linearity to the network is discussed, allowing for more complex decision boundaries and better classification.

- 📉 The script explains the use of cost functions to measure the performance of the network and the process of using gradients to optimize network weights through Gradient Descent.

- 🔍 The concept of backpropagation is introduced as a method for efficiently calculating gradients for all weights and biases in a network, enabling faster learning.

- 🔧 The presenter discusses various techniques for improving network performance, such as data augmentation, adjusting learning rates, and adding momentum to the gradient descent process.

Q & A

What is the primary focus of the video script?

-The primary focus of the video script is to demonstrate the process of teaching a computer to recognize various doodles and images using Neural Networks.

What is the first experiment described in the script?

-The first experiment described in the script involves programming a neural network to make stick creatures walk around on their own; bugs in the physics code caused unexpected behavior.

How does the script describe the process of evolving a simple car with sensors to drive autonomously?

-The script describes the process as letting multiple cars with neural networks compete, selecting the top performers, cloning them with random mutations, and repeating the process until a car can drive successfully on its own.
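
The compete, select, and clone-with-mutation loop can be sketched as a small genetic algorithm. This is a minimal sketch under stated assumptions, not the presenter's code: instead of simulating a car, it scores each candidate weight vector with a hypothetical fitness function (closeness to a made-up `TARGET`), and the population size, elite count, and mutation strength are arbitrary illustrative choices.

```python
import random

# Hypothetical stand-in for "how well the car drove": higher is better.
TARGET = [0.5, -1.0, 2.0]


def evaluate_fitness(weights):
    # Negative squared distance to TARGET, so the best possible score is 0.
    return -sum((w - t) ** 2 for w, t in zip(weights, TARGET))


def mutate(weights, rate=0.1):
    # Clone the parent and nudge every weight by a small random amount.
    return [w + random.gauss(0.0, rate) for w in weights]


def evolve(generations=200, population_size=50, elite_count=5, seed=0):
    random.seed(seed)
    population = [[random.uniform(-3, 3) for _ in TARGET] for _ in range(population_size)]
    for _ in range(generations):
        # Let every candidate "compete" and keep the top performers unchanged.
        population.sort(key=evaluate_fitness, reverse=True)
        elites = population[:elite_count]
        # Refill the population with mutated clones of the elites.
        children = [mutate(random.choice(elites)) for _ in range(population_size - elite_count)]
        population = elites + children
    return max(population, key=evaluate_fitness)


best = evolve()
print(best, evaluate_fitness(best))
```

Keeping the elites unchanged means the best score can never get worse from one generation to the next; a real driving task would replace `evaluate_fitness` with something like the distance a car travels in simulation.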

What is a 'decision boundary' in the context of the script?

-A 'decision boundary' in the script refers to a line or boundary that separates different classifications, such as safe and poisonous fruits in the example provided.

What is the purpose of adding 'bias' to a neural network?

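-The purpose of adding 'bias' to a neural network is to let the decision boundary shift freely. Without a bias, inputs of zero always produce a weighted sum of zero, so the boundary is forced through the origin; the bias offsets it, enabling more accurate classifications.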
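
As a minimal sketch of the fruit example, assume a single output that is a weighted sum of the two features plus a bias, with the fruit labeled poisonous whenever that sum is positive. The function names, weights, and threshold are hypothetical; the video's network may be organized differently (for example, with one output per class).

```python
def classify(spot_size, spike_length, w_spots, w_spikes, bias):
    # Weighted sum of the two features plus the bias term (assumed single-output design).
    z = w_spots * spot_size + w_spikes * spike_length + bias
    # The decision boundary is the line where z == 0.
    return "poisonous" if z > 0 else "safe"


def boundary_spike_length(spot_size, w_spots, w_spikes, bias):
    # Solve w_spots * x + w_spikes * y + bias = 0 for y: the boundary line.
    return -(w_spots * spot_size + bias) / w_spikes


print(classify(0.2, 0.3, 1.0, 1.0, -1.0))  # safe
print(classify(0.8, 0.9, 1.0, 1.0, -1.0))  # poisonous
# With bias = 0 the boundary crosses spot_size = 0 at spike_length = 0, i.e. the origin.
print(boundary_spike_length(0.0, 1.0, 1.0, 0.0) == 0.0)  # True
# A bias of -1 shifts the same line so it crosses at spike_length = 1.
print(boundary_spike_length(0.0, 1.0, 1.0, -1.0))  # 1.0
```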

What is the role of 'activation function' in a neural network?

-The role of the 'activation function' in a neural network is to introduce non-linearity into the network, allowing it to create more complex decision boundaries and model more intricate patterns in the data.
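
A classic way to see why the activation function matters is the XOR pattern, which no single straight line can separate. The sketch below is a swapped-in textbook example, not the fruit data from the video: it uses hand-picked, hypothetical weights for a network with two hidden nodes, run once with the sigmoid activation and once with no activation (the identity).

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def identity(z):
    return z


def xor_net(x, y, activation):
    # Hidden layer: two nodes with hand-picked (hypothetical) weights and biases.
    h1 = activation(20 * x + 20 * y - 10)
    h2 = activation(-20 * x - 20 * y + 30)
    # Output node combines the two hidden nodes.
    return activation(20 * h1 + 20 * h2 - 30)


inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
print([round(xor_net(x, y, sigmoid)) for x, y in inputs])  # [0, 1, 1, 0]
print([xor_net(x, y, identity) for x, y in inputs])        # [370, 370, 370, 370]
```

With the identity in place of the sigmoid, the layers collapse into a single linear function (here a constant), so the hidden layer adds nothing; the non-linearity is what lets the network bend its decision boundary.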

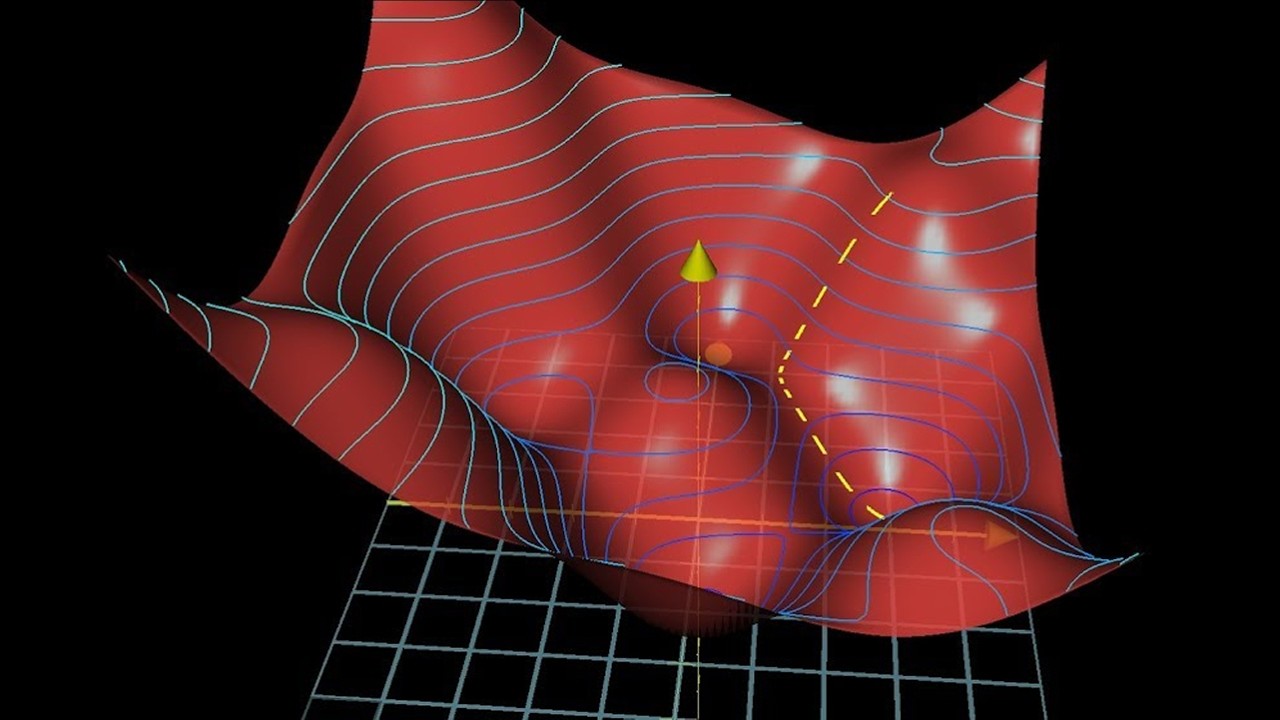

What is the 'gradient descent' algorithm mentioned in the script?

-The 'gradient descent' algorithm is a technique used to minimize a function by iteratively moving in the direction of the steepest descent as defined by the negative of the gradient.
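
A minimal sketch of gradient descent on a one-variable function, assuming the derivative is known in closed form; the function, starting point, learning rate, and step count are illustrative choices.

```python
def gradient_descent(grad, x0, learning_rate=0.1, steps=100):
    x = x0
    for _ in range(steps):
        # Step against the gradient, i.e. downhill.
        x -= learning_rate * grad(x)
    return x


# f(x) = (x - 3)^2 has its minimum at x = 3; its derivative is 2(x - 3).
x_min = gradient_descent(lambda x: 2 * (x - 3), x0=-5.0)
print(x_min)  # very close to 3.0
```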

What is the purpose of 'backpropagation' in training a neural network?

-The purpose of 'backpropagation' is to calculate the gradient of the cost (loss) function with respect to every weight and bias using the chain rule; gradient descent then uses these gradients to update the parameters in a way that reduces the cost.
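
For a single sigmoid neuron with a squared-error cost (both assumptions made for illustration), the chain rule gives the gradient as a product of three local derivatives. The sketch below computes it that way and checks the result against a central finite-difference estimate; all names are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def cost(w, b, x, y):
    # Squared error of one sigmoid neuron on one example (assumed cost function).
    a = sigmoid(w * x + b)
    return (a - y) ** 2


def grad_chain_rule(w, b, x, y):
    z = w * x + b
    a = sigmoid(z)
    dC_da = 2 * (a - y)       # derivative of the squared error
    da_dz = a * (1 - a)       # derivative of the sigmoid
    dz_dw, dz_db = x, 1.0     # derivatives of the weighted input
    return dC_da * da_dz * dz_dw, dC_da * da_dz * dz_db


def grad_finite_difference(w, b, x, y, h=1e-6):
    # Central differences: nudge each parameter both ways and measure the slope.
    dw = (cost(w + h, b, x, y) - cost(w - h, b, x, y)) / (2 * h)
    db = (cost(w, b + h, x, y) - cost(w, b - h, x, y)) / (2 * h)
    return dw, db


analytic = grad_chain_rule(0.5, -0.2, 1.5, 1.0)
numeric = grad_finite_difference(0.5, -0.2, 1.5, 1.0)
print(analytic, numeric)
```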

How does the script suggest improving the neural network's performance on recognizing handwritten digits?

-The script suggests improving performance by adding hidden layers with more nodes, using different cost and activation functions, and incorporating techniques like data augmentation and adding noise to the inputs.
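
The script does not specify which alternative cost function was used. One common choice for classification is cross-entropy; the sketch below compares it with squared error for a confidently wrong prediction, assuming each output is a sigmoid value between 0 and 1 and the targets are one-hot. Function names are hypothetical.

```python
import math


def mean_squared_error(outputs, targets):
    # Summed squared error over the output nodes.
    return sum((a - y) ** 2 for a, y in zip(outputs, targets))


def cross_entropy(outputs, targets, eps=1e-12):
    total = 0.0
    for a, y in zip(outputs, targets):
        # Clamp to avoid log(0) for outputs of exactly 0 or 1.
        a = min(max(a, eps), 1 - eps)
        total += -(y * math.log(a) + (1 - y) * math.log(1 - a))
    return total


confidently_wrong = [0.99, 0.01]
target = [0.0, 1.0]
print(mean_squared_error(confidently_wrong, target))  # about 1.96
print(cross_entropy(confidently_wrong, target))       # about 9.21
```

Squared error per output can never exceed 1 here, while cross-entropy grows without bound as a confident prediction becomes more wrong. With sigmoid outputs, the gradient of cross-entropy with respect to an output's weighted input simplifies to `a - y`, so learning does not stall when a saturated output is badly wrong, as it can with squared error.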

What challenges does the neural network face when trying to recognize doodles from the 'Quick, Draw!' project?

-The neural network faces challenges such as the variety in the way people draw the same object, the presence of noise and background in the images, and the need to generalize from the training data to recognize a wider range of doodle styles.

What is the script's conclusion about the neural network's performance on color images of different objects and creatures?

-The script concludes that the neural network struggles with the complexity of color images, achieving only about 53% accuracy, and suggests that significant upgrades to the network are required for better performance.

Outlines

🤖 Introduction to Neural Networks and Experiments

The speaker introduces the idea of using neural networks to recognize images and doodles. They recount their first attempt at programming a neural network to animate stick creatures and the humorous results caused by their buggy physics code. The speaker then describes a more successful experiment in which a neural network steers a 2D car using its sensors. They also mention a project to identify handwritten digits and their intention to test the same neural network on images of clothing and fashion accessories, as well as on doodles of various objects. The section ends by raising the possibility that more complex image recognition tasks will require upgrading to a convolutional neural network.

📊 Exploring Neural Network Decision Boundaries

The speaker explains decision boundaries using a hypothetical new fruit that is either delicious or poisonous. They plot each fruit by the size of its spots and the length of its spikes and use these two attributes to classify it as safe or poisonous. The speaker then explains the limitations of linear decision boundaries and why data that cannot be separated by a straight line calls for more complex models, such as networks with hidden layers.

🔄 Network Training and Activation Functions

The speaker delves into the intricacies of training a neural network, starting with a simple model and gradually introducing complexities such as hidden layers and non-linear activation functions. They discuss the role of activation functions in creating more complex decision boundaries and the use of the sigmoid function to allow for smoother transitions in the output. The speaker also touches on the challenges of adjusting the network's weights and biases manually and the need for an automated training process.

📉 Cost Function and Gradient Descent

The speaker introduces the concept of a cost function to measure the performance of the neural network and the use of gradient descent to minimize this cost. They explain the process of adjusting weights and biases to find the optimal solution that classifies the training data accurately. The speaker also discusses the challenges of using a brute-force approach for larger networks and the need for a more efficient method to calculate the gradients.
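
The brute-force approach can be sketched as follows: nudge each parameter by a small amount `h`, re-measure the cost, and use the change as a slope estimate. This minimal sketch assumes a tiny linear model (prediction = `w * x + b`) with a mean-squared-error cost rather than a full neural network; the data, learning rate, and step count are made up for illustration.

```python
def cost(params, data):
    # Mean squared error of a tiny linear model: prediction = w * x + b.
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def brute_force_gradient(params, data, h=1e-5):
    base = cost(params, data)
    grad = []
    for i in range(len(params)):
        nudged = list(params)
        nudged[i] += h
        # Slope estimate: change in cost divided by the size of the nudge.
        grad.append((cost(nudged, data) - base) / h)
    return grad


def train(data, learning_rate=0.05, steps=2000):
    params = [0.0, 0.0]
    for _ in range(steps):
        grad = brute_force_gradient(params, data)
        params = [p - learning_rate * g for p, g in zip(params, grad)]
    return params


# Hypothetical training data generated from y = 2x + 1.
data = [(x, 2 * x + 1) for x in [0.0, 0.5, 1.0, 1.5, 2.0]]
w, b = train(data)
print(w, b)  # approaches 2 and 1
```

Each step needs one extra full evaluation of the cost per parameter, which is why this approach becomes impractical for networks with many thousands of weights.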

🔢 Implementing Gradient Descent and Mini-Batch Training

The speaker describes the implementation of gradient descent and the introduction of mini-batch training to improve efficiency. They explain that evaluating the cost over the entire dataset for every single weight and bias is impractical for large datasets, and that computing each update on a small random mini-batch speeds up learning. The speaker also notes that the noise introduced by mini-batches can be beneficial, for example by helping the network escape saddle points in the cost landscape.
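
A minimal sketch of mini-batch gradient descent, again assuming a tiny linear model with a mean-squared-error cost instead of a full network so that the gradient can be written out directly. The batch size, learning rate, epoch count, and data are illustrative.

```python
import random


def mini_batches(data, batch_size, rng):
    # Shuffle a copy each epoch so every batch is a different random sample.
    shuffled = list(data)
    rng.shuffle(shuffled)
    for start in range(0, len(shuffled), batch_size):
        yield shuffled[start:start + batch_size]


def batch_gradient(params, batch):
    # Analytic gradient of mean squared error for prediction = w * x + b.
    w, b = params
    dw = db = 0.0
    for x, y in batch:
        error = w * x + b - y
        dw += 2 * error * x
        db += 2 * error
    return dw / len(batch), db / len(batch)


def train(data, batch_size=4, learning_rate=0.05, epochs=300, seed=0):
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for batch in mini_batches(data, batch_size, rng):
            # One gradient step per mini-batch instead of one per full pass.
            dw, db = batch_gradient((w, b), batch)
            w -= learning_rate * dw
            b -= learning_rate * db
    return w, b


# Hypothetical noise-free data from y = 2x + 1.
data = [(x / 10, 2 * (x / 10) + 1) for x in range(20)]
w, b = train(data)
print(w, b)  # close to 2.0 and 1.0
```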

📚 Understanding Calculus for Efficient Gradient Calculation

The speaker emphasizes the importance of calculus in understanding and optimizing neural network training. They provide a refresher on derivatives and introduce the concept of the chain rule, which is essential for calculating the gradients of the cost function with respect to the network's weights and biases efficiently. The speaker illustrates how these calculus concepts can be applied to a simplified neural network to derive the necessary gradients for the gradient descent algorithm.
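
As a worked example of the chain rule, consider a hypothetical chain of two sigmoid neurons with input $x$, target $y$, and a squared-error cost (notation chosen for illustration, not taken from the video):

$$
z_1 = w_1 x + b_1,\quad a_1 = \sigma(z_1),\quad z_2 = w_2 a_1 + b_2,\quad a_2 = \sigma(z_2),\quad C = (a_2 - y)^2
$$

$$
\begin{aligned}
\delta_2 &= \frac{\partial C}{\partial z_2} = \frac{\partial a_2}{\partial z_2}\,\frac{\partial C}{\partial a_2} = \sigma'(z_2)\cdot 2(a_2 - y), \\
\frac{\partial C}{\partial w_2} &= \frac{\partial z_2}{\partial w_2}\,\delta_2 = a_1\,\delta_2, \qquad \frac{\partial C}{\partial b_2} = \delta_2, \\
\frac{\partial C}{\partial w_1} &= \frac{\partial z_1}{\partial w_1}\,\frac{\partial a_1}{\partial z_1}\,\frac{\partial z_2}{\partial a_1}\,\delta_2 = x\,\sigma'(z_1)\,w_2\,\delta_2,
\end{aligned}
$$

where $\sigma'(z) = \sigma(z)\bigl(1 - \sigma(z)\bigr)$. The factor $\delta_2$ computed for the output layer reappears in the gradient for $w_1$; reusing such shared factors layer by layer is what makes backpropagation efficient.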

🔧 Refining the Network with Backpropagation

The speaker describes the backpropagation algorithm, which is a method for efficiently calculating the gradients of the cost function with respect to the network's parameters. They explain how this algorithm works by starting at the output layer and moving backward through the network, reusing calculations to update the gradients for each layer. The speaker also discusses the implementation of this algorithm in code and its application to a neural network with a single hidden layer.
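
A minimal, dependency-free sketch of backpropagation for a network with one hidden layer, assuming sigmoid activations in both layers and a squared-error cost (the video's exact choices may differ). The class and method names are hypothetical; the usage lines check one backpropagated gradient against a finite-difference estimate and then take some gradient-descent steps on a single made-up example.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class TinyNetwork:
    """Hypothetical sketch: inputs -> one sigmoid hidden layer -> sigmoid outputs."""

    def __init__(self, n_in, n_hidden, n_out, seed=0):
        rng = random.Random(seed)
        # Small random starting weights; biases start at zero.
        self.w1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [[rng.uniform(-1, 1) for _ in range(n_hidden)] for _ in range(n_out)]
        self.b2 = [0.0] * n_out

    def forward(self, x):
        hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
                  for row, b in zip(self.w1, self.b1)]
        out = [sigmoid(sum(w * h for w, h in zip(row, hidden)) + b)
               for row, b in zip(self.w2, self.b2)]
        return hidden, out

    def cost(self, x, y):
        # Squared error summed over the output nodes.
        _, out = self.forward(x)
        return sum((a - t) ** 2 for a, t in zip(out, y))

    def backprop(self, x, y):
        hidden, out = self.forward(x)
        # Output layer: dC/dz = dC/da * da/dz = 2(a - y) * a(1 - a).
        delta2 = [2 * (a - t) * a * (1 - a) for a, t in zip(out, y)]
        # Hidden layer: reuse delta2 instead of recomputing the chain from the cost.
        delta1 = [sum(self.w2[k][j] * delta2[k] for k in range(len(delta2)))
                  * hidden[j] * (1 - hidden[j])
                  for j in range(len(hidden))]
        grad_w2 = [[d * h for h in hidden] for d in delta2]
        grad_w1 = [[d * xi for xi in x] for d in delta1]
        # The bias gradients are exactly the deltas.
        return grad_w1, delta1, grad_w2, delta2

    def step(self, x, y, learning_rate):
        gw1, gb1, gw2, gb2 = self.backprop(x, y)
        for weights, biases, gw, gb in ((self.w1, self.b1, gw1, gb1),
                                        (self.w2, self.b2, gw2, gb2)):
            for j, row in enumerate(weights):
                for i in range(len(row)):
                    row[i] -= learning_rate * gw[j][i]
                biases[j] -= learning_rate * gb[j]


net = TinyNetwork(n_in=2, n_hidden=3, n_out=2)
x, y = [0.3, 0.8], [1.0, 0.0]

# Gradient check: compare one backpropagated gradient with a finite-difference estimate.
analytic = net.backprop(x, y)[0][0][1]
eps = 1e-6
net.w1[0][1] += eps
cost_plus = net.cost(x, y)
net.w1[0][1] -= 2 * eps
cost_minus = net.cost(x, y)
net.w1[0][1] += eps
numeric = (cost_plus - cost_minus) / (2 * eps)
print(analytic, numeric)

# Gradient-descent steps should lower the cost on this example.
before = net.cost(x, y)
for _ in range(200):
    net.step(x, y, learning_rate=0.5)
print(before, net.cost(x, y))
```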

🎨 Training the Network on Handwritten Digits

The speaker narrates the process of training a neural network on a dataset of handwritten digits. They discuss the setup of the network with 784 inputs for the pixel values and 10 outputs for the digits, and the use of a hidden layer to improve accuracy. The speaker also mentions techniques such as adding momentum to the gradient descent algorithm and experimenting with different cost and activation functions to enhance the network's performance.
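
Momentum can be sketched as keeping a "velocity" that accumulates a decaying sum of past gradients. This sketch compares plain gradient descent with momentum on a hypothetical elongated bowl-shaped cost, `f(x, y) = x^2 + 10 y^2`, where plain gradient descent crawls along the shallow direction; the learning rate and the momentum value of 0.9 are illustrative.

```python
import math


def grad(p):
    # Gradient of the hypothetical test function f(x, y) = x^2 + 10 y^2.
    x, y = p
    return [2 * x, 20 * y]


def descend(start, learning_rate=0.01, momentum=0.0, steps=100):
    p = list(start)
    velocity = [0.0, 0.0]
    for _ in range(steps):
        g = grad(p)
        # Velocity keeps a decaying memory of past gradients;
        # momentum=0 reduces this to plain gradient descent.
        velocity = [momentum * v - learning_rate * gi for v, gi in zip(velocity, g)]
        p = [pi + vi for pi, vi in zip(p, velocity)]
    return p


plain = descend([5.0, 1.0])
with_momentum = descend([5.0, 1.0], momentum=0.9)
# Distance from the minimum at the origin after the same number of steps.
print(math.dist(plain, [0, 0]), math.dist(with_momentum, [0, 0]))
```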

🔍 Evaluating Network Performance and Generalization

The speaker evaluates the neural network's performance on the handwritten digits dataset and notes its accuracy on both training and test data. They discuss the network's tendency to memorize specific patterns rather than learning general ones and the implementation of techniques such as adding noise to the inputs to improve generalization. The speaker also tests the network's ability to recognize handwritten digits drawn by the user and notes its shortcomings and the need for further improvement.
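
Adding noise to the inputs can be sketched as perturbing each pixel with fresh random noise every time an image is used for training, so the network cannot simply memorize exact pixel values. This sketch assumes flattened pixel values in [0, 1] and Gaussian noise with an illustrative strength; it also includes a simple accuracy helper of the kind used to compare training and test performance. All names are hypothetical.

```python
import random


def add_noise(pixels, noise_strength, rng):
    # Add Gaussian noise to each pixel (assumed range [0, 1]) and clamp back into range.
    return [min(1.0, max(0.0, p + rng.gauss(0.0, noise_strength))) for p in pixels]


def accuracy(predictions, labels):
    # Fraction of predictions that match their labels.
    correct = sum(1 for p, label in zip(predictions, labels) if p == label)
    return correct / len(labels)


rng = random.Random(42)
image = [0.0] * 784  # a blank 28x28 image, flattened
noisy = add_noise(image, 0.1, rng)
print(len(noisy), min(noisy), max(noisy))
print(accuracy([3, 1, 4, 1], [3, 1, 4, 7]))  # 0.75
```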

🚁 Advancing to More Complex Image Recognition

The speaker moves on to more complex image recognition tasks, starting with a fashion dataset and then a set of doodles from the 'Quick, Draw!' project. They describe the challenges of increasing the network's size and complexity to handle these tasks and the use of random transformations to improve the network's ability to generalize. The speaker concludes by discussing the network's performance on individual categories and its sensitivity to noise and variations in the doodle drawings.
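
Random transformations can be sketched in the same spirit. This minimal example implements only one kind, a random shift of an image assumed to be 28x28 pixels stored as a list of rows, with blank pixels filling the vacated edge; rotations and scaling would follow the same pattern. The function names and the maximum offset are hypothetical.

```python
import random

SIZE = 28  # assumed image size, stored as a list of SIZE rows


def shift_image(image, dx, dy):
    # Move every pixel by (dx, dy); pixels shifted in from outside are blank (0.0).
    shifted = [[0.0] * SIZE for _ in range(SIZE)]
    for y in range(SIZE):
        for x in range(SIZE):
            src_x, src_y = x - dx, y - dy
            if 0 <= src_x < SIZE and 0 <= src_y < SIZE:
                shifted[y][x] = image[src_y][src_x]
    return shifted


def random_shift(image, rng, max_offset=3):
    # Pick a fresh random offset each time the image is shown to the network.
    dx = rng.randint(-max_offset, max_offset)
    dy = rng.randint(-max_offset, max_offset)
    return shift_image(image, dx, dy)


image = [[0.0] * SIZE for _ in range(SIZE)]
image[10][10] = 1.0
moved = shift_image(image, 2, -1)
print(moved[9][12])  # 1.0
```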

🌐 Concluding with Color Image Recognition

In the final part of the video, the speaker presents the network with a significant challenge: recognizing objects and creatures in color images with a complex background. They discuss the network's struggle with the increased complexity and the wide variety of images, even within a single category. The speaker acknowledges the network's limited success and expresses a desire to return to the project in the future with more advanced techniques to improve its performance.


Keywords

💡Neural Networks

💡Sensors

💡Mutations

💡Handwritten Digits

💡Convolutional Neural Networks

💡Decision Boundary

💡Activation Function

💡Gradient Descent

💡Backpropagation

💡Momentum

💡Generalization

Highlights

Introduction to using Neural Networks for image recognition tasks.

Early experiments with programming neural networks for basic creature animations.

The discovery that bugs in the physics code could produce strange, unintended creature behavior.

Experimentation with a 2D car model using neural networks for autonomous driving.

Utilization of competition and mutation strategies to improve neural network performance.

Training a neural network to identify handwritten digits as an initial test task.

Exploration of recognizing images of clothing and fashion accessories using the same neural network code.

Attempt to recognize doodles of 10 different objects using a neural network.

Mention of convolutional neural networks as a likely upgrade for more complex image recognition tasks.

The use of a simple fruit classification example to illustrate neural network decision boundaries.

Explanation of the concept of weights and biases in neural network connections.

Demonstration of how to visualize and adjust neural network decision boundaries.

Introduction of 'hidden' layers in neural networks to handle more complex data separation.

The implementation of an activation function to allow non-linear decision boundaries.

Discussion on the choice of activation functions and their impact on network training.

Introduction of the concept of 'Gradient Descent' for efficient neural network training.

Explanation of how to calculate the slope of a function and its application in neural networks.

Development of a more efficient learning algorithm using calculus and the backpropagation method.

Testing the trained neural network on the MNIST dataset of handwritten digits.

Analysis of the network's performance and accuracy on the handwritten digit recognition task.

Investigation of the network's ability to generalize beyond the training dataset.

Experimentation with data augmentation to improve network robustness.

Training the network on a more complex dataset of doodles.

Assessment of the network's performance on recognizing various doodle categories.

Attempt to train the network on color images of different objects.

Reflection on the network's limitations and the need for more advanced models for complex tasks.

Conclusion and future outlook for upgrading the neural network to handle more complex image recognition challenges.
