2023's Biggest Breakthroughs in Computer Science

TLDR

The script discusses the limitations of artificial neural networks in reasoning by analogy, and contrasts the statistical approach to AI that underlies them with the symbolic approach. It introduces hyperdimensional computing as a promising method to combine statistical and symbolic AI, as demonstrated by IBM Research's breakthrough in solving Raven's Progressive Matrices. The script also touches on Oded Regev's proposed improvement to Shor's quantum factoring algorithm and the concept of emergent behaviors in large language models, which enable them to solve problems in new and unexpected ways.

Takeaways

- 🧠 The script discusses the limitations of artificial neural networks in reasoning by analogy, unlike the human brain.

- 📈 It introduces the concept of statistical AI, which is central to deep neural networks but struggles with analogy and requires scaling up for new concepts.

- 🤖 The script contrasts statistical AI with symbolic AI, which uses logic-based programming and symbols to represent concepts and rules.

- 🔬 An emerging approach called hyperdimensional computing is presented as a way to combine the strengths of statistical and symbolic AI.

- 📚 Hyperdimensional computing uses vectors to represent information in a multi-dimensional way, allowing for nuanced concept representation.

- 🔄 This new approach can encode information without needing to add more nodes to the network, unlike traditional neural networks.

- 🎓 Researchers at IBM Research in Zurich made a breakthrough by combining statistical and symbolic methods to solve Raven's Progressive Matrices puzzles.

- 🚀 The combination of hyperdimensional computing with deep neural networks allows for solving abstract reasoning problems at scale.

- 🏎️ The researchers also achieved a significant acceleration in inference times for abstract reasoning tasks, making AI more practical for larger scale problems.

- 🌿 The potential of hyperdimensional computing is highlighted in being faster, more transparent, and more energy efficient than current AI platforms.

- 🔐 The script touches on the historical impact of Shor's algorithm and its recent improvement by Oded Regev, which could make quantum computing more efficient.

- 🧩 The concept of emergent behaviors in large language models (LLMs) is introduced, where larger models exhibit surprising new abilities not present in smaller ones.

- 🔑 The transformer architecture, introduced in 2017, allowed neural networks to analyze words in parallel, greatly improving the performance of LLMs.

- 🛠️ The script mentions the challenges of understanding and predicting emergent behaviors in LLMs, as they can lead to both beneficial and harmful outcomes.

Q & A

What is the main limitation of artificial neural networks in terms of reasoning?

-Artificial neural networks struggle with reasoning by analogy. Unlike human brains, which can generalize new concepts from existing knowledge without growing new neurons, artificial neural networks often require more artificial nodes to scale up their statistical abilities and learn new concepts.

What is the core principle behind statistical AI?

-Statistical AI is based on the use of deep neural networks that learn from data through statistical patterns. It involves scaling up the network's nodes to improve its ability to learn and understand new concepts.

How does symbolic AI differ from statistical AI?

-Symbolic AI uses logic-based programming and symbols to represent concepts and rules. It is inherently different from statistical AI, which relies on data patterns and does not use symbolic representation.

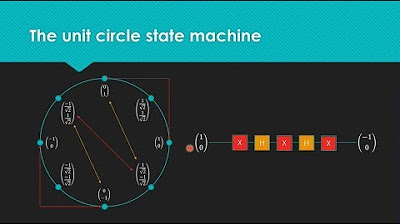

What is hyperdimensional computing and how does it aim to combine statistical and symbolic AI?

-Hyperdimensional computing is an emerging approach that uses vectors, or ordered lists of numbers, to represent information in a complex, multi-dimensional way. It can embody nuances and traits of concepts through small changes in vector orientation and aims to combine the statistical abilities of AI with the symbolic computing approach.
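The idea of encoding concepts as high-dimensional vectors can be made concrete with a minimal sketch. It assumes one common textbook formulation (random bipolar vectors, element-wise multiplication to bind a role to a value, element-wise addition to bundle several bindings), which is not necessarily the encoding used in the IBM work. The names `random_hypervector`, `bind`, `bundle`, and `cosine`, and the color/shape example, are hypothetical.

```python
import math
import random

DIM = 10_000               # dimensionality is an assumption; thousands is typical
rng = random.Random(0)     # fixed seed so the sketch is reproducible


def random_hypervector():
    """Random bipolar vector; two such vectors are almost orthogonal."""
    return [rng.choice((-1, 1)) for _ in range(DIM)]


def bind(a, b):
    """Associate a role with a value by element-wise multiplication."""
    return [x * y for x, y in zip(a, b)]


def bundle(*vectors):
    """Superimpose several vectors by element-wise addition."""
    return [sum(components) for components in zip(*vectors)]


def cosine(a, b):
    """Cosine similarity: near 0 for unrelated vectors, larger for related ones."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Roles and values are all plain random vectors of the same size.
COLOR, SHAPE = random_hypervector(), random_hypervector()
RED, BLUE, CIRCLE = (random_hypervector() for _ in range(3))

# One fixed-size vector holds the whole record "red circle".
record = bundle(bind(COLOR, RED), bind(SHAPE, CIRCLE))

# Binding with a bipolar role vector is its own inverse, so multiplying by
# COLOR again recovers a noisy copy of RED.
recovered_color = bind(record, COLOR)
similarity_to_red = cosine(recovered_color, RED)
similarity_to_blue = cosine(recovered_color, BLUE)
```

Storing a second attribute did not enlarge the vector: `record` has the same length as every other hypervector, which is the sense in which new information is encoded without adding nodes. Querying the record yields a vector that is clearly closer to `RED` than to the unrelated `BLUE`.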

What was the breakthrough achieved by IBM Research in Zurich in March 2023?

-IBM Research in Zurich made a significant breakthrough by combining statistical and symbolic methods to solve Raven's Progressive Matrices, a classical test that requires both visual perception and abstract reasoning.

What are the potential advantages of combining hyperdimensional computing with deep neural networks?

-The combination of hyperdimensional computing with deep neural networks can lead to faster, more transparent, and more energy-efficient AI platforms. It allows for the emulation of symbolic computing tasks within the neural network, which are otherwise hard for the network to perform.

How did the researchers at IBM Research accelerate the inference times for abstract reasoning tasks?

-The researchers sped up inference for abstract reasoning tasks by a factor of 250, using a method that can rapidly search the problem space. This makes the approach more practical for larger-scale, real-world problems.

What is Shor's algorithm and why is it significant?

-Shor's algorithm is a quantum factoring algorithm developed by Peter Shor in the 1990s. It is significant because it can rapidly break large numbers into their prime factors, which threatens modern cryptography that has safeguarded online privacy for decades.
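Shor's algorithm turns factoring into the problem of finding the period of a function, as the Highlights note. The sketch below shows only the classical arithmetic around that step, assuming the standard textbook reduction: find the smallest r with a^r ≡ 1 (mod N), then take a greatest common divisor. The period is found here by brute force, which is exactly the part a quantum computer would speed up. The function names are hypothetical.

```python
from math import gcd


def find_period(a, n):
    """Smallest r > 0 with a**r % n == 1. Requires gcd(a, n) == 1.

    Brute force stand-in for the quantum period-finding step.
    """
    value, r = a % n, 1
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_from_period(a, n):
    """Try to split n using the period of a modulo n; None if this a fails."""
    shared = gcd(a, n)
    if shared != 1:
        return shared, n // shared     # lucky guess: a already shares a factor
    r = find_period(a, n)
    if r % 2 == 1:
        return None                    # odd period: pick another a
    half = pow(a, r // 2, n)           # a square root of 1 modulo n
    if half == n - 1:
        return None                    # trivial square root: pick another a
    p = gcd(half - 1, n)
    return p, n // p


period = find_period(7, 15)
factors = factor_from_period(7, 15)
```

For N = 15 and a = 7 the powers of 7 modulo 15 cycle as 7, 4, 13, 1, so the period is 4, and gcd(7² mod 15 − 1, 15) = gcd(3, 15) = 3 splits 15 into 3 × 5.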

What was the improvement proposed by Oded Regev to Shor's algorithm?

-Oded Regev proposed an improvement to Shor's algorithm by transforming the periodic function from one dimension to multiple dimensions. This involves including more numbers and multiplying each with itself many times over to find the period of repetition in the outputs, potentially making the algorithm faster and more efficient.
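What "multiple dimensions" means here can be shown with a toy classical example. This is only an illustration of multi-dimensional periodicity, not Regev's quantum algorithm: the modulus 35, the bases 4 and 9, the search bound, and the function name `period_vectors` are all assumptions chosen to keep the numbers small. In one dimension we look for exponents z with 4^z ≡ 1; in two dimensions we look for exponent pairs (z1, z2) with 4^z1 · 9^z2 ≡ 1.

```python
from itertools import product


def period_vectors(bases, modulus, bound):
    """Nonzero exponent vectors z, 0 <= z_i <= bound, for which the product
    of bases[i] ** z[i] is congruent to 1 modulo `modulus`."""
    hits = []
    for z in product(range(bound + 1), repeat=len(bases)):
        if not any(z):
            continue                   # skip the all-zero vector
        value = 1
        for base, exponent in zip(bases, z):
            value = (value * pow(base, exponent, modulus)) % modulus
        if value == 1:
            hits.append(z)
    return hits


one_dim = period_vectors([4], 35, 6)        # walking on a line
two_dim = period_vectors([4, 9], 35, 6)     # walking in a plane
shortest = min(two_dim, key=lambda z: sum(e * e for e in z))
```

On the line, the first repetition appears only at exponent 6. In the plane, the vector (1, 1) already works because 4 · 9 = 36 ≡ 1 (mod 35), so each number needs to be multiplied with itself far fewer times before a repetition shows up. That is the intuition behind the "line versus plane" picture, under the stated toy assumptions.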

What are emergent behaviors in AI and why are they significant?

-Emergent behaviors in AI refer to new abilities that are not present in smaller language models but become present in larger ones. They are significant because they enable AI systems to solve problems they have never encountered before, demonstrating a departure from older neural networks and a step towards more advanced artificial intelligence.

What is the transformer model and how does it contribute to the capabilities of large language models (LLMs)?

-The transformer is a neural network architecture introduced in 2017 that allows a model to analyze all the words in a text in parallel rather than one at a time. It contributes to the capabilities of LLMs by letting them process long strings of text quickly, and models built on it become better at abstract tasks and generalization as they scale up.
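A rough sketch of how every word can be related to every other word at once is scaled dot-product self-attention, the core operation of the transformer. The version below is deliberately stripped down and rests on several assumptions: the token vectors serve directly as queries, keys, and values (a real transformer applies learned projections and several attention heads), and the loop over positions is written sequentially only for readability. Each iteration is independent of the others, which is why real implementations compute them together as matrix products. The function names and the three toy token vectors are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)                              # subtract max for stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """tokens: list of equal-length vectors, one per word position."""
    dim = len(tokens[0])
    outputs = []
    for query in tokens:                            # independent per position
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        weights = softmax(scores)
        # Each output is a weighted average of all token vectors.
        outputs.append([
            sum(w * value[i] for w, value in zip(weights, tokens))
            for i in range(dim)
        ])
    return outputs


toy_tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # made-up 2-d embeddings
attended = self_attention(toy_tokens)
```

Every output row mixes information from all three input positions in a single step, in contrast to older networks that had to pass through the sequence word by word.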

What is the challenge with understanding emergent behaviors in AI?

-The challenge with understanding emergent behaviors in AI is that they are unpredictable. While larger models generally perform better, they can also demonstrate novel behaviors that developers did not anticipate. This unpredictability makes it difficult to fully understand the tipping point into emergence and to measure the potential harms that might arise with scale.

Outlines

🧠 AI Limitations and the Rise of Hyperdimensional Computing

The script discusses the limitations of artificial neural networks in reasoning by analogy, contrasting them with the human brain's ability to generalize from existing knowledge. It introduces two AI approaches: statistical AI, which underpins deep neural networks and requires scaling up to learn new concepts, and symbolic AI, which uses logic-based programming. The challenge lies in combining these approaches for optimal performance. Hyperdimensional computing emerges as a possible solution, using vectors to represent complex, multi-dimensional information and allowing new concepts to be encoded without adding nodes. In March 2023, IBM Research in Zurich made a significant breakthrough by combining statistical and symbolic methods to solve Raven's Progressive Matrices, a classical test requiring both perception and abstract reasoning. This integration of hyperdimensional computing with deep neural networks allows symbolic computing tasks to be emulated within the neural network, potentially offering advantages in speed and energy efficiency.

🚀 Advancements in Quantum Algorithms: Shor's and Regev's Algorithms

The script highlights the significance of Shor's algorithm, which enables quantum computers to factor integers quickly, a problem whose difficulty much of modern cryptography relies on. It then introduces a theoretical advancement by mathematician Oded Regev, who proposed a method to accelerate Shor's algorithm by extending the periodic function from one dimension to multiple dimensions. This involves multiplying more numbers with themselves to find the period of repetition in the outputs, thereby improving the efficiency and speed of integer factoring. Shor's original algorithm is likened to walking on a line, while Regev's improvement allows for 'walking' in a plane or higher-dimensional space. Both algorithms are theoretical for now, with the potential to become practical as quantum computing matures. The script also touches on the possibility of further improvements and the desire to discover new capabilities of quantum computers.

🤖 Emergent Behaviors in Large Language Models (LLMs)

The final section delves into the concept of emergent behaviors in AI, particularly within large language models (LLMs). These behaviors are new abilities that arise as models scale up; they are absent from smaller models and hard to predict from them. The script provides an example of three different AI models answering a question posed in emojis, with the most complex system providing the correct answer in one attempt. This is attributed to emergent behaviors, which mark a departure from older neural networks that processed language sequentially. The introduction of the transformer architecture in 2017 allowed words to be processed in parallel, leading to models that can process text very quickly. The script mentions that researchers have observed emergent behaviors enabling LLMs to solve problems they have never encountered before, a capability known as zero-shot or few-shot learning. However, the exact mechanisms behind these emergent abilities remain a mystery, with researchers still exploring the tipping point at which emergence occurs. The models improve as they grow larger, but the science explaining why is progressing more slowly, leading to a sense of unpredictability in the field.

Keywords

💡Artificial Intelligence (AI)

💡Artificial Neural Networks

💡Reasoning by Analogy

💡Statistical AI

💡Symbolic AI

💡Hyperdimensional Computing

💡Raven's Progressive Matrices

💡Abstract Reasoning

💡Emergent Behaviors

💡Transformers

💡Zero Shot Learning

💡Chain of Thought Reasoning

💡Quantum Computing

💡Shor's Algorithm

Highlights

Artificial neural networks struggle with reasoning by analogy and require more nodes to learn new concepts.

Statistical AI is central to deep neural networks but faces limitations in reasoning.

Symbolic AI uses logic-based programming and symbolic representation, offering an alternative to statistical AI.

Hyperdimensional computing aims to combine the strengths of statistical and symbolic AI.

Hyperdimensional vectors can represent complex information through changes in orientation.

These vectors can encode information without needing to add more nodes to the network.

IBM Research in Zurich made a breakthrough by combining statistical and symbolic methods to solve Raven's Progressive Matrices.

Raven's Progressive Matrices are a classical test requiring both perception and abstract reasoning.

Researchers combined hyperdimensional computing with deep neural networks to solve abstract reasoning problems.

This approach can emulate symbolic computing tasks within a neural network.

IBM's research significantly accelerated inference times for abstract reasoning tasks by 250 times.

Hyperdimensional computing could be faster, more transparent, and more energy efficient than current AI platforms.

Peter Shor developed an algorithm that threatened the Internet by enabling quantum computers to break encryption.

Shor's algorithm is based on finding the period of a function to factor large numbers.

Oded Regev proposed an improvement to Shor's algorithm by using multiple dimensions to find the period faster.

Regev's new algorithm could make quantum computing more practical for factoring integers.

Emergent behaviors in AI are new abilities that appear as models scale up in size.

Large language models can exhibit emergent behaviors that enable them to solve problems they've never seen before.

The transformer architecture lets LLMs process words in parallel, enhancing their capabilities.

Researchers are investigating the tipping point at which models begin to exhibit emergent behaviors.

Chain of thought reasoning is one theory for how large language models break down complex tasks.

The unpredictability of emergent behaviors poses challenges for developers and users of AI models.
