How AI Discovered a Faster Matrix Multiplication Algorithm

TLDRMatrix multiplication, a foundational operation in mathematics, plays a crucial role in various fields from computer graphics to quantum physics. Despite its simplicity, it poses significant computational challenges, especially with larger matrices. Traditional methods involve N-cubed steps, but in 1969, Volker Strassen introduced an algorithm reducing the steps for 2x2 matrices from eight to seven. This breakthrough was further improved upon by Google's DeepMind with their AI system, AlphaTensor, which discovered a new algorithm for 4x4 matrices with binary elements, using only 47 multiplications instead of the previous 49 or 64. This advancement not only showcases the potential of AI in mathematical research but also highlights the synergy between human mathematicians and AI, as evidenced by subsequent refinements to the algorithm by Manuel Kauers and Jakob Moosbauer, reducing the steps further to 95. The collaboration between technology and mathematicians opens new frontiers in solving complex problems.

Takeaways

- 🧮 **Matrix Multiplication's Role**: Matrix multiplication is a fundamental operation in mathematics, crucial in fields like computer graphics, neural networks, and quantum physics.

- 🚀 **Efficiency Importance**: Researchers have been seeking more efficient matrix multiplication methods to solve larger problems that are currently infeasible due to computational constraints.

- 📚 **Standard Algorithm**: The traditional method for multiplying two matrices involves N-cubed steps, which becomes inefficient for larger matrices.

- 🔍 **Strassen's Algorithm**: Volker Strassen developed an algorithm that reduces the number of multiplications needed for two by two matrices from eight to seven, offering significant savings for larger matrices.

- 📉 **Winograd's Proof**: Shmuel Winograd proved that it's impossible to multiply two by two matrices using six or fewer multiplications, establishing Strassen's algorithm as the best known solution for a long time.

- 🔧 **DeepMind's Breakthrough**: Google's DeepMind lab discovered a new algorithm that surpasses Strassen's for multiplying two four by four matrices with elements of zero or one, breaking a 50-year-old record.

- 🤖 **AI in Mathematical Research**: DeepMind's AlphaTensor, a descendant of the AlphaGo algorithm, used reinforcement learning to discover more efficient matrix multiplication algorithms.

- 🎲 **Reinforcement Learning**: AlphaTensor was trained using a game-like approach where it was rewarded for finding more efficient ways to decompose a 3D tensor into rank-1 tensors.

- 🧩 **Tensor Decomposition**: The process of breaking down a 3D tensor into rank-1 tensors is key to finding more efficient matrix multiplication algorithms.

- 📈 **AlphaTensor's Achievements**: AlphaTensor not only rediscovered Strassen's algorithm but also found a new algorithm that uses only 47 multiplications for four by four matrices, instead of the standard 64 or Strassen's 49.

- 🤝 **Human-AI Collaboration**: The collaboration between AI tools like AlphaTensor and mathematicians can lead to significant advancements, with AI assisting in the discovery process and mathematicians further refining and understanding the results.

Q & A

What is matrix multiplication?

-Matrix multiplication is a fundamental mathematical operation used in various fields such as computer graphics, neural networks, and quantum physics. It involves performing operations on a two-dimensional array of numbers.

Why is finding more efficient ways to multiply matrices important?

-Efficient matrix multiplication allows for the solving of larger problems that were previously considered too big to compute in a reasonable time, thus expanding the scope of what can be achieved in fields like engineering and physics.

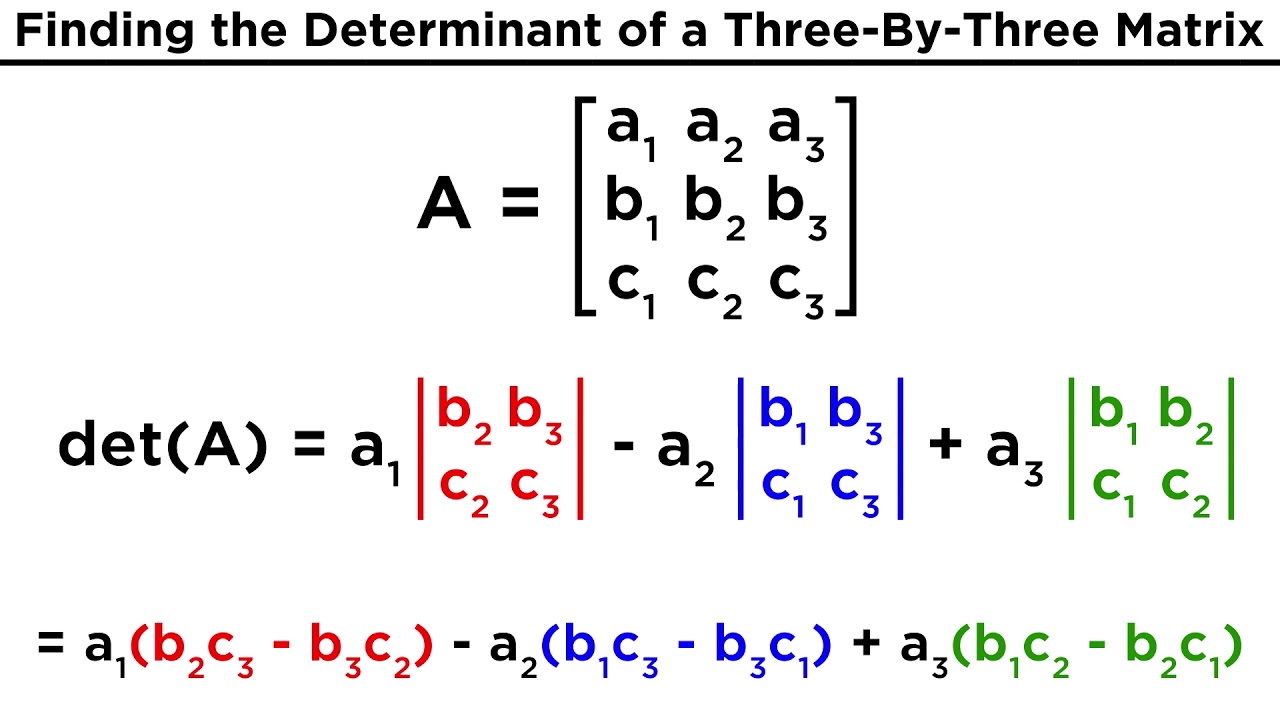

What is the standard method for multiplying two 2x2 matrices?

-The standard method involves multiplying elements from the first row of matrix A with the first column of matrix B, and adding them to get the first element of matrix C, then repeating this process for all row-column pairs in the matrices.

Who discovered the algorithm that reduces the number of multiplication steps for 2x2 matrices from eight to seven?

-Volker Strassen, a German mathematician, discovered the algorithm in 1969 that offers significant computational savings for larger matrices.

What is the significance of Strassen's algorithm for large matrices?

-Strassen's algorithm allows for the breakdown of large matrices into smaller ones, applying the savings in multiplication steps to these smaller matrices, which propagates over and over, resulting in fewer multiplication steps overall.

Who proved that it is impossible to use six or fewer multiplications to multiply two 2x2 matrices?

-Shmuel Winograd, an IBM researcher, proved this in 1970, establishing Strassen's algorithm with seven multiplications as the best solution.

What was the breakthrough in matrix multiplication in October 2022?

-A new algorithm was revealed by Google's DeepMind that beat Strassen's algorithm specifically for multiplying two 4x4 matrices where the elements are only zero or one, allowing for even faster computation.

How did DeepMind's AlphaTensor contribute to the discovery of a new matrix multiplication algorithm?

-AlphaTensor, built on a reinforcement learning algorithm, was used to explore a vast search space of possible algorithms. It played a 'game' where it was rewarded for using fewer multiplication steps, leading to the discovery of the new algorithm.

What is the role of a tensor in the context of AlphaTensor's algorithm discovery?

-A tensor is an array of numbers with any number of dimensions. In the context of matrix multiplication, the process can be described by a unique 3D tensor, which AlphaTensor used to represent and optimize the multiplication steps.

How does reinforcement learning play a role in AlphaTensor's discovery process?

-Reinforcement learning involves strategically penalizing and rewarding an AI system as it experiments with different methods to achieve its task. AlphaTensor used this technique to learn how to minimize the number of multiplication steps in matrix multiplication.

What is the potential impact of AI systems like AlphaTensor on the field of mathematics?

-AI systems like AlphaTensor can assist mathematicians by finding new results and guiding their intuition. They can also help in exploring large, complex problems that are beyond human computational capacity, thus acting as a tool for collaboration rather than replacement.

How did the mathematical community respond to the results published by AlphaTensor?

-The results were used as inspiration by mathematicians to further their own research. For example, two mathematicians in Austria used AlphaTensor's algorithm as a starting point to find an even more efficient method for multiplying 5x5 matrices.

Outlines

🧮 The Enigma of Matrix Multiplication

Matrix multiplication is a fundamental mathematical operation that underpins various fields such as computer graphics, neural networks, and quantum physics. Despite its simplicity for beginners, it remains a complex topic that even experienced mathematicians have not fully mastered. It is crucial in engineering and physics computations, and researchers are continuously seeking more efficient methods to multiply matrices. The traditional method involves a significant number of steps, which becomes impractical for larger matrices. A breakthrough came with Volker Strassen's algorithm, which reduced the number of multiplication steps needed for 2x2 matrices. This algorithm has significant implications for larger matrices, as they can be broken down into smaller ones, leading to substantial computational savings. The story of matrix multiplication took a new turn in October 2022 when a new algorithm for multiplying 4x4 matrices with binary elements was discovered by Google's DeepMind, surpassing Strassen's method.

🤖 DeepMind's AI and the Matrix Multiplication Challenge

DeepMind, Google's artificial intelligence research lab, known for creating AI systems that master games, turned its attention to the challenge of matrix multiplication. They developed an algorithm called AlphaTensor, derived from the AlphaGo algorithm, and based on a reinforcement learning approach. The process involves representing the matrix multiplication as a 3D tensor and then decomposing it into rank-1 tensors, which correspond to multiplication steps. By strategically penalizing and rewarding the AI, DeepMind's system was able to find more efficient ways to decompose these tensors, leading to faster matrix multiplication algorithms. This approach was not without its challenges, given the vast number of possible tensor decompositions. However, AlphaTensor was able to identify patterns and rediscover Strassen's algorithm, eventually surpassing it with a new method for 4x4 matrices with binary elements, using 47 multiplications instead of the standard 64 or Strassen's 49.

📚 The Future of AI and Mathematical Discovery

The discovery of a new matrix multiplication algorithm by AlphaTensor raises questions about the role of AI in mathematical research. While some might worry that AI could replace mathematicians, the consensus is that tools like AlphaTensor will instead serve as aids, guiding mathematicians towards new insights. This was demonstrated when two mathematicians in Austria used AlphaTensor's algorithm as a starting point to further optimize matrix multiplication for 5x5 matrices. The collaboration between AI and mathematicians represents an exciting frontier, with the potential to empower individuals to achieve more in their research. The story of AlphaTensor and matrix multiplication is a testament to the potential of human and artificial intelligence working together to push the boundaries of knowledge.

Mindmap

Keywords

💡Matrix Multiplication

💡Efficient Algorithms

💡Strassen's Algorithm

💡DeepMind

💡AlphaGo and AlphaTensor

💡Reinforcement Learning

💡Tensor Decomposition

💡Rank-1 Tensors

💡Modulo-2 Multiplication

💡Machine Learning Techniques

💡Collaboration between AI and Mathematicians

Highlights

Matrix multiplication is a fundamental operation in mathematics, crucial for fields like computer graphics, neural networks, and quantum physics.

Efficient matrix multiplication methods are sought to solve larger problems in a reasonable time.

The traditional method of multiplying two N by N matrices requires N-cubed steps, which becomes unwieldy with larger matrices.

Volker Strassen's algorithm reduced the number of multiplication steps for 2x2 matrices from eight to seven, offering significant computational savings for larger matrices.

Shmuel Winograd proved that no algorithm could use six or fewer multiplications for 2x2 matrices, making Strassen's algorithm the best known solution for half a century.

DeepMind's AI lab discovered a new algorithm that beats Strassen's for multiplying two 4x4 matrices with elements of zero or one.

The new algorithm allows for even faster multiplication of large matrices by breaking them into 4x4 matrices instead of 2x2.

DeepMind's AlphaTensor uses reinforcement learning to discover more efficient matrix multiplication algorithms.

AlphaTensor's approach involves playing a 'game' where it decomposes a 3D tensor into rank-1 tensors to find the most efficient algorithm.

AlphaTensor's algorithm for 4x4 matrix multiplication with modulo-2 elements uses only 47 multiplications, breaking the 50-year record.

The discovery of new algorithms by AlphaTensor includes ones for 5x5 matrices in modulo-2, showcasing the potential of AI in mathematical research.

AlphaTensor's findings inspired human mathematicians to further refine the 5x5 matrix multiplication algorithm, reducing it from 96 to 95 steps.

The collaboration between AI technology like AlphaTensor and mathematicians is seen as a powerful tool for discovery and intuition guidance in mathematics.

The potential for human and artificial intelligence collaboration is a new frontier being explored for solving complex mathematical problems.

AI programs like AlphaTensor are not expected to replace mathematicians but rather to empower them to achieve more.

The use of reinforcement learning in mathematical research is a significant innovation in the field.

Tensor decomposition is a complex process, with the number of possible decompositions for a 3x3 matrix exceeding the number of atoms in the universe.

AlphaTensor's success in discovering new algorithms demonstrates the capability of AI to navigate and learn from vast, formalizable search spaces.

Transcripts

Browse More Related Video

Matrix Multiplication and Associated Properties

2023's Biggest Breakthroughs in Computer Science

Evaluating the Determinant of a Matrix

Matrix equations and systems | Matrices | Precalculus | Khan Academy

AI Invents New Bowling Techniques

Introduction to the matrix | Matrices | Precalculus | Khan Academy

5.0 / 5 (0 votes)

Thanks for rating: