But what is a convolution?

TLDRThis script explores the concept of convolution, a fundamental operation in various fields like probability, image processing, and signal processing. It delves into the visual and intuitive representations of convolutions, showcasing their applications in tasks like blurring images and detecting edges. Additionally, it unveils a clever algorithm that leverages Fourier transforms to compute convolutions efficiently, allowing for faster computations in large-scale operations. The script masterfully explains complex mathematical concepts through engaging visuals and relatable examples, making it accessible and thought-provoking for viewers.

Takeaways

- 😄 Convolution is a fundamental operation that combines two lists or functions in a unique way, beyond simple addition or multiplication.

- 🤔 Convolutions are ubiquitous in various fields, including image processing, probability theory, and differential equations.

- 🎯 Convolutions can be visualized as sliding one list/function over another, taking pairwise products, and summing them up.

- 🔢 Convolving probability distributions helps calculate the chances of various outcomes, like the sum of rolling two dice.

- 📸 In image processing, convolutions with different kernels can produce effects like blurring, edge detection, and sharpening.

- 🧮 Multiplying two polynomials can be interpreted as convolving their coefficients, providing a connection between convolutions and polynomial multiplication.

- ⚡ The Fast Fourier Transform (FFT) algorithm can compute convolutions much faster than the naive method, reducing complexity from O(n^2) to O(n log n).

- 🔄 The FFT algorithm treats the input lists as coefficients of polynomials, evaluates them at special points, and performs an inverse FFT to obtain the convolution.

- 🤯 Even simple integer multiplication can be viewed as a convolution between the digits, implying the existence of a faster algorithm for multiplying large numbers.

- 🌟 Convolutions arise in seemingly unrelated areas, showcasing the importance of recognizing connections between different mathematical concepts.

Q & A

What is a convolution, and why is it important?

-A convolution is a fundamental operation that combines two sequences or functions to produce a new sequence or function. It is important because convolutions are ubiquitous in various fields, including image processing, probability theory, signal processing, and differential equations. They play a crucial role in applications like filtering, edge detection, and feature extraction.

How can convolutions be used in probability theory?

-In probability theory, convolutions can be used to combine probability distributions. For example, when rolling two dice, the probability of obtaining a particular sum can be calculated by convolving the probability distributions of the individual dice. This process involves flipping one distribution, aligning it with the other at different offsets, multiplying the corresponding probabilities, and summing the products.

Can you explain the connection between convolutions and polynomials?

-There is a deep connection between convolutions and polynomials. Multiplying two polynomials is essentially the same operation as convolving their coefficient sequences. This relationship allows for the use of a fast algorithm called the Fast Fourier Transform (FFT) to compute convolutions efficiently, by treating the input sequences as polynomial coefficients and leveraging the redundancies in the computations.

What is the computational complexity of the naive convolution algorithm?

-The naive algorithm for computing the convolution of two sequences of length n has a computational complexity of O(n^2), as it involves performing n^2 multiplications and n^2 additions.

How does the FFT algorithm improve the computational efficiency of convolutions?

-The FFT algorithm reduces the computational complexity of convolutions from O(n^2) to O(n log n). It achieves this by transforming the input sequences into the frequency domain using the Discrete Fourier Transform (DFT), performing a pointwise multiplication, and then applying the inverse DFT to obtain the convolution result. This approach exploits the redundancies in the DFT computations, resulting in a significant performance improvement.

What are some applications of convolutions in image processing?

-Convolutions have various applications in image processing, such as blurring (smoothing), sharpening, edge detection, and feature extraction. By convolving an image with different kernels (small arrays of values), various effects can be achieved, including noise reduction, edge enhancement, and object recognition.

How can convolutions be used for moving averages?

-Convolutions can be used to compute moving averages of a sequence. By convolving the sequence with a kernel consisting of equal weights that sum to 1, the resulting sequence represents a smoothed version of the original data, where each point is the average of neighboring points within the kernel's window.

What is the relationship between convolutions and convolutional neural networks?

-Convolutional Neural Networks (CNNs) are a type of deep learning architecture that extensively uses convolutions for feature extraction and pattern recognition tasks, particularly in computer vision applications. In CNNs, the convolution operation is applied to the input data (e.g., images) using learnable kernels, allowing the network to automatically learn and extract relevant features.

Can you provide an example of a practical application of convolutions?

-One practical application of convolutions is in digital signal processing, where they are used for filtering and denoising signals. For instance, in audio processing, convolving an audio signal with a specific kernel can remove unwanted noise or apply effects like reverberation or equalization.

What is the significance of the flipping operation in the context of convolutions?

-The flipping operation, where one of the input sequences or functions is reversed before convolving, is a fundamental aspect of the mathematical definition of convolutions. It arises naturally in certain contexts, such as probability theory, and is essential for maintaining the mathematical properties and interpretations of convolutions. However, in some applications like image processing, this flipping step may seem unnecessary and can be omitted.

Outlines

🔢 Combining Lists and Functions: Convolutions

This paragraph introduces the concept of convolutions, which are a fundamental way of combining two lists of numbers or functions. It explains how convolutions differ from simple arithmetic operations like addition and multiplication, and how they are ubiquitous in various fields such as image processing, probability theory, and differential equations. The paragraph also previews the visual explanations and insights that will be explored in the video, setting the stage for understanding convolutions.

🎲 Probability and Moving Averages

The paragraph discusses two practical examples where convolutions are useful: calculating probability distributions (such as rolling dice) and computing moving averages for smoothing data. It provides visual representations and mathematical notation to illustrate how convolutions can be used in these contexts. Additionally, it touches on image processing applications like blurring and edge detection, introducing the idea of using different 'kernels' to achieve different effects through convolutions.

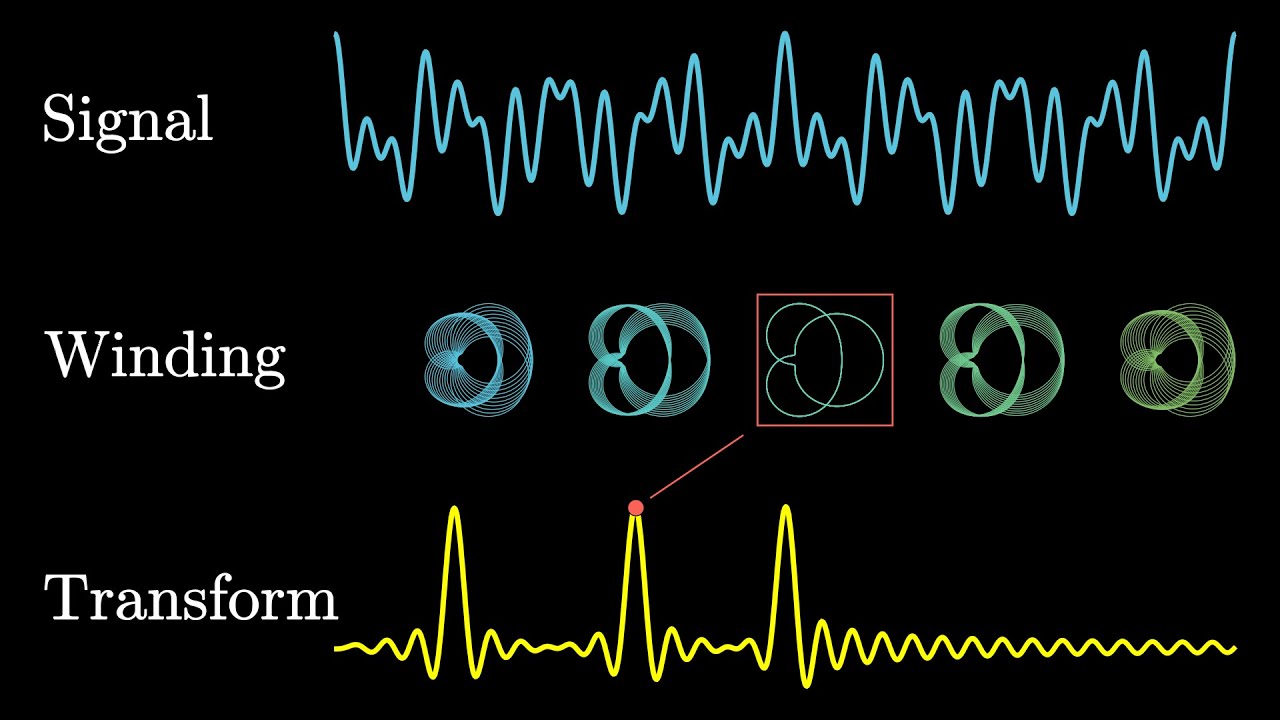

🔄 Polynomial Multiplication and Fast Convolution Algorithms

This paragraph draws a connection between convolutions and polynomial multiplication, showing that expanding the product of two polynomials is essentially the same process as convolving their coefficients. It then explores the idea of using the Fast Fourier Transform (FFT) algorithm to compute convolutions more efficiently, taking advantage of the redundancy in the calculations. The paragraph outlines the algorithm and emphasizes the significant performance improvement it offers over naive convolution methods, especially for large inputs.

📝 The Fast Fourier Transform Algorithm

Building upon the previous paragraph, this section delves deeper into the Fast Fourier Transform (FFT) algorithm and its application to computing convolutions efficiently. It explains the key idea of evaluating polynomials at specially chosen points (roots of unity) and leveraging the resulting redundancy to accelerate the calculations. The paragraph also provides references to additional resources for learning more about FFTs and discrete Fourier transforms.

🧮 Large Integer Multiplication and Further Insights

The final paragraph explores an intriguing connection between convolutions and elementary school multiplication of large integers. It suggests that the FFT-based convolution algorithm can be used to multiply large numbers more efficiently than the traditional method, requiring only O(n log n) operations instead of O(n^2). The paragraph concludes by hinting at the upcoming discussion on continuous convolutions and their applications in probability distributions.

Mindmap

Keywords

💡Convolution

💡Discrete Case

💡Image Processing

💡Probability Distributions

💡Differential Equations

💡Moving Average

💡Kernel

💡Fourier Transform

💡Gaussian Distribution

💡Polynomial Multiplication

Highlights

Convolutions are a fundamental way to combine two lists of numbers or two functions, and they show up in various fields such as image processing, probability theory, and solving differential equations.

Convolving two lists involves taking the first list, flipping the second list, and then aligning them at various offsets, multiplying corresponding terms, and summing the products to get the new sequence.

Convolutions can be interpreted as a way to mix probability distributions, where the resulting sequence represents the probabilities of different sums.

Convolving a data sequence with a small list of weights that sum to 1 can be interpreted as a moving average, providing a smoothed version of the data.

In image processing, convolving an image with different kernels (grids of values) can produce effects like blurring, edge detection, and sharpening.

Multiplying two polynomials is equivalent to convolving the coefficients of the polynomials, and collecting the like terms along the diagonals of the pairwise product table.

There exists a fast algorithm (Fast Fourier Transform) to compute convolutions in O(n log n) time, rather than the naïve O(n^2) time, by treating the input lists as coefficients of polynomials and leveraging the redundancy in evaluating at special points (roots of unity).

The Fast Fourier Transform algorithm is applicable to any context where convolutions are needed, such as adding probability distributions or image processing, providing a significant speedup for large inputs.

Multiplying two large integers can be viewed as a convolution between their digits, and the Fast Fourier Transform algorithm can provide a faster method than the elementary school algorithm for extremely large numbers.

Convolutions are a versatile and powerful operation that arise in various contexts, and their connection to polynomial multiplication leads to a clever and efficient algorithm for computing them.

Unpacking the mathematical definition of convolutions and visualizing the process can reveal its beauty and natural applications, as demonstrated in the probability example.

In the context of image processing, different kernels (grids of values) can be convolved with an image to achieve effects like blurring, edge detection, and sharpening, with each kernel producing a different result.

Convolutional neural networks leverage the idea of learning appropriate kernels from data to detect desired features in images.

The notion of flipping the second array in a convolution, inherited from the pure math context, may seem counterintuitive in computer science contexts but is essential for certain applications.

Convolutions can be viewed as a way to multiply polynomials by first extracting coefficients, convolving them, and then collecting like terms along diagonals of the pairwise product table.

Transcripts

Browse More Related Video

5.0 / 5 (0 votes)

Thanks for rating: