Convolutional Neural Networks Explained (CNN Visualized)

TLDR

This video script delves into the intricacies of convolutional neural networks (CNNs), highlighting their architecture and functionality in image recognition tasks. It begins with an overview of the deep learning series, then focuses on CNNs, using number recognition as a practical example. The script explains the input process, the role of kernels in feature detection, and the application of pooling layers for downsampling. It emphasizes the progression from simple feature detection to complex pattern recognition, and how CNNs build abstraction. The script also touches on the limitations of CNNs in tasks like natural language processing, suggesting further exploration in related courses for a comprehensive understanding of deep learning.

Takeaways

- 🌟 Deep learning involves artificial neural networks that mimic the structure of biological neural networks.

- 🔍 The focus of the video is on convolutional neural networks (CNNs), a type of neural network used for image recognition tasks.

- 📷 Images are represented as matrices of pixel values, with each image typically having three color channels (RGB).

- 💡 The convolutional layer in CNNs uses kernels to perform feature detection by taking the dot product of the kernel and each patch of the input matrix it slides over.

- 🔍 Feature maps are generated as a result of the convolution operation, highlighting different aspects of the input image such as edges and shapes.

- 📊 Pooling layers downsample feature maps, reducing spatial dimensions and helping to reduce overfitting while retaining important features.

- 🔄 Multiple convolutional and pooling layers are used to build up abstraction, moving from simple to complex feature detection.

- 🧠 The fully connected layers at the end of a CNN classify the image based on the high-level features extracted by the convolutional layers.

- 🤖 CNNs are not suitable for tasks like natural language processing, which require different types of networks designed for sequential data.

- 📚 Further learning resources are available for those interested in a deeper understanding of deep learning and its various applications.

Q & A

What is the main focus of the video?

-The main focus of the video is to introduce and discuss the architecture and functionality of convolutional neural networks (CNNs), using number recognition as a common example.

How does the structure of a feedforward network differ from that of a convolutional network in terms of abstraction layers?

-In a feedforward network, layers of abstraction still build on one another, but the intermediate representations look close to random to the human eye. Convolutional networks make this build-up easier to see, because they are designed to detect features hierarchically.

What is a kernel in the context of convolutional neural networks?

-A kernel in CNNs is a small matrix used for feature detection through a convolution operation. It slides across the input image; at each position, the kernel is multiplied element-wise with the image patch beneath it and the products are summed (a dot product). Each result is stored in a new matrix called the feature map.
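The sliding dot product described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the video's code: the function name `convolve2d`, the 4x4 image, and the hand-picked vertical-edge kernel are all assumptions made for the example (in a trained network the kernel values are learned). Like most deep learning libraries, the sketch does not flip the kernel, so strictly speaking it computes a cross-correlation.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Dot product of the kernel with the image patch it currently covers.
            total = sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            row.append(total)
        feature_map.append(row)
    return feature_map


# Illustrative 4x4 image: dark left half (0), bright right half (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hypothetical vertical-edge detector: responds where brightness rises left to right.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, kernel)
for row in feature_map:
    print(row)
```

The feature map is non-zero only in the middle column, which is where the dark-to-bright edge sits in the input.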

What is the primary purpose of pooling layers in a CNN?

-The primary purpose of pooling layers is to downsample the feature maps, retaining the most important parts and discarding the rest. This helps reduce overfitting and speeds up calculations in later layers due to the reduced spatial size of the image.
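As a rough sketch of the downsampling step, the following plain-Python function applies non-overlapping max pooling. The name `max_pool2d`, the 2x2 window, and the sample values are assumptions chosen for illustration; the video does not specify them.

```python
def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size window."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [
                feature_map[i + di][j + dj]
                for di in range(size)
                for dj in range(size)
            ]
            row.append(max(window))
        pooled.append(row)
    return pooled


# Made-up 4x4 feature map, pooled down to 2x2.
feature_map = [
    [1, 3, 2, 0],
    [4, 6, 1, 1],
    [0, 2, 9, 5],
    [1, 1, 3, 7],
]

pooled = max_pool2d(feature_map)
for row in pooled:
    print(row)
```

Each output value summarizes four input values, so the next layer has a quarter as many positions to process.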

How do convolutional layers contribute to feature extraction in CNNs?

-Convolutional layers contribute to feature extraction by using multiple kernels to detect simple patterns such as edges, corners, and shapes. These low-level features are then used to build more complex, high-level features in subsequent layers, allowing the network to recognize objects and structures within images.

What is the role of fully connected layers in a CNN?

-The role of fully connected layers in a CNN is to classify the high-level abstracted features extracted from the input. These layers are similar to those in a feedforward network, but they operate on the feature maps produced by the convolutional and pooling layers rather than raw input pixels.
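To make the classification step concrete, here is a minimal sketch of one fully connected layer followed by a softmax. Everything specific in it is an assumption for illustration: the helper names `dense` and `softmax`, the two tiny 2x2 feature maps, the three output classes (the digit example in the video would use ten), and the hand-written weights, which a real network would learn.

```python
import math


def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum of all inputs per output unit."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    largest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Two hypothetical 2x2 feature maps coming out of the last pooling layer.
feature_maps = [
    [[1.0, 0.0], [0.0, 2.0]],
    [[0.5, 0.5], [0.0, 0.0]],
]

# Flatten all feature maps into a single input vector of 8 values.
flat = [value for fmap in feature_maps for row in fmap for value in row]

# Illustrative weights for 3 classes (one row of 8 weights per class).
weights = [
    [0, 0, 0, 0, 0, 0, 0, 0],
    [1, 0, 0, 1, 0, 0, 0, 0],
    [0, 1, 1, 0, 0, 0, 1, 1],
]
biases = [0.0, 0.0, 0.0]

probabilities = softmax(dense(flat, weights, biases))
predicted_class = probabilities.index(max(probabilities))
print(predicted_class, probabilities)
```

The layer sees only the flattened feature values, not the raw pixels, which is the distinction the answer above draws.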

Why are convolutional neural networks particularly suited for image classification tasks?

-CNNs are well-suited for image classification tasks because they are designed to automatically and adaptively learn spatial hierarchies of features from the input images. This allows them to detect complex patterns and structures, making them highly effective for tasks related to image recognition and classification.

What are some limitations of convolutional neural networks?

-While CNNs excel at image-related tasks, they do not perform well for tasks such as natural language processing, which require memory and sequential data handling. Other network architectures, such as recurrent neural networks, are better suited for these types of tasks.

How does the process of backpropagation and gradient descent affect the parameters in a convolutional network?

-During backpropagation and gradient descent, the weight and bias values in a convolutional network are adjusted to minimize the error. The kernel coefficients in the convolutional layers are learned in the same way. Standard pooling layers such as max pooling have no trainable parameters; gradients simply pass back through them.

What are some hyper-parameters that can be adjusted in a convolutional neural network?

-Some hyper-parameters that can be adjusted in a CNN include kernel size, stride, dilation rate, and padding, along with architectural choices such as the use of transposed convolutions. These settings can significantly affect the output and performance of the network.
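One way to see how these hyper-parameters affect the output is to compute the feature map size they produce. The helper below is a sketch using the standard output-size arithmetic for a convolution along one dimension; the function name `conv_output_size` and the 28-pixel input are assumptions for illustration.

```python
def conv_output_size(n, kernel_size, stride=1, padding=0, dilation=1):
    """Output length along one dimension for an input of length n."""
    # A dilated kernel covers a wider span than its number of coefficients.
    effective_kernel = dilation * (kernel_size - 1) + 1
    return (n + 2 * padding - effective_kernel) // stride + 1


# Assumed 28-pixel-wide input, varying one hyper-parameter at a time.
plain = conv_output_size(28, kernel_size=5)                       # no padding
same = conv_output_size(28, kernel_size=3, padding=1)             # size preserved
strided = conv_output_size(28, kernel_size=3, stride=2, padding=1)
dilated = conv_output_size(28, kernel_size=3, dilation=2)

print(plain, same, strided, dilated)
```

A larger kernel or dilation shrinks the output, padding restores it, and a stride of 2 roughly halves it.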

What is the significance of the non-linearity function, such as ReLU, in a convolutional neural network?

-The non-linearity function, like ReLU (Rectified Linear Unit), is crucial in a CNN as it introduces non-linearity into the network, allowing it to learn more complex patterns and making the feature maps more adaptable to real-world data.
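ReLU itself is simple: it keeps positive values and replaces negative ones with zero. A minimal sketch, with the function name `relu` and the sample feature map chosen purely for illustration:

```python
def relu(feature_map):
    """Apply ReLU element-wise: negative activations become 0, others pass through."""
    return [[max(0, value) for value in row] for row in feature_map]


# Made-up feature map containing both positive and negative responses.
feature_map = [
    [-2, 0, 3],
    [1, -5, 4],
]

activated = relu(feature_map)
print(activated)
```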

Outlines

🤖 Introduction to Convolutional Neural Networks (CNNs)

This paragraph introduces the concept of Convolutional Neural Networks (CNNs) as an extension of the deep learning series. It discusses the transition from feedforward networks to CNNs, emphasizing the latter's ability to detect layers of abstraction more visibly. The focus is on the number recognition example, which is used to illustrate the workings of CNNs. The paragraph sets the stage for a detailed explanation of the structure and function of CNNs, starting with the input layer that represents individual pixels of an image and the output layer that classifies these patterns as numbers zero to nine. It also touches on the role of convolutional and pooling layers in the network.

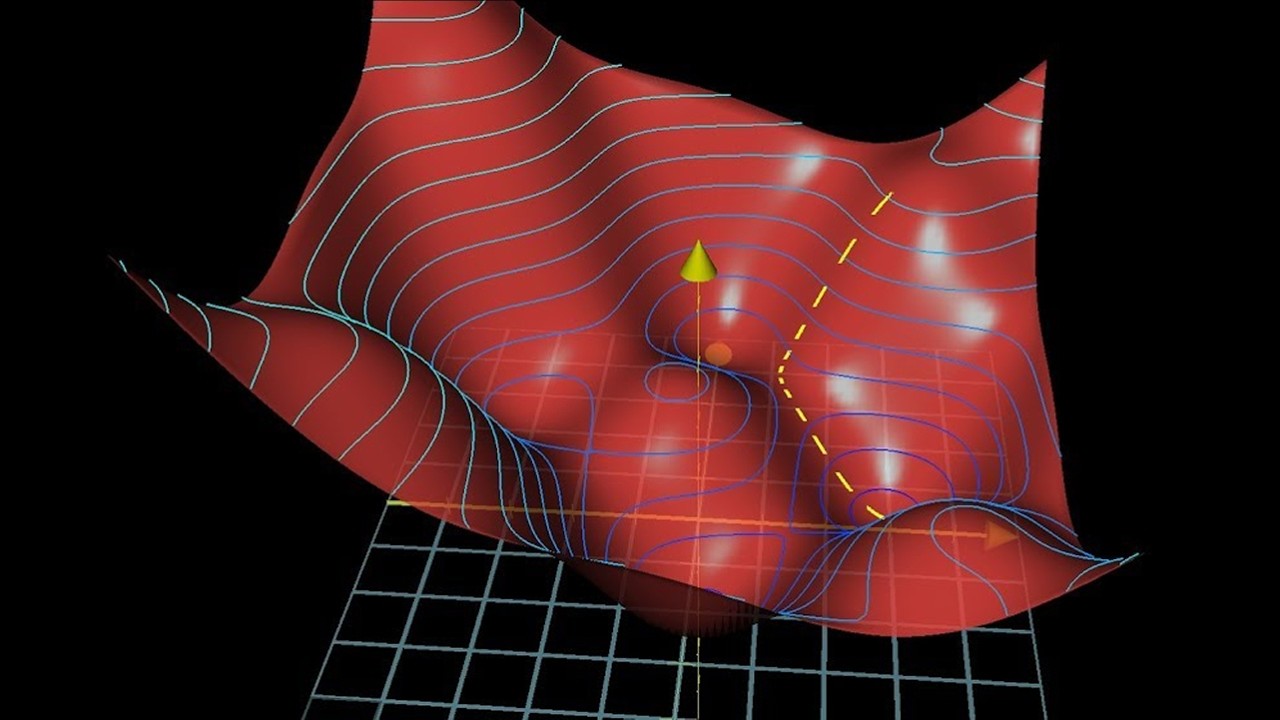

🌟 Understanding the Working of Convolutional and Pooling Layers

This paragraph delves into the specifics of how convolutional and pooling layers function within a CNN. It explains the convolutional operation using kernels that act as feature detectors, highlighting how these kernels can be initialized to identify various patterns in an image. The paragraph also discusses the use of max pooling to downsample feature maps, retaining important information while reducing spatial size to combat overfitting and speed up calculations. The progression from simple feature detection in the initial layers to more complex pattern recognition in deeper layers is outlined, illustrating the concept of abstraction in CNNs.

🚀 Classification and Further Exploration of Deep Learning

The final paragraph discusses the role of fully connected layers in classifying high-level abstracted features extracted by the CNN. It explains how these layers, similar to those in a feedforward network, use the compressed feature maps for accurate classification. The paragraph also acknowledges the limitations of CNNs in tasks like natural language processing, which require memory, and suggests that future videos will cover networks designed for such tasks. The speaker encourages viewers to explore deep learning further through resources like brilliant.org and to support the channel through Patreon or YouTube membership.

Keywords

💡Deep Learning

💡Convolutional Neural Network (CNN)

💡Feedforward Network

💡Kernel

💡Feature Map

💡Pooling Layer

💡ReLU (Rectified Linear Unit)

💡Backpropagation

💡Fully Connected Layers

💡Hyper-parameters

💡Natural Language Processing (NLP)

Highlights

The video discusses the architecture and functionality of convolutional neural networks (CNNs), a popular type of neural network used in image recognition and other computer vision tasks.

The focus of the video is to initiate discussion on CNNs, which are different from the previously discussed feedforward networks.

A common example used in the video to illustrate CNNs is number recognition, highlighting the network's ability to identify and classify handwritten digits.

The video explains that unlike feedforward networks, CNNs allow us to see layers of abstraction building up, making the network's operations more interpretable.

The structure of the network discussed in the video includes two convolutional layers, two pooling layers, and two fully connected layers.
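A short shape trace can make this layer stack easier to picture. The sketch below follows the layer order stated above, but every size in it is an assumption, since the summary does not give them: a 28x28 single-channel input, 5x5 kernels without padding, 2x2 pooling, 8 and 16 kernels in the two convolutional layers, and 64 units in the first fully connected layer. Only the 10 outputs (digits zero to nine) come from the text.

```python
def conv_size(n, kernel_size):
    """Side length after a convolution with no padding and stride 1."""
    return n - kernel_size + 1


def pool_size(n, window):
    """Side length after non-overlapping pooling."""
    return n // window


side = 28  # assumed input width and height
shapes = [("input", (1, side, side))]

# Two conv + pool stages; the channel counts (8, 16) are illustrative.
for index, channels in enumerate((8, 16), start=1):
    side = conv_size(side, 5)
    shapes.append((f"conv{index}", (channels, side, side)))
    side = pool_size(side, 2)
    shapes.append((f"pool{index}", (channels, side, side)))

# Flatten the last feature maps before the fully connected layers.
flat = 16 * side * side
shapes.append(("fc1", (64,)))   # hidden size is an assumption
shapes.append(("fc2", (10,)))   # one output per digit, zero to nine

for name, shape in shapes:
    print(f"{name:6s} {shape}")
print("flattened features:", flat)
```

Under these assumptions the spatial size falls from 28 to 4 while the number of feature maps grows, matching the idea of extracting high-level features at low spatial resolution.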

The input for CNNs is the individual pixels of an image, and these pixels are stored as matrices representing different channels such as RGB.

The convolutional layer in CNNs uses a mathematical operation called convolution, which involves a kernel moving across the input image to detect features.

Kernels in CNNs can be thought of as mini matrices that, when applied with specific values, can transform an input image to find various patterns.

The video mentions that the first layer of a CNN detects simple patterns like horizontal lines, vertical lines, and corners using multiple kernels.

A non-linearity function, such as ReLU, is applied to the feature maps produced by the convolutional layer to make the network more adaptable to real-world data.

Pooling layers in CNNs are used to downsample feature maps, retaining important parts and discarding the rest, which helps reduce overfitting and speeds up calculations.

Max pooling is a type of pooling used in CNNs where the largest pixel value in a region is saved, helping to retain key information while reducing spatial size.

The video explains that in later layers of a CNN, more complex kernels are used to detect shapes, objects, and other complex structures by building on previously detected simple features.

The process of the convolutional and pooling layers is referred to as feature extraction, aiming to detect high-level features with as low spatial resolution as possible.

The classifier part of a CNN consists of fully connected layers that use the high-level abstracted features from the input to classify them.

The video acknowledges that many details and hyper-parameters such as kernel size, stride, and pooling types have been generalized in the discussion.

The video concludes by noting that while CNNs excel at image classification tasks, they are not suitable for tasks like natural language processing, which require memory.

The video encourages viewers to learn more about deep learning and stay sharp in a world where automation through algorithms is increasingly replacing jobs.
