Video 22 - Raising & Lowering Indexes

TLDR

This video tutorial covers raising and lowering indices in tensor calculus. It explains how the metric tensor serves as the fundamental conversion factor between the covariant and contravariant components of vectors, and between covariant and contravariant basis vectors. Index manipulation is demonstrated on tensors of various ranks, highlighting the importance of convention in tensor notation. The video also covers how symmetry affects these operations and introduces the 'flip-flop' technique for dummy indices, providing a comprehensive guide to tensor operations.

Takeaways

- 📚 The video discusses the concept of raising and lowering indices in tensor calculus, building on the understanding of contraction.

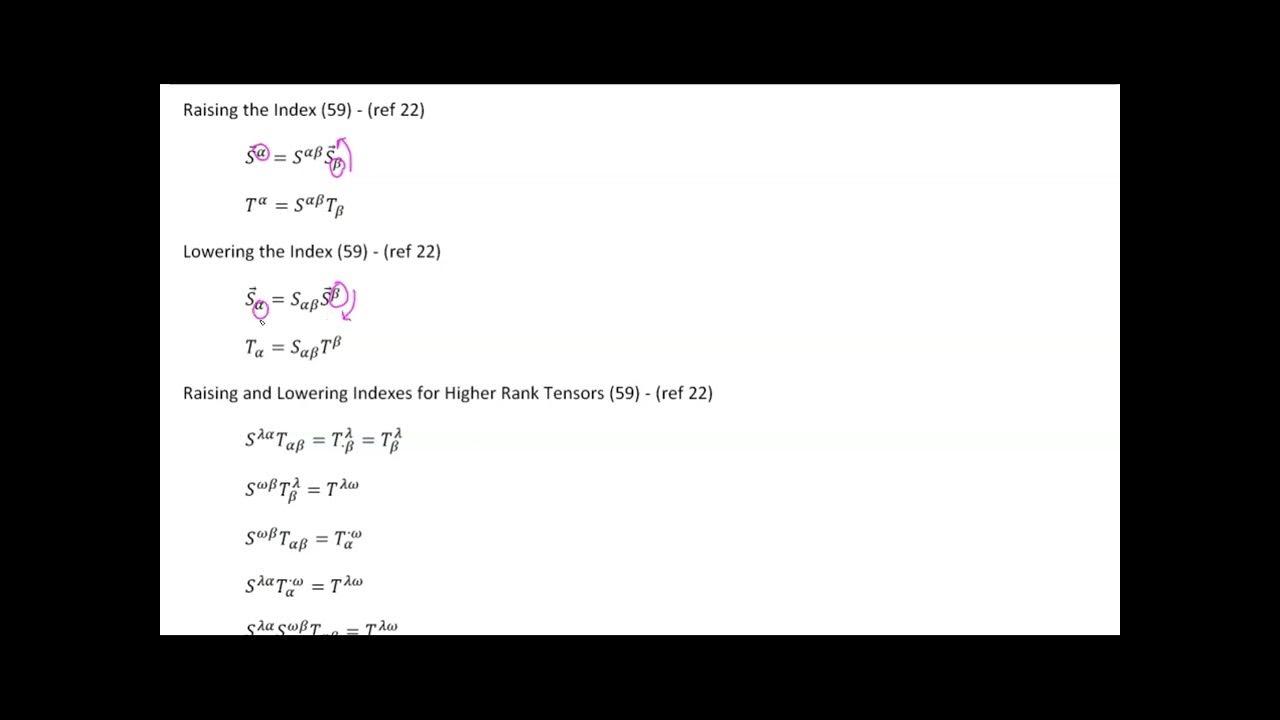

- 🔄 Raising an index uses the contravariant metric tensor to convert a covariant basis vector into a contravariant one, $\mathbf{e}^i = g^{ij}\mathbf{e}_j$; lowering an index uses the covariant metric tensor to convert back, $\mathbf{e}_i = g_{ij}\mathbf{e}^j$.

- 🧠 The process of index manipulation is crucial for transitioning between covariant and contravariant components of vectors and tensors.

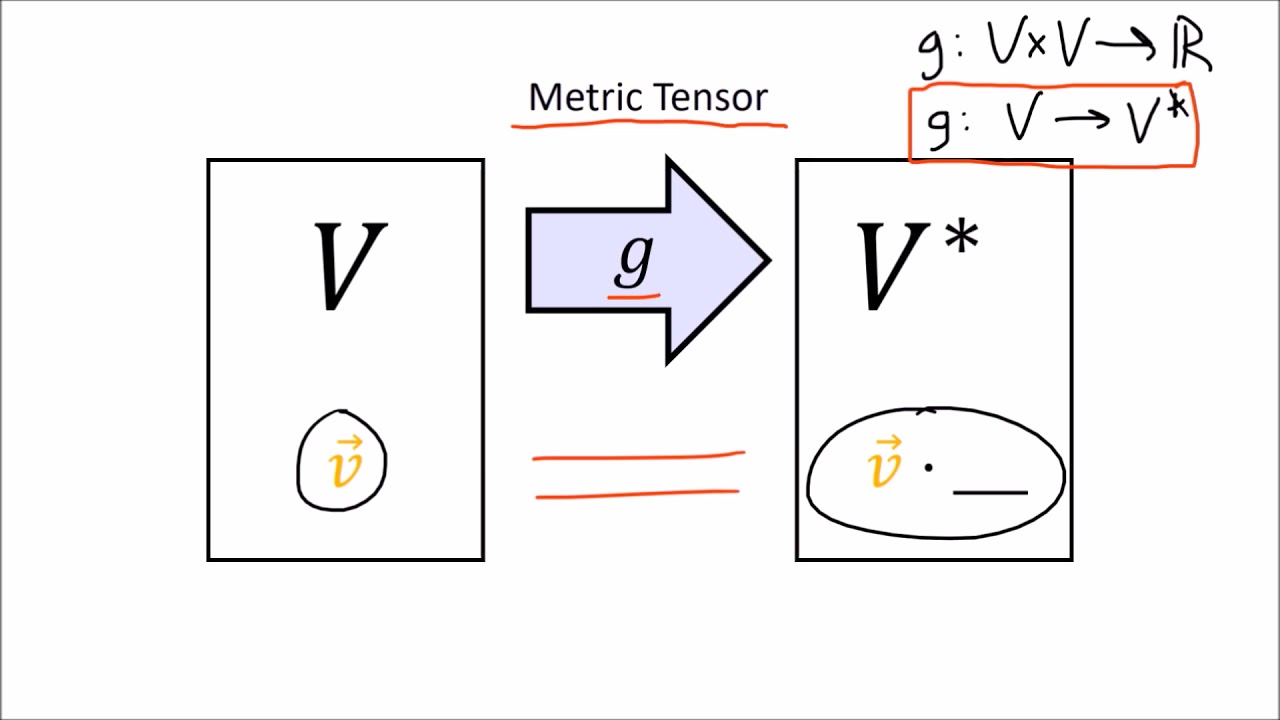

- 📈 The script explains that the metric tensor acts as a fundamental conversion factor between covariant and contravariant components.

- 🔑 The video demonstrates that the covariant and contravariant components of a vector are equivalent descriptions: they carry the same information about the vector, and either set can be obtained from the other using the metric tensor.

- 🤔 The script introduces the 'flip-flop' for dummy indices in contractions: the upper and lower positions of a contracted pair of dummy indices can be interchanged without changing the result, as in $A_i B^i = A^i B_i$.

- 📉 For tensors that are not symmetric, it matters which index is raised: raising the first index of $T_{ij}$ generally gives a different mixed tensor than raising the second.

- 📚 The script provides an in-depth example involving a sixth-rank tensor to illustrate the process of index lowering.

- 📝 Conventions for the placement of indices in tensor expressions are discussed, emphasizing that upper indices are typically placed to the left of lower indices.

- 🔍 An exception is noted for symmetric second-rank tensors: because $T_{ij} = T_{ji}$, it does not matter which index is raised, so the position of the raised index does not need to be marked.

- 🔬 The video concludes by reinforcing the utility of these index manipulation techniques for working with tensors of higher ranks in various mathematical and physical contexts.
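
The basis-vector statements above can be checked numerically. The following is a minimal sketch, assuming NumPy and a hypothetical skewed 2D basis chosen only for illustration: it builds the covariant metric $g_{ij} = \mathbf{e}_i \cdot \mathbf{e}_j$, inverts it to get $g^{ij}$, raises the basis index with $\mathbf{e}^i = g^{ij}\mathbf{e}_j$, and lowers it again with $\mathbf{e}_i = g_{ij}\mathbf{e}^j$.

```python
import numpy as np

# Hypothetical covariant basis in the plane (rows are e_1, e_2); deliberately not orthonormal.
e_cov = np.array([[1.0, 0.0],
                  [1.0, 2.0]])

# Covariant metric tensor: g_ij = e_i . e_j
g_cov = e_cov @ e_cov.T
# Contravariant metric tensor: the matrix inverse of g_ij
g_con = np.linalg.inv(g_cov)

# Raise the index: e^i = g^ij e_j (j is the dummy index summed over)
e_con = np.einsum("ij,jk->ik", g_con, e_cov)
# Lower it again: e_i = g_ij e^j
e_back = np.einsum("ij,jk->ik", g_cov, e_con)

# Defining property of the contravariant basis: e^i . e_j = delta^i_j
print(np.allclose(e_con @ e_cov.T, np.eye(2)))
# Raising followed by lowering recovers the original covariant basis
print(np.allclose(e_back, e_cov))
```

Here `np.einsum` does the contraction over the dummy index; it does not know which indices are upper or lower, so the heights are tracked only in the comments.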

Q & A

What is the purpose of raising and lowering indices in tensor calculus?

-Raising and lowering indices in tensor calculus allows for the conversion between covariant and contravariant components of vectors and tensors, providing flexibility in mathematical expressions and calculations.

How does the contravariant metric tensor relate to the covariant metric tensor?

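-The contravariant metric tensor is the matrix inverse of the covariant metric tensor. Contracting one with the other over a single index gives the Kronecker delta, $g^{ik} g_{kj} = \delta^i_j$ (the identity matrix), so raising an index and then lowering it again returns the original expression.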
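
A small numerical check of this inverse relationship, as a sketch assuming NumPy and using the familiar polar-coordinate metric $g_{ij} = \mathrm{diag}(1, r^2)$ evaluated at a sample radius (the value $r = 2$ is an arbitrary choice for illustration):

```python
import numpy as np

r = 2.0  # arbitrary sample point
# Covariant metric tensor of the plane in polar coordinates (r, theta)
g_cov = np.diag([1.0, r**2])
# Contravariant metric tensor as the matrix inverse
g_con = np.linalg.inv(g_cov)

# Contracting over one index gives the Kronecker delta: g^ik g_kj = delta^i_j
delta = np.einsum("ik,kj->ij", g_con, g_cov)

print(g_con)                          # diag(1, 1/r^2)
print(np.allclose(delta, np.eye(2)))  # the product is the identity matrix
```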

What is the significance of the Kronecker delta in the context of raising and lowering indices?

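-The Kronecker delta appears when the covariant and contravariant metric tensors are contracted with each other, $g^{ik} g_{kj} = \delta^i_j$. Contracting the Kronecker delta with a tensor simply replaces one index with another (for example, $\delta^i_j A^j = A^i$). This is how it 'absorbs' an index during a contraction, and it is why raising an index and then lowering it again leaves the expression unchanged.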
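
The index-substitution behavior of the Kronecker delta can be seen directly with `np.einsum`. This is a minimal sketch assuming NumPy; the vector, the tensor and the metric are random placeholders (the metric is made symmetric positive definite so that it is invertible):

```python
import numpy as np

rng = np.random.default_rng(0)
n = 3
delta = np.eye(n)  # Kronecker delta as an n x n identity array

# Placeholder components of a vector A^j and a mixed rank-2 tensor T^k_j
A = rng.standard_normal(n)
T = rng.standard_normal((n, n))

# delta^i_j A^j = A^i : the delta only renames j to i
print(np.allclose(np.einsum("ij,j->i", delta, A), A))
# delta^i_k T^k_j = T^i_j
print(np.allclose(np.einsum("ik,kj->ij", delta, T), T))

# Lowering and then raising: g^ik (g_kj A^j) = delta^i_j A^j = A^i
M = rng.standard_normal((n, n))
g_cov = M @ M.T + n * np.eye(n)  # symmetric positive-definite placeholder metric
g_con = np.linalg.inv(g_cov)
A_low = np.einsum("kj,j->k", g_cov, A)
print(np.allclose(np.einsum("ik,k->i", g_con, A_low), A))
```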

Can you explain the concept of a dummy index in tensor contraction?

-A dummy index is an index that appears twice in a term, once as an upper and once as a lower index, and is therefore summed over. It does not appear among the free indices of the result, so it can be renamed without affecting the outcome.
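
In `np.einsum` notation a dummy index is a letter that appears in the inputs but not in the output, while a free index is one that survives to the output. A minimal sketch, assuming NumPy and placeholder values, showing that renaming the dummy index does not change the result (einsum does not distinguish upper from lower indices, so heights are noted only in the comments):

```python
import numpy as np

# Placeholder components: a rank-2 tensor T_ij and a vector A^j
T = np.array([[1.0, 2.0],
              [3.0, 4.0]])
A = np.array([5.0, 6.0])

# B_i = T_ij A^j : i is free (kept in the output), j is a dummy (summed over)
B_j = np.einsum("ij,j->i", T, A)
# The same contraction with the dummy index renamed from j to k
B_k = np.einsum("ik,k->i", T, A)

print(B_j)                        # [17. 39.]
print(np.array_equal(B_j, B_k))   # True
```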

What is the flip-flop operation in the context of tensor contractions?

-The flip-flop operation refers to interchanging the upper and lower positions of a pair of dummy indices in a contraction, as in $A_i B^i = A^i B_i$, without changing the result. This is particularly useful when simplifying or rearranging tensor expressions.
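
Taking the flip-flop to mean moving a contracted pair of dummy indices between the upper and lower positions, $A_i B^i = A^i B_i$, here is a minimal numerical check assuming NumPy, a placeholder symmetric metric, and placeholder components:

```python
import numpy as np

# Placeholder symmetric, invertible covariant metric g_ij
g_cov = np.array([[2.0, 1.0],
                  [1.0, 3.0]])
g_con = np.linalg.inv(g_cov)

# Placeholder covariant components A_i and contravariant components B^i
A_low = np.array([1.0, -2.0])
B_up = np.array([4.0, 0.5])

# Move each index to the other position with the metric
A_up = g_con @ A_low   # A^i = g^ij A_j
B_low = g_cov @ B_up   # B_i = g_ij B^j

lhs = A_low @ B_up     # A_i B^i
rhs = A_up @ B_low     # A^i B_i
print(lhs, np.isclose(lhs, rhs))
```

The two sides agree because $A^i B_i = g^{ij} A_j \, g_{ik} B^k = A_j \delta^j_k B^k = A_k B^k$, using the symmetry of the metric.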

How does the metric tensor act as a conversion factor between covariant and contravariant components?

-The metric tensor serves as the fundamental tensor connecting covariant and contravariant components: the covariant metric tensor lowers an index, $A_i = g_{ij} A^j$, and the contravariant metric tensor raises it, $A^i = g^{ij} A_j$.
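
As a concrete sketch, assuming NumPy and again using the polar-coordinate metric $g_{ij} = \mathrm{diag}(1, r^2)$ at an arbitrary sample radius, lowering the contravariant components of a placeholder vector and then raising the result recovers the original components:

```python
import numpy as np

r = 3.0  # arbitrary sample radius
g_cov = np.diag([1.0, r**2])   # g_ij in polar coordinates (r, theta)
g_con = np.linalg.inv(g_cov)   # g^ij

A_up = np.array([2.0, 0.5])    # placeholder contravariant components A^r, A^theta

# Lower the index: A_i = g_ij A^j
A_low = np.einsum("ij,j->i", g_cov, A_up)
# Raise it again: A^i = g^ij A_j
A_up_again = np.einsum("ij,j->i", g_con, A_low)

print(A_low)  # A_r = 2, A_theta = r^2 * 0.5 = 4.5
print(np.allclose(A_up_again, A_up))
```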

What is the difference between a covariant and a contravariant basis vector?

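-Covariant and contravariant basis vectors are dual to each other, $\mathbf{e}^i \cdot \mathbf{e}_j = \delta^i_j$. The covariant metric tensor is formed from the covariant basis vectors, $g_{ij} = \mathbf{e}_i \cdot \mathbf{e}_j$, and the contravariant metric tensor from the contravariant ones, $g^{ij} = \mathbf{e}^i \cdot \mathbf{e}^j$. Either kind of basis vector can be converted into the other by raising or lowering its index with the metric tensor.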
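
The following sketch, assuming NumPy and a hypothetical 2D basis, obtains the contravariant basis from the duality condition $\mathbf{e}^i \cdot \mathbf{e}_j = \delta^i_j$ and confirms that the matrix of dot products $\mathbf{e}^i \cdot \mathbf{e}^j$ equals the inverse of $g_{ij} = \mathbf{e}_i \cdot \mathbf{e}_j$:

```python
import numpy as np

# Hypothetical covariant basis vectors as rows: e_1, e_2
e_cov = np.array([[2.0, 0.0],
                  [1.0, 1.0]])

# Dual (contravariant) basis from e^i . e_j = delta^i_j:
# with rows e^i this reads e_con @ e_cov.T = I, so e_con = inv(e_cov.T)
e_con = np.linalg.inv(e_cov.T)

g_cov = e_cov @ e_cov.T   # g_ij = e_i . e_j
g_con = e_con @ e_con.T   # g^ij = e^i . e^j

print(np.allclose(e_con @ e_cov.T, np.eye(2)))   # duality condition holds
print(np.allclose(g_con, np.linalg.inv(g_cov)))  # g^ij is the inverse of g_ij
```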

Why is the order of raising indices important when dealing with non-symmetric tensors?

-It matters which index is raised because, for a non-symmetric tensor, raising the first index and raising the second index generally produce different mixed tensors: $T^{i}{}_{j} = g^{ik} T_{kj}$ and $T_{j}{}^{i} = g^{ik} T_{jk}$ are not equal in general. Only for symmetric tensors do the two results coincide, so the position of the raised index can be left unmarked.
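
The dependence on which index is raised can be checked numerically. A minimal sketch, assuming NumPy, a placeholder metric, and placeholder tensors: both mixed forms are stored with the same layout (row = upper index $i$, column = lower index $j$) so they can be compared entry by entry.

```python
import numpy as np

# Placeholder symmetric, invertible metric g_ij and its inverse g^ij
g_cov = np.array([[1.0, 0.5],
                  [0.5, 2.0]])
g_con = np.linalg.inv(g_cov)

def raise_first(T):
    # T^i_.j = g^ik T_kj  (row = upper index i, column = lower index j)
    return np.einsum("ik,kj->ij", g_con, T)

def raise_second(T):
    # T_j^.i = T_jk g^ki, stored with the same layout: row = upper i, column = lower j
    return np.einsum("jk,ki->ij", T, g_con)

T = np.array([[1.0, 2.0],   # non-symmetric placeholder tensor T_ij
              [0.0, 3.0]])
S = T + T.T                 # symmetric placeholder tensor S_ij

print(np.allclose(raise_first(T), raise_second(T)))  # False for this T
print(np.allclose(raise_first(S), raise_second(S)))  # True: symmetry makes them equal
```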

How can you represent a vector using its covariant and contravariant components?

-A vector can be written either as its contravariant components times the covariant basis vectors, $\mathbf{A} = A^i \mathbf{e}_i$, or as its covariant components times the contravariant basis vectors, $\mathbf{A} = A_i \mathbf{e}^i$. Both representations carry the same information about the vector.
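
Both expansions can be checked against each other. A sketch assuming NumPy and a hypothetical 2D basis: the contravariant components are read off as $A^i = \mathbf{A} \cdot \mathbf{e}^i$, the covariant ones as $A_i = \mathbf{A} \cdot \mathbf{e}_i$, and each set, paired with the opposite kind of basis vector, rebuilds the same vector.

```python
import numpy as np

# Hypothetical covariant basis (rows e_1, e_2) and its dual contravariant basis
e_cov = np.array([[1.0, 0.0],
                  [1.0, 2.0]])
e_con = np.linalg.inv(e_cov.T)   # rows e^1, e^2 satisfy e^i . e_j = delta^i_j

v = np.array([3.0, 4.0])         # an arbitrary vector in Cartesian form

A_up = e_con @ v                 # contravariant components A^i = v . e^i
A_low = e_cov @ v                # covariant components     A_i = v . e_i

v_from_up = A_up @ e_cov         # A^i e_i
v_from_low = A_low @ e_con       # A_i e^i

print(np.allclose(v_from_up, v), np.allclose(v_from_low, v))
```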

What is the convention for placing upper and lower indices in tensor expressions?

-The convention is to place upper indices to the left of lower indices in tensor expressions. This helps in understanding the structure of the tensor and the positions of the indices after raising or lowering operations.

Outlines

📚 Introduction to Raising and Lowering Indexes in Tensor Calculus

This paragraph introduces the concept of raising and lowering indexes in tensor calculus, building upon the understanding of contraction. It explains how the contravariant metric tensor is defined as the inverse of the covariant metric tensor and how these interact with basis vectors to form inner products. The process of altering the meaning of an index from free to dummy and vice versa is discussed, leading to the derivation of expressions that are fundamental to understanding the operations of raising and lowering indexes.

🔄 Operations of Index Raising and Lowering with Basis Vectors

The second paragraph delves into the operations of index raising and lowering, specifically with basis vectors. It demonstrates how to convert between covariant and contravariant basis vectors using the metric tensor. The paragraph also illustrates the process of raising and lowering indexes with scalar components of a vector, showing that these operations are inverses of each other and can be applied to both basis vectors and their scalar components.

🔗 Application of Index Operations to Higher Rank Tensors

This section extends the concept of index raising and lowering to higher rank tensors. It explains how contracting a tensor with the metric tensor raises or lowers one of its indexes, and how the choice of which index is raised can affect the resulting tensor. The paragraph emphasizes the importance of understanding the conventions used when representing tensors with raised and lowered indexes, especially when dealing with mixed tensors.

📘 Detailed Example of Index Lowering in a Sixth Rank Tensor

The fourth paragraph provides a detailed example of lowering an index in a sixth rank tensor. It explains the process step by step, including the use of placeholders (dots) to indicate the positions of raised and lowered indexes. The paragraph also discusses conventions regarding the placement of these placeholders and the importance of following these conventions to avoid ambiguity.
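
The same contraction pattern works for any rank. A minimal sketch, assuming NumPy, a 2D placeholder metric, and a random sixth-rank tensor whose index heights (up, up, down, up, down, down) are chosen only for illustration and are not necessarily those of the video's example: the second index is lowered by contracting it with $g_{ij}$, and the lowered index keeps its original slot, which is the information the dot placeholders record.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 2
# Placeholder symmetric, invertible metric g_ij and its inverse g^ij
g_cov = np.array([[1.0, 0.3],
                  [0.3, 2.0]])
g_con = np.linalg.inv(g_cov)

# Placeholder sixth-rank tensor T^{ab}_c^d_{ef} (heights: up, up, down, up, down, down).
# NumPy does not record index heights, so they are tracked only in these comments.
T = rng.standard_normal((n,) * 6)

# Lower the second index, keeping it in the second slot:
# T^a_{bc}^d_{ef} = g_{bk} T^{ak}_c^d_{ef}
T_lowered = np.einsum("bk,akcdef->abcdef", g_cov, T)

# Raising it again with g^{bk} undoes the operation
T_restored = np.einsum("bk,akcdef->abcdef", g_con, T_lowered)

print(T_lowered.shape, np.allclose(T_restored, T))
```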

🔄 The Flip-Flop Operation and Symmetry in Tensors

The final paragraph discusses the flip-flop operation, which allows the upper and lower positions of a pair of dummy indices in a tensor contraction to be interchanged. It also touches on the special case of symmetric tensors, whose mixed forms can be written without dot placeholders because their symmetry removes any ambiguity. The paragraph concludes with a review of the key points covered in the video, reinforcing the understanding of raising and lowering indexes in various tensor operations.

Keywords

💡Tensor

💡Contraction

💡Covariant Metric Tensor

💡Contravariant Metric Tensor

💡Raising and Lowering Indices

💡Basis Vector

💡Inner Product

💡Symmetric Tensor

💡Dummy Index

💡Flip-Flop

💡Higher Rank Tensors

Highlights

Introduction of the video on tensor calculus, focusing on the technique of raising and lowering indices.

Explanation of the contravariant metric tensor as the matrix inverse of the covariant metric tensor.

The matrix product of covariant and contravariant metric tensors yielding the Kronecker delta or identity matrix.

Definition of the contravariant basis vector through an inner product with the covariant basis vector and metric tensor.

Formation of a contraction with the covariant metric tensor to derive the contravariant basis vector.

Introduction of raising an index by contraction with the metric tensor, which turns an existing free index into a dummy index and introduces a new free index in the raised position.

Demonstration of the process to derive the covariant basis vector from the contravariant basis vector.

Explanation of the inverse operations of raising and lowering indices for basis vectors.

Discussion on the representation of any vector as a linear combination using covariant and contravariant components.

Derivation of expressions to replace contravariant basis vectors with equivalent expressions.

Introduction of index renaming and reordering in tensor expressions for simplification.

Illustration of the scalar component's equivalence to a derived expression through tensor manipulation.

Introduction of the concept of raising and lowering indices for vector components, not just basis vectors.

Explanation of the metric tensor as a fundamental tensor that connects covariant and contravariant components.

Application of index raising and lowering to higher rank tensors, specifically second rank tensors.

Discussion on the convention of placing upper indexes to the left of lower indexes in tensor notation.

Clarification on the difference between mixed tensors such as $T^{i}{}_{j}$ and $T_{j}{}^{i}$, and why it matters which index is raised.

Exception rule for symmetric tensors allowing for the omission of dots in tensor notation.

Introduction of the 'flip-flop' operation for dummy indices in tensor contractions.

Final review summarizing the ability to raise and lower indices and the importance of the metric tensor.
