Tensors for Beginners 16: Raising/Lowering Indexes (with motivation, sharp + flat operators)

TLDR: This educational video explores the concept of raising and lowering tensor indices in the context of vector and covector spaces. It discusses the challenges of creating a consistent correspondence between vectors and covectors across different bases and introduces the metric tensor as a tool for establishing a basis-independent partnership. The video also explains the use of the inverse metric tensor for index raising, and highlights the utility of these operations in manipulating tensors of any rank, concluding with an introduction to the flat and sharp operators for converting between vector and covector components.

Takeaways

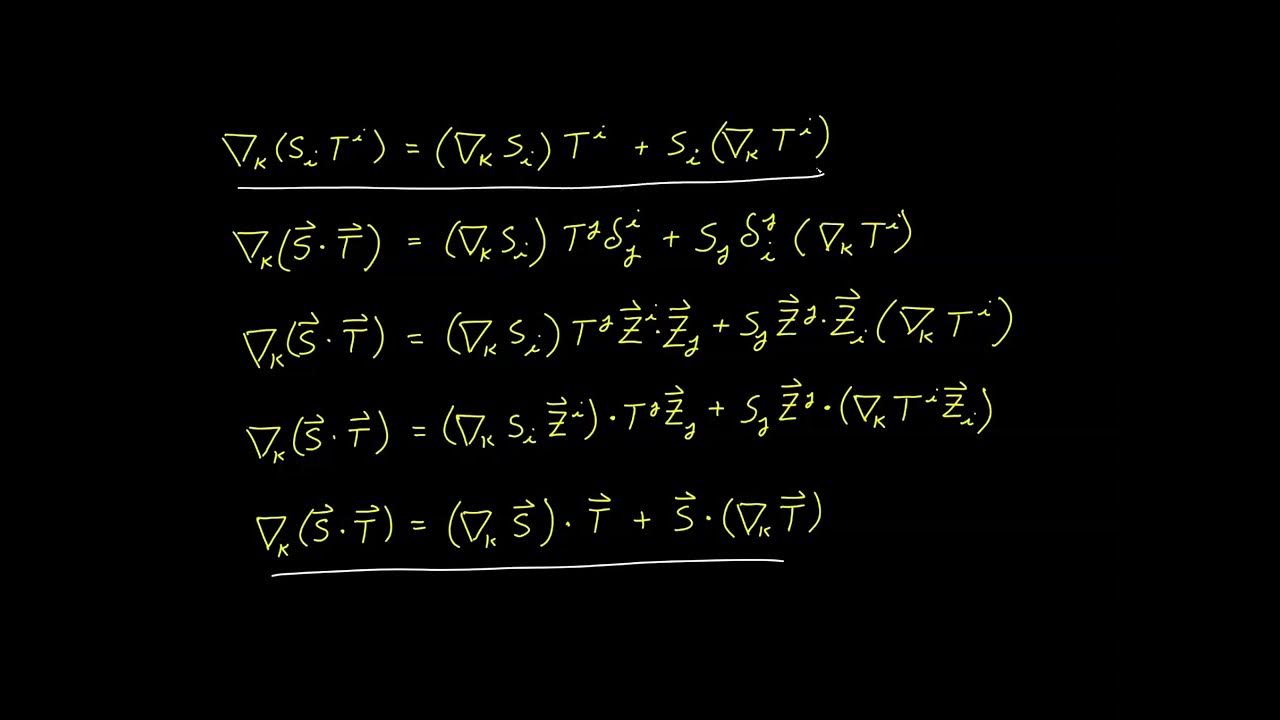

- 📚 The video discusses the concept of raising and lowering tensor indexes using non-standard tensor product notation.

- 🌐 It reviews the vector space 'V', where vectors live, and its dual space 'V*', where covectors live.

- 🔄 The video first tries to build a correspondence between vectors in 'V' and covectors in 'V*' by pairing basis vectors with basis covectors.

- 🔑 This first approach breaks down under a change of basis: basis vectors and basis covectors transform in opposite ways, so the pairing depends on which basis was chosen.

- 🔄 A new method is proposed to establish a correspondence between vectors and covectors without using a basis, ensuring invariance under basis changes.

- 📉 The script explains that the metric tensor is used to convert vector components into covector components by lowering indices.

- 📈 The inverse metric tensor is introduced for the reverse operation, raising indices to convert covectors back into vectors.

- 🎼 The flat (♭) and sharp (♯) operators are introduced as alternative notations for lowering and raising indices, respectively.

- 🔢 The components of a vector 'v' and its covector partner 'v-dot-something' are shown to be related through the metric tensor.

- 🔗 The concept of tensor index manipulation is generalized to tensors of any size, not just vectors and covectors.

- 📝 The metric tensor is emphasized as having two roles: given a pair of vectors it outputs a scalar, and given a single vector it produces a covector.

Q & A

What is the main topic discussed in the video?

- The main topic discussed in the video is the concept of raising and lowering tensor indexes in the context of vector spaces and their dual spaces.

What is the difference between vector space V and its dual space V*?

- Vector space V is where vectors live, while the dual space V* is where covectors live: linear functions that take a vector from V and return a scalar.

How does the video initially suggest creating a correspondence between vectors and covectors?

- The video initially suggests creating a correspondence by pairing basis vectors e_i in V with basis covectors epsilon^i in V*.

What problem arises with the initial approach of creating correspondence between vectors and covectors?

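- The correspondence looks nice in one basis but does not survive a change of basis. Basis vectors transform one way (covariantly) while basis covectors transform the opposite way (contravariantly), so applying the same pairing rule in a new basis assigns a different covector to the same vector.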
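To make the basis dependence concrete, here is a minimal Python sketch under assumptions that are not from the video: vectors live in R², covectors are written as row vectors in standard coordinates, and the "new" basis is simply the old one with every basis vector doubled. The helper names `inverse_2x2` and `basis_partner` are hypothetical. The sketch applies the basis-pairing rule in both bases to the same vector v = e_1 and gets two different covectors.

```python
from fractions import Fraction


def inverse_2x2(m):
    """Inverse of a 2x2 matrix [[a, b], [c, d]] (assumes a nonzero determinant)."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def basis_partner(basis_cols, v):
    """Covector that the basis-pairing rule assigns to v, as a row in standard coordinates.

    basis_cols holds the basis vectors e_1, e_2 as the columns of a 2x2 matrix.
    Rule: v = sum_i v^i e_i is paired with sum_i v^i eps^i.
    """
    inv = inverse_2x2(basis_cols)
    # Components v^i of v in this basis: solve basis_cols @ comps = v.
    comps = [inv[i][0] * v[0] + inv[i][1] * v[1] for i in range(2)]
    # The dual basis covectors eps^i are the rows of the inverse matrix.
    return [sum(comps[i] * inv[i][k] for i in range(2)) for k in range(2)]


one, zero = Fraction(1), Fraction(0)
standard = [[one, zero], [zero, one]]         # e_1, e_2
doubled = [[2 * one, zero], [zero, 2 * one]]  # hypothetical new basis: e~_i = 2 e_i
v = [one, zero]                               # the vector e_1

partner_standard = basis_partner(standard, v)
partner_doubled = basis_partner(doubled, v)
print(partner_standard, partner_doubled)  # the two partners disagree
```

In the doubled basis, v = ½ẽ_1, so the rule pairs it with ½ε̃^1; since ε̃^1 = ½ε^1, that is ¼ε^1 rather than ε^1.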

How does the video propose to avoid the issue of basis dependency when creating vector-covector correspondence?

- The video proposes to avoid the issue by not using a basis at all and instead introducing a covector 'v-dot-something' where 'something' is an input slot for another vector.

Why is the function 'v-dot-something' considered a covector?

- The function 'v-dot-something' is a covector because it is a linear function from V to the scalars: it takes a vector and returns a number, and the linearity of the dot product guarantees that it respects both scaling and addition of its input.
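The linearity check fits in one line. Here α_v is a shorthand introduced only for this sketch and stands for 'v-dot-something'; a and b are scalars, and u and w are vectors in V.

```latex
\alpha_v(a\,u + b\,w) = v \cdot (a\,u + b\,w) = a\,(v \cdot u) + b\,(v \cdot w) = a\,\alpha_v(u) + b\,\alpha_v(w)
```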

What is the role of the metric tensor in the process of lowering and raising tensor indexes?

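- The metric tensor lowers indexes: the downstairs (covariant) components of the covector partner are obtained from the upstairs (contravariant) components of the vector by summing against the metric tensor components, v_i = Σ_j g_ij v^j. The inverse metric tensor performs the reverse conversion, raising indexes.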
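A minimal numerical sketch of index lowering, assuming (this is not from the video) that the basis vectors are given in Cartesian coordinates so that the metric components are g_ij = e_i · e_j. The basis and the component values are hypothetical, and so are the helper names. It shows that the downstairs components differ from the upstairs ones, and that summing v_i w^i reproduces the dot product v · w.

```python
def dot(a, b):
    """Ordinary Cartesian dot product."""
    return sum(x * y for x, y in zip(a, b))


def metric_from_basis(basis):
    """Metric components g_ij = e_i . e_j, with each e_i given in Cartesian coordinates."""
    return [[dot(ei, ej) for ej in basis] for ei in basis]


def lower_index(g, v_up):
    """Lower an index: v_i = sum_j g_ij v^j."""
    n = len(v_up)
    return [sum(g[i][j] * v_up[j] for j in range(n)) for i in range(n)]


def to_cartesian(basis, comps):
    """Rebuild the arrow sum_i comps[i] * e_i in Cartesian coordinates."""
    dim = len(basis[0])
    return [sum(c * e[k] for c, e in zip(comps, basis)) for k in range(dim)]


basis = [[2, 0], [1, 1]]       # hypothetical non-orthonormal basis: e_1 = (2, 0), e_2 = (1, 1)
g = metric_from_basis(basis)
v_up = [1, 3]                  # upstairs components v^i
w_up = [2, -1]                 # upstairs components w^i
v_down = lower_index(g, v_up)  # downstairs components v_i

# The covector partner applied to w must give the dot product v . w.
lhs = dot(v_down, w_up)
rhs = dot(to_cartesian(basis, v_up), to_cartesian(basis, w_up))
print(g, v_down, lhs, rhs)
```

Exact integers are used so the comparison between the two sides involves no rounding.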

What is the special case where 'v' with downstairs components is equal to 'v' with upstairs components?

- The special case is when the metric tensor components are given by the Kronecker delta, g_ij = δ_ij, which happens exactly when the basis is orthonormal.
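Written out, the orthonormal case collapses the lowering sum to a single term, assuming the metric components are g_ij = e_i · e_j:

```latex
g_{ij} = e_i \cdot e_j = \delta_{ij}
\quad\Longrightarrow\quad
v_i = \sum_j g_{ij}\, v^j = \sum_j \delta_{ij}\, v^j = v^i
```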

What is the inverse metric tensor and how is it related to the ordinary metric tensor?

- The inverse metric tensor g^ij is defined so that summing it against the ordinary metric tensor gives the Kronecker delta: Σ_j g^ij g_jk = δ^i_k. It is used to raise indexes, whereas the ordinary metric tensor lowers them.
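A minimal Python sketch of the inverse metric in two dimensions, assuming a hypothetical metric with components [[4, 2], [2, 2]] (for instance from a skewed 2D basis). In components, the inverse metric is the matrix inverse of g_ij; the sketch checks that the product gives the Kronecker delta and that raising undoes lowering. Exact fractions keep the check free of rounding, and the helper names are illustrative only.

```python
from fractions import Fraction


def inverse_2x2(m):
    """Inverse of a 2x2 matrix [[a, b], [c, d]] (assumes a nonzero determinant)."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def contract(m, comps):
    """Sum over the second index: out^i = sum_j m[i][j] * comps[j]."""
    return [sum(m[i][j] * comps[j] for j in range(len(comps))) for i in range(len(m))]


# Hypothetical metric components g_ij for a non-orthonormal 2D basis.
g = [[Fraction(4), Fraction(2)], [Fraction(2), Fraction(2)]]
g_inv = inverse_2x2(g)  # components g^ij

# Defining property: sum_k g^ik g_kj = delta^i_j
product = [[sum(g_inv[i][k] * g[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

v_up = [Fraction(1), Fraction(3)]
v_down = contract(g, v_up)        # lower: v_i = g_ij v^j
v_back = contract(g_inv, v_down)  # raise: v^i = g^ij v_j
print(g_inv, product, v_down, v_back)
```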

How can the flat and sharp operators be used to denote the lowering and raising of tensor indexes?

- The flat operator (♭) is used to denote the lowering of indexes, converting a vector to its corresponding covector. The sharp operator (♯) is used to denote the raising of indexes, converting a covector to its corresponding vector.
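The two operators undo each other. A short derivation, using the component formulas for lowering and raising and the defining property of the inverse metric, Σ_j g^ij g_jk = δ^i_k:

```latex
(v^\flat)_j = \sum_k g_{jk}\, v^k, \qquad (\alpha^\sharp)^i = \sum_j g^{ij}\, \alpha_j

\big((v^\flat)^\sharp\big)^i = \sum_j g^{ij} \sum_k g_{jk}\, v^k
= \sum_k \Big(\sum_j g^{ij} g_{jk}\Big) v^k
= \sum_k \delta^i_k\, v^k = v^i
```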

Can the operations of raising and lowering indexes be applied to tensors of any size?

- Yes, the operations of raising and lowering indexes can be applied to tensors of any size, not just vectors and covectors.
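To illustrate the rank-2 case, here is a minimal Python sketch with a hypothetical diagonal metric (for instance from the basis e_1 = (1, 0), e_2 = (0, 2)); it is not an example from the video, and the helper names are illustrative. One metric factor is contracted per index being lowered, and the inverse metric undoes it.

```python
from fractions import Fraction


def lower_second(T_up, g):
    """Lower only the second index: T^i_j = sum_k T^{ik} g_{kj}."""
    n = len(g)
    return [[sum(T_up[i][k] * g[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def apply_to_both(T, m):
    """Contract both indices with m: out_{ij} = sum_{k,l} m_{ik} m_{jl} T_{kl}.

    With m = g this lowers both indices; with m = g^{-1} it raises both.
    """
    n = len(m)
    return [[sum(m[i][k] * m[j][l] * T[k][l] for k in range(n) for l in range(n))
             for j in range(n)]
            for i in range(n)]


# Hypothetical diagonal metric and its inverse.
g = [[1, 0], [0, 4]]
g_inv = [[1, 0], [0, Fraction(1, 4)]]

T_up = [[1, 2], [3, 4]]                # components T^{ij}
T_mixed = lower_second(T_up, g)        # components T^i_j
T_down = apply_to_both(T_up, g)        # components T_{ij}
T_back = apply_to_both(T_down, g_inv)  # raise both indices again
print(T_mixed, T_down, T_back)
```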

Outlines

📚 Tensor Index Raising and Lowering Basics

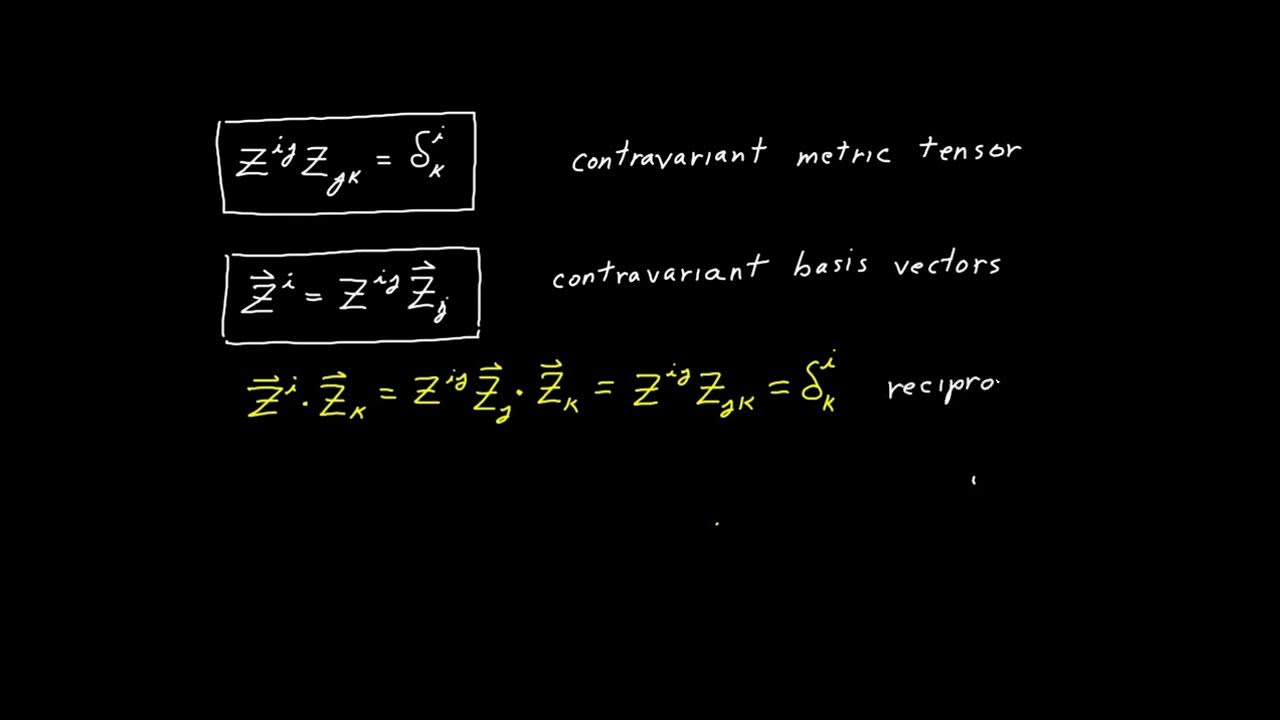

This paragraph introduces the concept of raising and lowering tensor indices, using a non-standard tensor product notation. It reviews the familiar spaces V and V* and explores building a correspondence between vectors in V and covectors in V*: each basis vector is paired with the matching basis covector, and an arbitrary vector v, expanded in terms of the basis vectors, is paired with the same combination of basis covectors. The approach fails under a change of basis, because basis vectors and basis covectors transform in opposite ways, so the same vector ends up with different covector partners in different bases.

🔄 Overcoming Basis Dependency in Tensor Correspondence

The paragraph discusses the limitations of the initial approach and proposes a new method that avoids using a basis entirely. It introduces the covector 'v-dot-something' and shows that it is a member of V*, since the linearity of the dot product makes it a linear function of its input. Because this definition never refers to a basis, the covector partner assigned to each vector is the same no matter which basis is chosen.
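A minimal Python check of that basis independence, under assumptions not taken from the video: vectors are written in Cartesian coordinates in R², the two bases are arbitrary examples, and all helper names are hypothetical. The components v_i = v · e_i change from basis to basis, but pairing them with the matching components w^i always returns the same number, v · w.

```python
from fractions import Fraction


def dot(a, b):
    """Ordinary Cartesian dot product."""
    return sum(x * y for x, y in zip(a, b))


def components(basis, x):
    """Upstairs components of x in a 2D basis, via Cramer's rule.

    Solves x = c^1 e_1 + c^2 e_2, with e_1 = (p, q) and e_2 = (r, s) in Cartesian coordinates.
    """
    (p, q), (r, s) = basis
    det = p * s - r * q
    return [Fraction(x[0] * s - r * x[1], det), Fraction(p * x[1] - q * x[0], det)]


def covector_components(basis, v):
    """Components of the covector (v . _) in a basis: its values on the basis vectors, v . e_i."""
    return [dot(v, e) for e in basis]


v = [3, 1]   # hypothetical vector (Cartesian coordinates)
w = [-2, 5]  # hypothetical input for the covector's slot
bases = {
    "standard": [[1, 0], [0, 1]],
    "skewed": [[2, 0], [1, 1]],
}

# In every basis, sum_i v_i w^i should equal v . w: same covector, different components.
results = {
    name: dot(covector_components(b, v), components(b, w))
    for name, b in bases.items()
}
print(results, dot(v, w))
```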

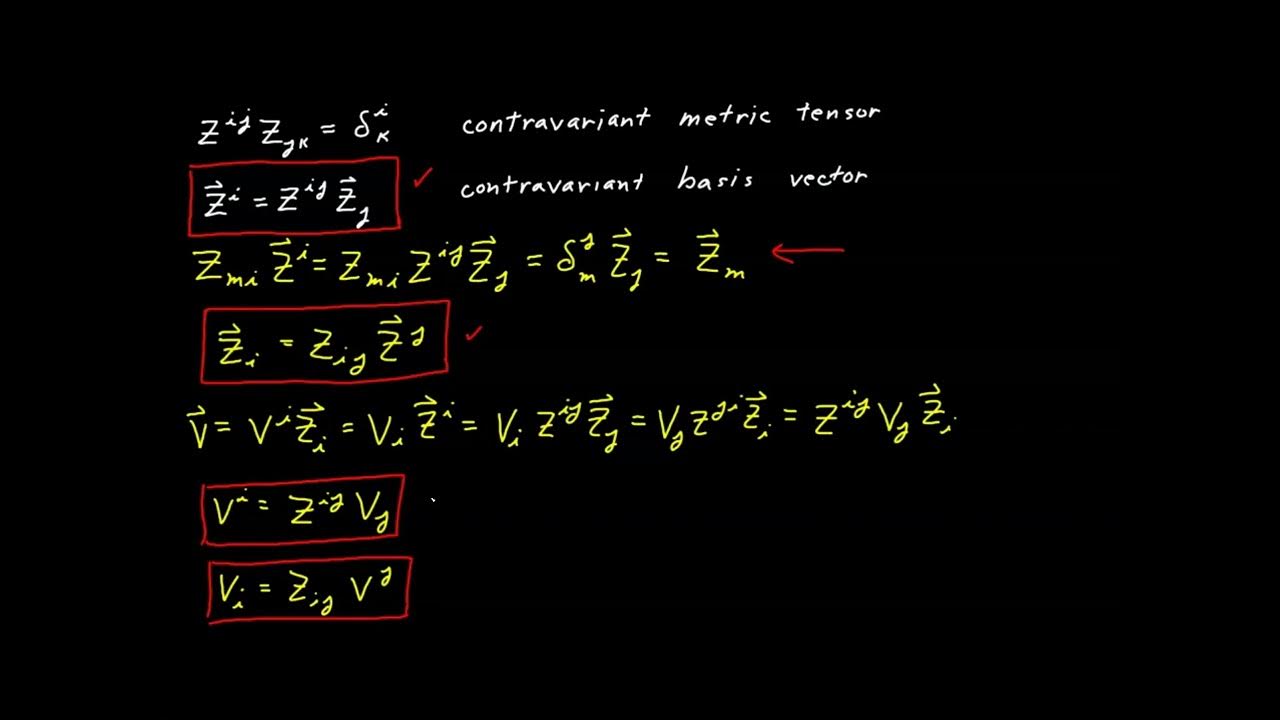

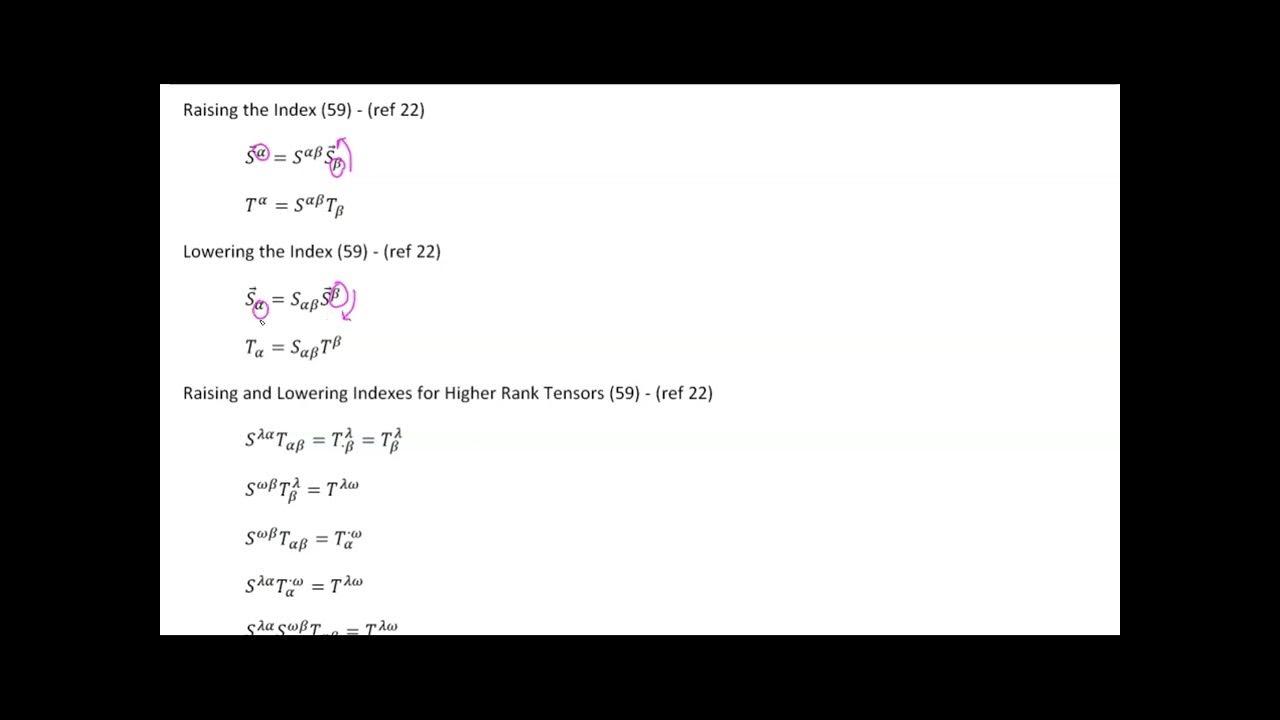

🔢 Metric Tensor and Index Manipulation

This section examines the components of the covector 'v-dot-something' and how they relate to the metric tensor 'g'. By expanding the vectors as linear combinations of basis vectors and evaluating the metric tensor on them, it shows that the covector's components are v_i = Σ_j g_ij v^j, so the metric tensor lowers indices. It then introduces the inverse metric tensor, which raises indices, and uses both tensors to convert between vector and covector components. The upstairs and downstairs components coincide only when the metric components are the Kronecker delta, that is, in an orthonormal basis.
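The component calculation can be sketched in a few lines, assuming the metric components are g_ij = e_i · e_j and writing α_v as a shorthand (not the video's notation) for 'v-dot-something'. A covector's components in the dual basis ε^i are its values on the basis vectors, and the last step uses the symmetry g_ji = g_ij:

```latex
\alpha_v(e_i) = v \cdot e_i
= \Big(\sum_j v^j e_j\Big) \cdot e_i
= \sum_j v^j\,(e_j \cdot e_i)
= \sum_j g_{ji}\, v^j
= \sum_j g_{ij}\, v^j \equiv v_i,
\qquad
\alpha_v = \sum_i v_i\, \epsilon^i
```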

🎼 Flat and Sharp Operators for Tensor Index Conversion

The final paragraph summarizes how the metric tensor and its inverse lower and raise indices, not only for vectors and covectors but for tensors of any size. It introduces the flat (♭) and sharp (♯) operators as alternative notations for converting a vector into its covector partner and back. The names come from music: a flat lowers a note's pitch and a sharp raises it, just as ♭ lowers an index and ♯ raises one.


Keywords

💡Tensor Indexes

💡Vector Spaces

💡Dual Space (V*)

💡Basis Vectors and Covectors

💡Linear Combination

💡Change of Basis

💡Covariant and Contravariant

💡Metric Tensor

💡Inverse Metric Tensor

💡Flat and Sharp Operators

💡Kronecker Delta

Highlights

Introduction of the concept of raising and lowering tensor indices using non-standard tensor product notation.

Explanation of vector spaces V and V*, where vectors and covectors reside respectively.

Discussion on creating a correspondence between vectors of V and covectors of V*.

Assignment of covector partners to basis vectors using epsilon notation.

Method to find the covector partner of an arbitrary vector by expanding it as a linear combination of basis vectors.

Identification of issues with the approach when changing the basis of the vector space.

Introduction of a new method to create a correspondence between vectors and covectors without using a basis.

Demonstration that 'v-dot-something' is indeed a covector and a member of V*.

Explanation of how the new correspondence avoids issues with basis changes and maintains consistency.

Introduction of the metric tensor and its role in converting vector components to covector components.

Process of determining the components of 'v-dot-something' using the metric tensor.

Clarification that the downstairs and upstairs components of 'v' are, in general, not the same, and that converting between them requires the metric tensor.

Introduction of the inverse metric tensor and its definition in relation to the ordinary metric tensor.

Explanation of how the inverse metric tensor is used to raise indices and convert from covectors to vectors.

Application of raising and lowering operations on the components of tensors of any size.

Introduction of the flat and sharp operators as alternative notations for index conversion.

Summary of the process of creating vector-covector partners and the role of metric tensors in index manipulation.
