What is Multicollinearity? Extensive video + simulation!

TLDR

This video delves into the concept of multicollinearity, a phenomenon in regression analysis where the predictor variables are correlated with each other, potentially obscuring their individual effects on the outcome. The video explains the intuition behind multicollinearity, its impact on regression results, and methods for detection such as bivariate correlations and variance inflation factors. It also discusses remedies, including doing nothing, removing or combining correlated variables, and using techniques like partial least squares. A simulation is presented to illustrate the effects of varying degrees of multicollinearity on regression coefficients and standard errors, highlighting that even high correlations may not always be problematic.

Takeaways

- 📊 Multicollinearity refers to a situation in regression analysis where the independent variables (X variables) are correlated with each other, potentially obscuring their individual effects on the dependent variable (Y).

- 🔍 Multicollinearity can be detected through bivariate correlations, where high correlations (typically above 0.9) between pairs of X variables might indicate a problem, and through variance inflation factors (VIFs), a more robust method because each VIF reflects how well one X variable is explained by all the other X variables together, not just one at a time.

- 💡 Multicollinearity does not bias the coefficients, but it can greatly inflate the variance of the affected coefficient estimates, leading to larger standard errors and potentially non-significant p-values.

- 🤔 The impact of multicollinearity is that it makes it difficult to determine the individual contributions of correlated X variables to the dependent variable, as they may be 'fighting' for the effect on Y.

- 👉 In the case of perfect multicollinearity, where X variables are perfectly correlated, the regression model breaks down and cannot provide any output, as it cannot distinguish between the effects of the correlated variables.

- 📝 The script provides a simulation in which a dataset of law firm salaries is used to demonstrate how regression coefficients and standard errors change when a new X variable, increasingly correlated with years of experience, is introduced.

- 🛠️ Remedies for multicollinearity include doing nothing if the model is for prediction and the coefficients are unbiased, removing one of the correlated variables, combining the correlated variables into a new one, or using advanced techniques like partial least squares or principal component analysis.

- 🔎 The script emphasizes that even high correlations (e.g., 0.9 and 0.95) may not necessarily be problematic and that context and judgment are important in deciding how to address multicollinearity.

- 📈 The example of the Olympic swimmer's energy expenditure illustrates perfect multicollinearity, where the number of laps can be derived from the total distance, making the inclusion of both variables in the model redundant.

- 🌟 The script concludes with the importance of understanding multicollinearity, its effects on regression analysis, and the various options available to address it in practical scenarios.
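A compact way to see the "unbiased but inflated" point from these takeaways is the textbook variance formula for an OLS slope, assuming the classical setup with homoskedastic errors (this is standard regression theory, not a formula shown in the video). Here R_j² is the R-squared from regressing x_j on the other X variables, and the second factor is the variance inflation factor:

```latex
\mathbb{E}[\hat\beta_j \mid X] = \beta_j,
\qquad
\operatorname{Var}(\hat\beta_j \mid X)
  = \frac{\sigma^2}{\sum_{i=1}^{n} (x_{ij} - \bar{x}_j)^2}
    \cdot \underbrace{\frac{1}{1 - R_j^2}}_{\mathrm{VIF}_j}
```

Nothing in the expectation depends on R_j², which is why the coefficients stay unbiased. The variance, and therefore the standard error (its square root), grows without bound as R_j² approaches 1 and is undefined at R_j² = 1, which is the perfect multicollinearity case.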

Q & A

What is the main topic of the video script?

-The main topic of the video script is multicollinearity, its effects on regression analysis, how to detect it, and possible remedies for dealing with it.

What is the definition of multicollinearity given in the script?

-Multicollinearity is a situation in regression analysis where the independent variables (X variables) are themselves related, making it difficult to distinguish their individual effects on the dependent variable (Y).

How does multicollinearity affect regression analysis?

-Multicollinearity inflates the variance of the coefficient estimates, making their standard errors larger. This can lead to high p-values that suggest the variables are not statistically significant, even when they genuinely affect Y.

What are the two methods of detecting multicollinearity discussed in the script?

-The two methods of detecting multicollinearity discussed are bivariate correlations and variance inflation factors (VIFs). Bivariate correlations look at the pairwise relationships between X variables, while VIFs assess the extent to which an X variable is explained by other X variables in the model.
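A minimal sketch of both checks in plain Python (standard library only), assuming a small made-up dataset of lawyers with hypothetical columns `experience`, `age` and `hours`. Each VIF is computed the way the answer above describes: regress that X variable on all the other X variables (with an intercept) and take 1 / (1 - R²).

```python
import math
import random

def pearson(x, y):
    """Pairwise (bivariate) Pearson correlation."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def solve(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [v - f * w for v, w in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def r_squared(y, cols):
    """R^2 from an OLS regression of y on the given columns plus an intercept."""
    rows = [[1.0] + [c[i] for c in cols] for i in range(len(y))]
    k = len(rows[0])
    xtx = [[sum(r[p] * r[q] for r in rows) for q in range(k)] for p in range(k)]
    xty = [sum(r[p] * yi for r, yi in zip(rows, y)) for p in range(k)]
    beta = solve(xtx, xty)
    ybar = sum(y) / len(y)
    ss_res = sum((yi - sum(b * v for b, v in zip(beta, r))) ** 2 for r, yi in zip(rows, y))
    ss_tot = sum((yi - ybar) ** 2 for yi in y)
    return 1 - ss_res / ss_tot

def vifs(data):
    """VIF_j = 1 / (1 - R_j^2), with R_j^2 from regressing X_j on every other X."""
    return {
        name: 1 / (1 - r_squared(col, [c for other, c in data.items() if other != name]))
        for name, col in data.items()
    }

# Hypothetical data: age tracks experience closely, weekly hours do not.
rng = random.Random(0)
experience = [rng.uniform(0, 30) for _ in range(200)]
data = {
    "experience": experience,
    "age": [24 + e + rng.gauss(0, 2) for e in experience],
    "hours": [rng.gauss(50, 6) for _ in experience],
}

names = list(data)
for i, a in enumerate(names):
    for b in names[i + 1:]:
        print(f"r({a}, {b}) = {pearson(data[a], data[b]):.3f}")
vif = vifs(data)
for name, v in vif.items():
    print(f"VIF({name}) = {v:.1f}")
```

With these assumptions, experience and age should show a pairwise correlation above 0.9 and VIFs well above ten, while hours should sit near a VIF of 1. In practice a statistics package would do the regressions; the hand-rolled solver is only there to keep the sketch dependency-free.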

What is the rule of thumb for the correlation threshold that might indicate multicollinearity?

-The rule of thumb mentioned in the script is that a correlation greater than about 0.9 could start being a problem, although it's noted that this isn't a hard and fast rule and correlations up to 0.95 can still be acceptable depending on the context.
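For the special case of a model with exactly two X variables, the R_j² inside a VIF is simply the squared pairwise correlation r², so the correlation rule of thumb can be translated into VIF terms. This worked arithmetic is an illustration added here, not a calculation from the video:

```latex
\mathrm{VIF} = \frac{1}{1 - r^2}:
\qquad
r = 0.90 \;\Rightarrow\; \frac{1}{1 - 0.81} \approx 5.3,
\qquad
r = 0.95 \;\Rightarrow\; \frac{1}{1 - 0.9025} \approx 10.3
```

Because standard errors scale with the square root of the VIF, these correlations inflate each slope's standard error by roughly 2.3× and 3.2×, all else equal, compared with uncorrelated predictors. In the two-variable case, a correlation of about 0.95 is roughly where the "VIF above ten" guideline starts to apply.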

What happens when there is perfect multicollinearity?

-In the case of perfect multicollinearity, the regression breaks down and produces no output: because the X variables are perfectly correlated, the model cannot distinguish their individual effects, and the software reports a 'near singular matrix' error.
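A minimal sketch of why the software gives up, assuming a hypothetical 50 m pool so that laps = distance / 50 exactly. OLS has to invert X'X (the design matrix transposed times itself); when one column is an exact multiple of another, X'X is singular, meaning its determinant is zero. The sketch uses exact fractions so the zero is exact; in ordinary floating-point arithmetic the same determinant can come out as a tiny nonzero number, which is plausibly why some packages word the error as "near singular".

```python
from fractions import Fraction

POOL_LENGTH_M = 50  # hypothetical: every lap is exactly 50 m

def gram(cols):
    """X'X for a design matrix given as a list of columns."""
    return [[sum(a * b for a, b in zip(ci, cj)) for cj in cols] for ci in cols]

def det(m):
    """Determinant by cofactor expansion along the first row (fine for tiny matrices)."""
    if len(m) == 1:
        return m[0][0]
    return sum(
        (-1) ** j * m[0][j] * det([row[:j] + row[j + 1:] for row in m[1:]])
        for j in range(len(m))
    )

distances = [Fraction(d) for d in (400, 800, 1500, 2000, 3000)]  # hypothetical sessions
laps = [d / POOL_LENGTH_M for d in distances]  # derived exactly from distance
ones = [Fraction(1)] * len(distances)          # intercept column

det_with_both = det(gram([ones, distances, laps]))
det_distance_only = det(gram([ones, distances]))
print(det_with_both, det_distance_only)
```

Dropping either laps or distance leaves a design whose X'X has a nonzero determinant, so the regression can be estimated again.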

What are some real-world examples of situations that could lead to multicollinearity?

-Examples include a model of the energy burned by an Olympic swimmer that includes both the total distance covered and the number of laps; a model of the water pressure on a diver that uses both the distance from the ocean surface and the distance from the ocean floor (which, at a fixed spot, always add up to the same total depth); and a sales model that includes advertising spend plus a dummy variable for every quarter, without dropping one quarter as the base case.
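The sales-model example is the classic dummy variable trap. A minimal sketch with made-up data for eight quarters and a hypothetical `ad_spend` column: with an intercept plus all four quarter dummies, the dummies always add up to the intercept column, so the design matrix loses one rank; dropping one quarter as the base case restores full rank. The rank is computed with exact fractions to avoid rounding noise.

```python
from fractions import Fraction

def column_rank(cols):
    """Rank of the matrix whose columns are `cols`, via exact Gaussian elimination."""
    m = [[Fraction(v) for v in row] for row in zip(*cols)]  # one list per observation
    rank = 0
    for c in range(len(cols)):
        pivot = next((i for i in range(rank, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue  # column c is a combination of earlier columns
        m[rank], m[pivot] = m[pivot], m[rank]
        for i in range(len(m)):
            if i != rank and m[i][c] != 0:
                f = m[i][c] / m[rank][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[rank])]
        rank += 1
    return rank

quarters = [1, 2, 3, 4, 1, 2, 3, 4]          # two hypothetical years
ad_spend = [10, 12, 9, 15, 11, 13, 10, 16]   # hypothetical spend per quarter
intercept = [1] * len(quarters)
dummy = {q: [1 if x == q else 0 for x in quarters] for q in (1, 2, 3, 4)}

trap = [intercept, ad_spend] + [dummy[q] for q in (1, 2, 3, 4)]
base_case = [intercept, ad_spend] + [dummy[q] for q in (2, 3, 4)]  # Q1 is the base case

print(len(trap), column_rank(trap))            # 6 columns, but one is redundant
print(len(base_case), column_rank(base_case))  # every column carries information
```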

What are the four options for dealing with multicollinearity mentioned in the script?

-The four options are: 1) Do nothing and use the model for prediction if the coefficients are unbiased. 2) Remove one of the correlated variables. 3) Combine the correlated variables into a new variable. 4) Use advanced techniques like partial least squares or principal components analysis.
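Option 3 can be as simple as averaging the standardized versions of the correlated variables into a single index. A minimal sketch, assuming hypothetical `experience` and `age` values and a hypothetical composite called `seniority`; this is one common way to combine variables, not necessarily the construction used in the video.

```python
import statistics

def zscores(xs):
    """Standardize a variable to mean 0 and (population) standard deviation 1."""
    mu, sd = statistics.mean(xs), statistics.pstdev(xs)
    return [(x - mu) / sd for x in xs]

def combine(*cols):
    """Composite variable: the row-wise average of the z-scores of `cols`."""
    standardized = [zscores(c) for c in cols]
    return [sum(vals) / len(vals) for vals in zip(*standardized)]

# Hypothetical values for seven lawyers.
experience = [1, 3, 5, 8, 12, 15, 20]
age = [25, 27, 30, 32, 37, 40, 46]

seniority = combine(experience, age)  # enters the regression instead of experience and age
print([round(s, 2) for s in seniority])
```

Standardizing first keeps the variable with the larger scale from dominating the average. The trade-off is that the regression can then only report the effect of the composite, not of experience and age separately.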

How does the script demonstrate the effects of multicollinearity on regression coefficients and standard errors?

-The script describes a simulation where a dataset of law firm salaries is used, and a new X variable highly correlated with years of experience is added. The script then shows how the coefficient and standard error of experience change as the correlation between X and experience increases, illustrating the effects of multicollinearity.

What is the significance of the p-value in the context of multicollinearity?

-The p-value indicates the statistical significance of a variable's effect on the dependent variable. When multicollinearity is present, the p-values may become high, suggesting that the variables are not significantly affecting the dependent variable, even when they might have an actual effect.

How does the script suggest dealing with multicollinearity if the model is used for prediction?

-If the model is used for prediction, the script suggests that doing nothing might be an option because as long as the coefficients are unbiased, the model can still be used for prediction purposes, even if the standard errors are inflated due to multicollinearity.

Outlines

🧐 Introduction to Multicollinearity

This paragraph introduces the concept of multicollinearity, explaining that it occurs when independent variables (X variables) used in a regression model are correlated with each other. The speaker uses the example of a lawyer's salary being dependent on both their years of experience and age, which are naturally related, to illustrate this point. The paragraph sets the stage for a deeper exploration of multicollinearity, its effects on regression analysis, and how to diagnose and deal with it.

🔍 Detecting Multicollinearity

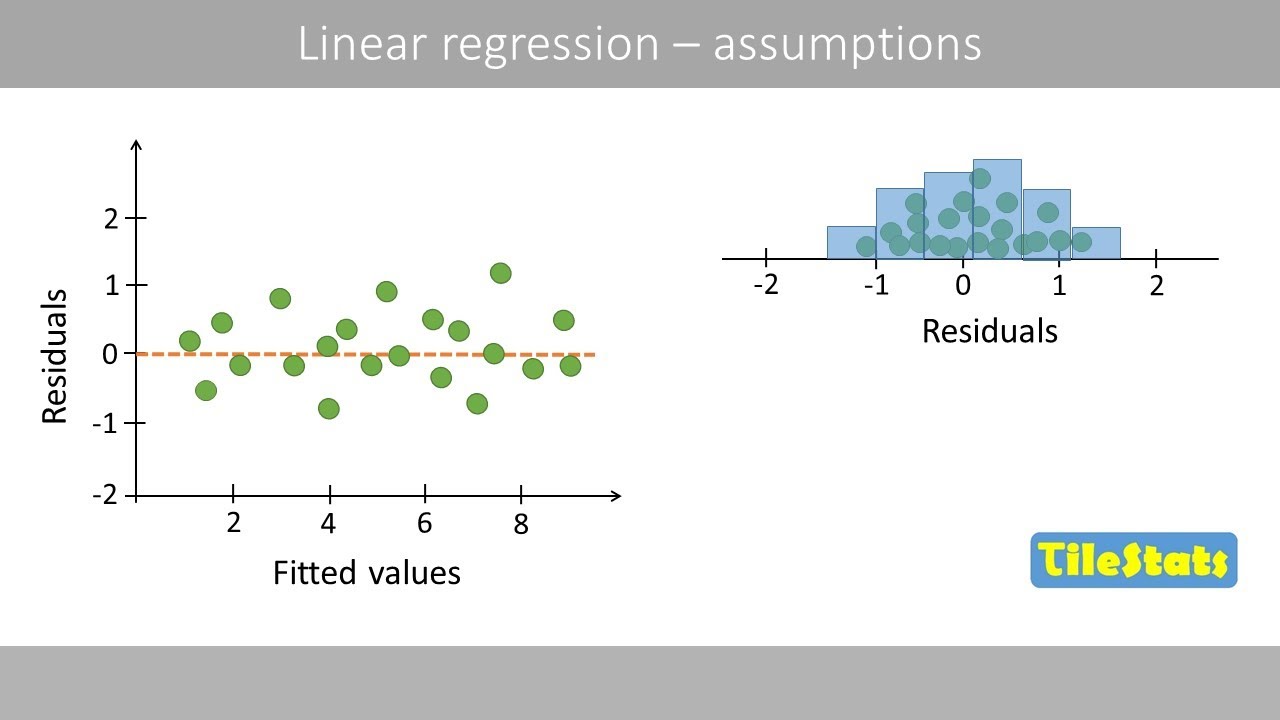

The speaker discusses two methods for detecting multicollinearity. The first method involves examining bivariate correlations between pairs of X variables and looking for correlations greater than a certain threshold, typically around 0.9. The second method is more robust and involves calculating variance inflation factors (VIFs) for each X variable. A VIF above ten is often considered problematic, but the speaker emphasizes that these are guidelines rather than hard rules. The paragraph also explains how multicollinearity affects the standard errors and p-values of the coefficients in a regression model, leading to uncertainty about the individual effects of the correlated variables.

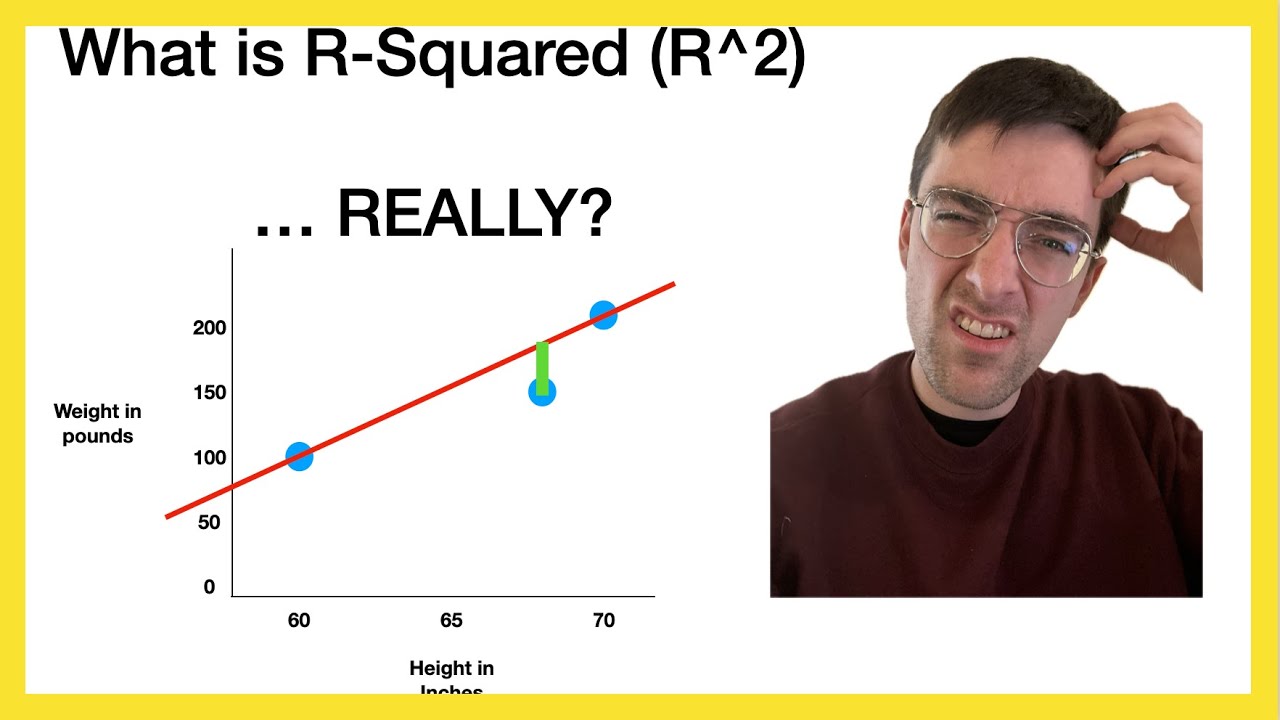

📉 Multicollinearity's Impact on Regression

This paragraph delves into the practical implications of multicollinearity for regression analysis. The speaker explains that while the coefficients themselves remain unbiased, their standard errors become inflated, leading to higher p-values that may call the statistical significance of the variables into question. The overall model fit, such as R-squared and the F-statistic, is not affected, so the model's predictive ability remains intact. The speaker also discusses various strategies to address multicollinearity, including doing nothing if the model is for prediction, removing or combining correlated variables, or using advanced techniques like partial least squares or principal component analysis.

🧠 Simulation of Multicollinearity

The speaker presents a simulation to demonstrate the effects of multicollinearity on regression coefficients and standard errors. By creating a dataset of law firm salaries and adding a highly correlated variable, the speaker shows how the standard error of the experience variable increases with the strength of the correlation. The simulation illustrates that even at high correlations, the coefficients remain relatively stable, but the standard errors and p-values can change significantly, potentially leading to non-significance of variables that were previously significant.
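A minimal re-creation of this kind of simulation in plain Python; it is not the speaker's actual spreadsheet or code, and every number is an assumption: 200 hypothetical lawyers, a true salary model of 60,000 + 3,000 per year of experience plus noise, and a new variable `x_new` that has no real effect on salary but is built to have a target correlation `rho` with experience. For each `rho` the script fits salary on experience and `x_new` by OLS and reports the experience coefficient, its standard error, and R².

```python
import math
import random

def invert(a):
    """Inverse of a small square matrix via Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [float(i == j) for j in range(n)] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        for r in range(n):
            if r != col:
                f = m[r][col]
                m[r] = [v - f * w for v, w in zip(m[r], m[col])]
    return [row[n:] for row in m]

def ols(y, cols):
    """OLS of y on `cols` plus an intercept: returns (coefficients, standard errors, R^2)."""
    rows = [[1.0] + [c[i] for c in cols] for i in range(len(y))]
    n, k = len(rows), len(rows[0])
    xtx_inv = invert([[sum(r[p] * r[q] for r in rows) for q in range(k)] for p in range(k)])
    xty = [sum(r[p] * yi for r, yi in zip(rows, y)) for p in range(k)]
    beta = [sum(xtx_inv[p][q] * xty[q] for q in range(k)) for p in range(k)]
    resid = [yi - sum(b * v for b, v in zip(beta, r)) for r, yi in zip(rows, y)]
    ssr = sum(e * e for e in resid)
    se = [math.sqrt(ssr / (n - k) * xtx_inv[p][p]) for p in range(k)]
    ybar = sum(y) / n
    r2 = 1 - ssr / sum((yi - ybar) ** 2 for yi in y)
    return beta, se, r2

# Hypothetical law firm: salary truly depends on experience only.
rng = random.Random(42)
N = 200
experience = [rng.uniform(0, 30) for _ in range(N)]
salary = [60_000 + 3_000 * e + rng.gauss(0, 15_000) for e in experience]
noise = [rng.gauss(0, 1) for _ in range(N)]

mean_e = sum(experience) / N
sd_e = math.sqrt(sum((e - mean_e) ** 2 for e in experience) / N)
z_exp = [(e - mean_e) / sd_e for e in experience]

results = {}
for rho in (0.0, 0.5, 0.9, 0.95, 0.99):
    # x_new has (approximately) correlation rho with experience and no effect on salary.
    x_new = [rho * z + math.sqrt(1 - rho ** 2) * u for z, u in zip(z_exp, noise)]
    beta, se, r2 = ols(salary, [experience, x_new])
    results[rho] = (beta[1], se[1], r2)
    print(f"rho={rho:.2f}  coef={beta[1]:8.1f}  se={se[1]:7.1f}  R2={r2:.4f}")
```

Because `x_new` is always built from the same experience values and the same noise draws, the three columns span the same space for every `rho` below 1, so the fitted values and R² are identical on every row, matching the point that model fit is unaffected. The standard error of experience should grow roughly with the square root of the VIF, and the coefficient can drift further from 3,000 at high `rho` simply because it is estimated less precisely.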

🚫 Perfect Multicollinearity and Real-World Examples

In this paragraph, the speaker discusses the concept of perfect multicollinearity, where X variables are perfectly correlated, leading to a breakdown of the regression model. The speaker explains that statistical software will not produce a result in such cases and instead reports a 'near singular matrix' error. The speaker also provides real-world examples where perfect multicollinearity can occur, such as including both the number of laps and the total distance covered in a swimmer's training, or both the distance in meters from the surface and the distance from the ocean floor when diving. These examples highlight the importance of careful variable selection to avoid multicollinearity issues.

📢 Conclusion and Further Resources

The speaker concludes the video by summarizing the key points discussed about multicollinearity and its impact on regression analysis. The speaker encourages viewers to subscribe for more content on regression and other statistical topics, and also mentions the availability of a podcast for further learning. The speaker invites viewers to send suggestions and engage in discussions through email or comments on the video.


Keywords

💡Regression

💡Multicollinearity

💡Bivariate Correlations

💡Variance Inflation Factor (VIF)

💡Standard Errors

💡Coefficients

💡R-squared

💡Dummy Variables

💡Perfect Multicollinearity

💡Omitted Variable Bias

💡Principal Components Analysis (PCA)

Highlights

Exploring multicollinearity and its impact on regression analysis.

Multicollinearity occurs when independent variables (X variables) are related, making it difficult to determine individual effects on the dependent variable (Y).

The example of a lawyer's salary being potentially influenced by both years of experience and age, where multicollinearity can obscure the individual effects.

Interpreting regression coefficients and the challenge of holding other variables constant when multicollinearity is present.

The impact of multicollinearity on standard errors and p-values, potentially leading to statistically insignificant results.

The model fit is not affected by multicollinearity; only the variance of the coefficient estimates is inflated, so the ability to make predictions remains intact.

Detecting multicollinearity through bivariate correlations and the rule of thumb that correlations greater than 0.9 might be problematic.

Variance inflation factors (VIFs) as a more robust method for multicollinearity detection, with a rule of thumb suggesting VIFs above ten as problematic.

Options for dealing with multicollinearity, including doing nothing, removing one of the correlated variables, or combining the correlated variables into a new variable.

The potential issue of omitted variable bias when removing variables to address multicollinearity.

The use of principal components analysis as a method to address multicollinearity, though it is rarely used in practice.

A simulation demonstrating the effects of different levels of multicollinearity on regression coefficients and standard errors.

The surprising finding that even high levels of correlation (e.g., 0.9) may not be as problematic as expected in a regression model.

The concept of perfect multicollinearity, where variables are perfectly correlated, leading to a breakdown of the regression model.

Real-world examples where perfect multicollinearity can occur, such as energy expenditure models in athletes and water pressure settings for divers.

The dummy variable trap in regression analysis, where including all possible dummy variables can lead to perfect multicollinearity.

The importance of understanding multicollinearity for accurate regression analysis and interpretation of statistical results.
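The omitted-variable-bias caveat in these highlights can be stated precisely with the standard textbook result (added here for illustration, not derived in the video). Suppose the true model is y = β0 + β1·x1 + β2·x2 + ε with E[ε | X] = 0, and x2 is dropped to cure multicollinearity. The slope from regressing y on x1 alone then satisfies:

```latex
\mathbb{E}[\tilde\beta_1 \mid X] = \beta_1 + \beta_2 \, \hat\delta_1,
\qquad
\hat\delta_1 = \frac{\sum_{i=1}^{n} (x_{i1} - \bar{x}_1)(x_{i2} - \bar{x}_2)}{\sum_{i=1}^{n} (x_{i1} - \bar{x}_1)^2}
```

Here δ̂1 is the slope from regressing x2 on x1. The bias term β2·δ̂1 is largest exactly when x1 and x2 are strongly related (the multicollinear case) and x2 genuinely affects y, which is the trade-off behind the warning about removing variables.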
