Video 47 - Cofactors

TLDR: This video delves into tensor calculus, focusing on the definition and analysis of the cofactors of a determinant. The presenter shows how to obtain cofactors by taking partial derivatives of the determinant with respect to its elements, which leads to the concept of a cofactor matrix. The video also explores important properties of cofactors, including their relationship to the inverse of the original matrix, and shows how cofactors can be used to invert a matrix directly. The explanations carry over to systems with other index structures, laying groundwork for future topics.

Takeaways

- 📐 The video defines and analyzes the cofactors of a determinant, specifically for a system with one upper index and one lower index.

- 🧮 The derivative of the determinant with respect to one of the elements is calculated using the product rule.

- 🔄 The relationship between the partial derivative and the original determinant is captured using a pair of Delta terms.

- ⚖️ Index renaming, swapping, and the distribution of the Delta factor are key steps in simplifying the expression.

- 🔄 Cofactors are defined as the partial derivatives of the determinant with respect to the system elements, which gives a one-to-one relationship between system elements and cofactors.

- ♾️ The cofactor matrix is introduced, with each element in the system having a corresponding cofactor.

- 🔀 The expression is shown to be skew-symmetric in its indexes: its sign changes when any two indexes are swapped.

- 🔗 Contracting the system components with the cofactors gives zero when the two free indexes differ and the determinant value when they are equal; summing over the repeated index as well gives three times the determinant.

- 🧩 The expression involving cofactors is proportional to the inverse of the original matrix, with the determinant as the constant of proportionality.

- 🔗 The concepts apply equally to systems with two upper indexes or two lower indexes, with the analysis being similar.
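For reference, the chain of results in these takeaways can be written out for a 3 × 3 system. This is a sketch under an assumed convention: the determinant is written with the generalized Kronecker delta, the element is a^u_l and its cofactor is A^l_u. The index letters are illustrative and may not match the ones used in the video.

```latex
% Assumed starting point: determinant of the 3 x 3 system a^i_j
A = \tfrac{1}{3!}\,\delta^{ijk}_{rst}\,a^r_i\,a^s_j\,a^t_k

% Product rule, using \partial a^r_i / \partial a^u_l = \delta^r_u\,\delta^l_i
\frac{\partial A}{\partial a^u_l}
  = \tfrac{1}{3!}\bigl(\delta^{ljk}_{ust}\,a^s_j a^t_k
  + \delta^{ilk}_{rut}\,a^r_i a^t_k
  + \delta^{ijl}_{rsu}\,a^r_i a^s_j\bigr)

% Renaming dummy indexes and swapping index pairs makes the three terms equal
A^l_u \equiv \frac{\partial A}{\partial a^u_l} = \tfrac{1}{2}\,\delta^{ljk}_{ust}\,a^s_j\,a^t_k

% Contraction with the system, then the inverse (requires A \neq 0)
a^u_m\,A^l_u = A\,\delta^l_m
\qquad\Longrightarrow\qquad
\bigl(a^{-1}\bigr)^l_u = \frac{A^l_u}{A}
```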

Q & A

What is the main focus of this video in the tensor calculus series?

-The main focus of this video is to define and analyze the cofactors of a determinant, particularly in the context of a system with one upper index and one lower index.

How is the derivative of a determinant with respect to a system element calculated?

-The derivative of a determinant with respect to a system element is calculated using the product rule. The process involves partial derivatives and the use of Delta terms to account for cases where indexes are equal or different.
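The claim that differentiating the determinant with respect to one element yields that element's cofactor can be checked numerically. The following is a minimal sketch, assuming a 3 × 3 system stored as a Python list of lists, where a[u][l] stands for the component with upper index u and lower index l (0-based). The helper names `det`, `d_det` and `signed_minor` are hypothetical, the test matrix is arbitrary, and the cofactor is cross-checked against the classical signed-minor rule rather than the video's index-notation formula.

```python
from itertools import permutations


def perm_sign(p):
    """Sign of a permutation of 0..n-1, from the parity of its inversion count."""
    inversions = sum(1 for x in range(len(p)) for y in range(x + 1, len(p)) if p[x] > p[y])
    return -1 if inversions % 2 else 1


def det(a):
    """Leibniz formula: sum over permutations p of sign(p) * a[0][p[0]] * ... * a[n-1][p[n-1]]."""
    n = len(a)
    total = 0
    for p in permutations(range(n)):
        term = perm_sign(p)
        for row in range(n):
            term *= a[row][p[row]]
        total += term
    return total


def d_det(a, u, l):
    """Exact partial derivative of det(a) with respect to the entry a[u][l].

    Every Leibniz term contains exactly one factor from row u, so det(a) is affine
    in a[u][l]; a unit step therefore gives the derivative with no rounding error.
    """
    bumped = [row[:] for row in a]
    bumped[u][l] += 1
    return det(bumped) - det(a)


def signed_minor(a, u, l):
    """Classical cofactor: (-1)**(u + l) times the minor with row u and column l removed."""
    minor = [[a[r][c] for c in range(len(a)) if c != l] for r in range(len(a)) if r != u]
    return (-1) ** (u + l) * det(minor)


a = [[2, -1, 0], [1, 3, 4], [0, 5, -2]]
for u in range(3):
    for l in range(3):
        # The derivative with respect to each element is that element's cofactor.
        assert d_det(a, u, l) == signed_minor(a, u, l)
```

Using integers keeps the check exact, which is why a finite difference is enough here.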

What role do the Delta terms play in the calculation?

-The Delta terms, such as Delta_lr and Delta_iu, encode the derivative of one system component with respect to another: it equals 1 when the corresponding indexes match and 0 when they do not. These terms ensure that only the components that actually depend on the chosen element contribute to the derivative.

How are cofactors defined in the context of this video?

-Cofactors are defined as partial derivatives of the determinant: the derivative of the determinant with respect to a given system element is the cofactor corresponding to that element.
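The index-notation form of the cofactor can be evaluated directly and compared with the classical signed minor. This sketch assumes the convention A^l_u = (1/2) δ^{ljk}_{ust} a^s_j a^t_k for a 3 × 3 system, with a^s_j stored as a[s][j] (0-based) and the generalized Kronecker delta computed as the determinant of a matrix of ordinary deltas. The names `gen_delta` and `cofactor` are hypothetical, and the video's own notation may differ.

```python
from fractions import Fraction
from itertools import permutations, product


def perm_sign(p):
    """Sign of a permutation of 0..n-1, from the parity of its inversion count."""
    inversions = sum(1 for x in range(len(p)) for y in range(x + 1, len(p)) if p[x] > p[y])
    return -1 if inversions % 2 else 1


def det(m):
    """Leibniz formula for the determinant of a square list-of-lists matrix."""
    n = len(m)
    total = 0
    for p in permutations(range(n)):
        term = perm_sign(p)
        for row in range(n):
            term *= m[row][p[row]]
        total += term
    return total


def gen_delta(upper, lower):
    """Generalized Kronecker delta: determinant of the matrix of ordinary deltas."""
    return det([[1 if i == r else 0 for r in lower] for i in upper])


def cofactor(a, l, u):
    """A^l_u = (1/2) delta^{l j k}_{u s t} a^s_j a^t_k for a 3 x 3 system, a^s_j stored as a[s][j]."""
    total = sum(gen_delta((l, j, k), (u, s, t)) * a[s][j] * a[t][k]
                for j, k, s, t in product(range(3), repeat=4))
    return Fraction(total, 2)


def signed_minor(a, u, l):
    """Classical cofactor of a[u][l], used only as an independent cross-check."""
    minor = [[a[r][c] for c in range(3) if c != l] for r in range(3) if r != u]
    return (-1) ** (u + l) * det(minor)


a = [[2, -1, 0], [1, 3, 4], [0, 5, -2]]
for u in range(3):
    for l in range(3):
        # One cofactor per element: A^l_u belongs to the element a^u_l = a[u][l].
        assert cofactor(a, l, u) == signed_minor(a, u, l)
```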

What is the significance of the cofactor matrix?

-The cofactor matrix is significant because it represents the collection of all cofactors corresponding to the elements of the original system. There is a one-to-one relationship between the elements of the system and their corresponding cofactors.

How does the video explain the anti-symmetry property of cofactors?

-The video explains the anti-symmetry property by showing that swapping any two indexes in the expression that defines the cofactors changes its sign. Because the expression is skew-symmetric in these indexes, it vanishes whenever two of them are equal, so it can be non-zero only when the indexes are distinct.
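A skew-symmetric quantity with a repeated index must equal its own negative, so it is zero. The sketch below illustrates this with the simplest skew-symmetric object, the permutation symbol e_{ijk} over the index values 1, 2, 3. It is a stand-in chosen for illustration; the expression in the video also involves the system components, and `levi_civita` is a hypothetical helper name.

```python
from itertools import permutations, product


def levi_civita(i, j, k):
    """Permutation symbol e_{ijk} for i, j, k in {1, 2, 3}.

    The product (i - j)(j - k)(k - i) is 0 when an index repeats and +2 or -2
    for even or odd permutations of (1, 2, 3), so halving it gives +1, -1 or 0.
    """
    return (i - j) * (j - k) * (k - i) // 2


triples = list(product((1, 2, 3), repeat=3))
for i, j, k in triples:
    e = levi_civita(i, j, k)
    # Swapping any two indexes flips the sign (skew symmetry) ...
    assert levi_civita(j, i, k) == -e
    assert levi_civita(i, k, j) == -e
    assert levi_civita(k, j, i) == -e
    # ... so a repeated index forces the value to equal its own negative, i.e. zero.
    if len({i, j, k}) < 3:
        assert e == 0

# The only non-zero entries are the six permutations of (1, 2, 3).
nonzero = [t for t in triples if levi_civita(*t) != 0]
assert sorted(nonzero) == sorted(permutations((1, 2, 3)))
```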

What happens if the indexes i and m in the cofactor contraction are not equal?

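-If the indexes i and m are not equal, the cofactor contraction evaluates to zero. The contracted expression is skew-symmetric, so it is non-zero only when its indexes are distinct and form a permutation of 1, 2, 3. With only three index values, the two summed indexes would have to avoid both i and m, which forces them to be equal, so every term vanishes. When i and m are equal, the contraction equals the determinant.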
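The zero-or-determinant behaviour of the contraction can be verified on a concrete matrix. This sketch assumes the storage convention a[u][m] for a^u_m (0-based), takes the cofactor A^l_u to be the classical cofactor of a[u][l], and lets l play the role of the index called i above. The helper `contract` and the test matrix are hypothetical.

```python
from itertools import permutations


def perm_sign(p):
    """Sign of a permutation of 0..n-1, from the parity of its inversion count."""
    inversions = sum(1 for x in range(len(p)) for y in range(x + 1, len(p)) if p[x] > p[y])
    return -1 if inversions % 2 else 1


def det(a):
    """Leibniz formula for the determinant of a square list-of-lists matrix."""
    n = len(a)
    total = 0
    for p in permutations(range(n)):
        term = perm_sign(p)
        for row in range(n):
            term *= a[row][p[row]]
        total += term
    return total


def signed_minor(a, u, l):
    """Cofactor A^l_u of the element a^u_l = a[u][l]."""
    minor = [[a[r][c] for c in range(len(a)) if c != l] for r in range(len(a)) if r != u]
    return (-1) ** (u + l) * det(minor)


def contract(a, m, l):
    """Sum over u of a^u_m A^l_u."""
    return sum(a[u][m] * signed_minor(a, u, l) for u in range(len(a)))


a = [[2, -1, 0], [1, 3, 4], [0, 5, -2]]
A = det(a)
for m in range(3):
    for l in range(3):
        # Zero when m != l, the determinant when m == l: a^u_m A^l_u = A delta^l_m.
        assert contract(a, m, l) == (A if m == l else 0)

# Summing over the repeated index as well gives three times the determinant.
trace = sum(contract(a, i, i) for i in range(3))
assert trace == 3 * A
```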

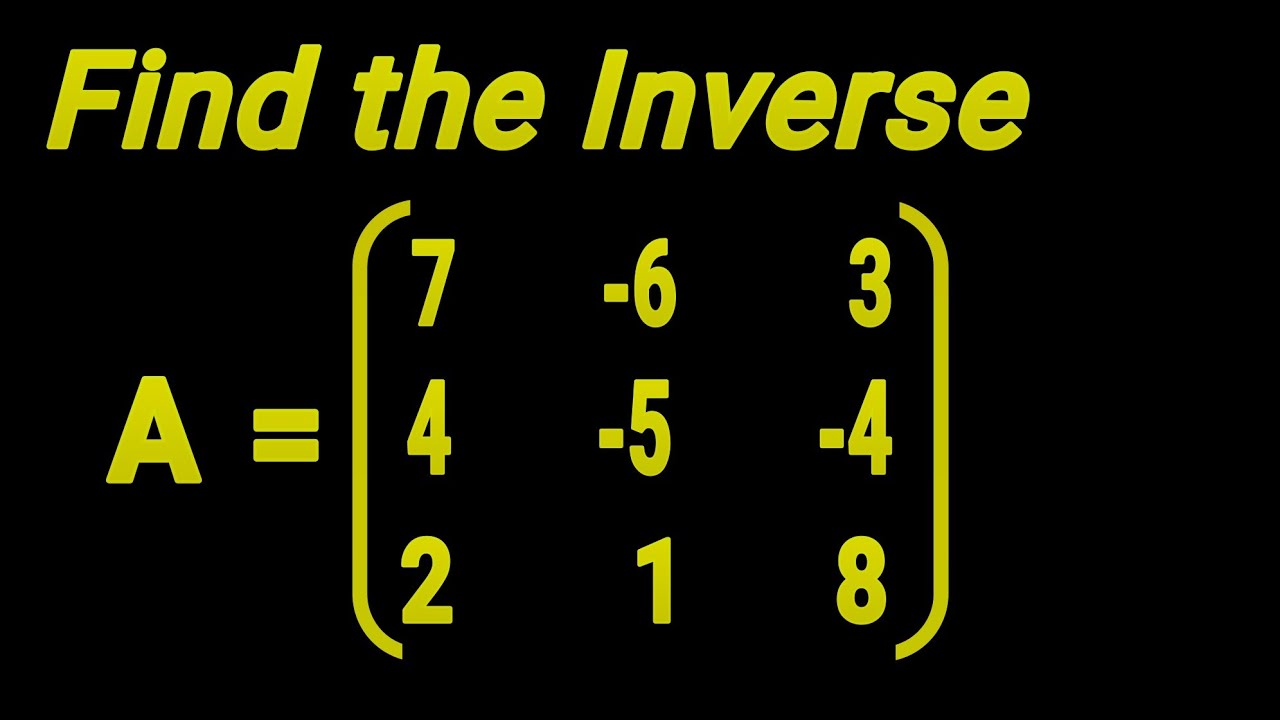

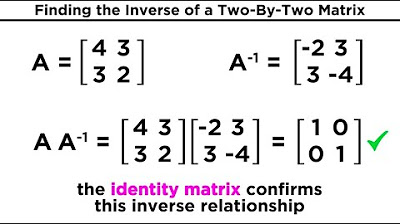

How does the cofactor matrix relate to the inverse of the original system matrix?

-The cofactor matrix is proportional to the inverse of the original system matrix. The constant of proportionality is the determinant value of the system. This relationship provides an easy method to calculate the inverse of a matrix.
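Dividing each cofactor by the determinant gives the inverse, which the following sketch checks with exact rational arithmetic. It assumes the same storage convention as the sketches above (a[u][l] for a^u_l, 0-based) and stores the result b^l_u = A^l_u / A as b[l][u]; `inverse_via_cofactors` and `matmul` are hypothetical helper names.

```python
from fractions import Fraction
from itertools import permutations


def perm_sign(p):
    """Sign of a permutation of 0..n-1, from the parity of its inversion count."""
    inversions = sum(1 for x in range(len(p)) for y in range(x + 1, len(p)) if p[x] > p[y])
    return -1 if inversions % 2 else 1


def det(a):
    """Leibniz formula for the determinant of a square list-of-lists matrix."""
    n = len(a)
    total = 0
    for p in permutations(range(n)):
        term = perm_sign(p)
        for row in range(n):
            term *= a[row][p[row]]
        total += term
    return total


def signed_minor(a, u, l):
    """Cofactor A^l_u of the element a^u_l = a[u][l]."""
    minor = [[a[r][c] for c in range(len(a)) if c != l] for r in range(len(a)) if r != u]
    return (-1) ** (u + l) * det(minor)


def inverse_via_cofactors(a):
    """b^l_u = A^l_u / A, stored as b[l][u] (the transposed cofactors divided by A)."""
    A = det(a)
    if A == 0:
        raise ValueError("matrix is singular: determinant is zero")
    n = len(a)
    return [[Fraction(signed_minor(a, u, l), A) for u in range(n)] for l in range(n)]


def matmul(x, y):
    """Plain matrix product of two list-of-lists matrices."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


a = [[2, -1, 0], [1, 3, 4], [0, 5, -2]]
b = inverse_via_cofactors(a)
identity = [[1 if i == j else 0 for j in range(3)] for i in range(3)]
assert matmul(b, a) == identity
assert matmul(a, b) == identity
```

The formula is convenient for small systems, but the Leibniz determinant used here costs on the order of n!·n operations, so elimination-based methods are the usual choice for large matrices.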

What is the importance of the Kronecker delta in this analysis?

-The Kronecker delta is important because it states the result of the cofactor contraction succinctly: the contraction equals the determinant times the Kronecker delta. This shows that the cofactor matrix divided by the determinant is the inverse of the original system matrix.

Can the analysis and results discussed be applied to systems with different index structures?

-Yes, the analysis and results can be applied to systems with different index structures, such as those with two upper indexes or two lower indexes. The process is similar, though it requires using permutation symbols instead of Delta terms.
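For a system with two lower indexes, the same results can be written with permutation symbols. The sketch assumes the forms A = (1/3!) e^{ijk} e^{rst} a_{ir} a_{js} a_{kt} and A^{ir} = (1/2) e^{ijk} e^{rst} a_{js} a_{kt}, with a_{ir} stored as a[i][r] (0-based). These are standard expressions assumed for illustration, not transcribed from the video, and `det_lower` and `cofactor_lower` are hypothetical names.

```python
from fractions import Fraction
from itertools import product


def e(i, j, k):
    """Permutation symbol for 0-based indexes; the formula depends only on index differences."""
    return (i - j) * (j - k) * (k - i) // 2


def det_lower(a):
    """A = (1/3!) e^{ijk} e^{rst} a_{ir} a_{js} a_{kt}, with a_{ir} stored as a[i][r]."""
    total = sum(e(i, j, k) * e(r, s, t) * a[i][r] * a[j][s] * a[k][t]
                for i, j, k, r, s, t in product(range(3), repeat=6))
    return Fraction(total, 6)


def cofactor_lower(a, i, r):
    """A^{ir} = (1/2) e^{ijk} e^{rst} a_{js} a_{kt}, the cofactor of a_{ir}."""
    total = sum(e(i, j, k) * e(r, s, t) * a[j][s] * a[k][t]
                for j, k, s, t in product(range(3), repeat=4))
    return Fraction(total, 2)


a = [[2, -1, 0], [1, 3, 4], [0, 5, -2]]
A = det_lower(a)
for m in range(3):
    for l in range(3):
        # a_{mr} A^{lr} = A delta^l_m: the same identity as in the mixed-index case.
        assert sum(a[m][r] * cofactor_lower(a, l, r) for r in range(3)) == (A if l == m else 0)
```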

Outlines

🧩 Tensor Calculus: Cofactors and Determinants

This paragraph introduces the concept of cofactors in tensor calculus. It explains the determinant of a system 'a' with mixed indices (one upper, one lower) and explores the partial derivative of this determinant with respect to its components. The process uses the product rule and the Kronecker delta to simplify the expression, leading to the definition of cofactors. The importance of index structure is highlighted, with a focus on free and dummy indices, and the cofactor matrix is introduced as the collection of cofactors, one for each element of the original system.

🔍 Deriving Cofactors and Their Properties

The second paragraph delves into the derivation of cofactors, emphasizing their relationship with the determinant. It discusses the index structure and how the cofactors are defined using partial derivatives. The paragraph also introduces the concept of skew symmetry in the context of cofactors, demonstrating how swapping indices affects the expression's sign. The analysis concludes with the understanding that the cofactors are proportional to the inverse of the matrix, with the determinant serving as the constant of proportionality.

📉 Skew Symmetry and Contraction of Cofactors

This section examines the skew symmetry property of the cofactor expressions, showing how the sign changes when certain index pairs are swapped. It also discusses forming contractions with system components, which turns some free indices into dummy (summed) indices. The analysis shows that the expressions are anti-symmetric with respect to specific index pairs, so for an expression to be non-zero its indices must be distinct, i.e., form a permutation of 1, 2, and 3.
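One standard identity behind this argument is that contracting the permutation symbol with system components reproduces the determinant times a permutation symbol, e_{ust} a^u_m a^s_j a^t_k = A e_{mjk}, so the contracted expression is again skew-symmetric in m, j, k. The video's own derivation may proceed differently. The sketch assumes a^u_m is stored as a[u][m] (0-based), and `contracted` is a hypothetical helper name.

```python
from itertools import product


def e(i, j, k):
    """Permutation symbol for 0-based indexes: +1 / -1 for even / odd permutations of (0, 1, 2), else 0."""
    return (i - j) * (j - k) * (k - i) // 2


def det(a):
    """Determinant of a 3 x 3 system as the sum of e(u, s, t) * a[0][u] * a[1][s] * a[2][t]."""
    return sum(e(u, s, t) * a[0][u] * a[1][s] * a[2][t] for u, s, t in product(range(3), repeat=3))


def contracted(a, m, j, k):
    """e_{ust} a^u_m a^s_j a^t_k: the symbol's lower indexes contracted with the system's upper ones."""
    return sum(e(u, s, t) * a[u][m] * a[s][j] * a[t][k] for u, s, t in product(range(3), repeat=3))


a = [[2, -1, 0], [1, 3, 4], [0, 5, -2]]
A = det(a)
for m, j, k in product(range(3), repeat=3):
    # The contraction is skew-symmetric in (m, j, k): it equals A times the permutation symbol,
    # so it is non-zero only when (m, j, k) is a permutation of (0, 1, 2).
    assert contracted(a, m, j, k) == A * e(m, j, k)
```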

🔄 Inversion of Matrices Using Cofactors

The final paragraph summarizes how cofactors are used to invert a matrix. Dividing each cofactor by the determinant (which must be non-zero) yields the multiplicative inverse of the original matrix. This gives a convenient closed-form expression for the inverse and highlights the relationship between the cofactor matrix and the determinant. The paragraph also generalizes the results to systems with two upper or two lower indices, using permutation symbols instead of the Kronecker delta, and concludes with a brief recap of the video's main points.

Keywords

💡Tensor Calculus

💡Determinant

💡Cofactors

💡Partial Derivative

💡Kronecker Delta

💡Index Notation

💡Product Rule

💡Skew Symmetry

💡Matrix Inversion

💡Permutation

💡One-to-One Relationship

Highlights

Introduction to the concept of cofactors in tensor calculus, focusing on their definition and analysis.

Explanation of the determinant of a system with one upper and one lower index.

Detailed process of taking the partial derivative of the determinant with respect to one of its components.

Introduction of Delta terms to express the partial derivatives of the system components, which equal 1 when the indices match and 0 otherwise.

Explanation of the use of the product rule in deriving the partial derivatives of determinant components.

Substitution of expressions with Delta terms and distribution of these terms across the system components.

Derivation and analysis of cofactors, establishing a one-to-one correspondence between system elements and their cofactors.

Demonstration that the derived relationship between cofactors and system components is consistent with index structures.

Introduction of the concept of a cofactor matrix, describing the collective relationship between system elements and cofactors.

Discussion of the anti-symmetric property of indexes, leading to the requirement that, for a non-zero value, the indexes must be distinct and form a permutation of 1, 2, 3.

Exploration of the contraction process using system components and its implications for the anti-symmetric property.

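Analysis of the condition under which the contraction expression equals zero (different free indexes) or the determinant (equal free indexes), with the fully summed contraction giving three times the determinant.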

Simplification of the relationship between cofactors and the determinant using the Kronecker Delta function.

Conclusion that cofactors are proportional to the inverse of the matrix, with the determinant as the constant of proportionality.

Summary of the video, reiterating the key results and their implications for matrix inversion using cofactors.
