6. Matrix Computing

TLDR: The video script covers high-performance computing, focusing on solving systems of linear equations through matrix manipulations. It explains why computers are better suited than people to such tasks and introduces the classes of matrix problems that arise in scientific fields. The script discusses the Newton-Raphson search method for linearizing non-linear equations, Gaussian elimination for solving linear systems, and the eigenvalue problem. It recommends scientific subroutine libraries such as JAMA for matrix computations because of their efficiency and robustness. Practical aspects of matrix computing, including memory management and array handling, are also covered, along with a demonstration of using JAMA for eigenvalue problems in Java.

Takeaways

- 🧠 High performance computing (HPC) is vital for solving complex systems of linear equations, which are common in scientific problems.

- 💻 Computers excel at matrix manipulations, a task that is both repetitive and complex, making them ideal for HPC tasks that involve large matrices.

- 🔍 The script discusses a specific problem involving two weights and strings, illustrating the need for HPC to solve simultaneous equations with multiple unknowns.

- 📚 The Newton-Raphson search method is highlighted as a technique that linearizes non-linear equations around a current guess, so that each step of the search reduces to a linear matrix problem.

- 📉 Scientific subroutine libraries are recommended for HPC tasks due to their efficiency, robustness, and ability to minimize round-off errors.

- 🛠️ The script emphasizes the importance of understanding the underlying mathematics when using HPC, as mathematical rules still apply even with powerful computational tools.

- 🔢 The distinction between different classes of matrix problems is important for selecting the appropriate subroutine from a library.

- 📈 Gaussian elimination is presented as the preferred method for solving systems of linear equations due to its robustness and efficiency.

- 🔑 The eigenvalue problem is introduced as a more complex type of matrix problem, where the right-hand side of the equation contains an unknown eigenvalue as well as the unknown vector, complicating the solution.

- 🖥️ Practical aspects of matrix computing, such as memory management and avoiding page faults, are crucial for efficient HPC.

- 🔗 The script provides an overview of various matrix subroutine libraries available for different programming languages, emphasizing their utility in solving real-world problems.

Q & A

What is the main topic discussed in the script?

-The main topic discussed in the script is high-performance computing, specifically focusing on solving systems of linear equations with computers and the use of matrix manipulations in scientific problems.

Why are computers particularly good at dealing with matrices?

-Computers are particularly good at dealing with matrices because they can efficiently perform repetitive calculations, which is a common requirement in matrix operations, and they can handle large-scale computations that would be impractical for humans to do by hand.

What is the significance of using matrix equations in high-performance computing?

-Using matrix equations in high-performance computing simplifies the representation of complex systems of equations, making it easier to solve them computationally. It also allows for a more abstract approach to problem-solving, which can be more efficient and effective when dealing with large systems.

What is an example of a physical system that can be modeled using matrix equations?

-An example given in the script is the problem of two weights hanging by strings, in which the angles of the strings and the tensions in them must be determined. It can be set up as a matrix problem with nine equations and nine unknowns.

Why can't linear algebra be used to solve the equations in the two weights problem?

-Linear algebra can't be applied directly to the two weights problem because the equations derived from the physics of statics are non-linear. The script instead uses a search technique (Newton-Raphson) that linearizes the equations around a current guess, so that a linear system is solved at each step of the search.
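The linearize-and-solve idea can be sketched on a much smaller system than the nine-unknown weights problem. The following is a minimal illustration, not the script's program: the two equations (x² + y² = 4 and xy = 1), the class name `NewtonRaphson2D`, and the starting guess are all assumptions chosen for the example. Each iteration builds the Jacobian at the current guess and solves the 2×2 linear system J·Δ = −f for the correction.

```java
// Minimal sketch: Newton-Raphson for two non-linear equations in two unknowns.
// The system below is illustrative only (not the two-weights problem):
//   f1(x, y) = x^2 + y^2 - 4 = 0
//   f2(x, y) = x*y - 1       = 0
class NewtonRaphson2D {

    // Residuals of the illustrative system at (x, y).
    static double[] f(double x, double y) {
        return new double[] { x * x + y * y - 4.0, x * y - 1.0 };
    }

    // Iterate from the guess (x, y) until the residuals are below tol.
    static double[] solve(double x, double y, double tol, int maxIter) {
        for (int it = 0; it < maxIter; it++) {
            double[] r = f(x, y);
            if (Math.abs(r[0]) + Math.abs(r[1]) < tol) {
                break; // converged
            }
            // Jacobian J = [[a, b], [c, d]] of (f1, f2) at the current guess.
            double a = 2.0 * x, b = 2.0 * y;
            double c = y,       d = x;
            double det = a * d - b * c;
            if (det == 0.0) {
                throw new ArithmeticException("Singular Jacobian");
            }
            // Solve the linearized system J * (dx, dy) = -(f1, f2) by Cramer's rule.
            double p = -r[0], q = -r[1];
            double dx = (p * d - b * q) / det;
            double dy = (a * q - p * c) / det;
            x += dx;
            y += dy;
        }
        return new double[] { x, y };
    }

    public static void main(String[] args) {
        double[] s = solve(2.0, 0.5, 1e-12, 50);
        System.out.println("x = " + s[0] + ", y = " + s[1]);
    }
}
```

For a 2×2 system Cramer's rule is enough; for larger systems the linear solve inside the loop is where a library routine or Gaussian elimination would be used.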

What is the role of scientific subroutine libraries in solving matrix problems?

-Scientific subroutine libraries provide pre-written, efficient, and robust routines for solving matrix problems. They are designed to handle large-scale computations and minimize errors, often being faster and more reliable than custom-written algorithms.

Why are eigenvalue problems considered more challenging than standard linear algebra problems?

-Eigenvalue problems are more challenging because the eigenvalue is itself an unknown: for an N×N matrix there are N equations but N + 1 unknowns (the N components of the vector plus the eigenvalue). Non-trivial solutions therefore exist only for special values of the eigenvalue, which must be found as part of the solution.
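In standard notation (the symbols A, x, λ, and I are the usual textbook ones, assumed here rather than quoted from the script), the condition for a non-trivial solution can be written as:

```latex
A\,\mathbf{x} = \lambda\,\mathbf{x}
\quad\Longrightarrow\quad
(A - \lambda I)\,\mathbf{x} = \mathbf{0},
\qquad
\mathbf{x} \neq \mathbf{0}
\ \text{ requires }\
\det(A - \lambda I) = 0 .
```

The determinant condition is the secular equation; its roots are the eigenvalues.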

What is the importance of understanding the mathematical principles behind matrix computations?

-Understanding the mathematical principles behind matrix computations is crucial because it allows one to correctly interpret the results provided by computer algorithms, to identify potential errors in the computations, and to ensure that the mathematical rules are not violated during the computation.

What are some practical considerations when dealing with matrix computations on a computer?

-Some practical considerations include being aware of the computer's finite memory, the number of computational steps required (often proportional to the cube of the matrix dimensions), and the potential for page faults when accessing elements in a matrix that are not currently stored in memory.

How does the script suggest one should approach the use of subroutine libraries for matrix problems?

-The script suggests that one should make use of existing subroutine libraries for matrix problems rather than writing their own routines, due to the robustness, efficiency, and reliability of these libraries. However, it also emphasizes the importance of understanding what these routines do, rather than treating them as black boxes.

Outlines

🧠 Introduction to High Performance Computing with Linear Equations

The script begins with an introduction to high performance computing (HPC), focusing on solving systems of linear equations using computers. It emphasizes the suitability of computers for matrix manipulations, a task that is less suited to human capabilities. The presenter introduces the concept of HPC, which often involves matrix operations, and recalls a previous problem involving two weights suspended by strings. This problem, involving nine unknowns, was set up as a matrix problem, highlighting the need for HPC in solving complex, real-world problems that involve simultaneous equations.

🔍 The Utility of Scientific Subroutine Libraries in HPC

The second paragraph discusses the importance of scientific subroutine libraries in high performance computing. These libraries, often available for free, contain pre-written code for solving equations and are recommended for their efficiency, speed, and robustness. The text explains that these libraries are designed to minimize round-off errors and are written with an understanding of computer architecture, making them superior to custom-written algorithms for most users. However, the presenter also notes the need for understanding the underlying algorithms, despite advocating the use of these libraries.

📚 Understanding Matrix Problems and Their Computational Classes

The third paragraph delves into the different classes of matrix problems and the importance of understanding these classifications for effective communication and problem-solving. It stresses the importance of adhering to mathematical rules, even when using powerful computational tools. The paragraph also touches on the limitations of solutions when there are more unknowns than equations, and the necessity of having a unique set of linearly independent equations for a unique solution.

🔢 Gaussian Elimination and Inverse Matrix Methods

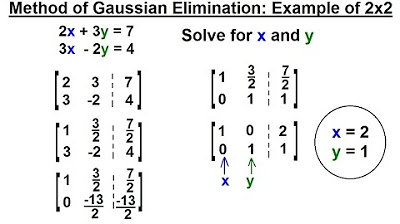

This section explains two primary methods for solving systems of linear equations: Gaussian elimination and the use of inverse matrices. Gaussian elimination is praised for its robustness and efficiency, as it avoids the need to calculate the inverse of a matrix explicitly. The inverse matrix method, while conceptually straightforward, is often less efficient and more prone to errors in a computational context. The paragraph also encourages learners to compare both methods to gain a deeper understanding of their computational properties.
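As a rough sketch of the first method, the following plain-Java routine performs Gaussian elimination with partial pivoting followed by back substitution, and then checks the answer through the residual Ax − b. It is a teaching illustration under stated assumptions, not a replacement for a library routine: the class name `GaussElimination`, the method names, and the 3×3 test system are all hypothetical choices for this example.

```java
// Minimal sketch: solve A x = b by Gaussian elimination with partial pivoting.
class GaussElimination {

    static double[] solve(double[][] aIn, double[] bIn) {
        int n = bIn.length;
        // Work on copies so the caller's arrays are left untouched.
        double[][] a = new double[n][];
        for (int i = 0; i < n; i++) {
            a[i] = aIn[i].clone();
        }
        double[] b = bIn.clone();

        for (int k = 0; k < n; k++) {
            // Partial pivoting: pick the row with the largest entry in column k.
            int p = k;
            for (int i = k + 1; i < n; i++) {
                if (Math.abs(a[i][k]) > Math.abs(a[p][k])) {
                    p = i;
                }
            }
            if (a[p][k] == 0.0) {
                throw new ArithmeticException("Matrix is singular");
            }
            double[] rowTmp = a[k]; a[k] = a[p]; a[p] = rowTmp;
            double bTmp = b[k];     b[k] = b[p]; b[p] = bTmp;

            // Eliminate column k from all rows below the pivot row.
            for (int i = k + 1; i < n; i++) {
                double m = a[i][k] / a[k][k];
                for (int j = k; j < n; j++) {
                    a[i][j] -= m * a[k][j];
                }
                b[i] -= m * b[k];
            }
        }

        // Back substitution on the upper-triangular system.
        double[] x = new double[n];
        for (int i = n - 1; i >= 0; i--) {
            double s = b[i];
            for (int j = i + 1; j < n; j++) {
                s -= a[i][j] * x[j];
            }
            x[i] = s / a[i][i];
        }
        return x;
    }

    // Largest absolute component of the residual A x - b.
    static double maxResidual(double[][] a, double[] x, double[] b) {
        double worst = 0.0;
        for (int i = 0; i < b.length; i++) {
            double s = -b[i];
            for (int j = 0; j < x.length; j++) {
                s += a[i][j] * x[j];
            }
            worst = Math.max(worst, Math.abs(s));
        }
        return worst;
    }

    public static void main(String[] args) {
        double[][] a = { { 2, 1, -1 }, { -3, -1, 2 }, { -2, 1, 2 } };
        double[] b = { 8, -11, -3 };
        double[] x = solve(a, b);
        System.out.println(java.util.Arrays.toString(x));
        System.out.println("max residual = " + maxResidual(a, x, b));
    }
}
```

The residual check mirrors the advice in the Highlights to test a matrix solution for accuracy rather than trust it blindly.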

🎓 Eigenvalue Problems and Their Computational Solutions

The script introduces the eigenvalue problem, a matrix equation of the form Ax = λx in which the eigenvalue λ on the right-hand side is unknown along with the vector x, making it inherently more complex than a standard linear system. It explains the conditions under which non-trivial solutions exist and how these solutions are found using determinants and specialized library routines. The paragraph also discusses the practical aspects of solving eigenvalue problems on a computer, including the use of secular equations and the iterative search for eigenvalues.
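For a symmetric 2×2 matrix the secular equation det(A − λI) = 0 is a quadratic and can be solved in closed form, which makes a convenient sanity check before turning to a library. The sketch below is illustrative only: the class `SecularEquation2x2` and its methods are hypothetical names, and a real application with larger matrices would rely on a library routine rather than on the determinant.

```java
// Minimal sketch: eigenvalues of the symmetric matrix [[a, b], [b, d]]
// obtained from the secular equation det(A - lambda*I) = 0.
class SecularEquation2x2 {

    // Value of det(A - lambda*I) for A = [[a, b], [b, d]].
    static double secular(double a, double b, double d, double lambda) {
        return (a - lambda) * (d - lambda) - b * b;
    }

    // Roots of the quadratic secular equation, returned in ascending order.
    static double[] eigenvalues(double a, double b, double d) {
        double mean = 0.5 * (a + d);
        double half = Math.sqrt(0.25 * (a - d) * (a - d) + b * b);
        return new double[] { mean - half, mean + half };
    }

    public static void main(String[] args) {
        double[] ev = eigenvalues(2.0, 1.0, 2.0);
        for (double lambda : ev) {
            System.out.println("lambda = " + lambda
                    + ", det(A - lambda*I) = " + secular(2.0, 1.0, 2.0, lambda));
        }
    }
}
```

Evaluating the determinant at each computed eigenvalue plays the same role as a residual check: a value near zero confirms that the eigenvalue satisfies the secular equation.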

⚠️ Practical Considerations in Matrix Computing

This paragraph highlights the practical aspects and potential pitfalls of matrix computing, such as the finite nature of computer memory and the importance of avoiding page faults. It discusses the impact of array size on computation time and the need to be mindful of memory usage when dealing with large matrices. The text also touches on the efficiency of accessing matrix elements and the importance of understanding the storage order of matrices in different programming languages.
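A back-of-the-envelope estimate makes the scaling concrete. The sketch below assumes 8-byte double-precision elements and uses the standard leading-order count of about (2/3)N³ floating-point operations for Gaussian elimination; the class and method names are hypothetical, and the figures are estimates rather than measurements.

```java
// Minimal sketch: rough memory and operation-count estimates for an N x N matrix.
class MatrixCost {

    // Storage for N*N double-precision values at 8 bytes each
    // (ignores any per-array overhead of the language runtime).
    static long bytesForDoubleMatrix(long n) {
        return 8L * n * n;
    }

    // Leading-order floating-point operation count for Gaussian elimination.
    static double gaussFlops(long n) {
        return (2.0 / 3.0) * n * n * n;
    }

    public static void main(String[] args) {
        for (long n : new long[] { 100, 1000, 10000 }) {
            System.out.println("N = " + n
                    + ": " + bytesForDoubleMatrix(n) + " bytes, ~"
                    + gaussFlops(n) + " flops");
        }
    }
}
```

Doubling N quadruples the memory but multiplies the work by eight, which is why matrix size dominates both storage and run time.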

🗄️ Memory Management and Matrix Storage in Computing

The focus of this paragraph is on memory management and how matrices are stored in computing environments. It explains the difference between row-major and column-major storage orders and the implications these have on the efficiency of matrix operations. The paragraph also addresses common programming mistakes related to array indices and the distinction between physical and logical dimensions of matrices.
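The two storage orders differ only in how a pair of indices (i, j) maps to a position in linear memory. The sketch below is a hedged illustration with hypothetical names: it uses 0-based indices as in Java and C (Fortran indices conventionally start at 1), and it treats a Java `double[][]` as rows that are each contiguous, which is why the row-by-row loop is the natural traversal in Java.

```java
// Minimal sketch: index mapping for row-major and column-major storage,
// plus two traversal orders over a Java 2D array.
class StorageOrder {

    // Row-major (Java/C style): elements of one row are adjacent in memory.
    static int rowMajorIndex(int i, int j, int nRows, int nCols) {
        return i * nCols + j;
    }

    // Column-major (Fortran style): elements of one column are adjacent.
    static int colMajorIndex(int i, int j, int nRows, int nCols) {
        return i + j * nRows;
    }

    // Inner loop runs along a row: consecutive memory accesses in Java.
    static double sumRowOrder(double[][] m) {
        double s = 0.0;
        for (int i = 0; i < m.length; i++) {
            for (int j = 0; j < m[i].length; j++) {
                s += m[i][j];
            }
        }
        return s;
    }

    // Inner loop runs down a column: each access jumps to a different row.
    // Assumes a rectangular matrix (all rows the same length).
    static double sumColOrder(double[][] m) {
        double s = 0.0;
        for (int j = 0; j < m[0].length; j++) {
            for (int i = 0; i < m.length; i++) {
                s += m[i][j];
            }
        }
        return s;
    }

    public static void main(String[] args) {
        double[][] m = { { 1, 2, 3 }, { 4, 5, 6 } };
        System.out.println("row order sum = " + sumRowOrder(m));
        System.out.println("col order sum = " + sumColOrder(m));
        System.out.println("row-major index of (1,2) in 3x4: " + rowMajorIndex(1, 2, 3, 4));
        System.out.println("col-major index of (1,2) in 3x4: " + colMajorIndex(1, 2, 3, 4));
    }
}
```

Both traversals return the same sum; the difference is in memory access pattern, which only becomes visible as run time once the matrix is large enough to stress the cache or cause page faults.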

🌐 Exploring Subroutine Libraries for Matrix Computations

The script provides an overview of various subroutine libraries available for matrix computations, including Netlib, LAPACK, SLATEC, and others. It discusses the features and applications of these libraries, such as their use in linear algebra, signal processing, and eigenvalue analysis. The paragraph emphasizes the value of these libraries for both educational purposes and practical, large-scale scientific computing.

📝 Demonstrating the Use of JAMA, a Java Matrix Library

This section provides a practical demonstration of using the JAMA library in Java for solving linear algebra problems, including the eigenvalue decomposition. It walks through a sample Java program that utilizes JAMA to solve for eigenvalues and eigenvectors of a matrix, highlighting the simplicity and effectiveness of the library. The script also discusses the object-oriented approach of JAMA and its ability to handle complex matrix operations with ease.

🧑‍💻 Encouraging Hands-on Experience with JAMA

The final paragraph encourages learners to gain hands-on experience with the JAMA library by trying it out on sample test problems. It suggests that practical application is essential for understanding and effectively using the library for more complex problems. The script ends on a note that emphasizes the importance of experimentation and learning through doing.

Keywords

💡High Performance Computing (HPC)

💡Linear Equations

💡Matrices

💡Newton-Raphson Search Method

💡Gaussian Elimination

💡Eigenvalue Problem

💡Subroutine Libraries

💡Matrix Multiplication

💡Scientific Subroutine Packages

💡JAMA

Highlights

Introduction to high performance computing and its application in solving systems of linear equations.

Computers excel at matrix manipulations which are less intuitive for humans.

High performance computing often involves dealing with matrices due to their prevalence in scientific problems.

The problem of two weights hanging by strings as an example of a matrix problem in physics.

Nine unknowns in the two weights problem, necessitating the use of matrix equations.

The use of search techniques to solve non-linear equations by linearizing them.

The standard form for linear equations and the Newton-Raphson search method.

The importance of scientific subroutine libraries for solving matrix problems efficiently.

Advantages of using industrial-strength subroutine libraries over custom-written algorithms.

The recommendation to not write your own matrix routines due to robustness and efficiency.

Challenges of using subroutine libraries include installation and understanding which routine to use.

Different classes of matrix problems and the importance of understanding them for effective communication and problem-solving.

The basic problem of linear algebra: solving a system of linear equations.

Gaussian elimination as the preferred method for solving systems of linear equations.

The eigenvalue problem and its distinction from standard linear algebra problems.

Practical aspects of matrix computing, including dealing with memory and avoiding page faults.

The impact of matrix size on computational complexity and the importance of efficient memory usage.

The difference in storage order between Java/C and Fortran and its implications for algorithm efficiency.

The JAMA Java matrix library as an example of an object-oriented approach to matrix operations.

Demonstration of the JAMA library's capabilities in solving linear algebra problems with simple code examples.

The practicality of JAMA for real-world applications and its ease of use for beginners.

The Eigenvalue program example showcasing the simplicity of using JAMA for sophisticated computations.

The importance of testing matrix solutions for accuracy using residuals.
