Statistical Mechanics Lecture 2

TLDR This lecture from Stanford University examines the Boltzmann constant and its role in relating temperature to energy. It explains that the Boltzmann constant is a conversion factor between human-conceived units of temperature and more fundamental units of energy. The lecture reviews how temperature scales such as Fahrenheit and Kelvin were developed, noting that their unit sizes were chosen for human convenience before anyone understood that temperature reflects molecular energy. It then shows that temperature is a measure of energy at the molecular level, with the Boltzmann constant bridging the macroscopic perception of temperature and the microscopic kinetic energy of molecules. The lecture also covers entropy, its units, and its relationship with temperature, describing entropy as a measure of information and disorder in a system. It concludes by connecting the abstract statistical mechanical definition of temperature with the everyday experience of temperature differences and the flow of energy from hotter to cooler systems, as described by the second law of thermodynamics.

Takeaways

- 📏 The Boltzmann constant is a conversion factor between human units of temperature (such as kelvin) and the more fundamental units of energy used in physics.

- 🌡️ Temperature is a measure of energy at a fundamental level, and it is related to the kinetic energy of molecules in a gas.

- 🔄 The concept of temperature is derived from the notions of energy and entropy, and it is defined as the rate of change of energy with respect to entropy (dE = T dS).

- ⚖️ The Kelvin scale is based on absolute zero, whereas the Celsius scale is based on the temperature at which water freezes, making it an arbitrary choice.

- 🔩 If judiciously chosen, units can simplify calculations. For instance, setting the speed of light to one in certain units simplifies equations in physics.

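- 🧬 Avogadro's number is related to the Boltzmann constant: Avogadro's number is roughly 6 times 10 to the 23rd, while the Boltzmann constant is roughly 10 to the minus 23rd in joules per kelvin, so the two are nearly reciprocal in magnitude and their product is the gas constant.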

- 🔵 The natural unit for temperature could be joules, but because the energy of a single molecule at room temperature is very small, the Boltzmann constant is also very small.

- 🔴 The entropy of a system is a measure of information and is typically measured in bits, which are dimensionless units.

- 🔀 When two systems are connected, energy will flow from the system at a higher temperature to the one at a lower temperature, reflecting the second law of thermodynamics.

- 🔄 The entropy of a system increases as it approaches thermal equilibrium, the configuration the system settles into after a long enough time, where the entropy reaches its maximum.
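
The link between Avogadro's number and the Boltzmann constant noted in the takeaways can be checked numerically. The following is a minimal sketch, assuming the exact SI values of the two constants; the names `K_B`, `N_A`, and `gas_constant` are illustrative and do not come from the lecture.

```python
# Exact values fixed by the 2019 redefinition of the SI.
K_B = 1.380649e-23    # Boltzmann constant, joules per kelvin
N_A = 6.02214076e23   # Avogadro's number, particles per mole


def gas_constant(k_b: float = K_B, n_a: float = N_A) -> float:
    """Return the molar gas constant R = N_A * k_B in J/(mol K)."""
    return n_a * k_b


R = gas_constant()
print(f"R = {R:.4f} J/(mol K)")
```

The product of a very large number and a very small number is an ordinary, human-sized quantity, which is one way to see that both constants are bookkeeping between molecular and human scales.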

Q & A

What is the Boltzmann constant and why is it significant in physics?

-The Boltzmann constant is a fundamental constant in physics that serves as a conversion factor between temperature and energy. It is typically denoted by 'k' or 'kB' and is used in various equations in thermodynamics and statistical mechanics. It is significant because it allows for the connection between macroscopic temperature scales and the microscopic world of particle kinetic energies.

What is the relationship between the Boltzmann constant and the energy of a molecule in a dilute gas?

-The average translational kinetic energy of a molecule in a dilute gas is related to the Boltzmann constant through the equation: average kinetic energy = (3/2) kB * T, where T is the temperature in kelvin. This equation shows that the average kinetic energy per molecule is directly proportional to the temperature, with the Boltzmann constant setting the proportionality.
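
To give a feel for the size of this energy, here is a small sketch that evaluates (3/2) kB * T at a chosen temperature. The function name and the choice of 300 K as "room temperature" are assumptions made for illustration.

```python
K_B = 1.380649e-23            # Boltzmann constant, J/K (exact SI value)
JOULES_PER_EV = 1.602176634e-19  # one electronvolt in joules (exact SI value)


def mean_kinetic_energy(temperature_kelvin: float) -> float:
    """Average translational kinetic energy per molecule, in joules."""
    if temperature_kelvin < 0:
        raise ValueError("temperature in kelvin cannot be negative")
    return 1.5 * K_B * temperature_kelvin


room_temperature = 300.0  # assumed value for room temperature, in kelvin
energy_joules = mean_kinetic_energy(room_temperature)
energy_ev = energy_joules / JOULES_PER_EV
print(f"{energy_joules:.3e} J per molecule, or {energy_ev:.4f} eV")
```

The result is of order 10 to the minus 21st joules, which is why a temperature unit sized for human use forces the Boltzmann constant to be so small.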

Why are Kelvin units or centigrade units used to measure temperature?

-Kelvin units and centigrade units are used to measure temperature because they are convenient for human scales and magnitudes of quantities. The Kelvin scale is based on absolute zero, the theoretical lowest limit of temperature, making it a more fundamental unit. Centigrade, or Celsius, units are based on the freezing and boiling points of water at atmospheric pressure, which are easily reproducible and understandable for human use.

What is the connection between temperature and energy in the context of entropy?

-In the context of entropy, temperature is defined as the rate of change of energy with respect to entropy. This relationship is given by the equation dE = TdS, where dE is the change in energy, T is the temperature, and dS is the change in entropy. This shows that temperature is a measure of the energy change that corresponds to a unit change in entropy.

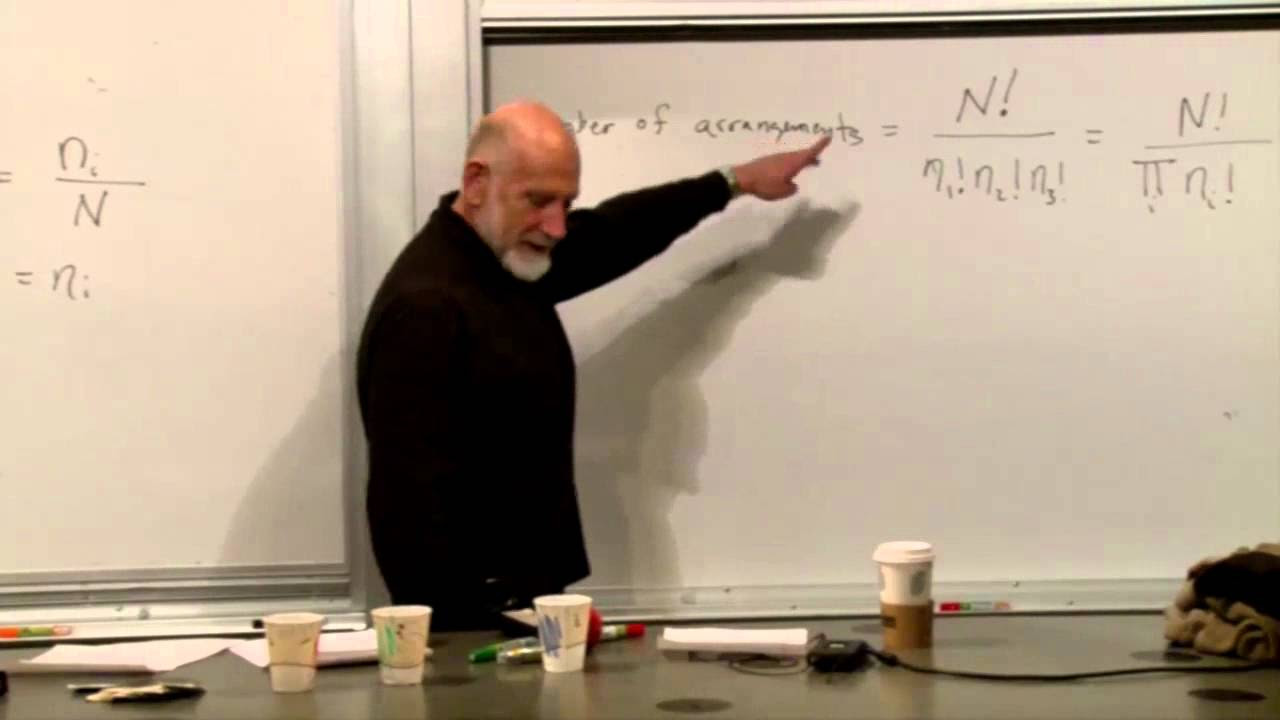

How does the concept of entropy relate to the number of states in a system?

-Entropy, denoted by 'S', is a measure of the number of possible microscopic states of a system that are consistent with its macroscopic properties. It is calculated using the formula S = -kB ∑P_i * ln(P_i), where P_i is the probability of the system being in a particular state. The higher the entropy, the greater the number of states accessible to the system.
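
The formula above can be evaluated directly for any probability distribution. The sketch below is illustrative only: the function name `gibbs_entropy` and the default `k_b=1.0` (entropy in dimensionless natural units) are assumptions, not notation from the lecture.

```python
import math


def gibbs_entropy(probs, k_b=1.0):
    """Return S = -k_b * sum(P_i * ln(P_i)).

    States with P_i = 0 contribute nothing, since p * ln(p) tends to 0.
    """
    if any(p < 0 for p in probs):
        raise ValueError("probabilities must be non-negative")
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return -k_b * sum(p * math.log(p) for p in probs if p > 0)


# Eight equally likely states: the entropy reduces to ln(8).
uniform = [1.0 / 8] * 8
s_uniform = gibbs_entropy(uniform)
s_bits = s_uniform / math.log(2)   # convert natural units to bits

# A system known to be in one state carries no missing information.
s_certain = gibbs_entropy([1.0, 0.0, 0.0])

print(s_uniform, s_bits, s_certain)
```

For a uniform distribution over N states the sum collapses to ln(N), which is the sense in which entropy counts accessible states; dividing by ln(2) expresses the same quantity in bits.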

Why is the Boltzmann constant important in the calculation of entropy?

-The Boltzmann constant is important in the calculation of entropy because it relates the macroscopic concept of temperature to the microscopic concept of energy at the level of individual particles. It converts entropy from a dimensionless quantity (when calculated as -∑P_i * ln(P_i)) to units of energy per kelvin (when used in the context of thermodynamics).

What is the significance of the second law of thermodynamics in the context of energy exchange between two systems?

-The second law of thermodynamics states that the total entropy of an isolated system can never decrease over time, and is constant if and only if all processes are reversible. In the context of energy exchange between two systems, it implies that energy will flow from the system at a higher temperature to the one at a lower temperature, resulting in an increase in the total entropy of the combined systems until equilibrium is reached.
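
The direction of energy flow follows from dE = T dS applied to each system separately. As a hedged sketch, suppose a small amount of energy `delta_e` leaves system A and enters system B, with both temperatures expressed in energy units; the function name and the sample numbers are assumptions for illustration.

```python
def total_entropy_change(delta_e: float, t_a: float, t_b: float) -> float:
    """Entropy change of A plus B when a small energy delta_e moves from A to B.

    Uses dS = dE / T for each system: A loses delta_e, B gains delta_e.
    Temperatures are positive and measured in the same energy units as delta_e.
    """
    if t_a <= 0 or t_b <= 0:
        raise ValueError("temperatures must be positive")
    ds_a = -delta_e / t_a
    ds_b = delta_e / t_b
    return ds_a + ds_b


# A is hotter than B: moving energy from A to B raises the total entropy.
hot_to_cold = total_entropy_change(delta_e=1e-3, t_a=2.0, t_b=1.0)

# Moving the same energy the other way (from the colder system to the hotter one) lowers it.
cold_to_hot = total_entropy_change(delta_e=1e-3, t_a=1.0, t_b=2.0)

print(hot_to_cold, cold_to_hot)
```

Only the first transfer is allowed by the second law, so energy flows from the hotter system to the colder one, and the flow stops when the two temperatures are equal and the entropy change vanishes.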

How does the concept of thermal equilibrium relate to the average energy and probability distribution of a system?

-In thermal equilibrium, a system's average energy is constant, and it is characterized by a probability distribution that describes the likelihood of the system being in different energy states. The temperature of the system is a measure of the average energy change per unit change in entropy, which is determined by this probability distribution.

What is the significance of the first law of thermodynamics in terms of energy conservation during the interaction of two systems?

-The first law of thermodynamics, also known as the law of energy conservation, states that energy cannot be created or destroyed in an isolated system. When two systems interact, the total energy of the combined system remains constant. If one system gains energy, the other loses an equal amount, ensuring that the total energy is conserved.

Why is the concept of degeneracy in energy states considered unusual?

-Degeneracy, where multiple states have exactly the same energy, is considered unusual because in a generic system two distinct states have no reason to share precisely the same energy. Exact degeneracies typically arise only under special conditions, most commonly when the system has a symmetry that forces several states to the same energy level.

How does the monotonic relationship between energy and entropy affect the concept of temperature?

-The monotonic relationship between energy and entropy implies that as the energy of a system increases, its entropy also increases, and vice versa. This relationship is crucial for defining temperature, as it ensures that temperature is a positive quantity that can be used to measure the rate of energy change with respect to entropy, as expressed by the equation dE = TdS.

Outlines

📏 Introduction to the Boltzmann Constant and Units

The first paragraph introduces the Boltzmann constant as a conversion factor in physics, similar to the speed of light. It discusses the concept of human units, which are convenient for everyday measurements, and how they relate to more fundamental units. The paragraph also touches on the history of temperature measurement, the Kelvin and Fahrenheit scales, and the arbitrary nature of these units. It concludes with the idea that temperature is fundamentally related to energy, particularly the kinetic energy of gas molecules.

🔍 The Boltzmann Constant and Energy Quantification

This paragraph delves into the Boltzmann constant's role in connecting the energy of a single molecule in a dilute gas to temperature. It explains that the Boltzmann constant has units of energy per degree Kelvin and is a small number, illustrating the tiny amount of energy associated with a molecule at room temperature. The paragraph also discusses the relationship between the Boltzmann constant and Avogadro's number, and how temperature is inherently an energy measurement in fundamental physics.

🌡️ Redefining Temperature and Entropy Units

The third paragraph explores the concept of redefining the unit of temperature to eliminate the Boltzmann constant from equations. It introduces a new unit of temperature, capital T, which is defined as the Boltzmann constant times the human unit of temperature. This redefines temperature in terms of energy, and the paragraph also discusses the concept of entropy, its units, and how it differs from the traditional Carnot definition by a factor of the Boltzmann constant. It concludes with the statistical definition of entropy and its connection to the Boltzmann entropy.
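
The change of units described here amounts to two multiplications by the Boltzmann constant. The sketch below assumes SI values and uses illustrative function names; it is not code from the lecture.

```python
import math

K_B = 1.380649e-23               # Boltzmann constant, J/K (exact SI value)
JOULES_PER_EV = 1.602176634e-19  # one electronvolt in joules (exact SI value)


def temperature_in_energy_units(t_kelvin: float) -> float:
    """Capital T: the Boltzmann constant times the human temperature, in joules."""
    return K_B * t_kelvin


def thermodynamic_entropy(s_dimensionless: float) -> float:
    """Carnot-style entropy in J/K from the dimensionless statistical entropy."""
    return K_B * s_dimensionless


t_room = temperature_in_energy_units(300.0)    # 300 K assumed as room temperature
t_room_ev = t_room / JOULES_PER_EV
one_bit = thermodynamic_entropy(math.log(2))   # entropy of one bit in J/K

# The product T * S is the same in either system of units.
human_product = 300.0 * one_bit
natural_product = t_room * math.log(2)

print(t_room, t_room_ev, one_bit)
```

Because temperature gains a factor of the Boltzmann constant and entropy loses one, the product of the two is unchanged, which is why the constant drops out of relations such as dE = T dS.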

🔄 The Boltzmann Constant and Energy Units

The fourth paragraph clarifies that the Boltzmann constant effectively disappears from equations when using the new unit of temperature, capital T. It emphasizes that temperature, when defined in this way, has units of energy and depends on the choice of energy units. The paragraph also discusses the determination of the Boltzmann constant experimentally and the historical context of its understanding, including contributions from Einstein.

⚖️ Thermal Equilibrium and the Concept of Temperature

This paragraph defines temperature in the context of a system in thermal equilibrium. It explains the concept of energy fluctuation within a system and how, on average, these fluctuations result in an equilibrium state. The paragraph introduces the idea that each state of the system has a certain energy and that there's a probabilistic distribution of the system's energy states. It concludes by stating that the average energy can be thought of as a parameter that influences the probability distribution.

🌟 The Maxwell-Boltzmann Distribution and Correlation

The sixth paragraph discusses the probability distribution of a system in thermal equilibrium, known as the Boltzmann distribution. It assumes a one-parameter family of probability distributions, each associated with a different average energy. The paragraph also addresses the concept of energy states being independent and the idea of degeneracy, where multiple states have the same energy, which is considered a rare occurrence.
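
The summary does not write out the distribution, so the sketch below assumes its standard form, P_i = exp(-E_i / T) / Z with T in energy units and Z the normalizing sum. The function names and the three sample energy levels are hypothetical.

```python
import math


def boltzmann_probs(energies, temperature):
    """Return P_i = exp(-E_i / T) / Z for each energy level.

    The lowest energy is subtracted before exponentiating; this leaves the
    probabilities unchanged but avoids overflow for large energies.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    e_min = min(energies)
    weights = [math.exp(-(e - e_min) / temperature) for e in energies]
    z = sum(weights)
    return [w / z for w in weights]


def average_energy(energies, probs):
    """Mean energy of the distribution."""
    return sum(e * p for e, p in zip(energies, probs))


LEVELS = [0.0, 1.0, 2.0]   # hypothetical energy levels, arbitrary energy units
for t in (0.5, 1.0, 2.0):
    probs = boltzmann_probs(LEVELS, t)
    print(t, [round(p, 4) for p in probs], round(average_energy(LEVELS, probs), 4))
```

Each temperature picks out one member of the family, and raising the temperature shifts weight toward higher levels and raises the average energy, so the average energy can equally serve as the single parameter labelling the distribution.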

🔢 Entropy as a Function of Energy

The seventh paragraph establishes entropy as a function of average energy for a given probability distribution. It explores the relationship between entropy and energy and introduces the concept that changing the average energy results in a shift in the probability distribution. The paragraph concludes with a discussion about the derivative of energy with respect to entropy, which is a measure of how much energy must change to alter the entropy by a specific amount.
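
The derivative of energy with respect to entropy can be estimated numerically. This is a sketch under the assumption that the one-parameter family is the standard Boltzmann family, P_i = exp(-E_i / T) / Z, with temperature in energy units and entropy dimensionless; the energy levels and helper names are hypothetical.

```python
import math


def boltzmann_probs(energies, temperature):
    """P_i = exp(-E_i / T) / Z, with the minimum energy subtracted for stability."""
    e_min = min(energies)
    weights = [math.exp(-(e - e_min) / temperature) for e in energies]
    z = sum(weights)
    return [w / z for w in weights]


def mean_energy(energies, probs):
    return sum(e * p for e, p in zip(energies, probs))


def entropy(probs):
    return -sum(p * math.log(p) for p in probs if p > 0)


def de_ds(energies, temperature, h=1e-4):
    """Central-difference estimate of dE/dS along the family of distributions."""
    lower = boltzmann_probs(energies, temperature - h)
    upper = boltzmann_probs(energies, temperature + h)
    d_e = mean_energy(energies, upper) - mean_energy(energies, lower)
    d_s = entropy(upper) - entropy(lower)
    return d_e / d_s


LEVELS = [0.0, 1.0, 2.0]   # hypothetical energy levels
ratio = de_ds(LEVELS, temperature=1.5)
print(ratio)   # close to the temperature that labels the distribution
```

Shifting the distribution slightly changes both the average energy and the entropy, and the ratio of the two changes recovers the parameter that labels the distribution, which is the content of dE = T dS.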

↔️ The Definition and Relation to Familiar Concepts of Temperature

The eighth paragraph focuses on the abstract definition of temperature as the rate of change of energy with respect to entropy. It discusses the implications of changing units and how the Boltzmann constant cancels out in the definitions, leading to a universal relationship between energy, temperature, and entropy. The paragraph also touches on the concept of temperature as a measure of differences between systems and the flow of energy from hotter to cooler systems.

⚛️ The Second Law of Thermodynamics and Energy Conservation

The ninth paragraph introduces the first and second laws of thermodynamics in the context of two systems exchanging energy. It explains that the total entropy of a system increases when it reaches thermal equilibrium and that the total energy is conserved during this process. The paragraph concludes with a set of equations that summarize the relationships between energy, temperature, and entropy in the systems.

Keywords

💡Boltzmann Constant

💡Temperature

💡Entropy

💡Thermal Equilibrium

💡Kelvin Scale

💡Second Law of Thermodynamics

💡Energy Conservation

💡Statistical Mechanics

💡Absolute Zero

💡Heat

💡Probability Distribution

Highlights

The Boltzmann constant is a conversion factor between human units and more fundamental units.

The natural units for temperature are related to energy, with temperature being an energy measure.

The Kelvin scale was defined before the understanding that temperature equals energy.

The Boltzmann constant has units of energy divided by degrees Kelvin and is a small number.

The concept of temperature is fundamentally linked to the kinetic energy of molecules in a gas.

In thermal equilibrium, all atoms in a gas have the same average kinetic energy, although the energy of any individual atom fluctuates around that average.

The energy of a single molecule in a dilute gas is directly related to the Boltzmann constant and temperature.

Entropy, a measure of information, grew out of Carnot's work on heat engines (the term itself was coined later by Clausius) and is fundamentally related to temperature and energy.

The Boltzmann distribution is a statistical concept that describes the probability distribution of energy states at a given temperature.

Entropy is additive for independent systems, which is derived from the logarithmic nature of probability.

The second law of thermodynamics states that the total entropy of an isolated system never decreases over time.

The first law of thermodynamics concerns the conservation of energy: the total energy of an isolated system stays constant.

The concept of temperature is defined as the rate of change of energy with respect to entropy.

When two systems are connected, energy flows from the system at a higher temperature to the one at a lower temperature.

Temperature is most easily understood through differences between systems: the difference in temperature determines the direction in which energy flows.

Boltzmann's constant was not known to Boltzmann himself in terms of its numerical value due to the lack of atomic property measurements.

The statistical mechanical concept of temperature is abstract and does not immediately relate to the everyday experience of temperature.

The relationship between temperature and energy is monotonic under normal physical conditions, meaning they increase together.
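
The highlight on additivity can be checked with a short calculation. In this sketch two independent systems are given hypothetical probability distributions, and the joint distribution is built as the product of the individual probabilities; the names are illustrative.

```python
import math
from itertools import product


def entropy(probs):
    """Dimensionless entropy S = -sum(p * ln(p)), skipping zero-probability states."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def joint_independent(p_a, p_b):
    """Joint distribution of two independent systems: P(a, b) = P(a) * P(b)."""
    return [pa * pb for pa, pb in product(p_a, p_b)]


p_a = [0.5, 0.3, 0.2]   # hypothetical distribution for system A
p_b = [0.9, 0.1]        # hypothetical distribution for system B

s_joint = entropy(joint_independent(p_a, p_b))
s_sum = entropy(p_a) + entropy(p_b)
print(s_joint, s_sum)
```

The two numbers agree because the logarithm of a product is the sum of the logarithms, which is why the logarithm appears in the definition of entropy in the first place.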
