Linear Independence

TLDR

This script explains the mathematical concepts of linear dependence and independence among vectors and other elements of vector spaces. It provides definitions, examples, and methods to test whether a set of vectors or polynomials is linearly independent or dependent, including solving systems of equations, row reduction of matrices, and calculating determinants. The goal is to express vector spaces in the simplest way using the fewest elements, avoiding redundant information. These abstract concepts unify mathematical objects like functions, vectors, and matrices, so the same tools can answer whether one object can be expressed in terms of others.

Takeaways

- 😀 In a linearly dependent set, at least one vector can be written as a linear combination of the others. In a linearly independent set, none can.

- 😕 To check for linear independence, set a linear combination of the vectors with unknown scalars equal to the zero vector. If the only solution is all scalars equal to 0, the vectors are independent.

- 🤓 Turning linear combinations into matrices allows us to use row reduction to check for linear independence easily.

- 😃 The number of nonzero rows in row echelon form must equal the number of vectors for linear independence.

- 🧐 A 'free variable' signals linear dependence - one vector can be written in terms of others.

- 🤔 Determinants let us check independence easily for square matrices. 0 = dependent, nonzero = independent.

- 👍 Polynomials can also be checked for linear independence by setting a linear combination of them equal to zero and requiring the coefficient of each power of x to be 0.

- 😊 Abstraction and unification of concepts like vectors, functions and matrices is common in advanced math.

- 🤖 After getting linear independence down, we can combine it with span to get another key concept.

- 😉 Comprehension check time! We covered a lot of ground on linear independence here.

Q & A

What does it mean for a set of vectors to be linearly dependent?

-A set of vectors is linearly dependent if at least one of the vectors can be written as a linear combination of the other vectors. Equivalently, if there are scalars, not all zero, that make the linear combination of the vectors equal to zero, the vectors are linearly dependent.

How can we check if a set of vectors is linearly independent?

-To check for linear independence, we can set up a system of equations by multiplying each vector by an unknown scalar and setting their sum equal to zero. If the only solution is for all the scalars to be zero, the vectors are linearly independent. If there is a solution in which at least one scalar is nonzero, the vectors are linearly dependent.
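As a small illustration of this test, consider two vectors chosen for this example (they are not taken from the video): v1 = (1, 2) and v2 = (2, 4). Setting the linear combination equal to the zero vector gives a system in the unknown scalars c1 and c2:

```latex
\begin{aligned}
c_1 \begin{pmatrix} 1 \\ 2 \end{pmatrix} + c_2 \begin{pmatrix} 2 \\ 4 \end{pmatrix} &= \begin{pmatrix} 0 \\ 0 \end{pmatrix} \\
c_1 + 2c_2 &= 0 \\
2c_1 + 4c_2 &= 0
\end{aligned}
```

Both equations reduce to c1 = -2c2, so c2 can be anything. Choosing c2 = 1 and c1 = -2 gives a solution that is not all zeros, so these two vectors are linearly dependent. For (1, 0) and (0, 1), the same setup forces c1 = 0 and c2 = 0, so that pair is linearly independent.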

What is a free variable in the context of linear independence?

-A free variable refers to a scalar variable that is not constrained to be zero in the set of linear equations we set up to check for linear independence. If one or more of the scalar variables can take on any value, including nonzero values, and still satisfy the equations, this means there is a free variable and the vectors are linearly dependent.

How can we use matrices to check for linear independence?

-We can set up a matrix using the vector coordinates. If the matrix is square, we can take the determinant. If the determinant is nonzero, the vectors are linearly independent. If the determinant equals zero, the vectors are linearly dependent.
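The determinant test can be sketched in a few lines of Python. This is a minimal illustration for three vectors in three dimensions only; the function names `det3` and `are_independent` and the sample vectors are assumptions made for this example, not something from the video.

```python
def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def are_independent(v1, v2, v3):
    """Return True if three 3-component vectors are linearly independent."""
    # Place the vectors as the columns of a square matrix.
    matrix = [list(row) for row in zip(v1, v2, v3)]
    # Exact comparison is fine for integers; floats would need a tolerance.
    return det3(matrix) != 0


# Hypothetical sample data: the third vector is the first plus twice the second.
independent_set = [(1, 0, 0), (0, 1, 0), (0, 0, 1)]
dependent_set = [(1, 0, 2), (0, 1, 1), (1, 2, 4)]

print(are_independent(*independent_set))
print(are_independent(*dependent_set))
```

The test only applies when the number of vectors equals the number of components, since otherwise the matrix is not square and has no determinant.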

What does it mean to express a vector space in the simplest, most efficient way?

-Expressing a vector space in the simplest, most efficient way refers to finding a linearly independent spanning set - the smallest possible set of vectors (or other elements) that can be used to write all other vectors in the space as linear combinations.

What is the significance of row echelon form in determining linear independence?

-Putting a matrix into row echelon form through row operations allows us to easily identify whether there are free variables. If the number of nonzero rows matches the number of original vectors, there are no free variables and the vectors are linearly independent. If there are fewer nonzero rows, there are free variables and the vectors are dependent.
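The row-counting check described above might look like the following sketch. It uses exact fractions from the standard library to avoid rounding issues; the helper names (`row_echelon`, `count_nonzero_rows`, `is_independent`) are hypothetical, and the vectors are placed as columns so that each column corresponds to one unknown scalar.

```python
from fractions import Fraction


def row_echelon(rows):
    """Return a row echelon form of a matrix, computed with exact fractions."""
    m = [[Fraction(x) for x in row] for row in rows]
    n_rows = len(m)
    n_cols = len(m[0]) if m else 0
    pivot_row = 0
    for col in range(n_cols):
        if pivot_row == n_rows:
            break
        # Look for a row at or below pivot_row with a nonzero entry in this column.
        candidates = [r for r in range(pivot_row, n_rows) if m[r][col] != 0]
        if not candidates:
            continue  # no pivot here: this column corresponds to a free variable
        r = candidates[0]
        m[pivot_row], m[r] = m[r], m[pivot_row]
        # Scale the pivot row so that the pivot entry is 1.
        pivot = m[pivot_row][col]
        m[pivot_row] = [x / pivot for x in m[pivot_row]]
        # Eliminate every entry below the pivot.
        for r in range(pivot_row + 1, n_rows):
            factor = m[r][col]
            m[r] = [x - factor * y for x, y in zip(m[r], m[pivot_row])]
        pivot_row += 1
    return m


def count_nonzero_rows(m):
    """Count rows that contain at least one nonzero entry."""
    return sum(1 for row in m if any(x != 0 for x in row))


def is_independent(vectors):
    """True if the number of nonzero echelon rows equals the number of vectors."""
    columns_as_rows = [list(row) for row in zip(*vectors)]  # vectors become columns
    return count_nonzero_rows(row_echelon(columns_as_rows)) == len(vectors)


print(is_independent([(1, 0, 0), (0, 1, 0), (0, 0, 1)]))
print(is_independent([(1, 0, 2), (0, 1, 1), (1, 2, 4)]))  # third = first + 2 * second
```

Unlike the determinant, this check also works when the matrix is not square, for example two vectors with three components each.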

Can we check linear independence for mathematical objects other than just vectors?

-Yes, the concept of linear independence applies to the elements of any vector space, not just to familiar column vectors. For example, we can check for the linear independence of polynomials or functions by using the same process: set a linear combination with unknown scalars equal to zero and check whether any solution other than all zeros exists.
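To make the polynomial case concrete, here is a worked example with three polynomials invented for illustration (they are not the ones used in the video): p1 = 1 + x, p2 = x + x^2, and p3 = 1 + 2x + x^2. Distributing the scalars and grouping like powers of x gives:

```latex
\begin{aligned}
c_1(1 + x) + c_2(x + x^2) + c_3(1 + 2x + x^2) &= 0 \\
(c_1 + c_3) + (c_1 + c_2 + 2c_3)\,x + (c_2 + c_3)\,x^2 &= 0
\end{aligned}
\qquad\Longrightarrow\qquad
\begin{aligned}
c_1 + c_3 &= 0 \\
c_1 + c_2 + 2c_3 &= 0 \\
c_2 + c_3 &= 0
\end{aligned}
```

The coefficient matrix of this system has determinant

```latex
\begin{vmatrix} 1 & 0 & 1 \\ 1 & 1 & 2 \\ 0 & 1 & 1 \end{vmatrix}
= 1(1 \cdot 1 - 2 \cdot 1) - 0 + 1(1 \cdot 1 - 1 \cdot 0) = -1 + 1 = 0
```

so these polynomials are linearly dependent. One solution that is not all zeros is c1 = -1, c2 = -1, c3 = 1, which reflects the fact that p3 = p1 + p2.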

What is the significance of linear independence with regards to spanning sets?

-A linearly independent spanning set provides an efficient way to represent all vectors in a space. Any linearly dependent vectors are unnecessary because they can be written in terms of other vectors. Identifying a linearly independent spanning set helps simplify and minimize the information needed.

Why is the concept of linear independence important?

-Linear independence is important because it allows us to express vector spaces in the simplest way without redundant or unnecessary elements. It also helps unify different mathematical objects like vectors, functions, and matrices so we can analyze them similarly. This abstraction and unification is key to higher level mathematics.

What is the next key concept related to linear independence?

-The next step is to combine linear independence with span. Together, these two ideas allow us to concisely characterize vector spaces and work with them effectively.

Outlines

📖 Defining Linear Dependence and Independence of Vectors

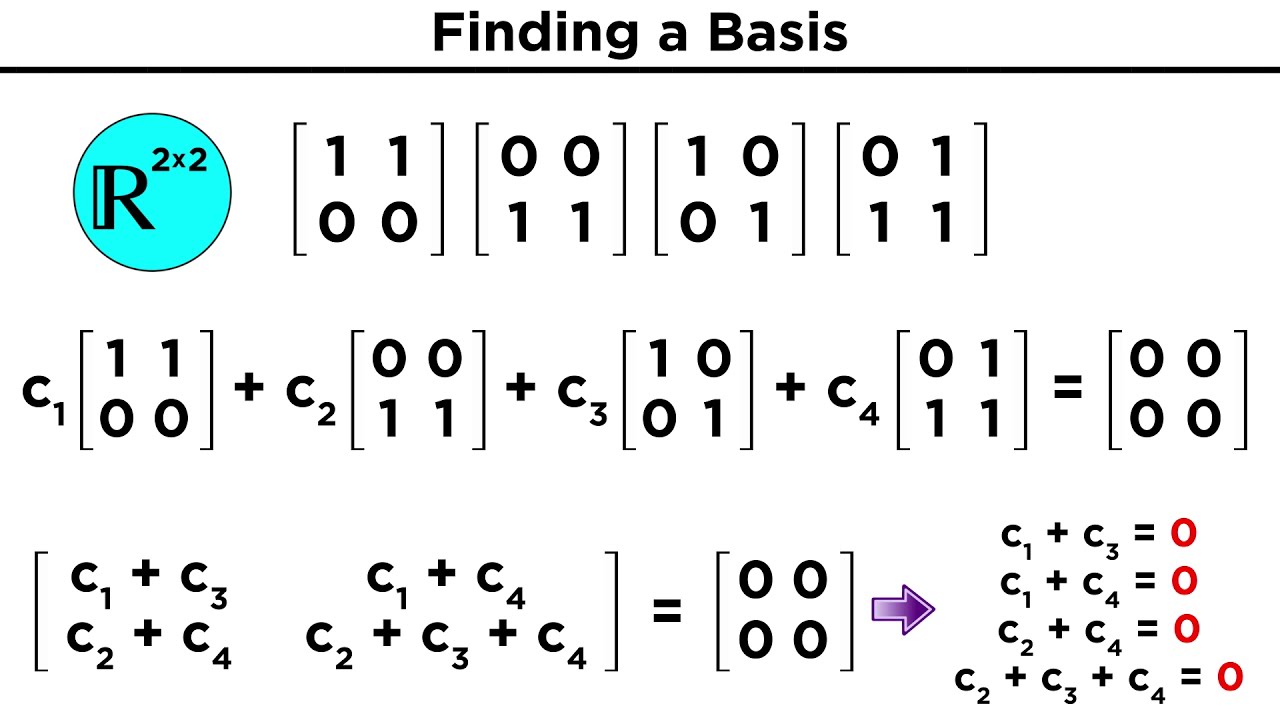

This paragraph provides formal mathematical definitions and explanations of linear dependence and independence among a set of vectors. It discusses how to test for linear dependence by setting up equations with vector coefficients, and solving to check if only the trivial all-zero solution exists (implying linear independence) or if free variables/non-zero solutions exist (implying linear dependence).

😎 Example Demonstrating Linear Dependence Testing

A step-by-step worked example is presented to demonstrate testing a set of 3 vectors for linear dependence. The process involves setting up a matrix equation, row-reducing it to echelon form, analyzing the resulting system of equations, and concluding linear dependence based on a free variable.

📚 Extending Linear Independence Concepts Beyond Vectors

This paragraph generalizes the linear independence concept beyond just vectors to other objects like polynomials. An example showing how to test a set of 3 polynomials for linear dependence is presented, concluding dependence based on the determinant of the coefficient matrix being zero.

Keywords

💡vector

💡linearly independent

💡linearly dependent

💡span

💡vector space

💡linear combination

💡polynomial

💡determinant

💡abstraction

💡row echelon form

Highlights

To express vector spaces in the simplest, most efficient way, we need the fewest number of elements that can be used to write any other elements within the vector space.

To see whether a set of vectors or elements is linearly independent, we simply check whether there is a set of scalars, not all zero, that makes the linear combination of the elements equal to zero. If such scalars exist, the set is linearly dependent; if not, it is linearly independent.

If after our row operations we were left with a surviving 1 in the third row, third column, when we wrote our set of equations, we would have had to include a third equation that sets c3 equal to zero. Then, substituting that into the second equation, c2 would have to be zero, and then plugging in zero for both c2 and c3 in the first equation, we would find that c1 is equal to zero as well.
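The back-substitution described here can be written out for a hypothetical echelon system. The coefficients 2, 1, and 3 below are invented for illustration and are not the numbers from the video:

```latex
\begin{aligned}
c_1 + 2c_2 + c_3 &= 0 \\
c_2 + 3c_3 &= 0 \\
c_3 &= 0
\end{aligned}
\qquad\Longrightarrow\qquad
c_3 = 0, \quad c_2 = -3c_3 = 0, \quad c_1 = -2c_2 - c_3 = 0
```

With a pivot in every column, each scalar is forced to zero in turn, which is exactly the condition for linear independence.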

If the determinant ends up being 0, the vectors are linearly dependent, and if the determinant is not zero, the vectors are linearly independent.

Vector spaces and related concepts certainly may seem abstract, and they are, but they are useful because they allow us to unify things like functions, vectors, and matrices, so that we can use the same set of tools to answer certain questions.

With linear independence understood, we can move on and combine this with the concept of span to form another key concept.

Let's start by considering three vectors: a, b, and c. Let's also say that c is equal to (a plus 2b). In this case, for any linear combination of a, b, and c, such as 2a + b - c, c can be replaced by (a plus 2b), making it a combination of only a and b.
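Carrying out that substitution explicitly:

```latex
2a + b - c = 2a + b - (a + 2b) = a - b
```

The same relation c = a + 2b can be rearranged as a + 2b - c = 0, a linear combination equal to zero with scalars 1, 2, and -1 that are not all zero, which is why a, b, and c are linearly dependent.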

On the other hand, if none of the vectors can be written in terms of the others, they are linearly independent.

If you have vectors v1, v2, all the way to vn, in a vector space, V, then to be linearly independent, the equation c1v1 + c2v2 all the way to cnvn = 0 can only be satisfied if all the scalars from c1 to cn equal zero.

Often we'll be dealing with much more complicated systems. However, we can still use the methods we've already learned by treating the scalars as our unknown variables.

Finally, given that vector spaces can be made of more than just vectors, we can also check for the linear independence of things other than vectors.

If this seems confusing, you can also just distribute the scalars, group up like terms, and factor out the x terms if you prefer.

While it did take a few extra steps, we once again have a system of equations to solve just like we have been doing.
