Statistical Mechanics Lecture 1

TLDR

The provided transcript is a detailed lecture on statistical mechanics, a fundamental theory in physics that deals with the behavior of large systems and the statistical distribution of their properties. The lecturer emphasizes that statistical mechanics is deeply rooted in the second law of thermodynamics and in probability theory, which allows predictions in systems where precise predictability is not feasible. The discussion covers the concept of entropy, which is central to understanding the degree of disorder in a system, and introduces the idea of information conservation in physics. The lecture also touches on the zeroth, first, and second laws of thermodynamics, highlighting the conservation of energy and the increase of entropy over time. The lecturer further explains how to calculate entropy for different probability distributions, including a system of coins, and introduces the bit as the unit of entropy in information theory. The transcript concludes with a teaser for the next topics: temperature and the Boltzmann distribution, which describes how energy is distributed among the states of a system in thermal equilibrium.

Takeaways

- 📚 Statistical mechanics is a fundamental theory in physics that applies probability theory to physical systems, particularly useful when initial conditions are not known with precision.

- 🔄 The second law of thermodynamics, a cornerstone of statistical mechanics, is a guiding principle that has withstood the test of time and is deeply rooted in the understanding of physical systems.

- 🚀 Statistical mechanics is crucial for understanding phenomena that are too small or involve too many particles to track individually, such as the behavior of gases, liquids, and solids.

- 🎯 Probability theory becomes a precise predictor due to the law of large numbers when dealing with a vast number of particles, allowing for statistical predictions in systems where deterministic predictions are impossible.

- ⚖️ Symmetry often dictates probabilities in physical systems, as it implies equal likelihood for outcomes related by symmetry, unless there is an underlying rule or law that provides more specific guidance.

- 🔴 Fluctuations in systems, though unpredictable in timing, can be described probabilistically, which is essential in statistical mechanics for understanding phenomena like energy distribution in a gas.

- 🎲 Entropy is introduced as the logarithm of the number of states with non-zero probability; under an information-conserving law of motion it is a fundamental quantity that stays constant as the system evolves.

- 🔵 The zeroth law of thermodynamics is mentioned but not detailed, hinting at the concept of thermal equilibrium and its transitive property among systems.

- 🔵 The first law of thermodynamics, which is the conservation of energy, is presented as a simple yet powerful principle that governs the behavior of closed systems and their interactions.

- 🔵 The concept of entropy is further elaborated upon, showing its dependence on the probability distribution of a system and how it can be calculated for various levels of knowledge or ignorance about the system's state.

- 🤔 The lecture touches on the idea that entropy is not just a property of a system but also depends on the observer's knowledge, making it a more subtle concept than other physical quantities such as energy or momentum.
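
The entropy calculations summarized in these takeaways can be illustrated with the standard formula S = -Σ p log p. This is a minimal sketch, not the lecturer's own code: it assumes base-2 logarithms so that entropy comes out in bits, and the function name `entropy_bits` is hypothetical.

```python
import math
from itertools import product

def entropy_bits(probs):
    """Shannon entropy S = sum p * log2(1/p), in bits; zero-probability states contribute nothing."""
    return sum(p * math.log2(1 / p) for p in probs if p > 0)

# Complete knowledge: one state has probability 1, so the entropy is zero.
print(entropy_bits([1.0, 0.0, 0.0, 0.0]))

# Complete ignorance about N coins: all 2**N configurations are equally likely.
n_coins = 3
states = list(product("HT", repeat=n_coins))
uniform = [1 / len(states)] * len(states)
print(entropy_bits(uniform))  # equals n_coins bits

# Partial knowledge: a coin that is probably heads carries less than 1 bit of entropy.
print(entropy_bits([0.9, 0.1]))
```

Complete knowledge gives zero entropy, total ignorance about N coins gives N bits, and partial knowledge falls in between.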

Q & A

What is the main focus of statistical mechanics?

-Statistical mechanics is primarily concerned with making statistical, probabilistic predictions about systems that are too small or contain too many elements to track individually. It applies probability theory to physical systems and is particularly useful when initial conditions are not known with complete precision or when the system is not isolated.

Why is the second law of thermodynamics considered a guiding principle in statistical mechanics?

-The second law of thermodynamics is seen as a guiding principle because it describes the increase in entropy or disorder in a system, which is a central concept in statistical mechanics. It helps in making sense of the behavior of large systems where exact predictability is not possible.

How does symmetry play a role in assigning probabilities in statistical mechanics?

-Symmetry is often used to assign equal probabilities to outcomes that are related by some form of symmetry. If a system has symmetrical outcomes, it is assumed that there is no reason for one outcome to occur more frequently than another, thus each outcome is given an equal probability.

What is the law of large numbers and how does it apply to statistical mechanics?

-The law of large numbers states that as the number of trials or observations increases, the observed frequency of each outcome converges on its theoretical probability. In statistical mechanics, this means that the more particles are involved (or the more often a system is observed), the more predictable its aggregate behavior becomes, even though individual outcomes remain uncertain.
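
A short simulation shows the law of large numbers at work. This is a sketch under stated assumptions: a fair coin simulated with Python's `random` module and a fixed seed; `heads_fraction` is a hypothetical helper name.

```python
import random

def heads_fraction(n_flips, rng):
    """Flip a fair coin n_flips times and return the fraction that came up heads."""
    heads = sum(1 for _ in range(n_flips) if rng.random() < 0.5)
    return heads / n_flips

rng = random.Random(0)  # fixed seed so the run is reproducible
for n in (10, 1_000, 100_000):
    frac = heads_fraction(n, rng)
    # The typical deviation from 0.5 shrinks roughly like 0.5 / sqrt(n).
    print(f"n={n:>7}: fraction of heads = {frac:.4f}, |deviation| = {abs(frac - 0.5):.4f}")
```

A handful of flips can stray far from one half, while a hundred thousand flips land very close to it.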

What is the concept of entropy in the context of statistical mechanics?

-Entropy, in statistical mechanics, is a measure of the number of possible microscopic configurations (states) of a system that are consistent with its macroscopic (observable) properties; more precisely, it is the logarithm of that number. It is a fundamental quantity that tends to increase over time in an isolated system, reflecting the increase in disorder.
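
Counting microstates can be made concrete with coins. This is a minimal sketch, assuming N coins whose macrostate is just the number of heads, with every individual configuration (microstate) counted equally; `microstates` is a hypothetical helper name, and the entropy is taken as log2 of the count so it is measured in bits.

```python
import math

def microstates(n_coins, n_heads):
    """Number of coin configurations (microstates) with exactly n_heads heads (the macrostate)."""
    return math.comb(n_coins, n_heads)

N = 100
for heads in (0, 25, 50):
    omega = microstates(N, heads)
    # Entropy of the macrostate in bits: S = log2(number of compatible microstates).
    print(heads, omega, round(math.log2(omega), 2))
```

The all-tails macrostate has a single microstate and zero entropy, while the half-heads macrostate has by far the most microstates and the largest entropy.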

How does the concept of a 'conservation law' apply to statistical mechanics?

-In statistical mechanics, a conservation law implies that certain quantities, like energy, remain constant throughout the evolution of a system. This has implications for the probabilities of different states, as the system's behavior is constrained by these conserved quantities.

What is the significance of the 'minus first law of physics' mentioned in the transcript?

-The 'minus first law of physics', as mentioned, refers to the principle of conservation of information. It states that physical processes are reversible and that information is never lost. This is a fundamental concept that underlies the deterministic nature of physical laws and has implications for the predictability of system states.
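
Reversibility can be illustrated with a discrete law of motion. This is a sketch under stated assumptions: a hypothetical four-state law (states `A` to `D`) and hypothetical helpers `invert` and `run`. Because the law is one-to-one, reversing every arrow gives a law that runs the system backward and recovers the initial state.

```python
def invert(law):
    """Reverse every arrow of a one-to-one law of motion."""
    inverse = {after: before for before, after in law.items()}
    if len(inverse) != len(law):
        raise ValueError("law is not one-to-one; the past cannot be recovered")
    return inverse

def run(law, state, steps):
    """Apply the law of motion repeatedly, one time step at a time."""
    for _ in range(steps):
        state = law[state]
    return state

law = {"A": "C", "B": "A", "C": "D", "D": "B"}  # hypothetical four-state law
start = "B"
later = run(law, start, 7)
print(later, run(invert(law), later, 7))  # running the reversed law recovers the start
```

A law that sends two states to the same successor cannot be inverted, which is exactly the kind of information loss the principle forbids.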

Why is the concept of phase space important in statistical mechanics?

-Phase space is a mathematical space that represents all possible states of a system. In statistical mechanics, it is used to describe the distribution of systems over all possible states. The evolution of a system in phase space is crucial for understanding its dynamics and for calculating entropy.
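
Liouville's theorem says that Hamiltonian evolution preserves phase-space volume, so an entropy defined as the log of that volume stays constant. This is a minimal sketch under stated assumptions: a harmonic oscillator with mass and spring constant set to 1 (its exact motion is a rotation of phase space), a small square blob of initial conditions, Boltzmann's constant set to 1, and a hypothetical non-Hamiltonian toy map `damped_flow` for contrast. All function names are illustrative.

```python
import math

def shoelace_area(points):
    """Area of a polygon given its vertices in order (shoelace formula)."""
    n = len(points)
    twice_area = sum(points[i][0] * points[(i + 1) % n][1] - points[(i + 1) % n][0] * points[i][1]
                     for i in range(n))
    return abs(twice_area) / 2

def oscillator_flow(x, p, t):
    """Exact motion of a harmonic oscillator with m = k = 1: a rigid rotation of phase space."""
    return (x * math.cos(t) + p * math.sin(t), -x * math.sin(t) + p * math.cos(t))

def damped_flow(x, p, t, gamma=0.5):
    """Hypothetical toy map that shrinks momentum; it is not Hamiltonian and loses information."""
    return (x, p * math.exp(-gamma * t))

# A square blob of phase space: x and p are each known only to within 0.1.
blob = [(1.0, 0.0), (1.1, 0.0), (1.1, 0.1), (1.0, 0.1)]
for name, flow in (("oscillator", oscillator_flow), ("damped", damped_flow)):
    moved = [flow(x, p, 2.0) for x, p in blob]
    # Entropy as the log of the occupied phase-space area, before and after the evolution.
    print(name, round(math.log(shoelace_area(blob)), 4), round(math.log(shoelace_area(moved)), 4))
```

Both maps are linear, so the transformed square is still a polygon and the shoelace area is exact: the oscillator keeps the log-area fixed, while the toy damping shrinks it.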

What is the relationship between entropy and the amount of information known about a system?

-Entropy is inversely related to the amount of information known about a system. The more information or precise knowledge we have about a system, the lower its entropy. Conversely, the less we know, the higher the entropy, reflecting greater uncertainty or disorder.

How does the concept of temperature relate to entropy in statistical mechanics?

-Temperature is a derived quantity that comes after entropy in terms of fundamental importance. In statistical mechanics it is defined through entropy: 1/T = dS/dE, the rate at which entropy changes as energy is added (with Boltzmann's constant set to 1). For a classical ideal gas this makes temperature proportional to the average kinetic energy per particle, which is where the familiar kinetic picture of temperature comes from.
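
In statistical mechanics temperature is defined from entropy by 1/T = dS/dE (with Boltzmann's constant set to 1). This is a minimal sketch, assuming a monatomic ideal gas at fixed volume whose entropy depends on energy as S(E) = (3N/2) ln E + const; differentiating gives T = 2E/(3N), so the mean kinetic energy per particle is (3/2)T. The helper names are hypothetical, and the derivative is taken numerically to mirror the definition.

```python
import math

def ideal_gas_entropy(energy, n_particles):
    """Energy-dependent part of a monatomic ideal gas's entropy at fixed volume, with k_B = 1:
    S(E) = (3N/2) ln E + const. The constant is dropped because it does not affect dS/dE."""
    return 1.5 * n_particles * math.log(energy)

def temperature(entropy_fn, energy, n_particles, rel_step=1e-4):
    """Temperature from the statistical definition 1/T = dS/dE, via a central difference."""
    h = rel_step * energy
    dS_dE = (entropy_fn(energy + h, n_particles) - entropy_fn(energy - h, n_particles)) / (2 * h)
    return 1 / dS_dE

N, E = 1000, 1500.0
T = temperature(ideal_gas_entropy, E, N)
print(T, 2 * E / (3 * N))  # numerical T versus the analytic result T = 2E/(3N)
print(E / N, 1.5 * T)      # mean kinetic energy per particle versus (3/2) T
```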

What is the role of the Boltzmann distribution in statistical mechanics?

-The Boltzmann distribution gives the probability that a system in thermal equilibrium at temperature T is in a particular state of energy E; this probability is proportional to exp(-E/kT), where k is Boltzmann's constant. It is a key concept for understanding how systems reach thermal equilibrium and is used to calculate the entropy of a system in equilibrium.
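
The Boltzmann distribution assigns each state of energy E a probability proportional to exp(-E/T), normalized by the partition function Z. This is a minimal sketch with Boltzmann's constant set to 1 and three hypothetical, evenly spaced energy levels. It also computes the entropy -Σ p ln p of the resulting distribution.

```python
import math

def boltzmann(energies, temperature):
    """Probabilities P_i = exp(-E_i / T) / Z at temperature T (k_B = 1)."""
    weights = [math.exp(-e / temperature) for e in energies]
    z = sum(weights)  # partition function
    return [w / z for w in weights]

def entropy(probs):
    """Entropy S = sum p * ln(1/p) (natural log, k_B = 1)."""
    return sum(p * math.log(1 / p) for p in probs if p > 0)

levels = [0.0, 1.0, 2.0]  # hypothetical energy levels
for T in (0.2, 1.0, 10.0):
    probs = boltzmann(levels, T)
    print(T, [round(p, 3) for p in probs], round(entropy(probs), 3))
```

At low temperature almost all the probability sits in the lowest level and the entropy is near zero. At high temperature the levels become nearly equally likely and the entropy approaches ln 3.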

Outlines

📚 Introduction to Statistical Mechanics

The paragraph introduces the topic of statistical mechanics, positioning it as a fundamental part of physics that bridges modern and premodern physics. It emphasizes the significance of the second law of thermodynamics and the probabilistic nature of statistical mechanics, which contrasts with the perfect predictability of classical physics. The speaker stresses that statistical mechanics is deep and broad in scope, and crucial for understanding the world, even the Higgs boson.

🎲 Probability and Predictability in Systems

This section applies probability theory to physical systems, particularly when initial conditions are not perfectly known or when the system is not closed. It discusses how statistical mechanics can make highly reliable predictions about macroscopic properties such as the temperature and pressure of a gas, even though the positions and velocities of individual molecules are unpredictable. The concept of fluctuations is introduced: their timing cannot be predicted in detail, but their likelihood can be described probabilistically.

🔄 The Law of Large Numbers and Statistical Predictability

The paragraph focuses on the law of large numbers and how it contributes to the predictability in statistical mechanics. It explains that with a large number of particles, probabilities become precise predictors. The text also touches on the role of symmetry in assigning probabilities and the use of experiments to measure probabilities when symmetry is not present or when deeper theories are unavailable.

🚀 The Evolution of Systems and Conservation Laws

This section discusses the evolution of systems over time and the concept of conservation laws. It uses the example of a six-sided die to illustrate how the probabilities of landing on each face can be determined by the system's law of motion, even without symmetry. It emphasizes that predicting probabilities requires knowing the system's underlying law, and introduces conservation laws to explain how certain quantities remain constant throughout the system's evolution.
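
The die example can be sketched as a deterministic law of motion on six faces, where the probability of a face is the fraction of time the die spends on it. The two laws below are illustrative assumptions in the spirit of the lecture, and `time_fractions`, `one_cycle` and `two_cycles` are hypothetical names. With a single cycle every face gets 1/6. With two separate cycles, which cycle the die is on never changes; that is a conserved quantity, and the probabilities depend on its value.

```python
from collections import Counter

def time_fractions(law, start, steps):
    """Follow a deterministic law of motion from `start`; return the fraction of time on each face."""
    counts = Counter()
    state = start
    for _ in range(steps):
        counts[state] += 1
        state = law[state]
    return {face: counts[face] / steps for face in sorted(law)}

# One cycle through all six faces: each face is visited 1/6 of the time, whatever the start.
one_cycle = {1: 2, 2: 3, 3: 4, 4: 5, 5: 6, 6: 1}
# Two separate cycles: the cycle the die starts on is conserved forever.
two_cycles = {1: 2, 2: 3, 3: 1, 4: 5, 5: 6, 6: 4}

print(time_fractions(one_cycle, start=1, steps=6000))
print(time_fractions(two_cycles, start=1, steps=6000))  # faces 4-6 never appear
```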

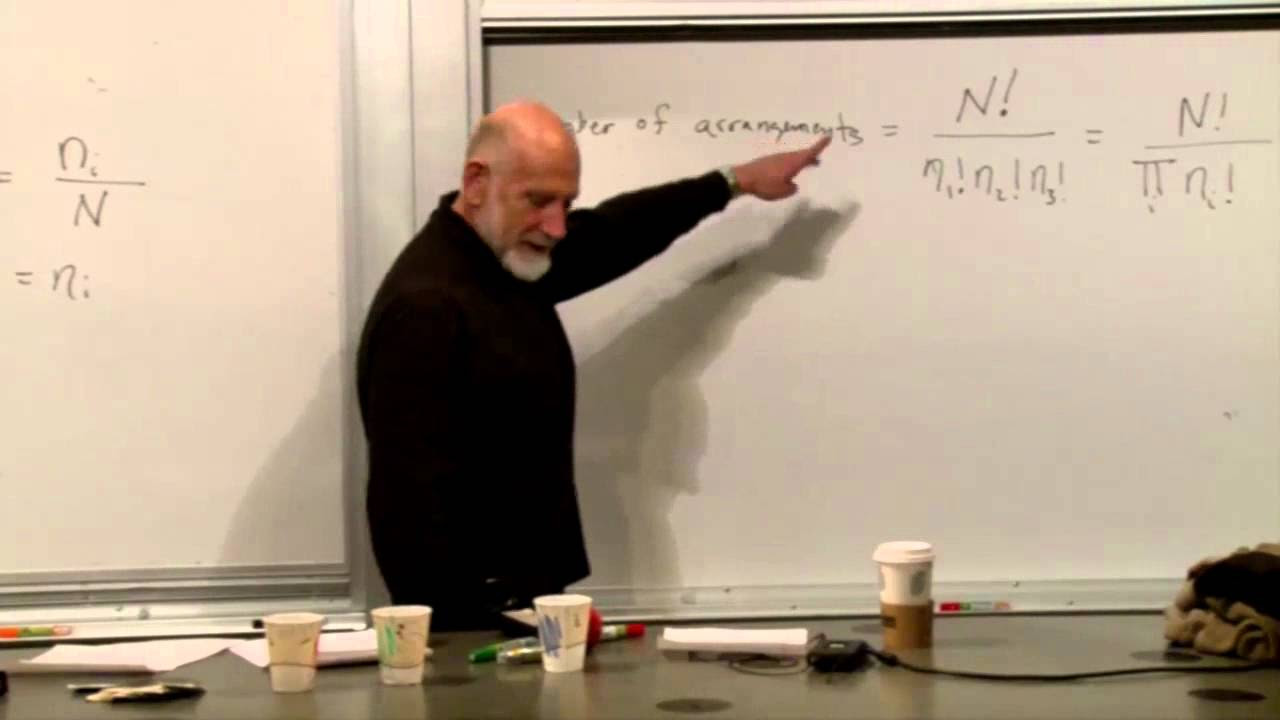

🔗 The Role of Information Conservation in Physics

The paragraph emphasizes the principle of information conservation as a fundamental law of physics. It contrasts 'good' laws, where every state has exactly one incoming and one outgoing transition, with 'bad' laws that merge states and therefore do not conserve information. Entropy is introduced as a measure of the number of states with non-zero probability, quantified as the logarithm of that number. Under a good law this entropy is conserved: it remains constant as the system evolves.
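
The contrast between good and bad laws can be checked directly. This is a minimal sketch under stated assumptions: six states, a hypothetical good law (a permutation, so each state has exactly one arrow in and one out), a hypothetical bad law that funnels many states into one, and entropy taken as the natural log of the number of states with non-zero probability (a uniform distribution over those states). The helper names are illustrative.

```python
import math

def is_good_law(law):
    """A 'good' (information-conserving) law is a permutation: one arrow out of and one arrow into every state."""
    return sorted(law.values()) == sorted(law.keys())

def evolve(occupied, law):
    """Push the set of states with non-zero probability forward one time step."""
    return {law[s] for s in occupied}

def entropy(occupied):
    """Entropy of a uniform distribution over the occupied states: S = ln M."""
    return math.log(len(occupied))

good = {1: 2, 2: 3, 3: 1, 4: 5, 5: 6, 6: 4}
bad = {1: 2, 2: 3, 3: 3, 4: 3, 5: 3, 6: 3}  # many arrows converge on state 3

occupied = {1, 2, 4}  # we only know the system is in one of these three states
for name, law in (("good", good), ("bad", bad)):
    s = set(occupied)
    history = [round(entropy(s), 3)]
    for _ in range(3):
        s = evolve(s, law)
        history.append(round(entropy(s), 3))
    print(name, is_good_law(law), history)
```

Under the good law the entropy stays at ln 3 at every step; under the bad law it drops to zero as distinct states merge and the record of where the system started is lost.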

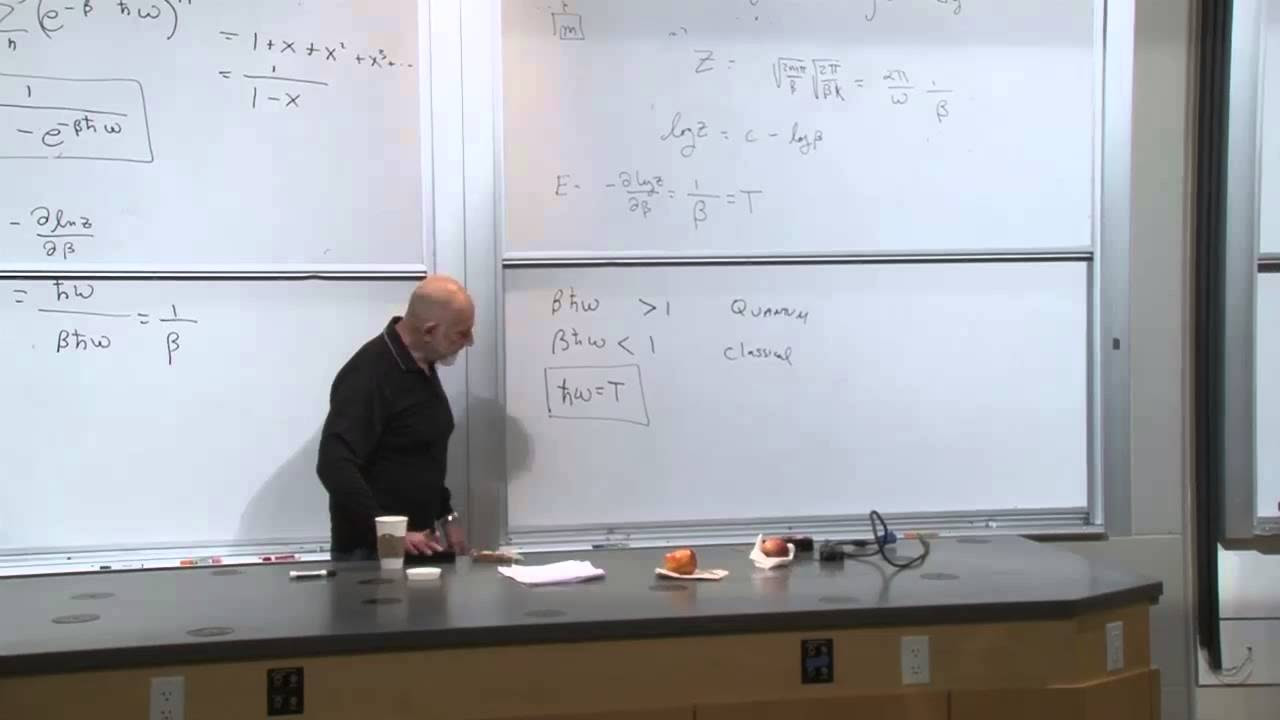

🌡️ Temperature, Entropy, and the Boltzmann Distribution

The final paragraph touches on the definitions of temperature and entropy in the context of phase space. It explains that entropy is the logarithm of the phase-space volume occupied by the probability distribution, and therefore depends on the observer's state of knowledge about the system. The text also mentions the Boltzmann distribution, which will be discussed in relation to thermal equilibrium, and hints at the connection between information theory and statistical mechanics.

Keywords

💡Statistical Mechanics

💡Second Law of Thermodynamics

💡Predictability

💡Probability Theory

💡Fluctuations

💡Conservation Laws

💡Entropy

💡Phase Space

💡Liouville's Theorem

💡Thermal Equilibrium

💡Energy Conservation

Highlights

Statistical mechanics is introduced as a fundamental theory in physics, with deep implications and broad applicability.

The second law of thermodynamics is highlighted as a guiding principle, likely to outlast many modern theories.

Statistical mechanics is positioned as a bridge between classical and quantum mechanics, with a significant role in understanding the Higgs boson.

The concept of predictability in physics is challenged by the inherent limitations of knowing initial conditions perfectly.

Probability theory is applied to physical systems to account for the limitations of perfect predictability in large systems.

The law of large numbers is discussed as a key principle allowing for precise probabilistic predictions in systems with many particles.

Fluctuations in physical systems are acknowledged as inherent and unpredictable events that follow probability distributions.

The use of symmetry to assign probabilities in systems with discrete outcomes, like coin flips or dice rolls, is explained.

The importance of statistical mechanics in making predictions about systems that are too complex to track individually is emphasized.

The role of statistical mechanics in the history of physics is discussed, noting that all great physicists were masters of it.

The beauty and utility of statistical mechanics as a subject in physics and mathematics are praised.

The concept of entropy is introduced as the logarithm of the number of states with non-zero probability, reflecting the level of ignorance about the system.

The principle of conservation of information is discussed, stating that physical laws must not allow for the loss of information over time.

Liouville's theorem is mentioned as a key theorem in statistical mechanics, ensuring the conservation of volume in phase space.

The first law of thermodynamics, energy conservation, is presented in the context of closed systems and interactions between system components.

The concept of thermal equilibrium is briefly introduced, promising a deeper exploration in subsequent discussions.

The zeroth law of thermodynamics is referenced, focusing on the transitivity of thermal equilibrium between systems.
