21. Eigenvalues and Eigenvectors

TLDR

This lecture introduces eigenvalues and eigenvectors, central concepts in linear algebra with broad applications. The speaker explains that an eigenvector of a matrix A is a nonzero vector x for which Ax is a scalar multiple of x; that scalar is the eigenvalue. The focus is on understanding these concepts rather than their applications. The lecture explores how to identify eigenvectors and eigenvalues, noting in particular that an eigenvalue can be zero, in which case the corresponding eigenvectors lie in the matrix's null space. Projection and permutation matrices serve as examples. The determinant is then used to find eigenvalues through the characteristic equation det(A - λI) = 0. The lecture also covers properties of eigenvalues, such as their sum equaling the trace of the matrix, and shows that some real matrices have complex eigenvalues. It discusses how adding a multiple of the identity matrix shifts the eigenvalues without changing the eigenvectors, cautions that the eigenvalues of A + B or AB cannot in general be inferred from the eigenvalues of A and B individually, and ends with a triangular matrix whose repeated eigenvalue has too few independent eigenvectors.

Takeaways

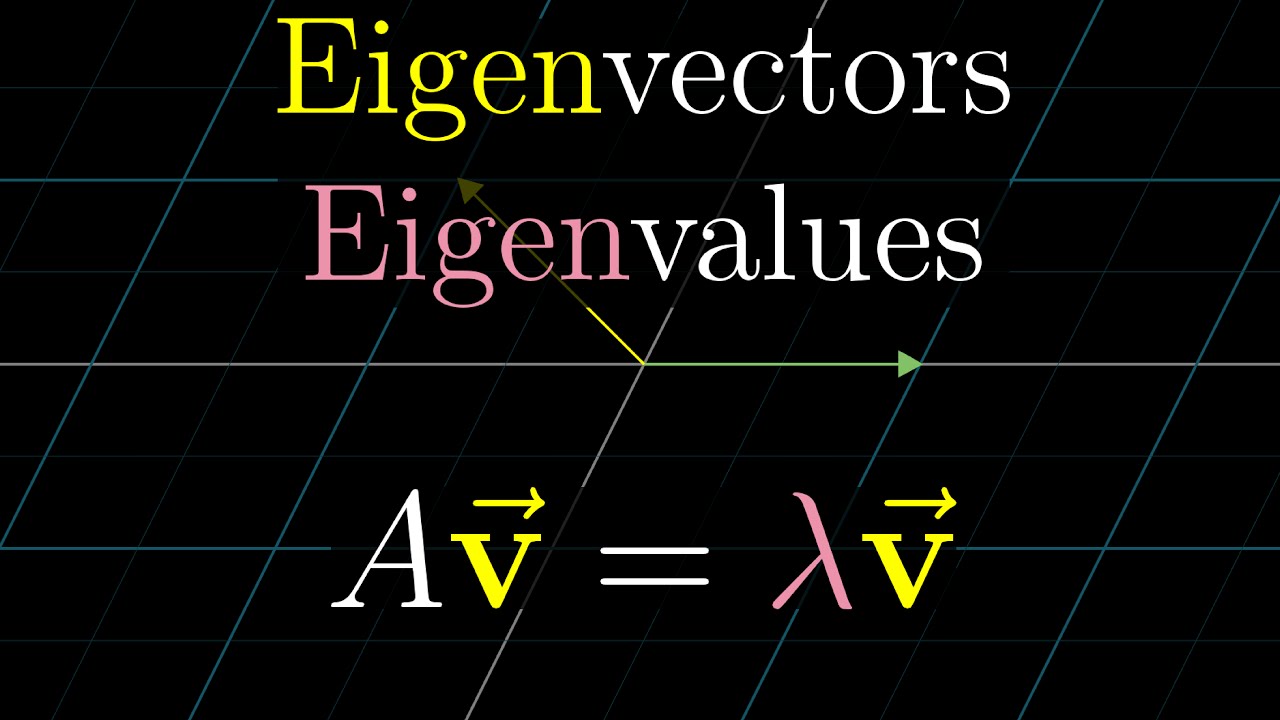

- 📐 **Eigenvalues and Eigenvectors**: The lecture introduces eigenvalues as special numbers and eigenvectors as special vectors associated with a matrix, where the matrix-vector multiplication results in a vector that is a scalar multiple of the original vector.

- 🔍 **Eigenvector Definition**: An eigenvector of a matrix A is a nonzero vector x for which Ax is a scalar multiple of x; that scalar is the eigenvalue. For a real eigenvalue, Ax stays on the line through x: it points the same way if λ > 0, the opposite way if λ < 0, and collapses to the zero vector if λ = 0.

- 🧮 **Eigenvalue Equation**: The key equation for eigenvalues is Ax = λx, where A is the matrix, x is the eigenvector, and λ is the eigenvalue.

- 📉 **Eigenvalue Zero**: When an eigenvalue is zero, the corresponding eigenvector lies in the null space of the matrix, meaning the matrix maps the vector to the zero vector.

- 🔍 **Finding Eigenvalues and Eigenvectors**: The eigenvalues are the solutions λ of the characteristic equation det(A - λI) = 0, where I is the identity matrix. For each eigenvalue, the eigenvectors are the nonzero vectors in the null space of A - λI.

- 🔢 **Sum of Eigenvalues**: For an n × n matrix, the sum of the n eigenvalues (counted with multiplicity) equals the trace of the matrix, the sum of the entries on the main diagonal.

- ✖️ **Eigenvalues of A + B**: The eigenvalues of the sum of two matrices A + B cannot be simply obtained by adding the eigenvalues of A and B together; they must be found by solving the eigenvalue problem for A + B.

- 🤔 **Eigenvectors of Special Matrices**: The lecture finds the eigenvalues and eigenvectors of projection and permutation matrices, illustrating that for certain types of matrices the eigenvectors can be seen directly, without calculation.

- ⚙️ **Effect of Matrix Operations**: Adding cI, a multiple of the identity matrix, to a matrix shifts every eigenvalue by c (each λ becomes λ + c) and leaves the eigenvectors unchanged.

- 🔄 **Rotation Matrices**: For rotation matrices, eigenvalues can be complex numbers, even if the matrix itself is real, and they come in complex conjugate pairs.

- 📊 **Triangular Matrix Eigenvalues**: The eigenvalues of a triangular matrix can be directly read from the diagonal of the matrix.

- ⚠️ **Repeated Eigenvalues**: A matrix with a repeated eigenvalue may not have a full set of n linearly independent eigenvectors; when that happens, the matrix cannot be diagonalized.

Q & A

What are eigenvalues and eigenvectors in the context of linear algebra?

-Eigenvalues and eigenvectors are special numbers and vectors associated with a square matrix. An eigenvector of A is a nonzero vector x for which Ax is a scalar multiple of x, so Ax lies on the same line as x (pointing the same way or the opposite way). The eigenvalue is that scalar multiple: Ax = λx.

What is the significance of the eigenvalue zero in the context of eigenvectors?

-An eigenvalue of zero means that the matrix times the corresponding eigenvector is the zero vector. The eigenvectors with eigenvalue zero are therefore exactly the nonzero vectors in the null space of the matrix, and zero is an eigenvalue precisely when the matrix is singular.

How can you determine if a vector is an eigenvector of a given matrix?

-Multiply the matrix by the vector and check whether the result is a scalar multiple of the original vector. That is, for a nonzero vector v, compute Av and test whether Av = λv for some scalar λ; if it does, v is an eigenvector and λ is its eigenvalue.
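A minimal sketch of that check in Python, assuming a small matrix stored as a list of rows with integer or rational entries so the comparison is exact. The function names and the example matrix are hypothetical, chosen only for illustration:

```python
from fractions import Fraction


def mat_vec(A, v):
    """Multiply a square matrix, given as a list of rows, by a vector."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def eigenvalue_of(A, v):
    """Return lambda if A v = lambda v, or None if v is not an eigenvector.

    Assumes integer or Fraction entries so the comparison is exact.
    """
    if all(x == 0 for x in v):
        raise ValueError("an eigenvector must be nonzero")
    Av = mat_vec(A, v)
    # Read a candidate lambda off the first nonzero component of v ...
    k = next(i for i, x in enumerate(v) if x != 0)
    lam = Fraction(Av[k]) / Fraction(v[k])
    # ... and accept it only if every component is scaled by that same lambda.
    if all(Fraction(y) == lam * Fraction(x) for y, x in zip(Av, v)):
        return lam
    return None


A = [[3, 1], [1, 3]]  # illustrative matrix, not taken from the summary
print(eigenvalue_of(A, [1, 1]))   # 4
print(eigenvalue_of(A, [1, -1]))  # 2
print(eigenvalue_of(A, [1, 0]))   # None: A(1, 0) = (3, 1) is not a multiple of (1, 0)
```

Reading λ from a single nonzero component and then verifying every component keeps the test to one pass over Av; with floating-point entries the equality check would need a tolerance instead.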

What is the relationship between the trace of a matrix and its eigenvalues?

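-The trace of a matrix, which is the sum of the elements on its main diagonal, is equal to the sum of its eigenvalues (counted with multiplicity). This follows from the characteristic polynomial det(A - λI): its roots are the eigenvalues, and comparing its coefficients with its factored form shows that the roots add up to the trace.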
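For a 2 × 2 matrix the relationship can be checked by expanding the determinant. The entries a, b, c, d are generic placeholders, not values from the lecture, and λ₁, λ₂ denote the two eigenvalues:

```latex
\begin{aligned}
\det(A - \lambda I)
  &= \det\begin{pmatrix} a - \lambda & b \\ c & d - \lambda \end{pmatrix}
   = \lambda^2 - (a + d)\lambda + (ad - bc) \\
  &= (\lambda - \lambda_1)(\lambda - \lambda_2)
   = \lambda^2 - (\lambda_1 + \lambda_2)\lambda + \lambda_1 \lambda_2
\end{aligned}
```

Matching the coefficients of λ gives λ₁ + λ₂ = a + d, the trace; matching the constant terms gives the companion fact λ₁λ₂ = ad - bc = det A.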

How do you find the eigenvalues of a matrix?

-To find the eigenvalues of a matrix, you solve the characteristic equation, which is given by det(A - λI) = 0, where A is the matrix, λ represents the eigenvalues, I is the identity matrix, and det denotes the determinant.
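For a 2 × 2 matrix the characteristic equation is a quadratic, so the eigenvalues follow from the quadratic formula. A minimal Python sketch, restricted to the 2 × 2 case by assumption; the function name is hypothetical:

```python
import cmath


def eigenvalues_2x2(A):
    """Solve det(A - lambda I) = 0 for a 2x2 matrix A = [[a, b], [c, d]].

    For 2x2 matrices the characteristic equation is
    lambda^2 - (a + d) lambda + (ad - bc) = 0, solved here with the quadratic formula.
    """
    (a, b), (c, d) = A
    trace = a + d
    det = a * d - b * c
    # cmath.sqrt also handles a negative discriminant, giving complex eigenvalues.
    root = cmath.sqrt(trace * trace - 4 * det)
    return (trace + root) / 2, (trace - root) / 2


print(eigenvalues_2x2([[3, 1], [1, 3]]))   # eigenvalues 4 and 2 (returned as complex numbers)
print(eigenvalues_2x2([[0, -1], [1, 0]]))  # eigenvalues i and -i
```

Using `cmath` rather than `math` means the same code covers real and complex eigenvalues. This is a teaching sketch; for larger matrices numerical libraries do not go through the characteristic polynomial.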

What is the impact of adding a multiple of the identity matrix to a matrix on its eigenvalues and eigenvectors?

-Adding cI, a multiple of the identity matrix, to A shifts every eigenvalue by c (each λ becomes λ + c), while the eigenvectors remain unchanged.
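The reason is a one-line computation, assuming Ax = λx for a nonzero vector x:

```latex
(A + cI)x = Ax + cx = \lambda x + cx = (\lambda + c)x
```

So x is still an eigenvector, now with eigenvalue λ + c. Running the argument backwards (if (A + cI)x = μx then Ax = (μ - c)x) shows that A + cI has no eigenvectors other than those of A.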

Why can't you simply add the eigenvalues of two matrices to find the eigenvalues of their sum?

-In general, the eigenvalues of A + B are not sums of eigenvalues of A and B, and the eigenvalues of AB are not products of them. The shortcut would work only if A and B shared an eigenvector x, with Ax = λx and Bx = μx, since then (A + B)x = (λ + μ)x; but different matrices usually have different eigenvectors.
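A small counterexample, sketched in Python with 2 × 2 matrices picked for illustration (the helper names are hypothetical): each of A and B below has only the eigenvalue 0, yet A + B and AB do not.

```python
import cmath


def eigenvalues_2x2(A):
    """Roots of lambda^2 - trace(A) lambda + det(A) = 0 for a 2x2 matrix."""
    (a, b), (c, d) = A
    trace, det = a + d, a * d - b * c
    root = cmath.sqrt(trace * trace - 4 * det)
    return (trace + root) / 2, (trace - root) / 2


def add(A, B):
    return [[A[i][j] + B[i][j] for j in range(2)] for i in range(2)]


def mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


A = [[0, 1], [0, 0]]  # triangular, eigenvalues 0 and 0
B = [[0, 0], [1, 0]]  # triangular, eigenvalues 0 and 0

print(eigenvalues_2x2(add(A, B)))  # A + B = [[0, 1], [1, 0]]: eigenvalues 1 and -1, not 0 + 0
print(eigenvalues_2x2(mul(A, B)))  # AB = [[1, 0], [0, 0]]: eigenvalues 1 and 0, yet every product of their eigenvalues is 0
```

Triangular matrices make the eigenvalues of A and B visible on the diagonal, which keeps the counterexample easy to verify by hand.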

What are the eigenvalues of a rotation matrix, and why might they be complex?

-A real matrix can have complex eigenvalues. A rotation by an angle other than 0 or 180 degrees turns every nonzero real vector off its own line, so no real vector x satisfies Qx = λx with a real λ. For the 90-degree rotation the characteristic equation is λ² + 1 = 0, whose roots are the complex pair i and -i.
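A quick numerical check using Python's built-in complex numbers, assuming the counterclockwise 90° rotation Q below (the lecture may write its rotation with the opposite sign convention, which swaps the two eigenvectors): the complex vectors (1, -i) and (1, i) are eigenvectors with eigenvalues i and -i.

```python
def mat_vec(A, v):
    """Multiply a matrix (list of rows) by a vector; works with complex entries."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


Q = [[0, -1], [1, 0]]  # rotates every vector in the plane by 90 degrees counterclockwise

# Candidate eigenvectors: complex conjugates of each other, like the eigenvalues.
for lam, x in [(1j, [1, -1j]), (-1j, [1, 1j])]:
    Qx = mat_vec(Q, x)
    print(lam, Qx, [lam * xi for xi in x])  # the last two lists agree
```

Both the eigenvalues and the eigenvectors come in conjugate pairs, as expected for a real matrix.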

What is the determinant of a triangular matrix, and how does it relate to the matrix's eigenvalues?

-The determinant of a triangular matrix is the product of the elements on its main diagonal. For a triangular matrix, the eigenvalues are precisely these diagonal elements.
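A short derivation for an upper triangular n × n matrix with diagonal entries a₁₁ through aₙₙ (the asterisks stand for arbitrary entries above the diagonal):

```latex
\det(A - \lambda I)
  = \det\begin{pmatrix}
      a_{11} - \lambda & * & \cdots & * \\
      0 & a_{22} - \lambda & \cdots & * \\
      \vdots & & \ddots & \vdots \\
      0 & 0 & \cdots & a_{nn} - \lambda
    \end{pmatrix}
  = (a_{11} - \lambda)(a_{22} - \lambda)\cdots(a_{nn} - \lambda)
```

Subtracting λI keeps the matrix triangular, so its determinant is still the product of the diagonal entries, and that product is zero exactly when λ equals one of the aᵢᵢ.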

What is the issue with a matrix having a repeated eigenvalue but not enough independent eigenvectors?

-A matrix with a repeated eigenvalue may have fewer than n linearly independent eigenvectors. Such a matrix (called degenerate in the lecture, and often called defective) cannot be diagonalized, because diagonalization requires n independent eigenvectors.
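An illustrative 2 × 2 case, with the matrix chosen here for demonstration: the upper triangular matrix below has the repeated eigenvalue 3 but only one independent eigenvector.

```latex
\begin{aligned}
A &= \begin{pmatrix} 3 & 1 \\ 0 & 3 \end{pmatrix},
  \qquad \det(A - \lambda I) = (3 - \lambda)^2 = 0 \;\Rightarrow\; \lambda_1 = \lambda_2 = 3, \\
(A - 3I)x &= \begin{pmatrix} 0 & 1 \\ 0 & 0 \end{pmatrix}
             \begin{pmatrix} x_1 \\ x_2 \end{pmatrix}
           = \begin{pmatrix} x_2 \\ 0 \end{pmatrix} = 0
  \;\Rightarrow\; x_2 = 0, \quad x = x_1 \begin{pmatrix} 1 \\ 0 \end{pmatrix}.
\end{aligned}
```

Every eigenvector is a multiple of (1, 0), so the null space of A - 3I is one-dimensional even though the eigenvalue appears twice.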

How do you find the eigenvectors associated with a given eigenvalue?

-To find the eigenvectors associated with a specific eigenvalue, you solve the equation (A - λI)v = 0, where A is the matrix, λ is the eigenvalue, I is the identity matrix, and v is the eigenvector you're solving for.
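For a 2 × 2 matrix the null space of A - λI can be read off directly: once λ is an eigenvalue, A - λI has rank at most 1, so any vector perpendicular to a nonzero row solves the system. A minimal Python sketch under that 2 × 2 assumption; the function names and example matrix are hypothetical:

```python
def eigenvector_2x2(A, lam):
    """Return one nonzero vector in the null space of A - lam*I for a 2x2 matrix.

    Assumes lam is an eigenvalue, so A - lam*I is singular (rank 0 or 1).
    """
    (a, b), (c, d) = A
    M = [[a - lam, b], [c, d - lam]]  # A - lam*I
    for p, q in M:
        if p != 0 or q != 0:
            # (-q, p) is perpendicular to the row (p, q); since M has rank 1,
            # the other row is a multiple of this one and is annihilated too.
            return [-q, p]
    return [1, 0]  # A - lam*I is the zero matrix: every nonzero vector works


def mat_vec(A, v):
    return [sum(a * x for a, x in zip(row, v)) for row in A]


A = [[3, 1], [1, 3]]  # illustrative matrix with eigenvalues 4 and 2
for lam in (4, 2):
    v = eigenvector_2x2(A, lam)
    print(lam, v, mat_vec(A, v) == [lam * x for x in v])
```

Both checks print True. Any nonzero multiple of the returned vector is an equally valid eigenvector.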

What is the significance of eigenvectors being perpendicular in certain matrices?

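-For a symmetric matrix, eigenvectors corresponding to distinct eigenvalues are perpendicular. Distinct eigenvalues alone already guarantee linearly independent eigenvectors, and therefore diagonalizability; perpendicularity additionally lets the eigenvectors be chosen orthonormal, which simplifies the analysis of the matrix. This property is particularly useful in many applications, including stability analysis and vibrations in physics.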
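A minimal Python check contrasting two matrices chosen for illustration: the symmetric one has perpendicular eigenvectors, while the non-symmetric one has distinct eigenvalues and independent, but not perpendicular, eigenvectors. The eigenpairs are worked out by hand and verified in the loop:

```python
def mat_vec(A, v):
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


sym = [[3, 1], [1, 3]]  # symmetric, eigenvalues 4 and 2
tri = [[2, 1], [0, 3]]  # not symmetric, eigenvalues 2 and 3

# (matrix, [(eigenvalue, eigenvector), (eigenvalue, eigenvector)]), worked out by hand
examples = {
    "symmetric": (sym, [(4, [1, 1]), (2, [1, -1])]),
    "non-symmetric": (tri, [(2, [1, 0]), (3, [1, 1])]),
}

for name, (M, pairs) in examples.items():
    for lam, v in pairs:
        assert mat_vec(M, v) == [lam * x for x in v]  # each really is an eigenpair
    (_, u), (_, w) = pairs
    print(name, "dot product of the two eigenvectors:", dot(u, w))
```

The symmetric case gives a dot product of 0; the non-symmetric case gives 1, so its eigenvectors are independent without being perpendicular.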

Outlines

😀 Introduction to Eigenvalues and Eigenvectors

The lecture begins by introducing the concepts of eigenvalues and eigenvectors, which are fundamental to the course. Eigenvalues are special numbers and eigenvectors are special vectors associated with a matrix A. An eigenvector is a nonzero vector x that, when multiplied by the matrix A, results in a vector (Ax) that is a scalar multiple (denoted by lambda) of the same vector x. The lecture emphasizes understanding what these objects are; their applications follow in subsequent lectures.

🔍 Eigenvectors and Their Calculation

The paragraph delves deeper into the calculation of eigenvectors and eigenvalues. It discusses the challenge of solving for these elements when they are both unknowns in the equation Ax = λx. The determinant is introduced as a tool to help find these unknowns. The paragraph also provides examples using specific matrices, such as a projection matrix, to illustrate the concepts of eigenvalues and eigenvectors in practical scenarios.

📚 Eigenvalues of Special Matrices

This section focuses on the eigenvalues of specific types of matrices, such as projection and permutation matrices. It explains that the eigenvalues of a projection matrix are one and zero: any nonzero vector lying in the plane of projection is an eigenvector with eigenvalue one, and any nonzero vector perpendicular to that plane is an eigenvector with eigenvalue zero. The paragraph also introduces the trace of a matrix and how it relates to the sum of the eigenvalues.
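The section describes projection onto a plane; a two-dimensional analogue keeps the arithmetic small. In the Python sketch below (matrices chosen for illustration), P projects onto the line through (1, 1) and S is the permutation matrix that swaps two components; exact fractions keep the results readable:

```python
from fractions import Fraction


def mat_vec(A, v):
    return [sum(a * x for a, x in zip(row, v)) for row in A]


half = Fraction(1, 2)
P = [[half, half], [half, half]]  # projects onto the line through (1, 1); P times P equals P
S = [[0, 1], [1, 0]]              # permutation matrix that swaps the two components

print(mat_vec(P, [1, 1]), mat_vec(P, [1, -1]))  # (1, 1) kept (lambda = 1); (1, -1) sent to 0 (lambda = 0)
print(mat_vec(S, [1, 1]), mat_vec(S, [1, -1]))  # (1, 1) kept (lambda = 1); (1, -1) reversed (lambda = -1)
```

The traces agree with the eigenvalue sums: trace(P) = 1 = 1 + 0 and trace(S) = 0 = 1 + (-1).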

🤔 The Challenge of Finding Eigenvalues and Eigenvectors

The paragraph discusses the process of finding eigenvalues and eigenvectors by rewriting the equation Ax = λx as (A - λI)x = 0. It highlights that A - λI must be singular for a nonzero solution x to exist. The determinant of A - λI is therefore the key to finding the eigenvalues: they are the values of λ for which this determinant is zero.
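The chain of reasoning can be written out as a sequence of equivalences:

```latex
\begin{aligned}
\exists\, x \neq 0 \text{ with } Ax = \lambda x
  &\iff \exists\, x \neq 0 \text{ with } (A - \lambda I)x = 0 \\
  &\iff A - \lambda I \text{ is singular} \\
  &\iff \det(A - \lambda I) = 0 .
\end{aligned}
```

The last condition involves only λ, which removes x from the problem; once λ is known, x is found in the null space of the matrix A - λI.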

🧮 Solving for Eigenvalues and Eigenvectors

The paragraph outlines the method for solving for eigenvalues and eigenvectors. It explains that once the eigenvalues are found, the eigenvectors can be determined by solving the equation (A - λI)x = 0. The process involves finding the null space of the matrix (A - λI) for each eigenvalue λ. The paragraph also touches on the properties of symmetric matrices and their real eigenvalues.

🔗 Relationship Between Matrix Operations and Eigenvalues

This part of the lecture explores how eigenvalues and eigenvectors are affected by operations on the matrix, such as adding a multiple of the identity matrix to the original matrix. It is shown that adding a multiple of the identity matrix shifts every eigenvalue by that multiple but does not change the eigenvectors. However, the paragraph cautions that no such simple rule holds for adding or multiplying arbitrary matrices.

🌀 Complex Eigenvalues and the Rotation Matrix

The paragraph introduces the concept of complex eigenvalues using the example of a rotation matrix. It explains that for a matrix that rotates vectors by ninety degrees, the eigenvalues are complex numbers, specifically, the complex conjugate pair i and -i. This example demonstrates that even real matrices can have complex eigenvalues under certain conditions.

🚫 Matrices with Repeated Eigenvalues and Incomplete Eigenvectors

The final paragraph addresses the issue of matrices with repeated eigenvalues that do not have a complete set of eigenvectors. Using a triangular matrix as an example, it is shown that while the eigenvalues can be easily identified, the matrix may lack independent eigenvectors corresponding to the repeated eigenvalues, leading to a situation where the matrix is degenerate.

📅 Conclusion and Upcoming Lecture

The lecture concludes with a mention of a forthcoming lecture that will provide a complete understanding of eigenvalues and eigenvectors for all types of matrices. The speaker wishes the audience a pleasant weekend before the next session.

Keywords

💡Eigenvalues

💡Eigenvectors

💡Matrix Multiplication

💡Null Space

💡Determinant

💡Characteristic Equation

💡Singular Matrix

💡Projection Matrix

💡Permutation Matrix

💡Orthogonal Matrices

💡Complex Eigenvalues

Highlights

Eigenvalues and eigenvectors are fundamental concepts in linear algebra, with eigenvalues being special numbers and eigenvectors being special vectors associated with a matrix.

An eigenvector is a non-zero vector that, when multiplied by a matrix, results in a vector that is a scalar multiple of the original vector.

The eigenvalue associated with an eigenvector is the factor by which the matrix stretches, shrinks, or reverses that eigenvector.

Eigenvectors can have eigenvalues of zero, which places them in the null space of the matrix.

The process of finding eigenvalues and eigenvectors involves solving the equation Ax = λx, where λ is the eigenvalue and x is the eigenvector.

A number λ is an eigenvalue of A exactly when the determinant of A minus λI (with I the identity matrix) is zero; this condition is the characteristic equation.

Eigenvalues can be real or complex numbers, and for a given matrix, there may be multiple eigenvalues or repeated eigenvalues.

The sum of the eigenvalues of a matrix equals the trace of the matrix, which is the sum of its diagonal elements.

For a symmetric matrix, eigenvectors corresponding to distinct eigenvalues are orthogonal to each other; for a general matrix they are only guaranteed to be linearly independent.

Adding a multiple of the identity matrix to a matrix results in adding that multiple to all eigenvalues, without changing the eigenvectors.

The eigenvalues and eigenvectors of a matrix can provide important insights into the matrix's properties and its effects on transformations.

Eigenvalues of a projection matrix are one and zero, with a whole plane of eigenvectors corresponding to the eigenvalue one.

The permutation matrix that exchanges two components has eigenvalues one and negative one: the vector (1, 1) is unchanged by the exchange, and the vector (1, -1) is reversed.

Eigenvalues of a matrix can be complex, as demonstrated by the example of a rotation matrix with eigenvalues i and -i.

Complex eigenvalues can occur in a real matrix only when the matrix is not symmetric, since real symmetric matrices always have real eigenvalues; the 90-degree rotation matrix is in fact antisymmetric.

A matrix may have repeated eigenvalues but a shortage of independent eigenvectors, leading to a degenerate or non-diagonalizable matrix.

Eigenvectors and eigenvalues are powerful tools for understanding and analyzing linear transformations, including rotations and projections.
