The Sample Variance and its Chi Squared Distribution

TLDR: The video script examines the distribution of the S squared estimator, the unbiased sample variance. For a sample of size n drawn from a normally distributed population, the scaled quantity (n-1)S^2/σ^2 follows a chi-squared distribution with (n-1) degrees of freedom; S squared itself is a scaled chi-squared variable. The script gives a detailed proof, starting from the definition of S squared. It uses the fact that the sum of the squared standardized deviations ((xj - μ)/σ)^2 is chi-squared with n degrees of freedom, then applies algebraic manipulations to split that sum into (n-1)S^2/σ^2 plus a term that is chi-squared with one degree of freedom. This result is crucial for understanding t-distributions and t-tests in statistical analysis.

Takeaways

- 📚 The topic of the video is the distribution of S squared, which is an estimator for the population variance.

- 🧠 S squared is calculated as (1/(n-1)) * Σ(xj - x̄)², where xj represents individual data points, x̄ is the sample mean, and n is the sample size.

- 🎯 The expected value of S squared is equal to the population variance, Sigma squared (σ²).

- 🔍 The video aims to determine the distribution of the S squared statistic, which is the unbiased sample variance.

- 🌟 When the population is normal, the scaled quantity (n-1)S²/σ² follows a chi-squared distribution with (n-1) degrees of freedom; S squared itself is that chi-squared variable multiplied by σ²/(n-1).

- 📈 The proof starts from the sum of ((xj - μ)/σ)², assuming that the Xj are independent and normally distributed with mean μ and variance σ².

- 🔢 By squaring the standardized random variables and using algebraic manipulations, the distribution of the sum can be shown to be chi-squared with n degrees of freedom.

- 🧩 The middle term in the expansion vanishes because it is a constant multiple of Σ(xj - x̄), which equals zero, leaving the desired (n-1)S²/σ² term and one additional term.

- 📊 The additional term, n(x̄ - μ)²/σ², is chi-squared with one degree of freedom. Because x̄ and S² are independent for normal samples, its one degree of freedom can be subtracted from the n degrees of freedom of the full sum.

- 🎓 The final result is that (n-1)S²/σ² follows a chi-squared distribution with (n-1) degrees of freedom, which is crucial for understanding t-distributions and t-tests.

- 🤝 This relationship between S squared and the chi-squared distribution is important for statistical inference, particularly in the context of hypothesis testing and confidence intervals.
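
The decomposition described in the takeaways can be written out as a short derivation. This is a sketch reconstructed from the summary rather than a transcription of the video's board work, and the notation (capital X_j for the random variables, \bar X for the sample mean) is an assumption.

```latex
\begin{align*}
\sum_{j=1}^{n}\left(\frac{X_j-\mu}{\sigma}\right)^2
 &= \frac{1}{\sigma^2}\sum_{j=1}^{n}\bigl[(X_j-\bar X)+(\bar X-\mu)\bigr]^2 \\
 &= \frac{1}{\sigma^2}\sum_{j=1}^{n}(X_j-\bar X)^2
    + \frac{2(\bar X-\mu)}{\sigma^2}\underbrace{\sum_{j=1}^{n}(X_j-\bar X)}_{=\,0}
    + \frac{n(\bar X-\mu)^2}{\sigma^2} \\
 &= \frac{(n-1)S^2}{\sigma^2}
    + \left(\frac{\bar X-\mu}{\sigma/\sqrt{n}}\right)^2 .
\end{align*}
```

The left-hand side is chi-squared with n degrees of freedom and the last term is chi-squared with one degree of freedom. Concluding that (n-1)S^2/σ^2 is chi-squared with n-1 degrees of freedom also uses the independence of \bar X and S^2 for normal samples, a standard fact that the summary does not spell out.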

Q & A

What is the definition of s-squared in the context of the video?

-In the context of the video, s-squared is defined as the unbiased sample variance, calculated as 1 over n minus one times the sum from j=1 to n of (x_j - x_bar) squared.
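
As a small illustration of this definition, the sketch below computes s-squared by hand and compares it with Python's `statistics.variance`, which also divides by n minus one. The function name `sample_variance` and the data values are made up for the example.

```python
import statistics

def sample_variance(xs):
    """Unbiased sample variance: sum((x - x_bar)^2) / (n - 1)."""
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two observations")
    x_bar = sum(xs) / n
    return sum((x - x_bar) ** 2 for x in xs) / (n - 1)

# Made-up illustrative sample (not from the video).
data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]

s2 = sample_variance(data)
print(s2)                         # hand-rolled formula
print(statistics.variance(data))  # standard-library equivalent (n - 1 divisor)
```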

What is the expected value of s-squared?

-The expected value of s-squared is equal to the population variance, Sigma squared.

What is the distribution of the s-squared statistic?

-S-squared itself is a scaled Chi-Squared variable: when the population is normal, n minus 1 times s-squared divided by the population variance, Sigma squared, is Chi-Squared with n minus 1 degrees of freedom.

Why is s-squared considered an unbiased estimator for the population variance?

-S-squared is considered an unbiased estimator for the population variance because its expected value is equal to the true population variance, Sigma squared.
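
Unbiasedness can be checked informally by simulation. The sketch below draws many small normal samples and averages both the n minus one version and the divide-by-n version of the variance; the parameter values, seed, and trial count are arbitrary assumptions chosen for illustration.

```python
import random

random.seed(0)  # fixed seed so the run is repeatable
mu, sigma, n, trials = 10.0, 3.0, 5, 20000  # arbitrary illustrative values

unbiased, biased = [], []
for _ in range(trials):
    xs = [random.gauss(mu, sigma) for _ in range(n)]
    x_bar = sum(xs) / n
    ss = sum((x - x_bar) ** 2 for x in xs)  # sum of squared deviations
    unbiased.append(ss / (n - 1))           # S^2, divides by n - 1
    biased.append(ss / n)                   # divides by n instead

mean_unbiased = sum(unbiased) / trials
mean_biased = sum(biased) / trials

print("true variance:        ", sigma ** 2)
print("average of S^2:       ", mean_unbiased)
print("average with n divisor:", mean_biased)
```

With these settings the average of S^2 should land near sigma squared (9), while the divide-by-n version should land near (n-1)/n times sigma squared (7.2).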

How does the video demonstrate that (n-1)s^2/Sigma^2 has a Chi-Squared distribution?

-The video demonstrates this by first assuming that the population has a normal distribution, so the X_j values are normally distributed with mean mu and variance Sigma squared. It then shows that the sum of ((x_j - mu)/Sigma)^2 has a Chi-Squared distribution with n degrees of freedom. Through algebraic manipulation, this sum is split into (n-1)s^2/Sigma^2 plus a term that is Chi-Squared with one degree of freedom, which shows that (n-1)s^2/Sigma^2 is also Chi-Squared, but with n-1 degrees of freedom.
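
A simulation cannot replace the proof, but it gives a quick sanity check. A Chi-Squared variable with k degrees of freedom has mean k and variance 2k, so the simulated values of (n-1)s^2/Sigma^2 should have mean close to n-1 and variance close to 2(n-1). The helper name `scaled_variance` and all parameter values below are assumptions made for this sketch.

```python
import random

random.seed(1)  # fixed seed so the run is repeatable
mu, sigma, n, trials = 0.0, 2.0, 6, 20000  # arbitrary illustrative values

def scaled_variance(xs, sigma):
    """Return (n-1) * S^2 / sigma^2 for the sample xs."""
    x_bar = sum(xs) / len(xs)
    # sum of squared deviations equals (n-1) * S^2
    return sum((x - x_bar) ** 2 for x in xs) / sigma ** 2

q = [
    scaled_variance([random.gauss(mu, sigma) for _ in range(n)], sigma)
    for _ in range(trials)
]

q_mean = sum(q) / trials
q_var = sum((v - q_mean) ** 2 for v in q) / (trials - 1)

print("simulated mean:", q_mean, " expected:", n - 1)
print("simulated var: ", q_var, " expected:", 2 * (n - 1))
```

Matching the first two moments is only a partial check; comparing the full histogram against the Chi-Squared density would be a stronger one.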

What is the significance of the Chi-Squared distribution in the context of s-squared?

-The Chi-Squared distribution is significant because it provides the theoretical distribution of the s-squared statistic. This knowledge is crucial for hypothesis testing and constructing confidence intervals for the population variance.
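
One way this is used in practice is a confidence interval for the population variance: since (n-1)s^2/Sigma^2 is Chi-Squared with n-1 degrees of freedom, the interval runs from (n-1)s^2 divided by the upper quantile to (n-1)s^2 divided by the lower quantile. The sketch below is not from the video. To stay within the standard library it estimates the Chi-Squared quantiles by simulation, whereas a statistics library's quantile function would normally be used; the function names and data are hypothetical.

```python
import random

random.seed(2)  # fixed seed so the run is repeatable

def chi2_quantiles_mc(df, probs, draws=50000):
    """Approximate chi-squared quantiles by simulating sums of df squared N(0,1) draws."""
    sims = sorted(
        sum(random.gauss(0.0, 1.0) ** 2 for _ in range(df)) for _ in range(draws)
    )
    return [sims[min(draws - 1, int(p * draws))] for p in probs]

def variance_ci(xs, level=0.95):
    """Approximate confidence interval for sigma^2, assuming normally distributed data."""
    n = len(xs)
    x_bar = sum(xs) / n
    ss = sum((x - x_bar) ** 2 for x in xs)  # equals (n-1) * S^2
    alpha = 1.0 - level
    lo_q, hi_q = chi2_quantiles_mc(n - 1, [alpha / 2, 1 - alpha / 2])
    return ss / hi_q, ss / lo_q

# Made-up illustrative sample (not from the video).
data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
lower, upper = variance_ci(data)
print("approximate 95% interval for the variance:", lower, upper)
```

The interval is wide and asymmetric around s^2 for a sample this small, which reflects the skew of the Chi-Squared distribution at low degrees of freedom.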

How does the video relate the distribution of s-squared to the t-distribution and t-tests?

-The video mentions that the Chi-Squared distribution of (n-1)s^2/Sigma^2 will play an important role in defining t-distributions and t-tests, which use the sample variance in place of the unknown population variance.

What is the assumption made about the X_j values in the video?

-The video assumes that the X_j values, or the sample data points, are normally distributed with a mean of mu and a variance of Sigma squared.

What is the role of the normal distribution assumption in the proof?

-The assumption that the X_j values are normally distributed is crucial because it allows the application of properties of the normal distribution to the proof. Specifically, each (x_j - mu)/Sigma becomes an independent standard normal random variable, and the sum of their squares is Chi-Squared with n degrees of freedom.

What happens to the middle term in the algebraic manipulation during the proof?

-During the algebraic manipulation, the middle term, which is 2 times the sum from j=1 to n of (x_j - X_bar) times (X_bar - mu) over Sigma squared, cancels out. The factor (X_bar - mu) is constant in j, and the sum of (x_j - X_bar) equals n times X_bar minus n times X_bar, which is zero.
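
The cancellation is a purely algebraic fact, so it can be checked on any data set. The sketch below evaluates the three pieces of the expansion of the sum of (x_j - mu)^2 for one randomly generated sample; the variable names and parameter values are assumptions for illustration, and the common factor 1/Sigma^2 is left out because it does not affect the identity.

```python
import random

random.seed(3)  # fixed seed so the run is repeatable
mu, sigma, n = 1.5, 2.0, 10  # arbitrary illustrative values
xs = [random.gauss(mu, sigma) for _ in range(n)]
x_bar = sum(xs) / n

lhs = sum((x - mu) ** 2 for x in xs)                      # full sum of squares about mu
first = sum((x - x_bar) ** 2 for x in xs)                 # equals (n-1) * S^2
middle = 2 * (x_bar - mu) * sum(x - x_bar for x in xs)    # cross term
last = n * (x_bar - mu) ** 2                              # term involving the sample mean

print("middle term is zero (up to rounding):", abs(middle) < 1e-9)
print("lhs equals first + last:", abs(lhs - (first + last)) < 1e-9)
```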

How does the last term in the proof contribute to the final result?

-The last term in the proof is n times (X_bar - mu) squared over Sigma squared, which is the square of (X_bar - mu) over Sigma times the square root of n, a standard normal random variable. This term is therefore Chi-Squared with one degree of freedom. Because X_bar and s^2 are independent for normal samples, that one degree of freedom can be subtracted from the n degrees of freedom of the full sum, so (n-1)s^2/Sigma^2 is Chi-Squared with n-1 degrees of freedom.
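
The behaviour of the last term can also be checked by simulation. The sketch below standardizes the sample mean, squares it, and compares its mean and variance with the values 1 and 2 expected for a Chi-Squared variable with one degree of freedom. It also reports the sample correlation between X_bar and s^2, which should be near zero for normal data; a near-zero correlation is consistent with independence but does not prove it. All names and parameter values are assumptions for this example.

```python
import math
import random

random.seed(4)  # fixed seed so the run is repeatable
mu, sigma, n, trials = 3.0, 1.5, 8, 20000  # arbitrary illustrative values

z_sq, means, variances = [], [], []
for _ in range(trials):
    xs = [random.gauss(mu, sigma) for _ in range(n)]
    x_bar = sum(xs) / n
    s2 = sum((x - x_bar) ** 2 for x in xs) / (n - 1)
    z = math.sqrt(n) * (x_bar - mu) / sigma  # standardized sample mean
    z_sq.append(z * z)
    means.append(x_bar)
    variances.append(s2)

def mean(v):
    return sum(v) / len(v)

def var(v):
    m = mean(v)
    return sum((a - m) ** 2 for a in v) / (len(v) - 1)

def corr(a, b):
    ma, mb = mean(a), mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b)) / (len(a) - 1)
    return cov / math.sqrt(var(a) * var(b))

z_sq_mean, z_sq_var = mean(z_sq), var(z_sq)
r = corr(means, variances)

print("mean of squared term:", z_sq_mean, " expected: 1")
print("var of squared term: ", z_sq_var, " expected: 2")
print("corr(X_bar, s^2):    ", r, " expected: near 0")
```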

What is the practical implication of understanding the distribution of s-squared?

-Understanding the distribution of s-squared is essential for statistical inference. It allows researchers to make inferences about the population variance based on the sample data, which is crucial in many fields of study, including science, economics, and social sciences.

Outlines

📚 Introduction to the Distribution of S^2

This paragraph introduces the topic of the distribution of S^2, the estimator for the population variance. It begins by recalling the formula for S^2 and its expected value, which is equal to the population variance (Sigma squared). The focus then shifts to understanding the distribution of the S^2 statistic, highlighting its role as an unbiased sample variance. The main question posed is what the distribution of S^2 is, setting the stage for a detailed exploration of the statistical properties of this estimator.

🧠 Deriving the Chi-Squared Distribution of (n-1)S^2/Sigma^2

This paragraph covers the mathematical proof that the modified quantity (n-1)S^2/Sigma^2 has a Chi-Squared distribution with (n-1) degrees of freedom. It starts from the sum of squares of independent standard normal random variables, which is Chi-Squared with n degrees of freedom. The proof then adds and subtracts the sample mean inside each squared term and expands the square. The paragraph establishes that (n-1)S^2/Sigma^2 follows a Chi-Squared distribution, which is crucial for t-distributions and t-tests, where the sample variance stands in for the unknown population variance.

Keywords

💡S squared

💡Population variance

💡Chi-squared distribution

💡Unbiased estimator

💡Degrees of freedom

💡Standard deviation

💡Normal distribution

💡Summation

💡Hypothesis testing

💡T distribution

💡Variance

Highlights

The discussion focuses on the distribution of S squared, which is an estimator for the population variance.

S squared is calculated as 1 over n minus one times the sum of (xj - X bar) squared from j=1 to n.

The expected value of S squared is equal to Sigma squared, the population variance.

S squared is an unbiased sample variance, meaning it does not systematically overestimate or underestimate the population variance.

The distribution of S squared is related to the chi-squared distribution with n-1 degrees of freedom.

The proof involves considering the modified quantity n-1 times S squared over Sigma squared.

The Xj values are assumed to be normally distributed with mean mu and variance Sigma squared.

Each (xj - mu)/Sigma, for j=1 to n, is normally distributed with mean 0 and variance 1.

The sum of squares of n independent normal 0 1 random variables is chi-squared with n degrees of freedom.

By algebraic manipulation, the chi-squared distribution with n degrees of freedom can be related to the S squared statistic.

The middle term in the expansion cancels out because it is a sum of xj - X bar, which equals zero.

The final term in the expansion is n times (X bar - mu) squared over Sigma squared, which is a normal 0 1 random variable squared.

The term is chi-squared with one degree of freedom, as it represents the square of a standard normal random variable.

The relationship between chi-squared distributions and degrees of freedom is crucial for understanding t-distributions and t-tests.

The distribution of n-1 S squared over Sigma squared as chi-squared with n-1 degrees of freedom is a fundamental concept in statistics.

The video provides a concise and clear explanation of the distribution of S squared and its relation to the chi-squared distribution.

Transcripts

Browse More Related Video

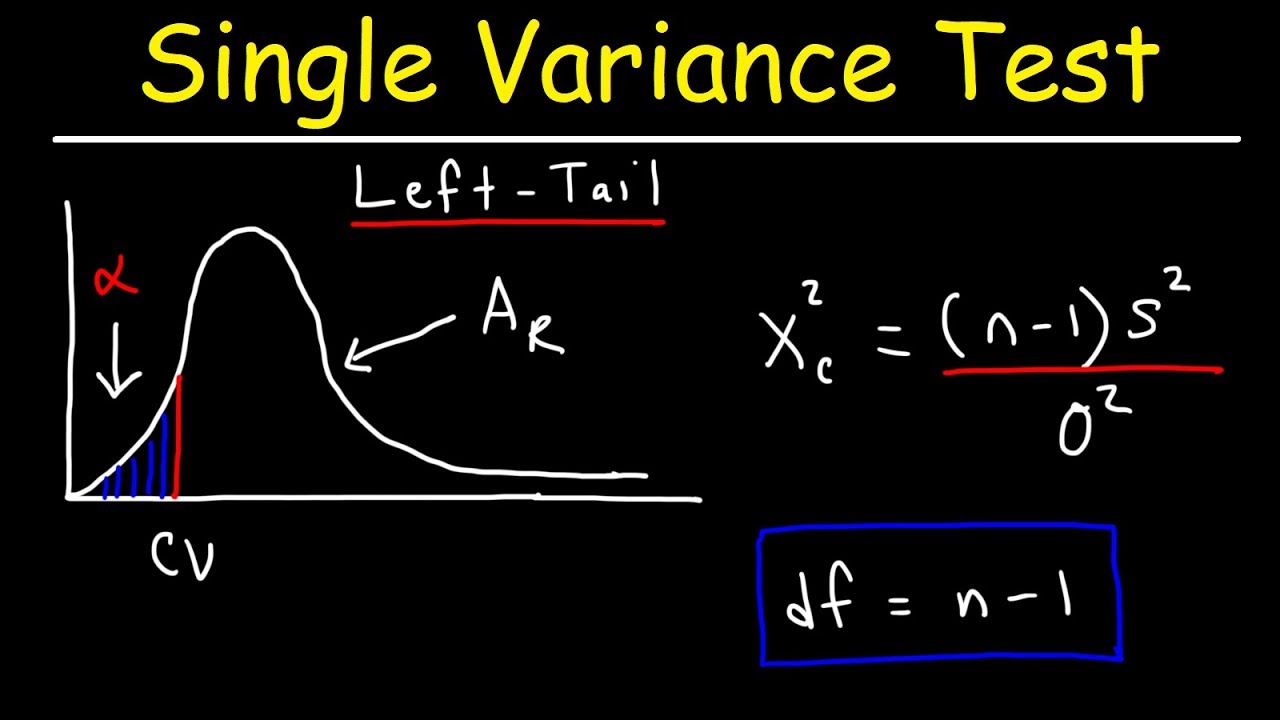

Chi Square Distribution Test of a Single Variance or Standard Deviation

What is Variance in Statistics? Learn the Variance Formula and Calculating Statistical Variance!

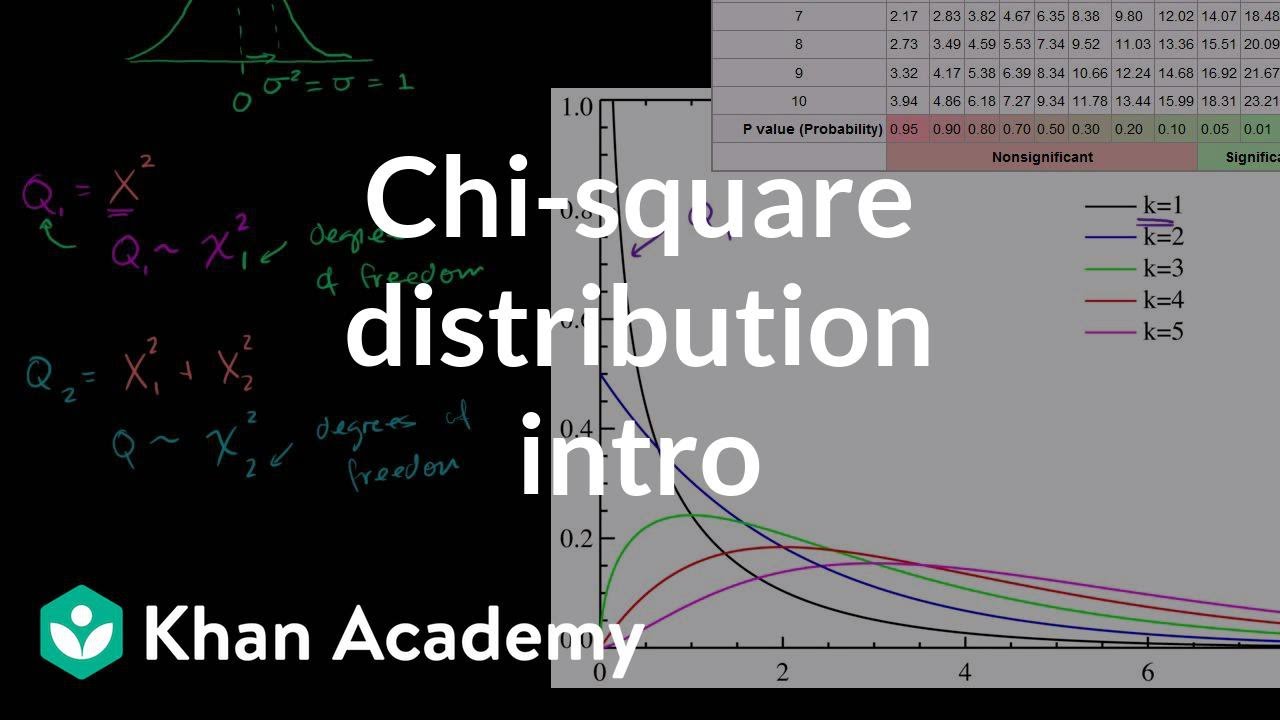

Chi-square distribution introduction | Probability and Statistics | Khan Academy

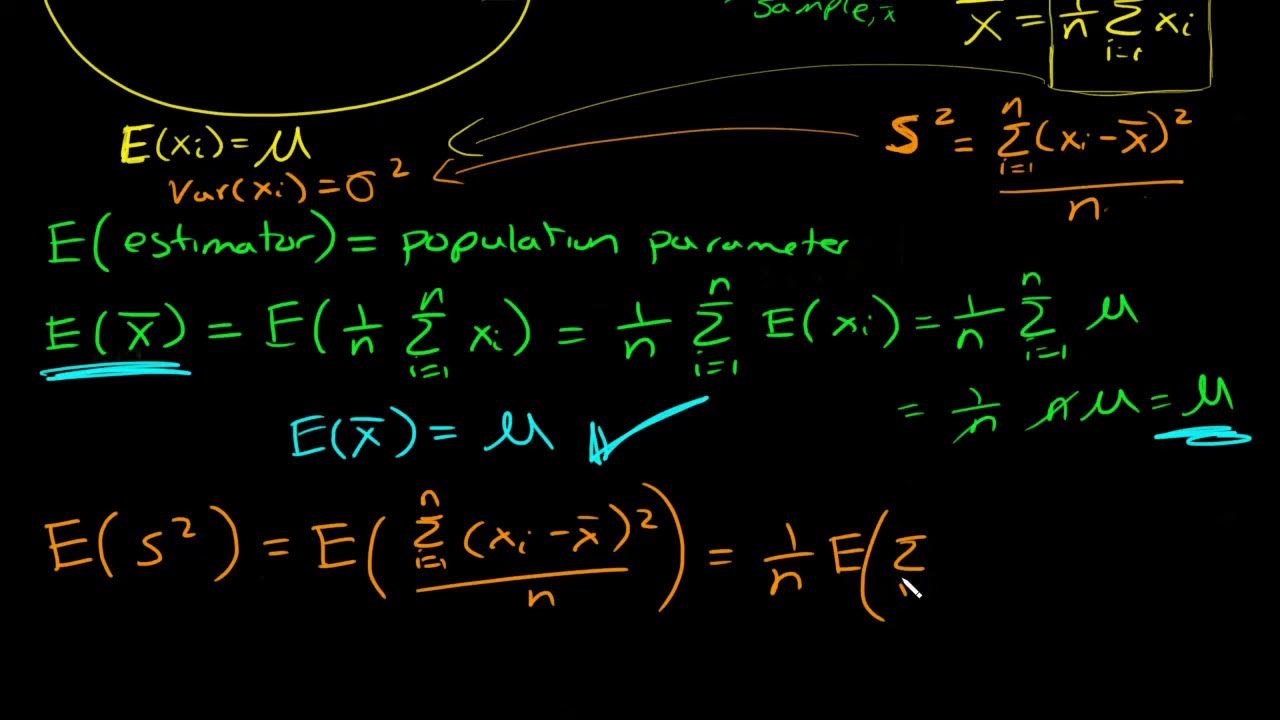

What is an unbiased estimator? Proof sample mean is unbiased and why we divide by n-1 for sample var

Degrees Of Freedom in a Chi-Squared Test

Dividing By n-1 Explained

5.0 / 5 (0 votes)

Thanks for rating: