10.2.5 Regression - Residuals and the Least-Squares Property

TLDR

This video script explores the concept of residuals and the least squares property in the context of linear regression. It defines a residual as the difference between an observed y-value and the corresponding predicted y-value, and illustrates this with a sample data set. The script explains that the 'best fit' line is the one that minimizes the sum of squared residuals, giving the smallest possible total squared error across all data points. The goal is to help viewers understand the relationship between residuals and the regression line, and how the line of best fit is derived using the least squares method.

Takeaways

- 📐 The script discusses the concept of residuals and the least squares property in the context of regression analysis.

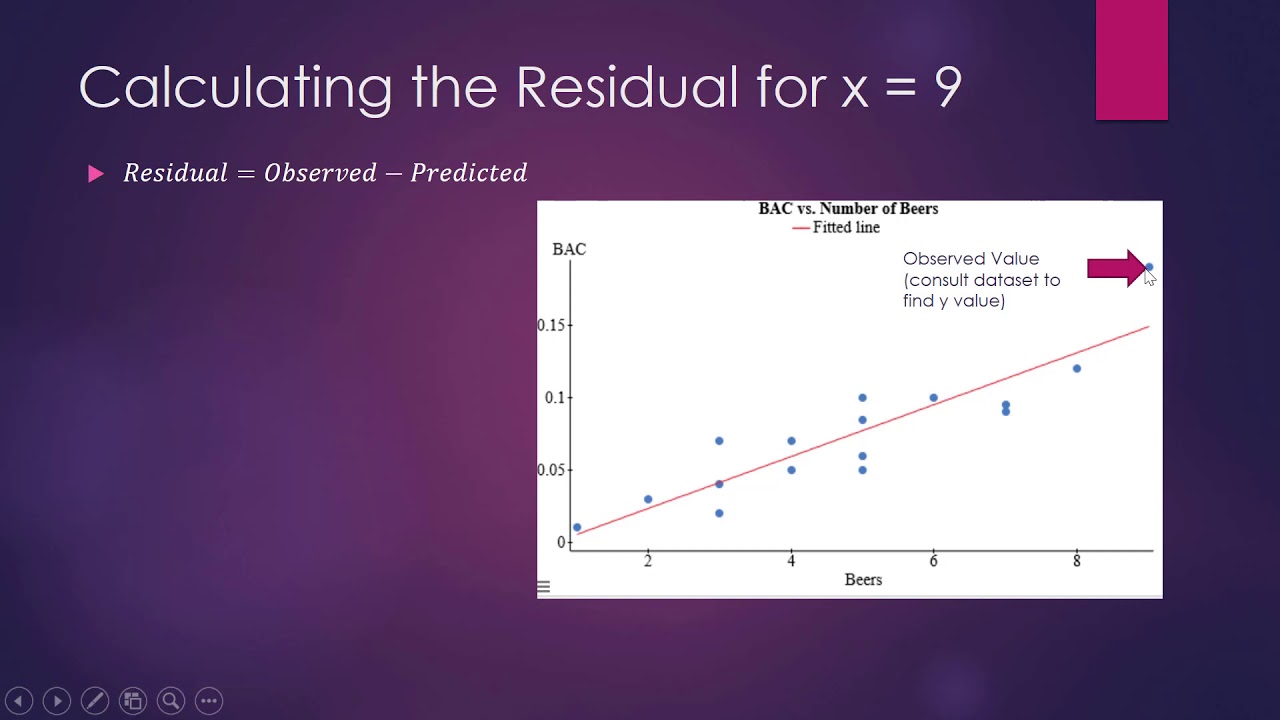

- 🔍 Residual is defined as the difference between the observed y value and the predicted y value (y - ŷ) from the regression equation.

- 📈 The goal is to understand the relationship between residuals and the regression line, and to explain what it means for a line to 'best fit' the data.

- 📊 The regression line is determined by minimizing the sum of the squares of the residuals, where each residual is the error between an actual value and its predicted value.

- 📉 A positive residual indicates that the actual y value is above the regression line, while a negative residual indicates it is below.

- 📝 The script provides a simple example with a regression equation ŷ = x + 1, and calculates residuals for different x values.

- 📉 The least squares property is satisfied when the sum of the squares of the residuals is minimized, indicating the best fit line.

- 📊 The concept of 'best fit' is about finding a line where the overall squared errors are as small as possible for the entire dataset.

- 🧩 The script explains that different lines can be drawn, but the one with the smallest sum of squared residuals is considered the best fit.

- 📚 The process of finding the best fit line involves calculus, specifically minimizing a function of several variables, which is covered in more advanced courses.

- 🔑 The coefficients of the regression equation (b₀ and b₁) are determined to minimize the sum of squared residuals, ensuring the line fits the data optimally.
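The residual calculation described in the takeaways can be sketched in a few lines of Python. Only the regression equation ŷ = x + 1 and the point (8, 4) appear in the summary; the other three data points and the helper name `predict` are assumptions chosen for illustration.

```python
# Illustrative data: only the point (8, 4) and the line y-hat = x + 1 come
# from the summary; the other three points are assumed for this sketch.
xs = [8, 12, 20, 24]
ys = [4, 24, 8, 32]


def predict(x):
    """Predicted value from the regression equation y-hat = x + 1."""
    return x + 1


# Residual = observed y minus predicted y (y - y-hat).
residuals = [y - predict(x) for x, y in zip(xs, ys)]

for x, y, r in zip(xs, ys, residuals):
    # The sign of the residual tells where the point sits relative to the line.
    position = "above" if r > 0 else "below" if r < 0 else "on"
    print(f"x={x}  y={y}  y-hat={predict(x)}  residual={r}  ({position} the line)")
```

For the point (8, 4) the prediction is 9, so the residual is 4 − 9 = −5 and the point lies below the line.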

Q & A

What is a residual in the context of regression analysis?

-A residual is the difference between the observed sample value of y (the actual y value) and the y value predicted by the regression equation (y-hat). It represents the error or the distance of the actual data point from the predicted regression line.

What does the regression equation predict for a given x value?

-The regression equation predicts the expected y value (y-hat) for a given x value based on the relationship defined by the coefficients of the regression line.

What is the purpose of the least squares property in regression analysis?

-The least squares property is used to determine the best fit line for a set of data points. The best fit line minimizes the sum of the squares of the residuals, where each residual is the signed vertical distance from a data point to the regression line.

How is the best fit line defined in regression analysis?

-The best fit line is defined as the line that minimizes the sum of the squares of the residuals. It is the line that has the smallest possible total squared distance from the data points.
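Written as a formula, the quantity being minimized might look like the following. The symbol $S$ and the index notation are shorthand introduced here, not notation taken from the video.

$$
S(b_0, b_1) = \sum_{i=1}^{n} \left(y_i - \hat{y}_i\right)^2 = \sum_{i=1}^{n} \bigl(y_i - (b_0 + b_1 x_i)\bigr)^2
$$

The best fit line is the choice of $b_0$ and $b_1$ that makes $S$ as small as possible.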

What is the significance of squaring the residuals in the least squares method?

-Squaring the residuals makes every term non-negative, so positive residuals (points above the line) and negative residuals (points below the line) cannot cancel each other out in the sum. The sum of the squares can then be calculated and minimized as a single measure of total error.

What happens if a different line is used to approximate the data instead of the least squares line?

-If a different line is used to approximate the data, the sum of the squares of its residuals will be greater than the sum for the least squares line, so it fits the data less well by this criterion.
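A quick numerical check of this idea, as a sketch: the data below are assumed for illustration (only the point (8, 4) and the line ŷ = x + 1 come from the summary), and the alternative lines are arbitrary choices, not lines used in the video.

```python
# Assumed illustrative data whose least squares line is y-hat = 1 + x.
xs = [8, 12, 20, 24]
ys = [4, 24, 8, 32]


def sum_squared_residuals(b0, b1):
    """Sum of (y - y-hat)^2 for the line y-hat = b0 + b1 * x."""
    return sum((y - (b0 + b1 * x)) ** 2 for x, y in zip(xs, ys))


best = sum_squared_residuals(1, 1)  # the line y-hat = 1 + x

# Hypothetical competing lines, given as (b0, b1) pairs.
alternatives = {
    "y-hat = 2 + x": (2, 1),
    "y-hat = 5 + 0.75x": (5, 0.75),
    "y-hat = 17 (horizontal)": (17, 0),
}

print(f"y-hat = 1 + x: {best}")
for label, (b0, b1) in alternatives.items():
    print(f"{label}: {sum_squared_residuals(b0, b1)}")
```

Each alternative produces a larger sum of squared residuals than ŷ = 1 + x, which is what the least squares property predicts for this data set.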

How are the coefficients of the regression equation determined?

-The coefficients of the regression equation, b0 and b1, are determined by minimizing the sum of the squares of the residuals. This is typically done using calculus techniques in a process known as least squares estimation.
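The summary does not show the formulas themselves. As a minimal sketch, the standard closed-form solution for simple linear regression can be coded as below; the function name `least_squares_line` and the sample data are assumptions made for illustration.

```python
def least_squares_line(xs, ys):
    """Return (b0, b1) minimizing the sum of squared residuals for y-hat = b0 + b1*x."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    # Slope: sum of cross-deviations divided by sum of squared x-deviations.
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    b1 = sxy / sxx
    # Intercept: forces the line through the point (x_bar, y_bar).
    b0 = y_bar - b1 * x_bar
    return b0, b1


# Assumed illustrative data; only the point (8, 4) appears in the summary.
b0, b1 = least_squares_line([8, 12, 20, 24], [4, 24, 8, 32])
print(f"y-hat = {b0} + {b1}x")
```

On this data the function recovers the line ŷ = 1 + x used in the summary's example. The sketch assumes the x values are not all identical, since otherwise the slope is undefined.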

What is the relationship between residuals and the regression line?

-The relationship between residuals and the regression line is that residuals measure the deviation of each data point from the line. The line with the smallest sum of squared residuals is considered the best fit for the data.

Why is minimizing the sum of squared residuals important in regression analysis?

-Minimizing the sum of squared residuals is important because it provides a measure of the model's accuracy. A smaller sum indicates that the model's predictions are closer to the actual data points, suggesting a better fit.

What can be inferred from a residual plot in the context of regression analysis?

-A residual plot can be used to analyze the correlation and regression results. Patterns in the residual plot can indicate issues such as non-linearity, heteroscedasticity, or the presence of outliers in the data.

Outlines

📈 Understanding Residuals and the Regression Line

In this segment, the concept of residuals is introduced as the difference between the observed y value and the predicted y value from the regression equation. The goal is to explain the relationship between residuals and the regression line. A simple example with a small dataset and a linear regression equation (ŷ = x + 1) is used to illustrate how residuals are calculated. The video script discusses how the regression line is determined to be the best fit by minimizing the sum of the squared residuals, which is visually represented on a graph. Points above and below the line result in positive and negative residuals, respectively, and the significance of these in determining the fit of the regression line is highlighted.

🔍 The Least Squares Property and Best Fit Line

This segment covers the least squares property, which is the criterion used to determine the best fit line in a linear regression model. The least squares line minimizes the sum of the squares of the residuals, ensuring the line fits the data as closely as possible. The script explains how squaring the residuals helps in this process and provides a hypothetical scenario where drawing a different line would result in a larger sum of squared residuals, thus not being the best fit. The importance of the coefficients b₀ and b₁ in achieving this minimum sum is also discussed, with a mention of calculus as a tool for deriving these coefficients in a more advanced context.

📚 Formulas for the Best Fit Line and Minimizing Residuals

The final segment focuses on the mathematical aspect of finding the best fit line by minimizing the sum of the squares of the residuals. It mentions that the optimal values for b₀ and b₁ can be found using calculus, specifically in the context of multivariable functions. The script suggests that these formulas ensure the line represents the best possible fit for the given data, minimizing the overall error. The discussion wraps up with a look forward to the next video, which will cover residual plots for analyzing correlation and regression results.
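The calculus step is only mentioned, not shown. A sketch of the standard derivation, using notation introduced here ($S$ for the sum of squared residuals, $\bar{x}$ and $\bar{y}$ for the sample means), treats $S$ as a function of the two unknowns and sets both partial derivatives to zero:

$$
S(b_0, b_1) = \sum_{i=1}^{n} \bigl(y_i - b_0 - b_1 x_i\bigr)^2
$$

$$
\frac{\partial S}{\partial b_0} = -2 \sum_{i=1}^{n} \bigl(y_i - b_0 - b_1 x_i\bigr) = 0,
\qquad
\frac{\partial S}{\partial b_1} = -2 \sum_{i=1}^{n} x_i \bigl(y_i - b_0 - b_1 x_i\bigr) = 0
$$

Solving this pair of equations gives

$$
b_1 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2},
\qquad
b_0 = \bar{y} - b_1 \bar{x}
$$

provided the $x_i$ are not all equal. The video may present these formulas in a different but equivalent form.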

Keywords

💡Residual

💡Regression Line

💡Least Squares Property

💡Observed Sample Value

💡Predicted y Value

💡Error

💡Coefficients

💡Sum of Squares

💡Best Fit Line

💡Residual Plot

Highlights

The video discusses learning outcome number five from lesson 10.2 about residuals and the least squares property.

The goal is to explain the relationship between residuals and the regression line in one's own words.

Residuals are defined as the difference between the observed and predicted y values by the regression equation.

A sample data set with four ordered pairs is used to illustrate the concept of residuals.

The regression equation for the sample is ŷ = x + 1, predicting y values based on x.

An example is given where x equals eight: the regression equation predicts ŷ = 9, but the actual y is 4.

The residual is calculated as the difference between the actual y value and the predicted y value (y − ŷ), which for this point is 4 − 9 = −5.

The concept of a residual plot is introduced, showing the signed distance between data points and the regression line.

Points below the regression line have negative residuals, while points above have positive residuals.

The least squares property is explained as the principle that the best fit line minimizes the sum of squared residuals.

The least squares line is determined by minimizing the sum of the squares of the residuals for all data points.

An example illustrates that drawing another line would result in a larger sum of squared residuals compared to the best fit line.

The coefficients b₀ and b₁ of the regression equation are those that minimize the sum of squared residuals.

Calculus is used to derive the formulas for b₀ and b₁ that minimize the sum of squared residuals.

The best fit line is the one that makes the sum of squared errors as small as possible for the entire data set.

The next video will discuss learning outcome number six about residual plots and their use in analyzing correlation and regression results.

Transcripts

Browse More Related Video

Introduction To Ordinary Least Squares With Examples

The Main Ideas of Fitting a Line to Data (The Main Ideas of Least Squares and Linear Regression.)

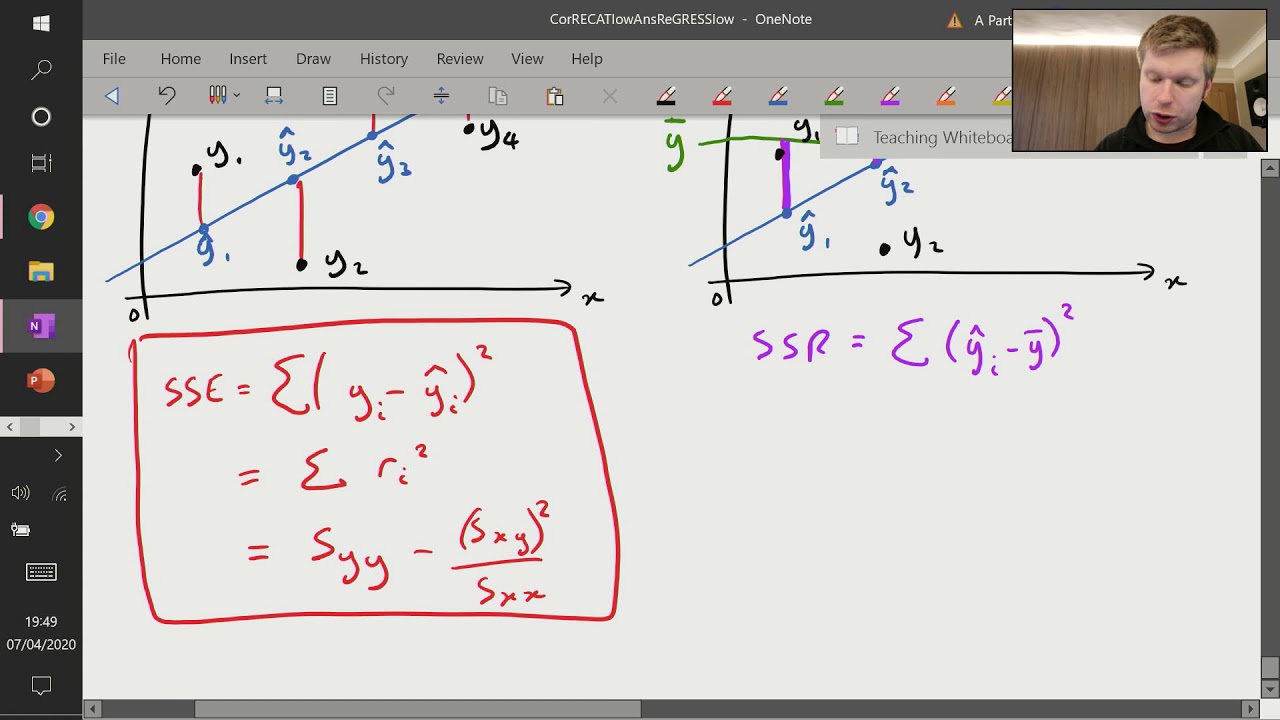

Correlation and Regression (6 of 9: Sum of Squares - SSE, SSR and SST)

Introduction to residuals and least squares regression

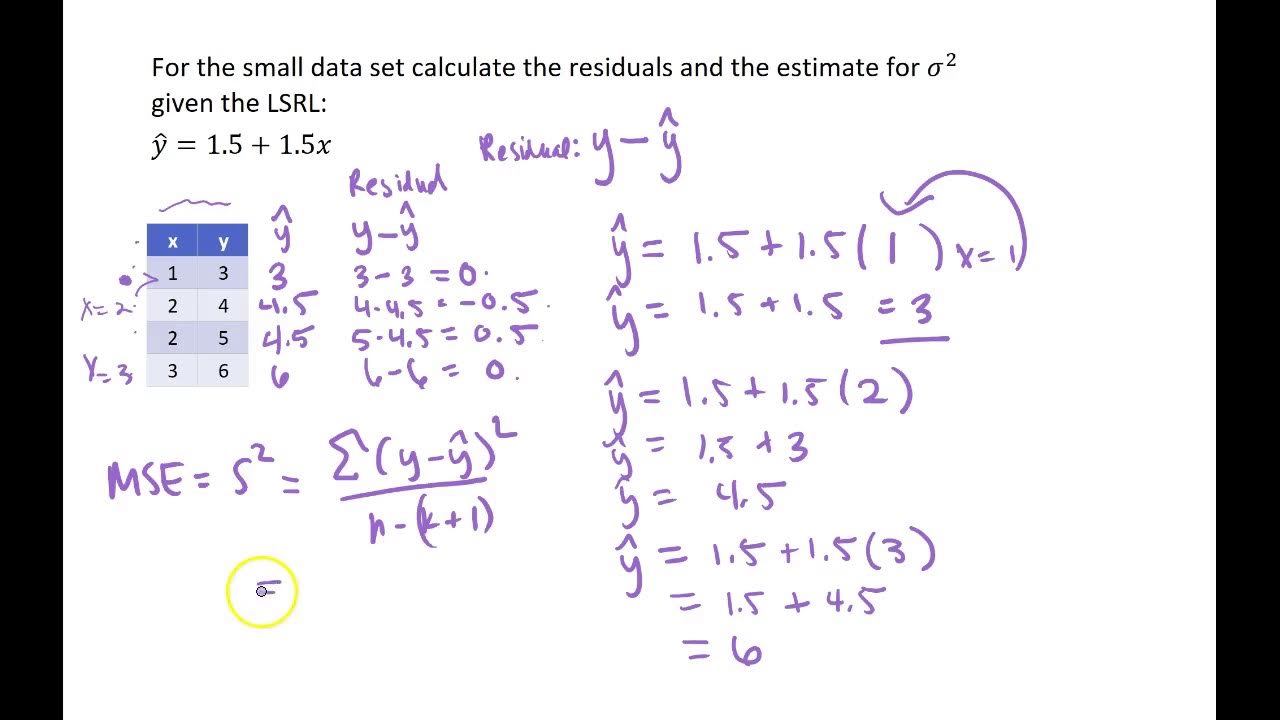

Calculating Residuals and MSE for Regression by hand

How to Calculate the Residual

5.0 / 5 (0 votes)

Thanks for rating: