The Internet: How Search Works

TLDR

The video explains why search engines matter and how they work. Search teams at companies such as Google and Bing use artificial intelligence and machine learning to answer users' queries accurately and relevantly. Rather than searching the web in real time, search engines scan it in advance to build a search index. They then rank matching pages with algorithms such as Google's PageRank, which weighs relevance and the number of links from other pages. The video also covers spam and how search algorithms keep evolving to counter it. Finally, it describes how modern search engines use context, including implicit information such as the user's location, to personalize results. Machine learning lets them grasp the meaning of words rather than just match them, so the information people want stays within reach even as the internet grows exponentially.

Takeaways

- 🔍 **Search Engine Responsibility**: Search engines like Google and Bing are tasked with providing the best answers to a wide range of questions, from trivial to incredibly important, reflecting the significant responsibility they hold in shaping public knowledge.

- 🌐 **Proactive Crawling**: Search engines don't search the web in real time. Instead, they crawl the internet in advance to build a searchable index, so results come back quickly when a user makes a query.

- 🕸️ **The Role of Spiders**: Search engines use automated programs called spiders to traverse the web, following hyperlinks and collecting data on web pages to be stored in a search index.

- 📚 **Search Index Database**: The information gathered by spiders is stored in a database known as a search index, which is used to provide quick and relevant search results.

- ✈️ **Real-Time Search Results**: When a user searches for something like 'how long does it take to travel to Mars', the search engine uses its pre-collected data to provide an immediate list of relevant pages.

- 🔑 **Determining Relevance**: Search engines use complex algorithms to determine the most relevant results, considering factors like the presence of search terms in page titles and the proximity of words.

- 🏆 **PageRank Algorithm**: Google's PageRank algorithm, named after Google co-founder Larry Page (who developed it with Sergey Brin), ranks pages by how many other web pages link to them, counting links from highly ranked pages more heavily, on the assumption that popularity correlates with relevance.

- 🛡️ **Algorithm Updates**: Search engines regularly update their algorithms to combat spam and ensure that untrustworthy sites do not appear at the top of search results.

- 🧐 **User Vigilance**: Users are encouraged to be cautious and verify the reliability of sources by checking web addresses, as search engines can be manipulated.

- 📍 **Location-Based Results**: Modern search engines use implicit information, such as a user's location, to provide more personalized and relevant search results, like showing nearby dog parks without specifying a location.

- 🤖 **Machine Learning**: Search engines utilize machine learning to understand not just the words on a page but their underlying meanings, allowing for more accurate and nuanced search results.

- 📈 **Internet Growth and Search Evolution**: As the internet grows exponentially, search engine teams keep refining their algorithms to give users the most relevant results as quickly as possible.

Q & A

What is the primary responsibility of search engines?

-The primary responsibility of search engines is to provide users with the best answers to their queries, whether they are trivial or incredibly important.

Why do search engines not search the World Wide Web in real time for every search?

-Search engines do not search the World Wide Web in real time because there are over a billion websites and hundreds more are created every minute. Searching in real time would be too time-consuming.

How do search engines make searches faster?

-Search engines make searches faster by constantly scanning the web in advance and recording information in a special database called a search index, which can be accessed quickly when a user performs a search.
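
To make the idea of a search index concrete, here is a minimal Python sketch of an inverted index: a map from each word to the pages that contain it. The page URLs and texts are hypothetical, and a real search index stores far more (word positions, titles, link data). The sketch only shows why a lookup is fast once the scanning has been done ahead of time.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages: dict[str, str]) -> dict[str, set[str]]:
    """Done ahead of time: map each word to the set of URLs whose text contains it."""
    index: dict[str, set[str]] = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return dict(index)


def lookup(index: dict[str, set[str]], query: str) -> set[str]:
    """Done at query time: return the pages that contain every query word."""
    words = tokenize(query)
    if not words:
        return set()
    matches = set(index.get(words[0], set()))
    for word in words[1:]:
        matches &= index.get(word, set())
    return matches


# Hypothetical pages, assumed to have been collected already by a crawler.
pages = {
    "https://example.com/mars": "How long does it take to travel to Mars?",
    "https://example.com/moon": "Travel time to the Moon",
    "https://example.com/dogs": "Dog parks near you",
}
index = build_index(pages)
print(sorted(lookup(index, "travel Mars")))  # prints ['https://example.com/mars']
```

The expensive work (reading every page) happens once in `build_index`; answering a query only intersects a few small sets.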

What is a Spider program in the context of search engines?

-A Spider program is a tool used by search engines to crawl through web pages, following hyperlinks and collecting information about each page, which is then stored in the search index.
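
A spider's core loop can be sketched as a breadth-first traversal of hyperlinks. In this illustrative Python version the web is replaced by a hypothetical in-memory dictionary (`WEB`) so the code runs offline. A real crawler would download pages over the network and extract links from their HTML, none of which is modeled here. The `seen` set matters because links often loop back to pages already visited.

```python
from collections import deque

# Hypothetical stand-in for the web: URL -> (page text, outgoing hyperlinks).
WEB = {
    "a.example": ("Welcome to site A", ["b.example", "c.example"]),
    "b.example": ("Site B talks about Mars", ["c.example"]),
    "c.example": ("Site C links back to A", ["a.example", "d.example"]),
    "d.example": ("Site D has no links", []),
}


def crawl(start: str, max_pages: int = 100) -> dict[str, str]:
    """Visit pages breadth-first, recording each page's text for the index."""
    seen = {start}
    queue = deque([start])
    collected: dict[str, str] = {}
    while queue and len(collected) < max_pages:
        url = queue.popleft()
        if url not in WEB:  # broken link: nothing to fetch
            continue
        text, links = WEB[url]
        collected[url] = text
        for link in links:
            if link not in seen:  # skip pages already queued or visited
                seen.add(link)
                queue.append(link)
    return collected


print(list(crawl("a.example")))
```

Starting from `a.example`, the spider reaches all four pages by following links, even though `d.example` is only linked from `c.example`.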

How does a search engine determine the best matches to show first in search results?

-A search engine uses its own algorithm to rank pages based on what it thinks the user wants. The algorithm may consider factors such as whether the search term appears in the page title or whether all the words appear next to each other.
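
Signals like these can be combined into a single score. The sketch below is a toy, not any real engine's formula: the function name `score` and the weights (3 points per query word in the title, 1 per query word in the body, 5 if the whole query appears as a consecutive phrase) are arbitrary assumptions chosen only to show how such signals push one page above another.

```python
def score(query: str, title: str, body: str) -> int:
    """Toy relevance score; the weights are illustrative assumptions."""
    q = query.lower().split()
    title_words = title.lower().split()
    body_words = body.lower().split()
    points = 0
    # Signal 1: query words that appear in the page title count heavily.
    points += 3 * sum(1 for w in q if w in title_words)
    # Signal 2: query words that appear anywhere in the body count a little.
    points += sum(1 for w in q if w in body_words)
    # Signal 3: bonus if all query words appear next to each other, in order.
    n = len(q)
    if n and any(body_words[i:i + n] == q for i in range(len(body_words) - n + 1)):
        points += 5
    return points


# Hypothetical (title, body) pairs for two candidate pages.
candidates = [
    ("Space news", "mars rovers and travel to the moon"),
    ("Mars travel guide", "how long travel to mars takes"),
]
query = "travel to mars"
ranked = sorted(candidates, key=lambda page: score(query, *page), reverse=True)
for title, body in ranked:
    print(score(query, title, body), title)
```

Both pages contain every query word, but the guide wins (14 points versus 3) because the words appear in its title and as an exact phrase in its body.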

What is the PageRank algorithm, and why is it significant?

-The PageRank algorithm is a famous method invented by Google for ranking search results. It considers how many other web pages link to a given page, assuming that if many websites find a page interesting, it's likely relevant to the user's search.
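
PageRank is usually described as an iterative computation in which each page passes its current score along its outgoing links, so a link from a highly ranked page is worth more than one from an obscure page. The following is a minimal power-iteration sketch in Python using the textbook damping factor of 0.85 and a hypothetical four-page link graph. Google's production ranking combines many more signals, so treat this only as an illustration of the idea.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85, iters: int = 50) -> dict[str, float]:
    """Power iteration: repeatedly redistribute rank along outgoing links."""
    pages = list(links)
    n = len(pages)
    if n == 0:
        return {}
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iters):
        # Every page gets a small baseline share (the "random jump").
        new = {p: (1.0 - damping) / n for p in pages}
        for p in pages:
            outs = links[p]
            if outs:
                share = damping * rank[p] / len(outs)
                for target in outs:
                    new[target] += share
            else:
                # A page with no outgoing links spreads its rank evenly.
                for target in pages:
                    new[target] += damping * rank[p] / n
        rank = new
    return rank


# Hypothetical link graph: page -> pages it links to.
links = {
    "home": ["about", "mars"],
    "about": ["home"],
    "mars": ["home"],
    "blog": ["mars"],
}
ranks = pagerank(links)
for page, r in sorted(ranks.items(), key=lambda kv: -kv[1]):
    print(f"{page}: {r:.3f}")
```

Here `home` ends up with the highest score because two pages link to it, while `blog`, which no page links to, keeps only the baseline share.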

Why do search engines update their algorithms regularly?

-Search engines update their algorithms to prevent spammers from gaming the system and to ensure that fake or untrustworthy sites do not appear at the top of search results.

How can users identify untrustworthy pages in search results?

-Users can identify untrustworthy pages by examining the web address and ensuring it comes from a reliable source.
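
Examining a web address mostly means reading its host name correctly, because a familiar name can appear inside a longer, unrelated domain. This small Python sketch uses the standard library's `urllib.parse.urlsplit` to extract the host and compare it against a hypothetical allowlist (`TRUSTED_DOMAINS`). A matching domain is a useful sign, not a guarantee that the content is accurate.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist for illustration only.
TRUSTED_DOMAINS = {"nasa.gov", "wikipedia.org"}


def is_from_trusted_site(url: str) -> bool:
    """True if the URL's host is a trusted domain or one of its subdomains."""
    host = (urlsplit(url).hostname or "").lower()
    for domain in TRUSTED_DOMAINS:
        # "en.wikipedia.org" is a subdomain of "wikipedia.org", but
        # "wikipedia.org.example.net" belongs to "example.net".
        if host == domain or host.endswith("." + domain):
            return True
    return False


for url in ["https://en.wikipedia.org/wiki/Mars",
            "https://wikipedia.org.example.net/mars"]:
    print(url, is_from_trusted_site(url))
```

The second address starts with a familiar name, but its actual domain is `example.net`, so the check rejects it.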

How do modern search engines use information not explicitly provided by the user to improve search results?

-Modern search engines use location data and other contextual information to provide more relevant results, such as showing nearby dog parks even if the user did not specify their location.
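
One simple way to use location is to sort candidate results by distance from the user. The sketch below computes great-circle distance with the haversine formula. The park names and coordinates are made up, and the video does not specify how a real search engine obtains or weighs location.

```python
import math


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two (latitude, longitude) points, in kilometres."""
    earth_radius_km = 6371.0  # mean Earth radius
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def nearest(user_lat: float, user_lon: float,
            places: list[tuple[str, float, float]], k: int = 3) -> list[str]:
    """Names of the k places closest to the user's (implicit) location."""
    by_distance = sorted(places, key=lambda p: haversine_km(user_lat, user_lon, p[1], p[2]))
    return [name for name, _, _ in by_distance[:k]]


# Hypothetical dog parks (name, latitude, longitude) and a hypothetical user location.
dog_parks = [
    ("Park A", 40.01, -75.00),
    ("Park B", 40.50, -75.00),
    ("Park C", 40.00, -75.02),
]
print(nearest(40.00, -75.00, dog_parks, k=2))  # prints ['Park A', 'Park C']
```

The query itself never mentions a place; the user's coordinates are supplied separately, which is the sense in which the location is implicit.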

What role does machine learning play in improving search engine results?

-Machine learning enables search algorithms to understand the underlying meaning of words on a page, rather than just searching for individual letters or words, which helps in finding the most relevant results for a user's query.
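
The intuition behind understanding meaning can be shown with a toy example of word vectors: each word is represented as a list of numbers, and the surrounding words decide which sense of an ambiguous word fits best, as in "fast pitcher" versus "large pitcher". Everything here is an assumption for illustration. The two-dimensional vectors are hand-written, whereas machine-learning systems learn vectors with many more dimensions from large amounts of text.

```python
import math

# Hand-written toy vectors; the two dimensions loosely mean (sports, kitchen).
WORD_VECTORS = {
    "fast": (0.9, 0.1),
    "throws": (1.0, 0.0),
    "baseball": (1.0, 0.0),
    "large": (0.2, 0.6),
    "water": (0.0, 1.0),
    "glass": (0.1, 0.9),
}
# Hypothetical vectors for the two senses of "pitcher".
SENSES = {
    "pitcher (baseball player)": (1.0, 0.0),
    "pitcher (kitchen jug)": (0.0, 1.0),
}


def cosine(u: tuple[float, ...], v: tuple[float, ...]) -> float:
    """Cosine similarity: 1.0 means the vectors point the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


def disambiguate(phrase: str) -> str:
    """Average the vectors of known context words and pick the closest sense."""
    vecs = [WORD_VECTORS[w] for w in phrase.lower().split() if w in WORD_VECTORS]
    if not vecs:
        return "unknown"
    context = tuple(sum(dim) / len(vecs) for dim in zip(*vecs))
    return max(SENSES, key=lambda sense: cosine(SENSES[sense], context))


print(disambiguate("fast pitcher"))
print(disambiguate("large pitcher"))
```

The word "pitcher" is identical in both phrases; only the neighboring word changes which meaning scores higher.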

How does the growth of the internet affect the ability of search engines to provide relevant information?

-Despite the exponential growth of the internet, if search engine teams design their systems effectively, the information users want should remain easily accessible through a few keystrokes.

What is the ultimate goal of search engine teams in terms of user experience?

-The ultimate goal of search engine teams is to provide users with fast, accurate, and relevant search results that match their queries, using advanced algorithms and machine learning to continuously improve the search experience.

Outlines

🔍 Search Engine Responsibility and Function

The first paragraph introduces John, who leads the search and machine learning teams at Google, and Akshaya from the Bing search team. They discuss the importance of giving accurate answers to users' queries, which range from trivial to significant. The question of how long it takes to travel to Mars then serves as an example of how a search engine turns a request into results. Because the web is so vast, search engines don't search it in real time; instead, they scan it in advance to build a search index, from which relevant information can be retrieved quickly when a user searches. The paragraph also describes how a search engine's 'Spider' program traverses web pages and collects data for the search index.

Keywords

💡Search Engine

💡Machine Learning

💡Spider

💡Search Index

💡Algorithm

💡Page Rank

💡Spammers

💡Reliable Source

💡Artificial Intelligence (AI)

💡Hyperlinks

💡Internet Growth

Highlights

John leads the search and machine learning teams at Google, emphasizing the importance of providing the best answers to users' queries.

Akshaya from the Bing search team discusses the integration of AI and machine learning, with a focus on user impact and societal implications.

The question of how long it takes to travel to Mars is used to illustrate the search engine's process of turning a user's request into a result.

Search engines do not search the web in real time because of the vast number of websites; instead, they rely on data scanned in advance.

Search engines use spiders to traverse web pages, collecting information to build a search index for faster retrieval.

The search index is a database that stores information from visited web pages to facilitate quick search results.

Search engines look for search terms in their index and rank pages based on various factors to determine the best matches.

Google's PageRank algorithm ranks pages based on the number of other web pages linking to them, indicating relevance.

Spammers attempt to manipulate search algorithms to rank higher, prompting search engines to regularly update their algorithms.

Users are advised to be vigilant about untrustworthy pages by checking web addresses and ensuring they come from reliable sources.

Modern search engines use advanced algorithms to deliver better, faster results, even drawing on implicit information about the user.

Search engines can infer user location and provide relevant local results without explicit location input from the user.

Search engines understand the context and meaning of words to provide more accurate results, distinguishing between 'fast pitcher' as an athlete and 'large pitcher' for a kitchen.

Machine learning is a key component in search algorithms, allowing them to understand the underlying meaning of words beyond their literal form.

The internet's exponential growth is matched by the continuous evolution of search programs to keep information readily accessible.

The responsibility of search engine teams is to ensure that the information users need is easily accessible despite the internet's rapid expansion.

Transcripts

Browse More Related Video

Why are Matrices Useful?

How To Use Google Trends - Google Trends Tutorial For Beginners

Types Of Machine Learning | Machine Learning Algorithms | Machine Learning Tutorial | Simplilearn

5 Google Trends Keyword Research and Content Strategy Tips

The beauty I see in algebra: Margot Gerritsen at TEDxStanford

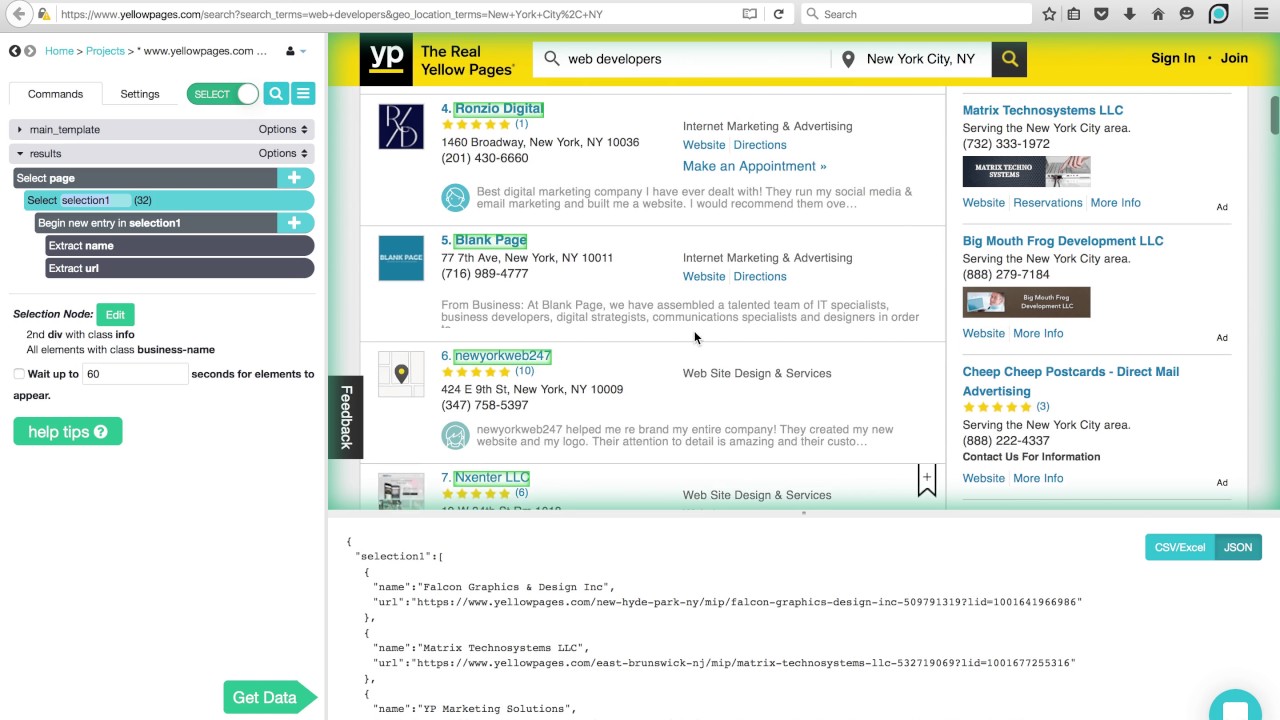

ParseHub Tutorial: Directories

5.0 / 5 (0 votes)

Thanks for rating: