Cosine similarity, cosine distance explained | Math, Statistics for data science, machine learning

TLDR: This video script introduces the concepts of cosine similarity and cosine distance and explains how data scientists apply them to document classification. It uses a financial company's document-tagging problem to illustrate how vector mathematics can measure document similarity based on how often specific terms occur. The script then moves to a practical Python example that uses the scikit-learn (sklearn) library to calculate cosine similarity and distance, demonstrating how these measures can automatically annotate and categorize documents.

Takeaways

- 📚 The concept of cosine similarity and cosine distance is introduced as important tools in data science.

- 🔍 Cosine similarity is used to determine the similarity between documents based on the frequency of certain words or terms.

- 📈 In a financial context, data scientists can use cosine similarity to automatically annotate and categorize documents by company associations.

- 🌟 Vector mathematics allows for the representation of documents as vectors, with the frequency of terms like 'iPhone' and 'Galaxy' as components.

- 📊 The magnitude and direction of vectors are key in determining document similarity through the angle between them.

- 📐 The cosine of the angle between two vectors is used to quantify similarity, with a value close to 1 indicating high similarity.

- 🔄 Cosine distance, defined as 1 minus cosine similarity, expresses dissimilarity; a value close to 0 means the documents are close.

- 💡 The academic formula for cosine similarity is the dot product of two vectors divided by the product of their magnitudes.

- 🛠️ Python's scikit-learn (sklearn) library provides a `cosine_similarity` function that takes the vectors to compare as input.

- 📊 An example in the script demonstrates how to calculate the cosine similarity and distance between documents represented as vectors.

- 🎯 The script concludes with a practical application of cosine similarity and distance in distinguishing between Apple and Samsung documents.
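The formula from the takeaways (dot product divided by the product of magnitudes) and the definition of cosine distance (1 minus similarity) can be written in a few lines of plain Python. This is a minimal sketch with no third-party dependencies; the function names and the [iPhone, Galaxy] counts are hypothetical illustrations, not code from the video.

```python
import math

def cosine_similarity(a, b):
    """Dot product of a and b divided by the product of their magnitudes."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)

def cosine_distance(a, b):
    """Cosine distance is 1 minus cosine similarity."""
    return 1 - cosine_similarity(a, b)

# Hypothetical [iPhone, Galaxy] mention counts
apple_doc_1 = [3, 1]
apple_doc_2 = [6, 2]
samsung_doc = [1, 3]

print(cosine_similarity(apple_doc_1, apple_doc_2))  # ≈ 1.0: same direction
print(cosine_similarity(apple_doc_1, samsung_doc))  # ≈ 0.6
print(cosine_distance(apple_doc_1, samsung_doc))    # ≈ 0.4
```

Note that [3, 1] and [6, 2] score as identical even though one document mentions the products twice as often: cosine similarity compares direction only, not magnitude.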

Q & A

What are the main concepts introduced in the transcript?

-The main concepts introduced are cosine similarity and cosine distance, their applications in data science, particularly in document classification and annotation, and how to implement these concepts using Python code.

How does the transcript use the example of financial documents to explain cosine similarity?

-The transcript uses the example of financial documents mentioning 'iPhone' and 'Galaxy' to illustrate how the ratio of these mentions can help classify a document as related to Apple or Samsung. It then explains how cosine similarity can be used to determine the angle between vectors representing these documents, which in turn indicates the similarity between documents.
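One way to turn this idea into automatic tagging is to compare each document vector with a reference direction per company and pick the most similar one. This is a minimal sketch under stated assumptions: the reference vectors [1, 0] for Apple and [0, 1] for Samsung and the helper `tag_document` are hypothetical and do not come from the video.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Hypothetical reference directions over [iPhone, Galaxy] counts:
# a "pure" Apple document mentions only iPhone, a "pure" Samsung one only Galaxy.
REFERENCES = {"Apple": [1, 0], "Samsung": [0, 1]}

def tag_document(counts):
    """Return the company whose reference vector is most similar to counts."""
    return max(REFERENCES, key=lambda company: cosine_similarity(counts, REFERENCES[company]))

print(tag_document([3, 1]))  # Apple
print(tag_document([0, 5]))  # Samsung; works even though iPhone is never mentioned
```

Unlike a raw iPhone-to-Galaxy ratio, this never divides by a count that may be zero, and it extends to more products by adding columns and reference vectors.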

What is the significance of the angle between two vectors in the context of document similarity?

-The angle between two vectors determines the document similarity. A smaller angle indicates higher similarity, while a larger angle suggests that the documents are less similar or different.

How does the transcript transition from the concept of angles to cosine similarity?

-The transcript explains that although angles indicate similarity, they are not intuitive for people to interpret. It therefore introduces cosine similarity, which converts the angle into a value between 0 and 1 for word-count vectors (whose components are never negative), where 1 indicates maximum similarity and 0 indicates no similarity.
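To see how the angle and its cosine relate, the sketch below computes both for a few pairs of vectors. It is an illustration with hypothetical counts; the helper `angle_and_cosine` is not from the video.

```python
import math

def angle_and_cosine(a, b):
    """Return (angle in degrees, cosine of the angle) between vectors a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    cos = dot / norms
    # Clamp to [-1, 1] to guard against floating-point drift before acos
    angle = math.degrees(math.acos(max(-1.0, min(1.0, cos))))
    return angle, cos

pairs = {
    "same direction": ([3, 1], [6, 2]),
    "somewhat apart": ([3, 1], [1, 3]),
    "perpendicular": ([4, 0], [0, 7]),
}
for label, (a, b) in pairs.items():
    angle, cos = angle_and_cosine(a, b)
    print(f"{label}: angle = {angle:.1f} degrees, cosine = {cos:.2f}")
```

A smaller angle gives a cosine closer to 1, and a right angle gives 0. Because counts are never negative, such vectors can never be more than 90 degrees apart, which is why the cosine stays in the 0 to 1 range here.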

What is the academic formula for cosine similarity?

-The academic formula for cosine similarity between two vectors a and b is given by (a dot b) / (magnitude of a * magnitude of b), where a dot b is equivalent to the product of the magnitudes and the cosine of the angle between the vectors.
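Written out component by component for n-dimensional vectors, the formula is shown below, followed by a worked example with the hypothetical counts a = (3, 1) and b = (1, 3) (illustrative numbers, not necessarily those used in the video):

```latex
\cos\theta
  = \frac{\mathbf{a}\cdot\mathbf{b}}{\lVert\mathbf{a}\rVert\,\lVert\mathbf{b}\rVert}
  = \frac{\sum_{i=1}^{n} a_i b_i}{\sqrt{\sum_{i=1}^{n} a_i^2}\,\sqrt{\sum_{i=1}^{n} b_i^2}}

\cos\theta
  = \frac{3\cdot 1 + 1\cdot 3}{\sqrt{3^2 + 1^2}\,\sqrt{1^2 + 3^2}}
  = \frac{6}{\sqrt{10}\,\sqrt{10}}
  = 0.6,
\qquad
1 - \cos\theta = 0.4
```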

How is cosine distance defined in relation to cosine similarity?

-Cosine distance is defined as 1 minus the cosine similarity. It represents the dissimilarity between two vectors: 0 means the vectors point in the same direction (similar documents), and 1 means they are perpendicular (very different documents). For count vectors, which have no negative components, 1 is the largest possible value.
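The 0-to-1 range of cosine distance holds for word counts; for arbitrary vectors with negative components it extends to 2 (opposite directions). A minimal NumPy sketch, with hypothetical vectors chosen to show each case:

```python
import numpy as np

def cosine_distance(a, b):
    """1 minus the cosine similarity of a and b."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    similarity = a @ b / (np.linalg.norm(a) * np.linalg.norm(b))
    return 1.0 - similarity

print(cosine_distance([3, 1], [6, 2]))    # ≈ 0: same direction
print(cosine_distance([4, 0], [0, 7]))    # 1: perpendicular
print(cosine_distance([1, 2], [-1, -2]))  # ≈ 2: opposite (needs negative components)
```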

What Python module does the transcript use to calculate cosine similarity?

-The transcript uses Python's scikit-learn (sklearn) library to calculate cosine similarity.
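A sketch of the scikit-learn calls, assuming hypothetical [iPhone, Galaxy] counts. `cosine_similarity` and `cosine_distances` live in `sklearn.metrics.pairwise` and expect 2-D inputs of shape (n_samples, n_features), so a single vector must be wrapped in an extra list or reshaped; the result is a matrix of pairwise values.

```python
import numpy as np
from sklearn.metrics.pairwise import cosine_similarity, cosine_distances

# Hypothetical [iPhone, Galaxy] counts, each wrapped as a 1 x 2 matrix
doc_a = [[3, 1]]
doc_b = [[6, 2]]
doc_c = [[1, 3]]

print(cosine_similarity(doc_a, doc_b))  # 1x1 array, ≈ 1.0
print(cosine_similarity(doc_a, doc_c))  # 1x1 array, ≈ 0.6
print(cosine_distances(doc_a, doc_c))   # 1x1 array, ≈ 0.4

# A 1-D NumPy vector can be reshaped into a single-row matrix instead
v = np.array([3, 1]).reshape(1, -1)
print(cosine_similarity(v, v)[0, 0])    # ≈ 1.0
```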

How does the transcript demonstrate the calculation of cosine similarity between different documents?

-The transcript provides a step-by-step example of calculating cosine similarity between vectors representing document features, such as the counts of mentions for 'iPhone' and 'Galaxy', and interprets the resulting value to determine the similarity between documents.

What is the role of Pandas in the Python code example given in the transcript?

-In the Python code example, Pandas is used to create a data frame that holds the vectors representing the documents. This data frame is then used as input for the cosine similarity function.
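A sketch of the DataFrame approach: each row holds one document's pre-counted mentions, and passing the whole frame to scikit-learn's `cosine_similarity` returns every pairwise score at once. The document names and counts are hypothetical, not the ones used in the video.

```python
import pandas as pd
from sklearn.metrics.pairwise import cosine_similarity

# Hypothetical pre-counted product mentions, one row per document
df = pd.DataFrame(
    {"iPhone": [3, 6, 1, 0], "Galaxy": [1, 2, 4, 5]},
    index=["apple_doc_1", "apple_doc_2", "samsung_doc_1", "samsung_doc_2"],
)

# Full pairwise similarity matrix, labelled with the document names
sim = pd.DataFrame(cosine_similarity(df), index=df.index, columns=df.index)
print(sim.round(2))

# Comparing two specific documents: double brackets keep each input 2-D
print(cosine_similarity(df.loc[["apple_doc_1"]], df.loc[["samsung_doc_1"]]))
```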

How does the transcript address the issue of multiple features in a document?

-The transcript explains that while the example uses a simple two-dimensional vector with 'iPhone' and 'Galaxy' as features, in real-life scenarios, documents may have hundreds of features. The same mathematical principles apply, and cosine similarity can be calculated for high-dimensional vectors as well.
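The same calculation works unchanged when more features are added. This NumPy sketch uses hypothetical counts for four products named elsewhere in the summary (iPhone, Galaxy, iPad, Google Pixel); only the vector length changes.

```python
import numpy as np

def cosine_similarity(a, b):
    """Cosine of the angle between a and b, for vectors of any length."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

# Hypothetical counts for [iPhone, Galaxy, iPad, Google Pixel]
doc_1 = [4, 1, 2, 0]
doc_2 = [5, 0, 3, 1]
doc_3 = [0, 6, 0, 3]

print(cosine_similarity(doc_1, doc_2))  # high: both lean toward Apple products
print(cosine_similarity(doc_1, doc_3))  # low: doc_3 leans toward Galaxy and Pixel
```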

What is the significance of the cosine similarity value of 0.9 in the context of the example?

-A cosine similarity value of 0.9 indicates a high level of similarity between two documents, suggesting that they are likely to belong to the same category or have similar content.
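If a similarity score is used to decide whether two documents belong together, a cutoff has to be chosen. The sketch below assumes a hypothetical threshold of 0.9 (the helper `likely_same_category` and the cutoff are illustrative, not a rule from the video) and shows that a cosine of 0.9 corresponds to an angle of roughly 26 degrees.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def likely_same_category(a, b, threshold=0.9):
    """Hypothetical rule: same category when similarity reaches the threshold."""
    return cosine_similarity(a, b) >= threshold

print(likely_same_category([3, 1], [6, 2]))  # True
print(likely_same_category([3, 1], [1, 3]))  # False (similarity ≈ 0.6)
print(math.degrees(math.acos(0.9)))          # ≈ 25.8 degrees
```

In practice the threshold would be tuned on labelled documents rather than fixed in advance.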

How does the transcript suggest using cosine distance for document comparison?

-The transcript suggests using cosine distance as a measure of dissimilarity, where a lower cosine distance value indicates greater similarity and a higher value indicates greater dissimilarity between documents.
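Cosine distance is convenient for ranking: sorting documents by their distance to a query document puts the most similar ones first. A minimal sketch with a hypothetical corpus of [iPhone, Galaxy] counts:

```python
import math

def cosine_distance(a, b):
    """1 minus the cosine similarity of a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1 - dot / norms

# Hypothetical corpus and query document
corpus = {"doc_1": [3, 1], "doc_2": [0, 4], "doc_3": [5, 2], "doc_4": [1, 1]}
query = [6, 2]

# Smallest distance first, i.e. most similar document first
ranked = sorted(corpus, key=lambda name: cosine_distance(query, corpus[name]))
for name in ranked:
    print(name, round(cosine_distance(query, corpus[name]), 3))
```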

Outlines

📚 Introduction to Cosine Similarity and Distance

This paragraph introduces the concepts of cosine similarity and cosine distance, explaining their application in data science. It uses the context of a data scientist working for a financial company dealing with documents related to various companies. The example given involves identifying the company associated with a document based on the frequency of mentions of certain products (e.g., iPhone and Galaxy). The paragraph discusses the limitations of a simple ratio-based approach and introduces vector mathematics as a solution to determine document similarity. It explains how the angle between vectors can represent the similarity between documents, with zero degrees indicating high similarity and larger angles indicating less similarity. The concept is further elaborated by discussing how cosine can be used to quantify this similarity on a scale from 0 to 1.

📈 Understanding Cosine Similarity in Detail

This paragraph delves deeper into the mathematical aspect of cosine similarity. It explains that cosine similarity is essentially the cosine of the angle between two vectors, which can be used to determine the similarity between documents with multiple features. The paragraph clarifies that while two-dimensional vectors can be visualized, the same mathematical principles apply to higher-dimensional vectors. The academic formula for cosine similarity is introduced, and the paragraph explains that vectors pointing in the same direction have a cosine similarity of 1, while vectors at 90 degrees have a similarity of 0, indicating very different documents. The concept of cosine distance is also introduced as a measure of dissimilarity, defined as 1 minus cosine similarity.

💻 Implementing Cosine Similarity and Distance in Python

The final paragraph focuses on the practical implementation of cosine similarity and distance in Python. It outlines how to use the scikit-learn (sklearn) library to calculate cosine similarity between two vectors, demonstrated with example vectors representing Apple and Samsung documents. The paragraph also discusses the syntax and input structure the cosine similarity function requires. It then applies this to a real-world scenario by creating a Pandas DataFrame with pre-counted frequencies of product mentions in different documents. The paragraph concludes with an example of calculating cosine similarity and distance between different document vectors and emphasizes the importance of these concepts in data science applications.


Keywords

💡Cosine Similarity

💡Cosine Distance

💡Data Science

💡Vector Mathematics

💡Financial Documents

💡Annotation

💡Competitor Analysis

💡Python

💡Pandas

💡TensorFlow

Highlights

The introduction of cosine similarity and cosine distance in data science applications.

The use case of a data scientist working for a financial company dealing with financial documents.

Identifying company associations with documents based on the frequency of product mentions, such as iPhone and Galaxy.

The concept of using ratios for document annotation and automation in metadata tagging.

The complexity introduced by additional product mentions like iPad and Google Pixel in document analysis.

The application of vector mathematics to represent and analyze document similarity.

The representation of document vectors with magnitude and direction on an x-y axis.

The use of angles between vectors to determine document similarity.

The transformation of angles into a more intuitive 0 to 1 range for document similarity.

The academic formula for cosine similarity involving dot product and magnitude of vectors.

The interpretation of cosine similarity values in relation to document similarity.

The concept of cosine distance as a measure of dissimilarity, represented as 1 minus cosine similarity.

The demonstration of cosine similarity and distance using Python's scikit-learn (sklearn) library.

The creation of a Pandas DataFrame for document feature representation.

The comparison of document similarity and distance using actual document examples.

The syntax and usage of cosine similarity and distance functions in Python.

The mention of TensorFlow's capability for cosine similarity calculations.

The conclusion and call to action for viewers to share the video with friends.

Transcripts

Browse More Related Video

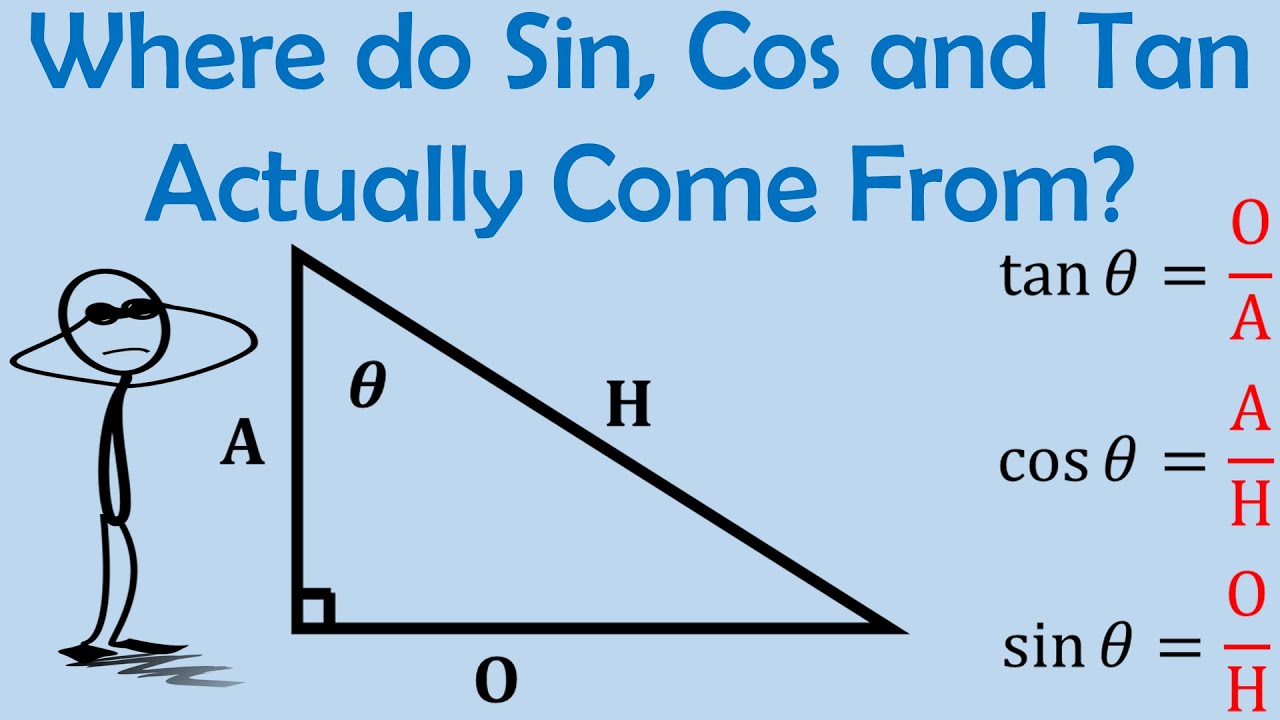

sin cos tan explained. Explanation using real life example | Math, Statistics for data science

Where do Sin, Cos and Tan Actually Come From - Origins of Trigonometry - Part 1

Trig - 0.4 Fundamental Trig Identities

Beautiful Soup 4 Tutorial #1 - Web Scraping With Python

Simple explanation of Modified Z Score | Modified Z Score to detect outliers with python code

Hyperbolic trig functions | MIT 18.01SC Single Variable Calculus, Fall 2010
