Bootstrapping and Resampling in Statistics with Example| Statistics Tutorial #12 |MarinStatsLectures

TLDRThe transcript discusses the bootstrap approach in statistical inference, a resampling method used to estimate the sampling distribution of a statistic without relying on large sample theory. It highlights the benefits of bootstrapping, especially when dealing with small samples or complex estimates like percentiles or composite measures, where calculating the standard error is challenging. The process involves taking repeated random samples with replacement from the original data set and using these to approximate the distribution and standard error. The transcript emphasizes the power of bootstrapping as a tool that can yield results nearly identical to those from traditional large sample theory, even when assumptions are not met, and is less dependent on the sample size.

Takeaways

- 📊 The bootstrap approach is a resampling method used in statistical inference for estimating the sampling distribution and standard error of a statistic.

- 🔢 It is particularly useful when dealing with small sample sizes or when the standard error calculation is complex or impossible.

- 🔄 Bootstrap resampling involves taking samples with replacement from the original dataset to create new 'bootstrap' samples of the same size.

- 🎯 The standard deviation of the bootstrap estimates is referred to as the bootstrap standard error of the mean, which serves as an estimate of the sampling distribution's variability.

- 🌟 Bootstrapping does not rely on the Central Limit Theorem, making it suitable for cases where the sampling distribution is not normally distributed.

- 🚫 The method can be influenced by outliers in the data, similar to other statistical methods that depend on the observed data.

- 💡 Increasing the number of bootstrap resamples (B) improves the estimate of the sampling distribution but does not increase the information content of the original data.

- 📈 The results from bootstrapping are often very similar to those obtained through large sample theory, even when the assumptions for the latter are not met.

- 🛠️ Bootstrapping is a powerful tool that has become more accessible with advancements in computing power.

- 🧠 The concept of bootstrapping might be challenging for some, but it is a valuable technique to understand and apply in statistical analysis.

- 📚 Further examples and comparisons between bootstrap and theoretical methods will be explored in subsequent educational content.

Q & A

What is the bootstrap approach in statistical inference?

-The bootstrap approach is a resampling method used in statistical inference that involves creating new samples from the observed data by resampling with replacement. It is used to estimate the sampling distribution of a statistic, such as the mean, without relying on large sample theory assumptions like normality.

Why might we choose to use the bootstrap approach over the large sample theory approach?

-We might choose the bootstrap approach over the large sample theory approach when we do not have a large sample size, and thus cannot assume the sampling distribution is approximately normal, or when calculating the standard error of the estimate is difficult, such as in the case of non-simple estimates like percentile ranges or composite measures.

How does the bootstrap approach help with estimating the standard error?

-The bootstrap approach helps with estimating the standard error by generating a bootstrap sampling distribution through repeated resampling of the observed data. The standard deviation of these bootstrap estimates provides an estimate of the standard error, which can be used for constructing confidence intervals or testing hypotheses.

What is the main difference between the theoretical sampling distribution and the bootstrap sampling distribution?

-The theoretical sampling distribution is based on mathematical theory and assumes a large sample size for normality, while the bootstrap sampling distribution is generated through empirical resampling of the observed data, without relying on these assumptions.

How many times should we resample in the bootstrap approach?

-The number of resamples in the bootstrap approach can vary, but it is generally recommended to use at least 10,000 or more to get a reliable estimate of the standard error. The exact number depends on the available computing power and the desired precision of the estimate.

Does the bootstrap approach increase the amount of information in the data?

-No, increasing the number of resamples in the bootstrap approach does not increase the amount of information in the data. It only provides a more stable estimate of the sampling distribution and standard error based on the existing data.

What happens if an extreme value is present in the observed data?

-If an extreme value is present in the observed data, it may appear multiple times in the resamples and could potentially affect the bootstrap estimates. However, this is similar to how an outlier affects the large sample approach by skewing the mean and inflating the standard deviation.

How does the bootstrap approach handle non-representative samples?

-The bootstrap approach assumes that the sample is representative of the population. If the sample is not representative, the bootstrap estimates will also not be representative, and the resulting bootstrap sampling distribution will not accurately reflect the true population distribution.

What are some advantages of the bootstrap approach?

-The bootstrap approach is advantageous because it is flexible and does not rely on strict assumptions like normality or large sample sizes. It is also powerful in that it can be applied to complex estimates and provides a way to estimate the sampling distribution and standard error when traditional methods are difficult or impossible to apply.

How does the bootstrap approach relate to modern computing?

-The bootstrap approach became more widely used with the advent of modern computing power. It allows for repeated resampling, which was previously time-consuming and impractical without the ability to perform large numbers of calculations efficiently.

What are some potential limitations of the bootstrap approach?

-While the bootstrap approach is powerful, it does have limitations. It relies on the assumption that the original sample is representative of the population. Additionally, it may require significant computing resources when dealing with a large number of resamples, and it may not be as efficient as other methods when strong theoretical properties are known to hold.

Outlines

📊 Introduction to Bootstrap Method in Statistical Inference

This paragraph introduces the concept of the bootstrap method in the context of statistical inference. It contrasts the parametric or large sample approach, which relies on the sampling distribution and standard error, with the bootstrap approach. The discussion focuses on estimating the mean of a numeric variable and highlights the limitations of the parametric approach when dealing with small sample sizes or complex estimates like percentile ranges or composite measures. The paragraph sets the stage for explaining the bootstrap method as an alternative that doesn't require large sample sizes and can handle more complex estimation scenarios.

🔄 Understanding the Bootstrap Resampling Process

This paragraph delves into the mechanics of the bootstrap resampling process. It explains how to create a bootstrap sample by randomly selecting observations from the original sample with replacement, thereby generating a new set of estimates. The process is repeated multiple times (B times) to build a bootstrap sampling distribution, which is used to estimate the standard error of the mean. The paragraph emphasizes that the bootstrap approach is based on the assumption that the sample is representative of the population and that the number of resamples is limited by time and computing power rather than the amount of information in the data.

📈 Bootstrap Standard Error and Sampling Distribution

The paragraph discusses the calculation of the bootstrap standard error and the creation of a bootstrap sampling distribution. It explains how resampling with replacement can lead to more reliable estimates of the standard error by simulating a larger number of possible estimates. The paragraph also addresses the concern that extreme values in the data might skew the bootstrap results, but argues that the bootstrap approach relies on the data to the same extent as the large sample approaches, making it a powerful tool for statistical inference. The paragraph concludes by noting the relative recency of the bootstrap method and its dependence on computing power.

🤔 Addressing Concerns about Bootstrapping

In this paragraph, the speaker addresses a common concern about the bootstrap method's reliance on observed data, particularly the impact of outliers. It explains that while outliers can appear multiple times in resamples and potentially skew the results, the large sample approach is also affected by such extreme values. The paragraph reassures that the bootstrap method is as dependent on the quality of the observed data as any other statistical method and highlights its robustness and power. The speaker also encourages viewers to explore the bootstrap method further through upcoming videos and ends with a call to action for viewers to subscribe to the channel.

Mindmap

Keywords

💡bootstrap approach

💡sampling distribution

💡standard error

💡large sample theory

💡resampling

💡population

💡sample mean

💡standard deviation

💡percentiles

💡composite measure

💡confidence intervals

Highlights

Introduction to the bootstrap approach in statistical inference.

Bootstrap method as an alternative to large sample theory.

Use of bootstrap when sample size is not large or normality cannot be assumed.

Challenges in calculating standard error for complex estimates like percentile ranges.

The concept of resampling with replacement to generate new sample estimates.

Bootstrap standard error as an alternative to theoretical standard error.

Procedure for bootstrap resampling illustrated with a simple example.

Bootstrapping can be repeated a large number of times for a more reliable estimate.

Limitations of resampling in terms of computational power and time.

Bootstrapping results are nearly identical to large sample theory outcomes.

Bootstrap approach is always valid, regardless of the assumptions met in large sample theory.

Addressing concerns about the influence of extreme values in bootstrapping.

Bootstrapping is a powerful tool that has been gaining traction in the academic world.

The dependency on computing power for the practical application of bootstrap methods.

Upcoming videos will explore bootstrapping further, including constructing confidence intervals and hypothesis testing.

Encouragement for viewers to subscribe for more content on statistical methods.

Transcripts

Browse More Related Video

Bootstrap Confidence Interval with Examples | Statistics Tutorial #36 | MarinStatsLectures

Bootstrap Hypothesis Testing in Statistics with Example |Statistics Tutorial #35 |MarinStatsLectures

Lecture 5: Law of Large Numbers & Central Limit Theorem

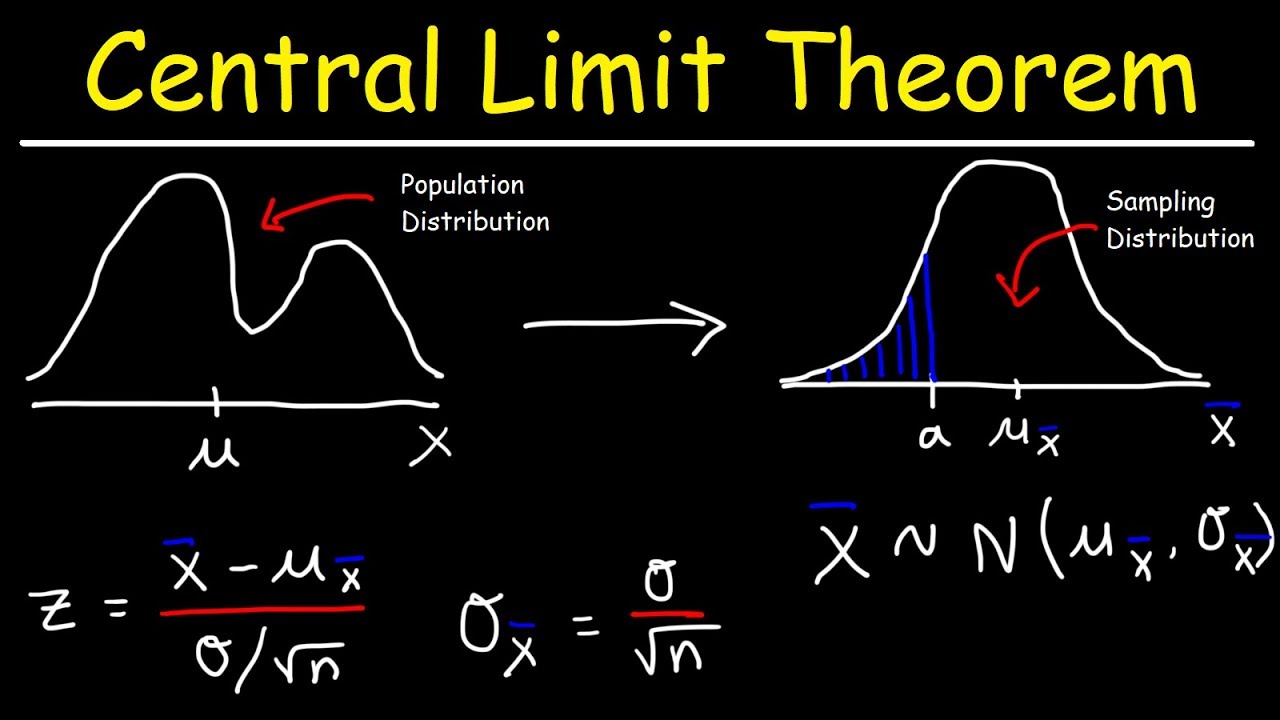

Central Limit Theorem - Sampling Distribution of Sample Means - Stats & Probability

What is a Sampling Distribution? | Puppet Master of Statistics

SAMPLING DISTRIBUTION OF SAMPLE MEANS - WITH AND WITHOUT REPLACEMENT

5.0 / 5 (0 votes)

Thanks for rating: