Multivariable maxima and minima

TLDR: This script explores how to maximize multivariable functions, a task central to practical applications such as maximizing a company's profits or minimizing a cost function in machine learning. It explains that at a maximum all the partial derivatives must be zero, which corresponds to a flat tangent plane at the peak. However, this condition alone doesn't guarantee a global maximum, because local maxima and minima also satisfy it. The script introduces gradient notation to express this requirement concisely and hints at saddle points, which a subsequent video discusses.

Takeaways

- 📚 Multivariable functions take in multiple inputs and produce a single output, often representing real-world scenarios like company profits.

- 🔍 Maximizing a multivariable function involves finding the input values that yield the highest output, such as maximizing profits by adjusting factors like wages or prices.

- 🤖 In machine learning and AI, cost functions are used to measure how wrong a model's predictions are, with the goal of minimizing this function to improve performance.

- 📈 The process of maximizing a function is conceptually similar to finding the peak of a mountain, where the input values correspond to the location of the peak.

- 🔧 To find the maximum, consider the tangent plane at the peak. At a peak no direction leads uphill, so the tangent plane there must be flat; a point with a flat tangent plane is therefore a candidate for a maximum.

- 📉 The key observation is that at a maximum the tangent plane is flat, which mathematically means that all of the function's partial derivatives are zero.

- 🔄 Setting all the partial derivatives to zero gives a system of equations whose solutions are candidate maximum points, but further analysis is needed to confirm whether a candidate is a true maximum.

- 🏔 Local maxima are points where the function is higher than its immediate neighbors but not necessarily the highest overall, similar to smaller mountains compared to the tallest.

- 🏞 The condition of a flat tangent plane (all partial derivatives being zero) is also met at local and global minima, so additional tests are needed to tell maxima and minima apart.

- 📊 The gradient of a function, a vector containing all partial derivatives, must equal the zero vector to indicate a potential maximum or minimum, but this alone is not conclusive.

- 🛤️ Beyond local maxima and minima, there are saddle points in multivariable calculus where the tangent plane is flat but the point is neither a maximum nor a minimum, a concept to be explored further.
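
Written out for a function f of two inputs, the flat-tangent-plane condition from the takeaways becomes the equation below. The symbol (x_0, y_0) for the candidate point is notation chosen here for illustration; for n inputs the same condition reads ∇f = 0, one equation per partial derivative.

```latex
\nabla f(x_0, y_0) =
\begin{bmatrix}
  \dfrac{\partial f}{\partial x}(x_0, y_0) \\[6pt]
  \dfrac{\partial f}{\partial y}(x_0, y_0)
\end{bmatrix}
=
\begin{bmatrix} 0 \\ 0 \end{bmatrix}
```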

Q & A

What is the primary goal when dealing with a multivariable function in practical applications?

-The primary goal is often to maximize or minimize the function, depending on the context. For example, in business, one might want to maximize profits by finding the optimal values for variables such as employee wages, product prices, or capital debt.

How does the concept of maximizing a multivariable function relate to machine learning and artificial intelligence?

-In machine learning and AI, one designs a cost function for the task at hand; it measures the error, or 'wrongness', of the model's predictions. Minimizing the cost function makes the computer perform the task more accurately.
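
The video does not name a specific minimization technique. As an illustration only, the sketch below swaps in plain gradient descent, a common choice, to minimize a mean-squared-error cost for fitting a line y ≈ w·x + b. The data, the learning rate `lr`, and the step count are assumptions made up for this example.

```python
# Minimal sketch (assumptions: toy data, lr=0.05, 2000 steps): minimize a
# mean-squared-error cost for a line y ~ w*x + b with plain gradient descent.

def cost(w, b, xs, ys):
    """Mean squared error: how 'wrong' the line's predictions are."""
    n = len(xs)
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / n

def cost_gradient(w, b, xs, ys):
    """Partial derivatives of the cost with respect to w and b."""
    n = len(xs)
    dw = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    db = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return dw, db

def gradient_descent(xs, ys, lr=0.05, steps=2000):
    w, b = 0.0, 0.0
    for _ in range(steps):
        dw, db = cost_gradient(w, b, xs, ys)
        # Step against the gradient, the direction of steepest decrease.
        w -= lr * dw
        b -= lr * db
    return w, b

xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # hypothetical data lying exactly on y = 2x + 1
w, b = gradient_descent(xs, ys)
print(round(w, 3), round(b, 3))  # 2.0 1.0
```

Each step moves (w, b) against the gradient of the cost. Once the gradient is (numerically) the zero vector, the updates no longer change the parameters, which is the same zero-partial-derivative condition described in this section.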

What does it mean for a function to be at a maximum in the context of its graph?

-A function reaches its maximum at the input values where its output is largest. On the graph this is the peak, analogous to the summit of the tallest mountain in a landscape: the location of the peak gives the maximizing inputs, and its height gives the maximum output value.

Why is the concept of a tangent plane important in finding the maximum of a function?

-If the tangent plane at a point on the graph is flat, the function's slope is zero in every direction from that point, so a small step in any direction does not change the function's value to first order. At a peak this must be the case, since no direction leads uphill, so a flat tangent plane marks a potential maximum.
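
To make 'flat in every direction' concrete: for a differentiable function, the slope of the tangent plane in the direction of a unit vector u is the dot product ∇f · u (the directional derivative). The sketch below checks that slope in eight directions for a hypothetical paraboloid f(x, y) = 3 - (x - 1)^2 - (y + 2)^2, whose peak is at (1, -2); the function and helper names are illustrative assumptions.

```python
import math

# Hypothetical example surface with a single peak at (1, -2), height 3.
def f(x, y):
    return 3 - (x - 1) ** 2 - (y + 2) ** 2

def grad_f(x, y):
    # Partial derivatives of f, worked out by hand.
    return (-2 * (x - 1), -2 * (y + 2))

def directional_slope(x, y, angle):
    """Slope of the tangent plane at (x, y) in the direction given by `angle`."""
    gx, gy = grad_f(x, y)
    ux, uy = math.cos(angle), math.sin(angle)  # unit direction vector
    return gx * ux + gy * uy

angles = [2 * math.pi * k / 8 for k in range(8)]
at_peak = [directional_slope(1, -2, a) for a in angles]
elsewhere = [directional_slope(0, 0, a) for a in angles]
print(max(abs(s) for s in at_peak))  # 0.0: flat in every sampled direction
print(max(elsewhere) > 0)            # True: some direction leads uphill
```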

What mathematical condition must be satisfied at a point to indicate a potential maximum or minimum of a function?

-All the partial derivatives of the function, with respect to each variable, must be zero at that point. This corresponds to a flat tangent plane, which is a necessary condition for a local maximum or minimum of a differentiable function.
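
For a quadratic function, setting the partial derivatives to zero yields a linear system that can be solved directly. The function below is a hypothetical example, and `solve_2x2` is a small helper written for this sketch using Cramer's rule.

```python
# Hypothetical quadratic:
#   f(x, y) = -x**2 - x*y - y**2 + 4*x + 5*y
# Setting its partial derivatives to zero gives a linear system:
#   df/dx = -2x -  y + 4 = 0   ->  2x +  y = 4
#   df/dy =  -x - 2y + 5 = 0   ->   x + 2y = 5

def f(x, y):
    return -x**2 - x*y - y**2 + 4*x + 5*y

def solve_2x2(a, b, c, d, e, g):
    """Solve a*x + b*y = e and c*x + d*y = g by Cramer's rule."""
    det = a * d - b * c
    if det == 0:
        raise ValueError("system has no unique solution")
    return (e * d - b * g) / det, (a * g - e * c) / det

x0, y0 = solve_2x2(2, 1, 1, 2, 4, 5)
print(x0, y0, f(x0, y0))  # 1.0 2.0 7.0
```

The point (1, 2) is only a candidate: the zero-gradient condition by itself does not say whether it is a maximum, so a further test is still needed, which this sketch does not perform.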

Why is it not sufficient to find a point where all partial derivatives are zero?

-Finding a point where all partial derivatives are zero is necessary but not sufficient: the point could be a local maximum that is not the global maximum, a local or global minimum, or a saddle point.
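
A hypothetical function shows how a zero gradient can mislead. For f(x, y) = x^3 - 3x - y^2, the partial derivatives 3x^2 - 3 and -2y both vanish at (-1, 0) and at (1, 0). The sketch compares f at each point with four nearby points; this neighbor check (the `probe` helper and step `h` are assumptions for illustration) is only a rough heuristic, not a rigorous test.

```python
# Hypothetical example: f(x, y) = x**3 - 3*x - y**2
#   df/dx = 3*x**2 - 3  -> zero at x = 1 or x = -1
#   df/dy = -2*y        -> zero at y = 0
# So the gradient vanishes at (-1, 0) and at (1, 0).

def f(x, y):
    return x**3 - 3*x - y**2

def probe(x0, y0, h=1e-3):
    """Count how many of four axis-aligned neighbors are higher/lower than (x0, y0)."""
    center = f(x0, y0)
    neighbors = [f(x0 + h, y0), f(x0 - h, y0), f(x0, y0 + h), f(x0, y0 - h)]
    higher = sum(v > center for v in neighbors)
    lower = sum(v < center for v in neighbors)
    return center, higher, lower

for point in [(-1, 0), (1, 0)]:
    print(point, probe(*point))

print(f(3, 0))  # 18
```

At (-1, 0) all four neighbors are lower, consistent with a local maximum of height 2, yet f(3, 0) = 18 shows it is not the global maximum. At (1, 0) the function rises along the x-direction and falls along the y-direction, the signature of a saddle point.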

What is the term used to describe the points on a function's graph that are higher than their immediate neighbors but not the highest overall?

-These points are called local maxima (or relative maxima): they are maxima only relative to the nearby region of the graph.
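
Local maxima matter in practice because hill-climbing methods find the peak nearest to where they start, not necessarily the tallest one. The sketch below uses a made-up two-peak landscape and plain gradient ascent (both assumptions for illustration, as are the learning rate and step count) and starts from two different points.

```python
import math

# Hypothetical landscape: a tall peak near (0, 0) (height about 2)
# and a shorter peak near (3, 0) (height about 1).
def f(x, y):
    return 2 * math.exp(-(x**2 + y**2)) + math.exp(-((x - 3)**2 + y**2))

def grad_f(x, y):
    a = 2 * math.exp(-(x**2 + y**2))
    b = math.exp(-((x - 3)**2 + y**2))
    # Sum of the partial derivatives of the two Gaussian bumps.
    return (-2 * x * a - 2 * (x - 3) * b, -2 * y * a - 2 * y * b)

def gradient_ascent(x, y, lr=0.1, steps=500):
    for _ in range(steps):
        gx, gy = grad_f(x, y)
        x, y = x + lr * gx, y + lr * gy  # step uphill along the gradient
    return x, y

for start in [(-0.5, 0.5), (3.5, 0.5)]:
    x, y = gradient_ascent(*start)
    print(start, "->", (round(x, 2), round(y, 2)), "height", round(f(x, y), 2))
```

The first run climbs to the taller peak near (0, 0); the second settles on the shorter peak near (3, 0). Both endpoints have a (numerically) zero gradient, but only the first is the global maximum.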

What is the term used to describe the lowest point on a function's graph, considering all possible input values?

-This point is referred to as the global minimum, which is the absolute lowest value the function can take.

What is the gradient of a function and how does it relate to finding maxima and minima?

-The gradient of a function is a vector containing all the partial derivatives of the function with respect to its variables. Setting the gradient equal to the zero vector is a way to succinctly express the condition that all partial derivatives must be zero to find potential maxima, minima, or saddle points.
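
When partial derivatives are hard to derive by hand, the gradient can be approximated numerically. The sketch below uses central differences, (f(x + h·e_i) - f(x - h·e_i)) / (2h), for each input. The helper names, the step size `h`, the tolerance `tol`, and the test function are assumptions for illustration; with a finite tolerance the check can only say the gradient is approximately the zero vector.

```python
# Minimal sketch: approximate the gradient with central differences and
# test whether it is (numerically) the zero vector.

def numerical_gradient(f, point, h=1e-6):
    grad = []
    for i in range(len(point)):
        forward = list(point)
        backward = list(point)
        forward[i] += h
        backward[i] -= h
        # Central-difference estimate of the partial derivative w.r.t. input i.
        grad.append((f(forward) - f(backward)) / (2 * h))
    return grad

def is_critical_point(f, point, tol=1e-6):
    """True if every estimated partial derivative is within tol of zero."""
    return all(abs(g) < tol for g in numerical_gradient(f, point))

# Hypothetical three-input function with its minimum at (1, 2, 3).
def f(v):
    x, y, z = v
    return (x - 1) ** 2 + (y - 2) ** 2 + (z - 3) ** 2

print(is_critical_point(f, [1.0, 2.0, 3.0]))  # True
print(is_critical_point(f, [0.0, 0.0, 0.0]))  # False
```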

What is a saddle point and how does it differ from a local or global maximum?

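-A saddle point is a point on the graph of a function where the tangent plane is flat, but the function has neither a local maximum nor a local minimum there. Along one direction the point looks like a peak, while along another it looks like a dip. Saddle points arise in multivariable calculus because a function can curve downward along one direction and upward along another.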
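
A standard illustration, chosen here rather than taken from the video, is f(x, y) = x^2 - y^2 at the origin: both partial derivatives vanish there, yet the two cross-sections through the origin curve in opposite directions.

```latex
\begin{aligned}
f(x, y) &= x^2 - y^2, \qquad
\nabla f(x, y) = \begin{bmatrix} 2x \\ -2y \end{bmatrix}, \qquad
\nabla f(0, 0) = \begin{bmatrix} 0 \\ 0 \end{bmatrix} \\
f(x, 0) &= x^2 \quad \text{(smallest at } x = 0\text{)} \\
f(0, y) &= -y^2 \quad \text{(largest at } y = 0\text{)}
\end{aligned}
```

So the origin is the lowest point along the x-axis and the highest along the y-axis: a saddle point, not an extremum.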

Outlines

📈 Maximizing Multivariable Functions

This paragraph introduces the concept of maximizing a multivariable function, that is, a function that takes multiple inputs and produces a single output. The goal is to find the specific input values that yield the highest possible output. The paragraph gives real-world applications, such as maximizing a company's profits by adjusting variables like employee wages, product prices, or capital debt. It also touches on the relevance of this idea in machine learning and artificial intelligence, where minimizing a cost function trains a computer to perform tasks accurately. The explanation is grounded in the idea of finding the 'peak' of the function's graph, whose location gives the maximizing inputs. The paragraph concludes with the mathematical approach to identifying this peak: because the function does not increase in any direction from the peak, its partial derivatives must all be zero there.

🔍 Understanding Local Maxima, Minima, and Saddle Points

This paragraph delves deeper into what it means to find points where all the partial derivatives of a function are zero. It clarifies that such points do not automatically indicate a global maximum; they could also be local maxima or minima. Local maxima are points where the function value is the highest relative to their immediate neighbors, but not necessarily the highest across the function's entire domain. The paragraph also introduces saddle points, where the tangent plane is flat but the point is neither a local maximum nor a local minimum. It emphasizes that tests beyond checking where the gradient equals zero are needed to determine the nature of these critical points. The paragraph concludes with the gradient vector notation, which concisely expresses the condition that all partial derivatives are zero, and sets the stage for the next video, which explores saddle points in more detail.


Keywords

💡Multivariable function

💡Maximize

💡Cost function

💡Partial derivatives

💡Tangent plane

💡Local Maxima

💡Global Maximum

💡Gradient

💡Saddle point

💡Optimization

Highlights

Maximizing a multivariable function involves finding input values that yield the highest output.

The practical application of maximizing functions includes optimizing company profits by considering various business decisions.

In machine learning, cost functions are used to measure how wrong a computer's guess is, with the goal of minimizing this function to improve performance.

The art and science of machine learning involve designing cost functions and applying techniques to minimize them efficiently.

Conceptually, finding the maximum of a multivariable function is akin to finding the peak of a graph representing the function.

At the peak, no direction increases the function's value, so the tangent plane to the graph is flat.

The slope of the tangent plane in any direction at the peak is zero, which corresponds to the function's partial derivatives being zero.

Partial derivatives of a function at a point equal to zero indicate a potential maximum, but further testing is required to confirm.

Local maxima are points where the function is higher than its immediate neighbors but not necessarily the highest overall.

Global maxima represent the absolute highest point of the function, unlike local maxima which are relative to their surroundings.

Minima also have flat tangent planes, but they correspond to the lowest values of the function rather than the highest.

The gradient of a function, a vector of all partial derivatives, must equal the zero vector to indicate a potential extremum.

Gradient notation expresses the requirement for finding extrema compactly, as the single equation ∇f = 0.

Saddle points are a feature of multivariable calculus where the tangent plane is flat, but the point is neither a maximum nor a minimum.

The concept of saddle points will be further explored in subsequent videos to understand their significance in multivariable functions.

Finding extrema involves not only setting the partial derivatives to zero but also applying additional tests to determine whether each critical point is a maximum, a minimum, or a saddle point.

The video transcript provides a foundational understanding of multivariable calculus concepts, setting the stage for more detailed exploration in future lessons.

Transcripts

Browse More Related Video

Critical points introduction | AP Calculus AB | Khan Academy

Saddle points

Lec 9: Max-min problems; least squares | MIT 18.02 Multivariable Calculus, Fall 2007

Second partial derivative test

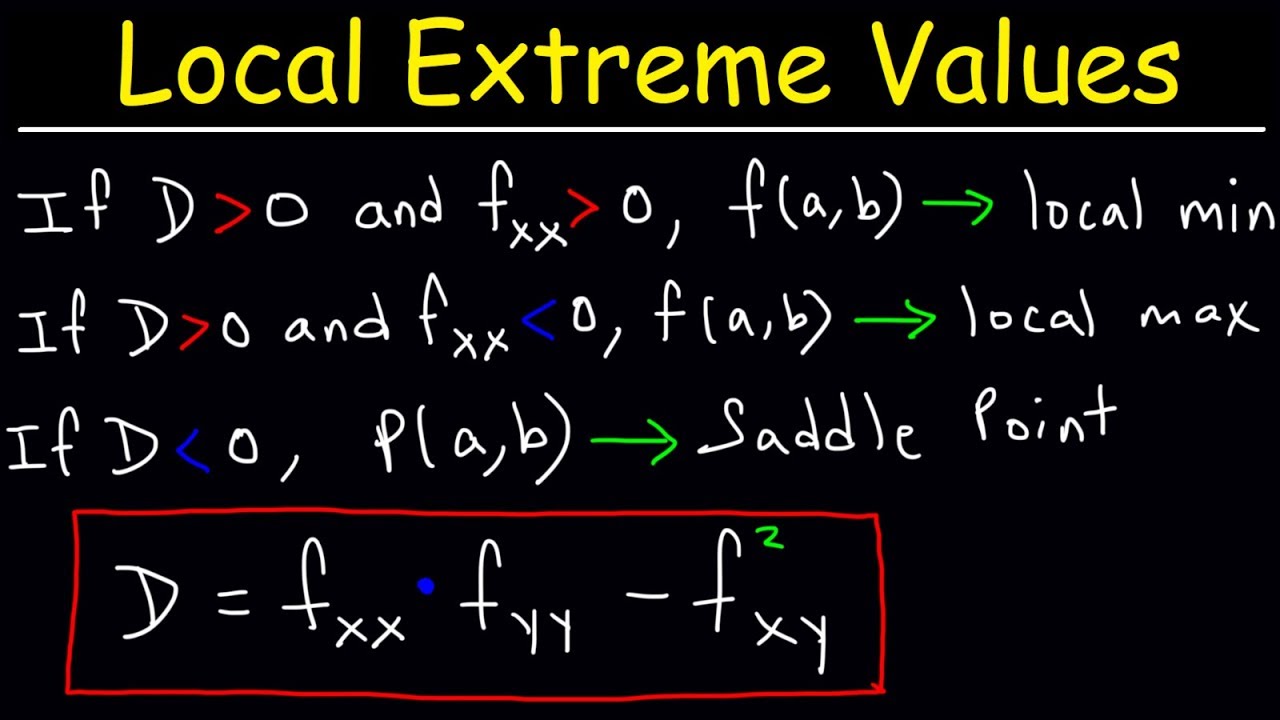

Local Extrema, Critical Points, & Saddle Points of Multivariable Functions - Calculus 3

Find and Classify all Critical Points of a Multivariable Function

5.0 / 5 (0 votes)

Thanks for rating: