LinkedIn Metric Interview Question and Answer: Tips for Data Science Interview Success!

TLDR

In this video, Emma addresses a real metric interview question from the book 'Decode and Conquer,' which is beneficial for both product management and data science interviews. She discusses LinkedIn's feature of asking new users to upload a profile photo during sign-up and the importance of clarifying the feature's purpose. Emma suggests three key metrics for evaluating the feature's success: user engagement, the percentage of new users uploading photos, and a guardrail metric to ensure no negative impact on the sign-up process. She emphasizes the significance of choosing metrics that are easy to measure and fit the context, providing a sample answer to guide viewers through the thought process.

Takeaways

- 📚 Emma introduces a real metric interview question from the book 'Decode and Conquer', which is useful for both product management and data science interviews.

- 🔍 The original question is about evaluating the success of a new LinkedIn feature that asks users to upload a profile photo during the sign-up phase.

- 🤔 Emma emphasizes the importance of understanding the feature's function and the business goal, which in this case is to improve user engagement and possibly detect fraud.

- 📈 She suggests three metrics, two success metrics and one guardrail metric, and stresses interacting with the interviewer to give a comprehensive answer.

- 🎯 The first success metric is user engagement, measured by the average number of invites sent per user per day or the number of posts made.

- 📷 The second success metric is the percentage of new users who upload their profile photos, which should be higher in the treatment group than in the control group to validate the feature's usefulness.

- 🚧 The guardrail metric is to monitor the percentage of users who drop off during the sign-up process, ensuring it does not increase due to the new feature.

- 🔢 Emma discusses the importance of seeing real numbers and p-values to make data-informed decisions, especially when there are trade-offs between metrics.

- 💡 She advises that if the feature significantly increases engagement but decreases the number of users completing sign-up, it might still be worth launching, because the primary goal is to improve engagement.

- 📝 Emma shares tips on choosing metrics: they should be easy to measure and fit the context, rather than simply following a generic framework like AARRR.

- 🤝 Interaction with the interviewer is crucial, as it shows the candidate's ability to clarify questions and think on their feet.

- 👋 Emma invites feedback on her approach to answering the interview question and encourages viewers to share their thoughts.

Q & A

What is the main purpose of the video?

- The main purpose of the video is to discuss a real metric interview question from the book 'Decode and Conquer' and to provide a structured approach to answer such questions, particularly in the context of a data science interview.

Why is the book 'Decode and Conquer' mentioned in the video?

- The book 'Decode and Conquer' is mentioned because it is a resource about product management interviews, but the speaker found it helpful for data science interviews as well, especially for metric-based questions.

What is the original interview question discussed in the video?

- The original interview question is about evaluating the success of a new feature on LinkedIn that asks new users to upload their profile photo during the sign-up phase instead of after.

Why is the question considered typical for a data science interview?

- The question is considered typical for a data science interview because it involves evaluating the success of a feature using metrics. This draws on core data science competencies: designing experiments, analyzing results, and making data-informed decisions.

What is the issue with the simple answer provided by the book according to the speaker?

- The issue with the simple answer provided by the book is that the candidate does not clarify the function of the feature and comes up with many metrics that are not very feasible in A/B tests.

What are the two success metrics suggested by the speaker to evaluate the new feature?

- The two success metrics suggested by the speaker are the average number of invites sent per user per day (to measure user engagement) and the percentage of new users who upload their profile photos (to measure the usefulness of the feature).

What is the 'guardrail metric' mentioned in the video and why is it important?

- The 'guardrail metric' is the percentage of users who drop off in the sign-up process. It is important because it checks that the new feature does not degrade the overall user experience or reduce the sign-up completion rate.

What is the goal of the new feature according to the speaker's interpretation?

- The goal of the new feature, as interpreted by the speaker, is to improve user engagement by having more users upload their photos during the sign-up process, which may also help in detecting fraudulent accounts.

How does the speaker suggest interacting with the interviewer during the interview?

- The speaker suggests clarifying the question, coming up with relevant metrics, and interacting with the interviewer to ensure a mutual understanding of the business goal and the potential impact of the new feature.

What is the speaker's advice on choosing metrics for an A/B test in an interview?

- The speaker advises that for A/B tests, providing three metrics is usually sufficient, and the chosen metrics should be easy to measure and fit the context of the specific use case to avoid sounding robotic.

What is the final recommendation made by the speaker based on the hypothetical test results?

- The final recommendation made by the speaker is to launch the feature, as having more engaged users, even with a slight decrease in sign-up completion, aligns with the current objective of improving engagement.

Outlines

📘 Introduction to Data Science Interview Question

Emma introduces a video focusing on a real metric interview question from the book 'Decode and Conquer', which is beneficial for both product management and data science interviews. She addresses the need for a more practical approach than theoretical frameworks, especially for evaluating the success of a product feature. The specific question involves LinkedIn testing a new feature that prompts users to upload a profile photo during the sign-up phase. Emma aims to provide a detailed answer that reflects a data science interview scenario, emphasizing the importance of clarifying the feature's function and setting measurable goals.

📊 Analyzing Metrics for Feature Success

In this paragraph, Emma discusses the approach to evaluating the success of a new feature that requires users to upload a profile photo during the sign-up process. She emphasizes the importance of understanding the feature's purpose, which is to improve user engagement by ensuring more users complete their profiles. Emma suggests two success metrics: user engagement, measured by the average number of invites or posts per user per day, and the percentage of new users uploading photos, which should be higher than the control group. Additionally, she proposes a guardrail metric to monitor the sign-up drop-off rate, ensuring it does not increase due to the new feature. Emma also addresses the importance of context in choosing metrics and the need for them to be measurable and relevant to the specific use case.
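As a rough illustration, the three metrics described above could be computed per experiment group along the following lines. This is a minimal sketch, not the method shown in the video: the `NewUser` record, its field names, the group labels, and the choice to compute the photo rate and invites only over users who completed sign-up are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class NewUser:
    # All fields are hypothetical; a real event log would look different.
    group: str              # "control" or "treatment"
    completed_signup: bool  # did the user finish the sign-up flow?
    uploaded_photo: bool    # did the user upload a profile photo?
    invites_sent: int       # invites sent during the observation window
    days_observed: int      # length of the observation window in days


def summarize(users, group):
    """Compute the two success metrics and the guardrail metric for one group."""
    started = [u for u in users if u.group == group]
    completed = [u for u in started if u.completed_signup]
    if not started or not completed:
        raise ValueError(f"not enough users in group {group!r}")

    # Guardrail metric: share of users who started sign-up but did not finish.
    drop_off_rate = 1 - len(completed) / len(started)

    # Success metric: share of signed-up users with a profile photo
    # (assumed denominator: users who completed sign-up).
    photo_upload_rate = sum(u.uploaded_photo for u in completed) / len(completed)

    # Success metric: average invites per user per day over the window.
    invites_per_user_per_day = (
        sum(u.invites_sent / u.days_observed for u in completed) / len(completed)
    )

    return {
        "drop_off_rate": drop_off_rate,
        "photo_upload_rate": photo_upload_rate,
        "invites_per_user_per_day": invites_per_user_per_day,
    }
```

Calling `summarize(users, "control")` and `summarize(users, "treatment")` on the same list gives the two sets of numbers to compare. Fixing `days_observed` to one value for every user (for example, the first 7 days after sign-up) is one way to give the engagement metric a clear time threshold.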

🔍 Evaluating Test Results and Decision Making

Emma explores a hypothetical scenario where the percentage of users uploading profile photos increases, but the number of users completing the sign-up process decreases. She stresses the importance of reviewing actual data to assess the business impact and make informed decisions. Emma explains that if the increase in engagement is significant, but there is also a significant drop in sign-up completion, it's a trade-off situation. Given the primary objective is to improve engagement, she recommends launching the feature despite the potential drawbacks. The paragraph concludes with advice on how to answer such open-ended interview questions effectively, emphasizing the importance of clarity, relevance, and context in metric selection.
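To make the "real numbers and p-values" step concrete, here is a minimal sketch of a two-sided two-proportion z-test, which is one common way to compare a rate metric (such as the photo upload rate or the sign-up drop-off rate) between control and treatment. The video does not specify which test to use, so the choice of test, the function name, and its parameters are assumptions for illustration.

```python
import math


def two_proportion_z_test(x_control, n_control, x_treatment, n_treatment):
    """Two-sided z-test for the difference between two proportions.

    x_* is the number of users with the outcome (e.g. uploaded a photo),
    n_* is the number of users in the group.
    Returns (difference, z statistic, p-value).
    """
    p_control = x_control / n_control
    p_treatment = x_treatment / n_treatment

    # Pooled proportion under the null hypothesis of no difference.
    pooled = (x_control + x_treatment) / (n_control + n_treatment)
    standard_error = math.sqrt(
        pooled * (1 - pooled) * (1 / n_control + 1 / n_treatment)
    )

    z = (p_treatment - p_control) / standard_error

    # Two-sided p-value from the standard normal distribution:
    # 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_treatment - p_control, z, p_value
```

In the trade-off scenario above, the test would be run once on the photo upload rate and once on the drop-off rate. If both differences are statistically significant, the sizes of the two effects are what inform the launch decision, weighed against the primary goal of improving engagement. Averages such as invites per user per day are not proportions, so they would need a different test, for example a t-test.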

Keywords

💡Metric Interview Question

💡Frameworks

💡Product Management Interviews

💡Data Science Interviews

💡A/B Testing

💡Profile Photo

💡User Engagement

💡Metrics

💡Guardrail Metric

💡Significant

💡Fraud Detection

Highlights

Emma discusses the value of sharing real interview questions over frameworks in her channel's content.

The video covers a real metric interview question from the book 'Decode and Conquer', useful for product management and data science interviews.

The original interview question involves evaluating the success of a new LinkedIn feature asking users to upload a profile photo during sign-up.

Emma emphasizes the importance of understanding the function of the feature in the context of the business goal before selecting metrics.

The goal of the new feature is hypothesized to be improving user engagement by having more users upload their photos during sign-up.

A secondary goal could be to detect fraud by adding credibility to new accounts through profile photos.

Three key metrics are proposed to evaluate the feature: user engagement, usefulness of the feature, and a guardrail metric.

User engagement is measured by the average number of invites sent per user per day or the number of posts made.

The usefulness metric is the percentage of new users who upload their profile photos, expected to be higher in the treatment group than in the control group.

A guardrail metric monitors the percentage of users who drop off during the sign-up process to ensure it does not increase.

Emma advises on the importance of seeing real numbers and p-values to make data-informed decisions.

A scenario is presented where an increase in photo uploads is offset by a decrease in sign-ups, prompting a difficult decision.

The recommendation is made to launch the feature if more engaged users are deemed valuable despite the trade-off.

The candidate's approach to clarifying the question, selecting relevant metrics, and interacting with the interviewer is praised.

Different metrics could be chosen for the question, and providing 3 metrics is usually sufficient for an A/B test.

Metrics should be easy to measure and include a time threshold for clarity and practicality.

The importance of context in choosing metrics is stressed, to avoid sounding robotic and to make a convincing argument.

Emma closes by inviting feedback on the sample answer and encouraging viewers to share their own approaches to the question.

Transcripts

Browse More Related Video

Lyft/Uber Metric Interview Question and Answer: Tips for Data Science Interview Success!

Crack Metric/Business Case/Product Sense Problems for Data Scientists | Data Science Interviews

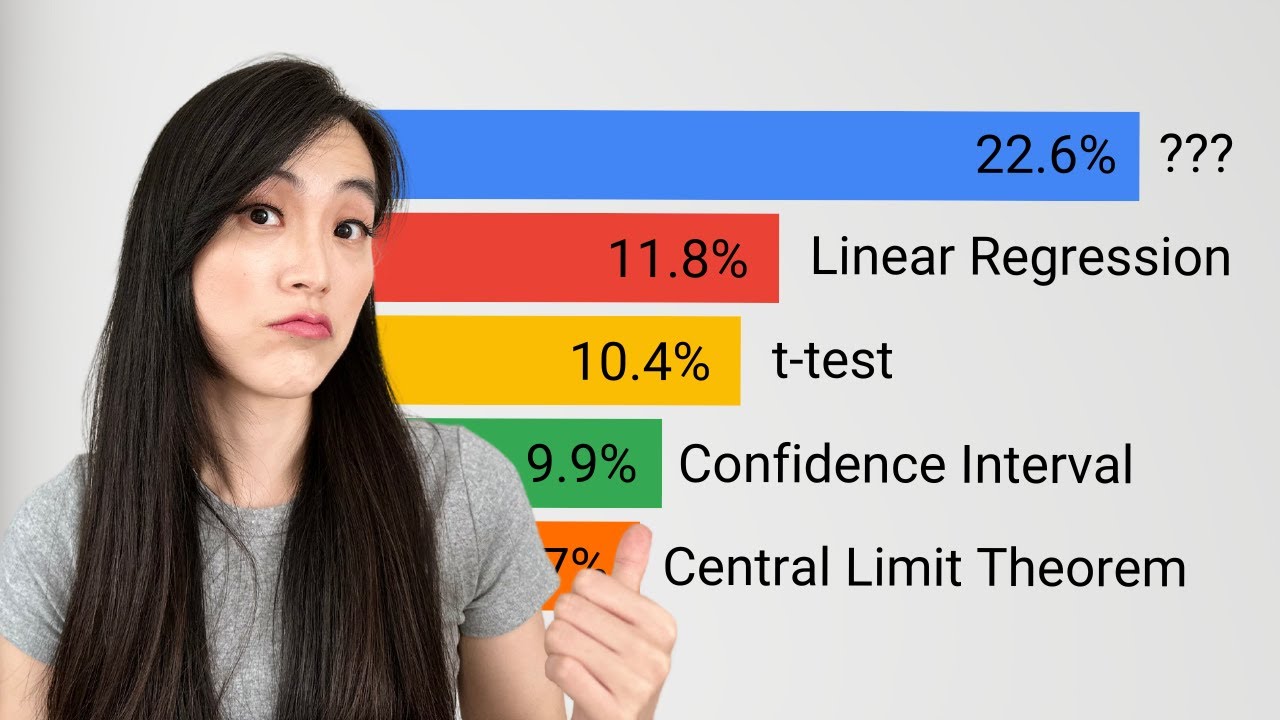

Ace Statistics Interviews: A Data-driven Approach For Data Scientists

How to setup on screen stats in games with MSI Afterburner and RivaTuner.

Baseball Advanced Stats Explained (Sort Of): Hitting

RescueNet® CaseReview - Module 6 How to trend CPR data

5.0 / 5 (0 votes)

Thanks for rating: