Industrial-scale Web Scraping with AI & Proxy Networks

TLDRThe video script introduces viewers to the concept of web scraping as a means to extract valuable data from the internet, using a headless browser called Puppeteer. It emphasizes the competitive nature of e-commerce and dropshipping, and offers a solution to identify trending products on sites like Amazon and eBay. The script also suggests utilizing AI tools like GPT-4 for data analysis and automation tasks. It addresses challenges like dealing with bot traffic and captchas, and recommends using Bright Data's scraping browser for overcoming these obstacles. The tutorial provides a step-by-step guide on setting up a Node.js project with Puppeteer, navigating web pages programmatically, and parsing HTML content. The script concludes by highlighting the potential applications of the scraped data in various AI-driven projects.

Takeaways

- 🌐 Web scraping is the process of extracting valuable data from the internet, often hidden within complex HTML structures.

- 🛍️ E-commerce and dropshipping are common ways to make money online, but they require knowledge of what and when to sell.

- 🤖 Puppeteer is a headless browser that allows for automated interaction with websites, making it an ideal tool for web scraping tasks.

- 🚀 AI tools like GPT-4 can be utilized to analyze scraped data, write reviews, create advertisements, and automate various tasks.

- 🔒 Big e-commerce sites may block traffic from bots, so using tools like the scraping browser, which operates on a proxy network, is essential to avoid such issues.

- 🔧 The scraping browser offers built-in features like captcha-solving and fingerprint retries to aid in industrial-scale web scraping.

- 📝 To get started with web scraping, one can create a new Node.js project, install Puppeteer, and set up a connection to a remote browser.

- 🌐 Puppeteer allows for programmatic navigation and interaction with websites, including executing JavaScript and simulating user actions.

- 🔍 Web scraping can be enhanced by using tools like GPT-4 to write code for extracting specific data from web pages.

- 📈 Once data is collected, it can be used for various purposes such as targeted advertising, market analysis, or even building custom AI agents.

- ⏳ It's important to implement delays when navigating through multiple pages to avoid overwhelming server requests and maintain the health of the scraping process.

Q & A

What is the primary challenge when it comes to extracting useful data from the internet?

-The primary challenge is that valuable data is often buried within complex HTML structures, which requires 'digging' through a lot of irrelevant or 'dirty' markup to find the raw data needed.

What is the significance of data mining in the context of the internet?

-Data mining is significant because it serves as the process of sifting through large amounts of data to extract valuable information, much like mining for precious minerals. It is metaphorically compared to digging through a mountain of complex HTML to find the useful data.

How does one typically monetize e-commerce on the internet?

-A common way to make money on the internet through e-commerce is by using the dropshipping model. However, it's important to be aware of what products to sell and when, as the market is highly competitive.

What is a headless browser and how does it aid in web scraping?

-A headless browser is a web browser without a graphical user interface that can be programmed to interact with web pages. It aids in web scraping by allowing users to extract data from websites programmatically, as it can execute JavaScript and simulate user interactions like a regular user would do.

What is the role of AI tools like GPT-4 in data analysis?

-AI tools like GPT-4 can be utilized to analyze data, write reviews, create advertisements, and automate various tasks. They can process and interpret complex data sets to produce actionable insights and content, enhancing the efficiency of data-driven tasks.

Why might large e-commerce sites block IP addresses, and how can this be circumvented?

-Large e-commerce sites may block IP addresses if they suspect non-human traffic, such as bots, to protect their site from scraping and data misuse. This can be circumvented by using tools like the Scraping Browser, which operates on a proxy network and has features like captcha-solving and fingerprint retries to mimic human-like browsing behavior.

What is the purpose of the Bright Data scraping browser and how does it differ from other tools?

-The Bright Data scraping browser is designed to scrape the web at an industrial scale. It runs on a proxy network, which helps avoid IP bans by rotating IP addresses and simulating different users. It also has built-in features for solving captchas and retrying failed requests, making it a robust tool for large-scale web scraping tasks.

How does Puppeteer facilitate web scraping?

-Puppeteer is an open-source automation library that allows users to control a headless version of the Chrome or Chromium browser. It can be used to programmatically interact with web pages by executing JavaScript, clicking buttons, and performing other actions that a user could do, making it an effective tool for web scraping tasks.

What is the importance of using a delay when navigating between pages during web scraping?

-Using a delay when navigating between pages during web scraping is important to avoid sending an overwhelming number of server requests in a short period. This helps to not overload the server and reduces the risk of being detected as a bot, which could lead to the blocking of the IP address.

How can the script content be utilized for e-commerce?

-The script content can be utilized for e-commerce by scraping data from various e-commerce sites to identify trending products, analyze market trends, and create targeted advertisements. It can also be used to build custom APIs for product data, which can be the foundation for developing e-commerce strategies, such as Amazon Dropshipping business plans.

What is the potential of combining web scraping with AI?

-Combining web scraping with AI has immense potential as it allows for the extraction of large amounts of data, which can then be processed and analyzed by AI tools to gain insights, create personalized content, and develop advanced applications. This can lead to the creation of custom AI agents, targeted marketing strategies, and more efficient data-driven decision making in e-commerce and other fields.

Outlines

🌐 Web Scraping with Puppeteer and AI Tools

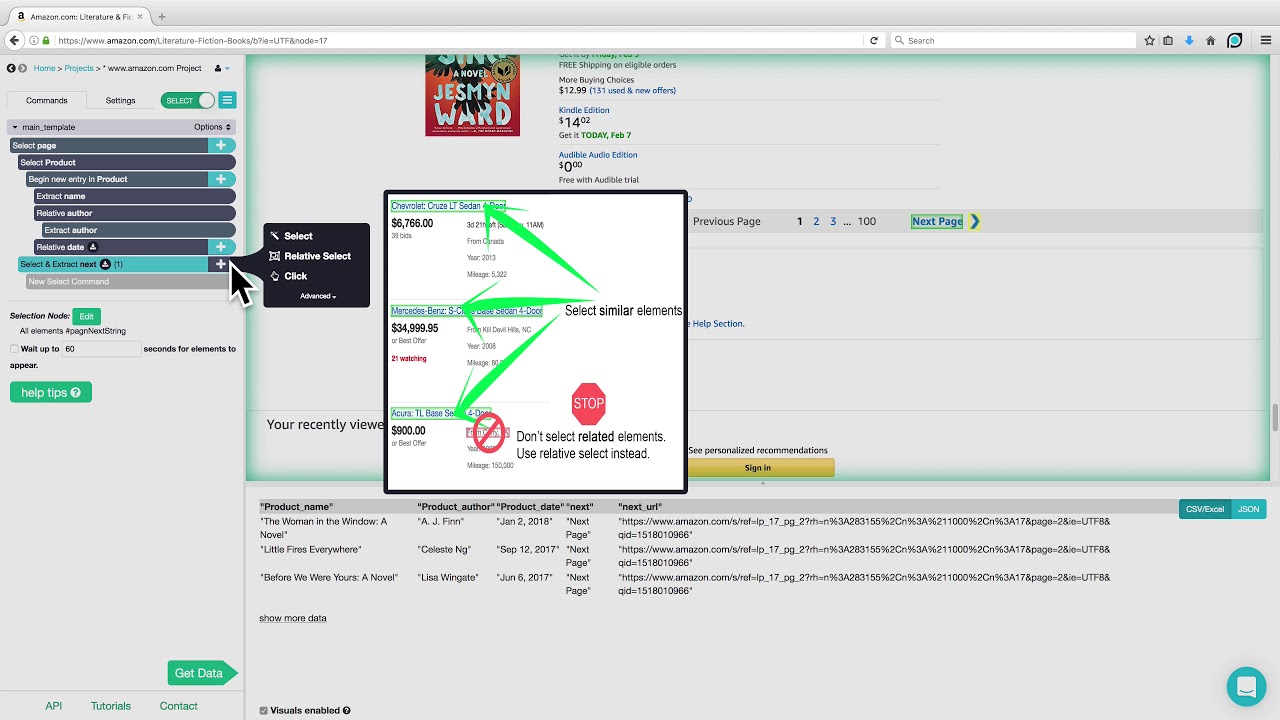

This paragraph discusses the challenges of extracting valuable data from the internet, which is often buried within complex HTML structures. It introduces the concept of web scraping as a solution to this problem, highlighting its potential for monetization in e-commerce and dropshipping. The speaker proposes a method that involves using a headless browser called Puppeteer to extract data from public-facing websites, even those without an API like Amazon. The paragraph also touches on the competitive nature of e-commerce and the importance of understanding market trends. It suggests using AI tools like GPT-4 for data analysis, review writing, and advertisement creation. The speaker addresses the issue of being blocked by e-commerce sites due to bot traffic and introduces a tool called the scraping browser, sponsored by Bright Data, which operates on a proxy network and offers features like captcha-solving and fingerprint retries to facilitate industrial-scale web scraping.

🛠️ Building a Custom API for Trending Products

The second paragraph delves into the practical application of web scraping by demonstrating how to build a custom API for extracting trending product data from e-commerce platforms like Amazon. It explains the process of using Puppeteer for programmatic interaction with websites, including navigating and parsing web pages. The speaker also discusses the limitations of using the same IP address for extensive scraping, which can lead to bans. The paragraph introduces the scraping browser as a solution to avoid such issues by using a proxy network. The speaker then provides a step-by-step guide on setting up a Node.js project with Puppeteer, connecting to a remote browser, and scraping a webpage. It concludes with a practical example of extracting product titles and prices from Amazon's bestsellers page and suggests further possibilities like using AI for targeted advertisements or building a custom AI agent for business planning.

Mindmap

Keywords

💡Data Mining

💡Web Scraping

💡Puppeteer

💡Headless Browser

💡Dropshipping

💡API

💡AI Tools

💡Chat GPT

💡Proxy Network

💡Bright Data

💡Node.js

Highlights

The internet is a vast source of useful data, often buried within complex HTML structures.

Data mining metaphorically involves digging through 'dirt' to find valuable data.

E-commerce and dropshipping are common ways to make money online, but they are highly competitive fields.

Web scraping with a headless browser like Puppeteer allows for data extraction from public-facing websites, even those without an API.

AI tools like GPT-4 can be utilized to analyze scraped data, write reviews, and automate various tasks.

Large e-commerce sites may block traffic from bots, making web scraping challenging.

Bright Data's Scraping Browser is a tool that runs on a proxy network and offers features like captcha-solving and fingerprint retries.

Automated IP address rotation is crucial for serious web scraping to avoid being blocked.

The Web Scraper IDE provides templates and tools for web scraping, but the speaker prefers full control over the workflow.

Puppeteer is an open-source tool from Google that allows programmatic interaction with websites.

Using a remote browser like the Scraping Browser can help avoid IP bans by using a proxy network.

Creating a new Node.js project and installing Puppeteer is the first step in setting up a web scraping environment.

Puppeteer can be used to programmatically navigate and interact with web pages like an end user.

The use of selectors and browser APIs in Puppeteer allows for parsing and extracting specific web page elements.

Chat GPT can be used to write web scraping code, making the process faster and more efficient.

By combining web scraping and AI, one can build custom APIs and conduct industrial-scale data extraction.

Web scraping is a key technique for obtaining the data necessary for AI applications.

Transcripts

Browse More Related Video

Puppeteer: Headless Automated Testing, Scraping, and Downloading

Web Scraping to CSV | Multiple Pages Scraping with BeautifulSoup

ParseHub Tutorial: Scraping Product Details from Amazon

ParseHub Tutorial: Scraping 2 eCommerce Websites in 1 Project

Beautiful Soup 4 Tutorial #1 - Web Scraping With Python

Find and Find_All | Web Scraping in Python

5.0 / 5 (0 votes)

Thanks for rating: