What Is Mathematical Optimization?

TLDR

This video series dives into the world of optimization, a dynamic field of mathematics with broad applications. It begins with an introduction to optimization, explaining it as a process of selecting the best option from a set with associated costs. The series then focuses on convex optimization, a special class of problems that can be solved efficiently using a unified approach. The script discusses the importance of understanding linear programming as a foundation before tackling more complex, nonlinear problems. It also touches on geometric intuition, the principle of duality, and the interior points method, an algorithm that has made significant strides in solving convex optimization problems. The series aims to be accessible, requiring only basic linear algebra and calculus, and is suitable for a wide audience, including students, researchers, and professionals interested in optimization. The final part of the series promises to reveal the significance of an ocean animation in relation to the topic.

Takeaways

- 📰 The New York Times in 1987 highlighted a breakthrough in problem-solving with the invention of the interior points method for convex optimization.

- 🔍 Convex optimization is important because a wide range of optimization problems fall into this class and can then be solved by efficient algorithms.

- 📈 The video series is divided into three parts: an introduction to optimization, a deeper dive into convex optimization and duality, and an overview of the interior points method.

- 🌐 The series is designed to be accessible to a wide audience, including students and professionals, without requiring extensive mathematical background.

- 📉 Optimization problems involve choosing the best option from a set of possibilities, each with an associated cost.

- 📏 Linear programming is a well-understood type of optimization problem where both the cost function and constraints are linear.

- 📊 Linear functions can be visualized using either hyperplane representation or vector representation, with the latter being preferred for understanding direction in cost functions.

- 🧮 Linear constraints are represented by hyperplanes that divide space into positive, negative, and null regions, defining the feasible set for decision variables.

- 📉 Linear regression is an example of an optimization problem where the objective function is quadratic, and there are no constraints on the decision variable.

- 💼 Portfolio optimization in finance is another example of an optimization problem, where the goal is to maximize returns within certain constraints, such as budget and volatility.

- 🔧 Convex optimization problems can be solved efficiently using established technology, which is a significant advancement in the field of optimization.

- ⏭️ The upcoming videos will explore the concept of convexity and the interior points method in more detail, providing insights into the principles behind these optimization techniques.

Q & A

What is convex optimization?

-Convex optimization is a subfield of mathematical optimization that deals with problems where the objective function to be minimized is convex (or, equivalently, the function to be maximized is concave), and the feasible set over which the optimization is performed is also convex.

Why is convex optimization considered special in the context of problem solving?

-Convex optimization is special because it allows for the efficient and unified solution of a large family of optimization problems. Once a problem is recognized as convex, established and mature technology can be applied almost as a black box to find the optimal solution.

What is the interior points method?

-The interior points method is an efficient algorithm for convex optimization. It is designed to find the optimal solution by iteratively moving through the interior of the feasible region towards the optimal point.
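To make "moving through the interior" concrete, here is a minimal sketch of one classic interior point scheme, the log-barrier method with Newton steps. It is not necessarily the variant presented in the video. The small linear program, the starting point, and the parameters `t`, `mu`, `outer_iters`, and `newton_iters` are all assumptions chosen for illustration.

```python
import numpy as np

# Hypothetical LP: minimize c @ x subject to A @ x <= b.
c = np.array([-1.0, -2.0])
A = np.array([
    [1.0, 1.0],    # x + y <= 4
    [1.0, 0.0],    # x <= 3
    [0.0, 1.0],    # y <= 2
    [-1.0, 0.0],   # x >= 0
    [0.0, -1.0],   # y >= 0
])
b = np.array([4.0, 3.0, 2.0, 0.0, 0.0])


def barrier_value(x, t):
    """Return t * cost plus the log barrier; infinite outside the interior."""
    slack = b - A @ x
    if np.any(slack <= 0):
        return np.inf
    return t * (c @ x) - np.sum(np.log(slack))


def barrier_method(x0, t=1.0, mu=10.0, outer_iters=8, newton_iters=50):
    """Follow the central path from a strictly feasible starting point x0."""
    x = np.asarray(x0, dtype=float)
    for _ in range(outer_iters):
        for _ in range(newton_iters):
            slack = b - A @ x
            grad = t * c + A.T @ (1.0 / slack)
            hess = A.T @ ((1.0 / slack**2)[:, None] * A)
            step = np.linalg.solve(hess, -grad)
            decrement = -(grad @ step)  # squared Newton decrement
            if decrement / 2.0 < 1e-8:
                break
            # Backtracking line search: the infinite barrier value rejects
            # any trial point that leaves the interior of the feasible set.
            alpha = 1.0
            value = barrier_value(x, t)
            while barrier_value(x + alpha * step, t) > value + 0.25 * alpha * (grad @ step):
                alpha *= 0.5
            x = x + alpha * step
        t *= mu  # weight the cost more heavily, pushing toward the boundary
    return x


x_opt = barrier_method(x0=[1.0, 1.0])
print(x_opt, c @ x_opt)
```

Every iterate stays strictly inside the feasible region; increasing `t` lets the iterates approach the optimal vertex without ever crossing a constraint.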

What are the three main components of an optimization problem?

-The three main components of an optimization problem are: 1) a set where the decision variable lives, often denoted as R^n, 2) a cost function (objective function) that we want to minimize, and 3) constraints on the decision variable, which include equality and inequality constraints that define the feasible set.
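These three components are commonly written in the following standard form. The symbols $f$, $g_i$, and $h_j$ are notation assumed here for the cost function, the inequality constraints, and the equality constraints; the video may use different names.

```latex
\begin{aligned}
\min_{x \in \mathbb{R}^n} \quad & f(x) \\
\text{subject to} \quad & g_i(x) \le 0, \quad i = 1, \dots, m, \\
                        & h_j(x) = 0, \quad j = 1, \dots, p.
\end{aligned}
```

The feasible set is then the set of all $x$ that satisfy every constraint at once.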

How does the principle of duality relate to convex optimization?

-The principle of duality in convex optimization is a fundamental concept that establishes a relationship between a given optimization problem and its dual. It allows us to gain insights into the structure of the problem and provides alternative ways to solve or analyze it.
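As one concrete instance, consider duality for a linear program. The primal form and the symbols $A$, $b$, $c$, and $y$ below are a standard textbook choice assumed here, not taken from the video.

```latex
\text{Primal:} \quad \min_{x} \; c^\top x \quad \text{subject to} \quad A x \ge b
\qquad\qquad
\text{Dual:} \quad \max_{y} \; b^\top y \quad \text{subject to} \quad A^\top y = c, \; y \ge 0
```

For any feasible pair, $b^\top y \le y^\top A x = c^\top x$, so every dual feasible point gives a lower bound on the primal optimal cost (weak duality).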

What is a linear program?

-A linear program is a type of optimization problem where both the cost function and the constraints are linear. It is one of the best-understood problems in optimization and serves as a foundation for understanding more complex, nonlinear problems.
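A small linear program can be solved with an off-the-shelf solver. The sketch below uses `scipy.optimize.linprog`; the problem data are made up for illustration and assume SciPy is installed.

```python
import numpy as np
from scipy.optimize import linprog

# Hypothetical problem: minimize -x - 2y (that is, maximize x + 2y)
# subject to x + y <= 4, x <= 3, y <= 2, and x, y >= 0.
c = np.array([-1.0, -2.0])
A_ub = np.array([
    [1.0, 1.0],
    [1.0, 0.0],
    [0.0, 1.0],
])
b_ub = np.array([4.0, 3.0, 2.0])

result = linprog(c, A_ub=A_ub, b_ub=b_ub, bounds=[(0, None), (0, None)])
print(result.x, result.fun)
```

Both the cost `c @ x` and the constraints `A_ub @ x <= b_ub` are linear in the decision variable, which is what makes this a linear program.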

How does linear regression relate to optimization?

-Linear regression is an optimization problem where the goal is to fit a linear model to a set of data points by minimizing the error between the model's predictions and the actual outputs, often using a quadratic objective function.
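The least squares view of linear regression can be sketched in a few lines of NumPy. The data points below are hypothetical and lie exactly on the line y = 2x + 1, so the optimal cost is zero.

```python
import numpy as np

# Hypothetical data generated from y = 2x + 1 with no noise.
x = np.array([0.0, 1.0, 2.0, 3.0])
y = 2.0 * x + 1.0

# Design matrix: one column for the slope, one for the intercept.
X = np.column_stack([x, np.ones_like(x)])


def cost(w):
    """Quadratic objective: sum of squared prediction errors."""
    residual = X @ w - y
    return float(residual @ residual)


# Unconstrained minimizer of the quadratic cost.
w_star, *_ = np.linalg.lstsq(X, y, rcond=None)
print(w_star, cost(w_star))
```

The decision variable is the weight vector `w`, the cost is quadratic in `w`, and no constraints restrict it.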

What is the significance of the vector representation in linear programming?

-The vector representation is significant in linear programming because it helps in understanding the direction that increases or decreases the linear function. This is particularly useful when minimizing a cost function without constraints, as one would move along the vector -c as much as possible.
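A quick numerical check of this intuition, using a made-up cost vector `c` and starting point `x0`: stepping along -c lowers the linear cost, stepping along +c raises it, and stepping orthogonally to c leaves it unchanged.

```python
import numpy as np

c = np.array([3.0, -1.0])  # hypothetical cost vector


def linear_cost(x):
    """Linear cost function c^T x."""
    return float(c @ x)


x0 = np.array([1.0, 1.0])
step = 0.5

x_down = x0 - step * c                      # move along -c
x_up = x0 + step * c                        # move along +c
x_side = x0 + step * np.array([1.0, 3.0])   # direction orthogonal to c

print(linear_cost(x0), linear_cost(x_down), linear_cost(x_up), linear_cost(x_side))
```

Without constraints this decrease never stops, so an unconstrained linear cost with nonzero c has no finite minimum.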

How does the concept of a feasible region come into play in optimization problems?

-The feasible region is the set of all possible values for the decision variable that satisfy the constraints of the optimization problem. It is the region within which the optimal solution must lie. In a linear program, it is the intersection of the positive half-spaces of the linear constraints.
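For linear inequality constraints, membership in the feasible region reduces to a sign check on each constraint. The sketch below assumes constraints written as a_i^T x - b_i >= 0, with hypothetical data `A` and `b` describing a triangle.

```python
import numpy as np

# Each row a_i of A and entry b_i of b encodes one constraint a_i^T x - b_i >= 0.
A = np.array([
    [1.0, 0.0],     # x >= 0
    [0.0, 1.0],     # y >= 0
    [-1.0, -1.0],   # x + y <= 4
])
b = np.array([0.0, 0.0, -4.0])


def is_feasible(x, tol=1e-9):
    """True if x lies in the positive half-space of every constraint."""
    return bool(np.all(A @ np.asarray(x, dtype=float) - b >= -tol))


print(is_feasible([1.0, 1.0]), is_feasible([3.0, 2.0]), is_feasible([-1.0, 1.0]))
```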

What is the role of the cost function in an optimization problem?

-The cost function, also known as the objective function, is the function that we aim to minimize in an optimization problem. It assigns a cost to each possible decision, and the goal is to find the decision with the smallest cost. A maximization problem fits the same framework, because maximizing a function is equivalent to minimizing its negative.
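The equivalence between maximizing a function and minimizing its negative can be checked on a tiny finite example. The option names and payoffs below are hypothetical.

```python
# Hypothetical payoffs for three options.
payoff = {"a": 3.0, "b": 7.5, "c": 5.0}

# Maximizing the payoff directly...
best_by_max = max(payoff, key=lambda name: payoff[name])

# ...selects the same option as minimizing the negated payoff (the cost).
best_by_min = min(payoff, key=lambda name: -payoff[name])

print(best_by_max, best_by_min)
```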

What is the difference between equality and inequality constraints in optimization problems?

-Equality constraints require an expression in the decision variable to equal a given value exactly, while inequality constraints only require it to stay on one side of a bound. Together, they define the feasible set within which the optimal solution is sought.

Why is it important to recognize a problem as convex in optimization?

-Recognizing a problem as convex is important because convex problems have well-established solution techniques and can be solved efficiently using a unified approach. This recognition allows the application of mature optimization technology to find the global optimum.

Outlines

📰 Introduction to Convex Optimization

This paragraph introduces the topic of convex optimization, referencing a 1987 New York Times headline about the invention of the interior points method, an efficient algorithm for convex optimization. It sets the stage for a three-part video series that aims to make optimization accessible to a wide audience, including students and professionals. The video series promises to provide an intuitive understanding of optimization, starting with basic concepts, delving into the principle of duality, and concluding with an overview of the interior points method. The video also teases an ocean animation that will be explained in the context of optimization.

🔍 Understanding Optimization and Linear Programming

The second paragraph delves into the basics of optimization problems, explaining that they involve choosing the best option from a set of possibilities, each with an associated cost. It introduces the concept of decision variables and the optimization problem's three main components: the set in which the decision variable lives, the cost function (also known as the objective function), and the constraints, which together define the feasible set. The paragraph also distinguishes between linear and nonlinear optimization problems and uses the example of linear regression to illustrate a more complex, nonlinear optimization scenario. It also touches on portfolio optimization in finance, highlighting the balance between maximizing returns and adhering to constraints like budget and volatility limits.
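The portfolio example can be illustrated with a toy two-asset problem. All numbers below (expected returns, covariance, variance limit) are invented. The brute-force grid search only shows how the objective and constraints interact; it is not how real solvers work.

```python
import numpy as np

expected_return = np.array([0.10, 0.04])   # hypothetical asset returns
covariance = np.array([
    [0.04, 0.0],
    [0.0, 0.0025],
])
max_variance = 0.01                        # hypothetical volatility limit

best_weights, best_return = None, -np.inf
for a in np.linspace(0.0, 1.0, 1001):
    # Budget constraint: the two weights are nonnegative and sum to 1.
    w = np.array([a, 1.0 - a])
    variance = float(w @ covariance @ w)
    if variance > max_variance:
        continue  # violates the volatility constraint
    ret = float(expected_return @ w)
    if ret > best_return:
        best_weights, best_return = w, ret

print(best_weights, best_return)
```

The riskier asset has the higher return, so the best portfolio holds as much of it as the volatility limit allows.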

🛠️ The Rise of Convex Optimization

The final paragraph discusses the evolution of optimization problem-solving techniques. It points out that while optimization problems with similar formulations can require vastly different solution methods, convex optimization problems have been found to be solvable in a unified manner. This discovery has led to the development of established technologies that can be applied to convex problems almost like a black box. The paragraph also hints at the upcoming discussion on the specifics of convexity and its benefits in optimization, as well as a look into the 'black box' of convex optimization algorithms in the subsequent videos.

Keywords

💡Interior Points Method

💡Convex Optimization

💡Optimization

💡Linear Programming

💡Decision Variable

💡Objective Function

💡Constraints

💡Feasible Set

💡Duality Principle

💡Quadratic Function

💡Portfolio Optimization

Highlights

The New York Times front page in 1987 announced a breakthrough in problem-solving with the invention of the interior points method for convex optimization.

Convex optimization covers problems in which both the objective function and the feasible region are convex, and such problems can be solved by efficient algorithms.

The video series is divided into three parts: an introduction to optimization, a deeper dive into convex optimization and duality, and an overview of the interior points method.

The series is intended for a wide audience, including those without a specific background in mathematics, beyond basic linear algebra and calculus.

Optimization problems involve choosing the best option from a set of possibilities, each with an associated cost.

Linear programs are well-understood problems where both the cost function and constraints are linear.

Geometric intuition for linear programs can be built by visualizing linear functions with hyperplanes or vectors.

Linear regression or the least squares problem is an example of an optimization problem with a quadratic objective function and no constraints.

Portfolio optimization in finance is another example of an optimization problem, involving maximizing returns under budget and volatility constraints.

Convex optimization problems can be solved efficiently using a unified approach once recognized as convex.

The interior points method is a significant algorithm for convex optimization that has been developed into a mature technology.

The series aims to provide a self-contained presentation that is crisp, visual, and focuses on building intuition without excessive mathematical details.

The ocean animation in the series represents a concept related to optimization and will be explained in the bonus section.

The series is suitable for math and computer science students, researchers, and engineers interested in optimization.

The video discusses the importance of understanding the difference between maximization and minimization problems in optimization.

An optimization problem is formally defined by a decision variable set, a cost function to minimize, and constraints on the decision variable.

The feasible set is defined by the constraints and is the set of possibilities from which the decision variable can be chosen.

The series will explore the principle of duality in convex optimization, which is a fascinating related concept.

Transcripts

Browse More Related Video

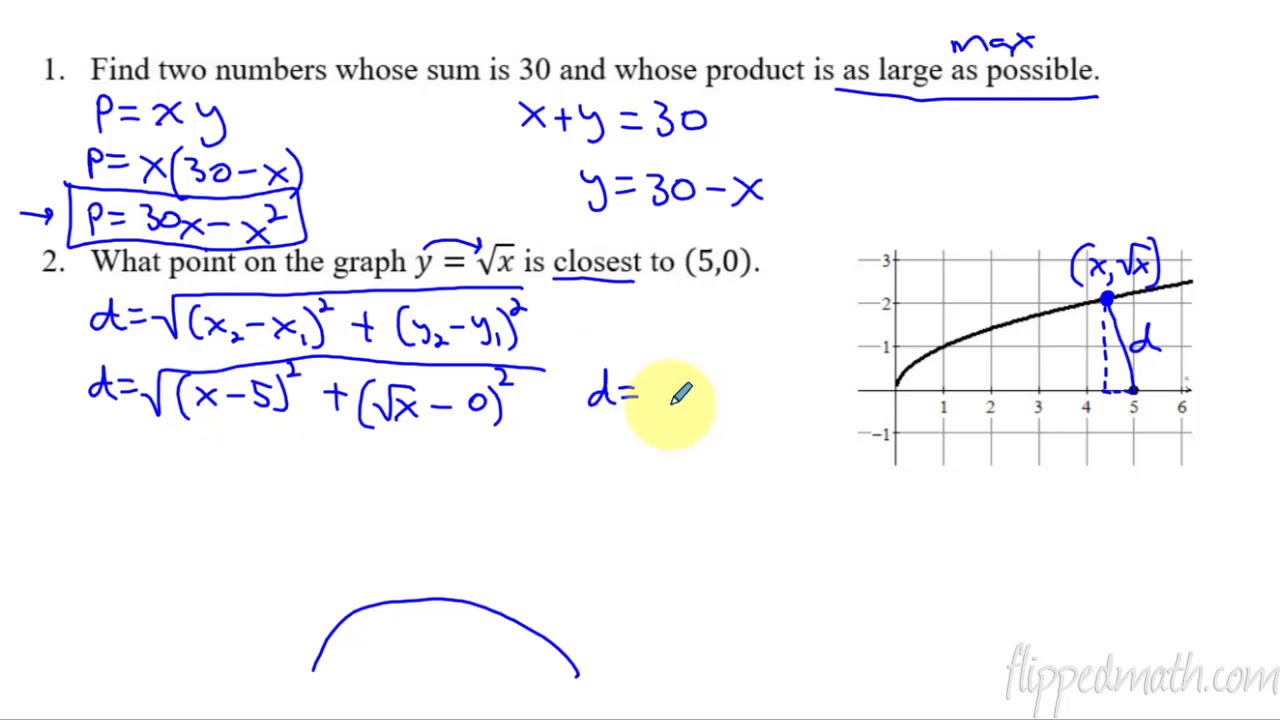

Calculus AB/BC – 5.10 Introduction to Optimization Problems

Introduction to Optimization: What Is Optimization?

Calculus I: Limits & Derivatives — Subject 3 of Machine Learning Foundations

Solving Optimization Problems with MATLAB | Master Class with Loren Shure

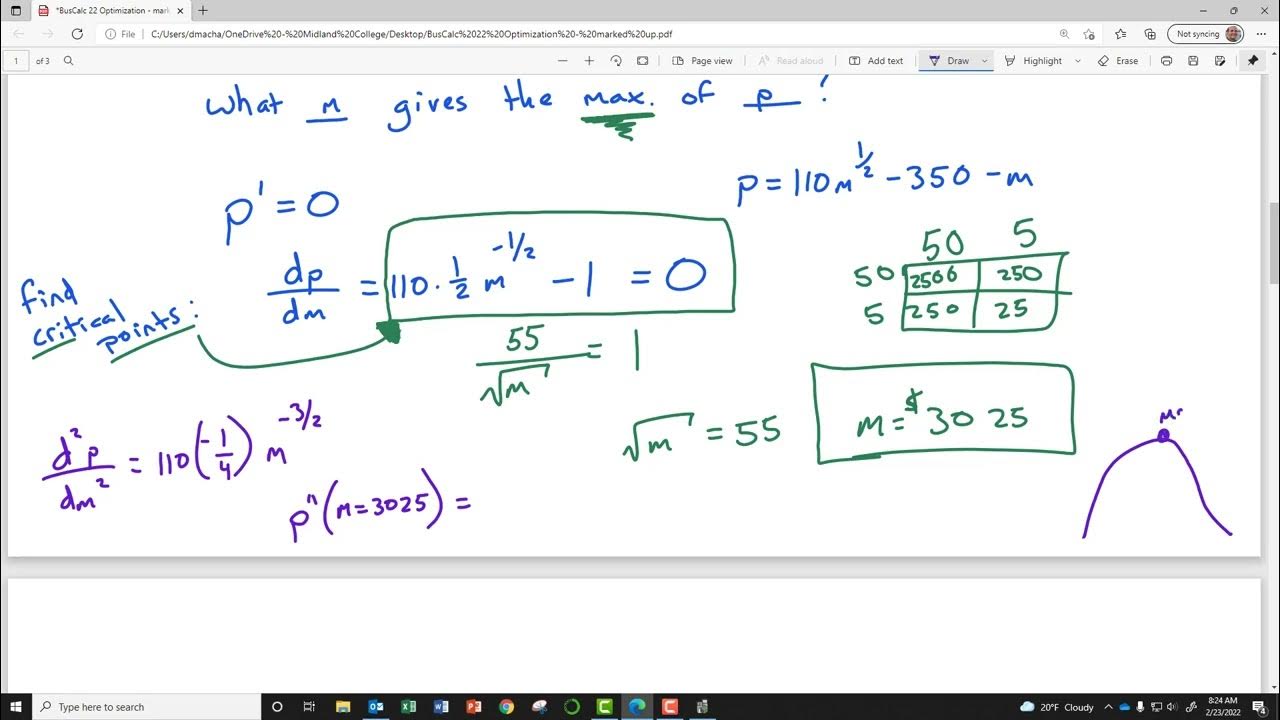

BusCalc 22 Optimization

Tensor Calculus Lecture 14f: Principal Curvatures

5.0 / 5 (0 votes)

Thanks for rating: