Calculus I: Limits & Derivatives — Subject 3 of Machine Learning Foundations

TLDR

Dr. John Crone introduces viewers to the Machine Learning Foundation Series with a focus on Calculus 1: Limits and Derivatives. This subject is integral to machine learning because differentiation, the process of computing derivatives, is how most machine learning algorithms learn from training data, including deep learning techniques like backpropagation and stochastic gradient descent. The subject is designed to be accessible to those not yet familiar with linear algebra: it largely stands alone and only occasionally references tensor theory from the earlier linear algebra subjects. The content is divided into three segments: understanding what calculus is, computing derivatives through differentiation, and exploring automatic differentiation in Python. This subject not only lays the groundwork for Calculus 2 but is also crucial for the final subject, Optimization, which synthesizes all the previous subjects in the series.

Takeaways

- 📚 **Introduction to Calculus**: The video introduces the subject of Calculus 1, focusing on limits and derivatives, which are fundamental to machine learning algorithms.

- 🐶 **Mascot Mention**: Dr. John Crone's puppy, Oboe, is the mascot for the Machine Learning Foundation Series.

- 🔬 **Series Overview**: The Machine Learning Foundation Series consists of eight subjects, with Calculus 1 being the third in the series.

- 👨🏫 **Instructor's Expertise**: Dr. John Crone teaches the subject, emphasizing the importance of understanding calculus for machine learning.

- 📈 **Differentiation's Role**: Differentiation, including automatic differentiation algorithms in Python, is crucial for optimizing machine learning algorithms.

- 🧮 **Prerequisite Consideration**: The subject occasionally references tensor theory from the earlier linear algebra subjects, but it largely stands alone and is designed to be approachable for those less familiar with these areas.

- 📘 **Content Structure**: The Calculus 1 subject is divided into three thematic segments: limits, computing derivatives with differentiation, and automatic differentiation in Python.

- 🔑 **Foundational Importance**: Calculus 1 is foundational not only for Calculus 2 but also for the final subject on optimization in the series.

- ⏱️ **Historical Context**: The video provides a brief history of calculus and mentions the method of exhaustion, a technique that is still relevant today.

- 📖 **Learning Approach**: The subject is taught through a combination of hands-on code demos and critical equations.

- 🔧 **Practical Application**: Computing derivatives is essential for learning from training data within most machine learning algorithms, including deep learning techniques like backpropagation and stochastic gradient descent.
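To make the link between limits and derivatives in the takeaways above concrete, here is a minimal sketch that approximates a derivative as the limit of a difference quotient. The function names `difference_quotient` and `square` and the chosen step sizes are illustrative assumptions, not code from the video.

```python
def difference_quotient(f, x, h):
    """Slope of the secant line through (x, f(x)) and (x + h, f(x + h))."""
    return (f(x + h) - f(x)) / h


def square(x):
    """Example function f(x) = x^2, whose exact derivative is 2x."""
    return x * x


# As h shrinks toward 0, the secant slope approaches the derivative at x = 2,
# which is exactly 4 for f(x) = x^2.
for h in (1.0, 0.1, 0.001, 1e-6):
    print(h, difference_quotient(square, 2.0, h))
```

The printed slopes move toward 4 as `h` gets smaller, which is the limit idea behind the definition of the derivative.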

Q & A

What is the focus of the Machine Learning Foundation series?

-The Machine Learning Foundation series covers the subjects that are foundational to machine learning. This video introduces the third of them, Calculus 1: Limits and Derivatives.

Who is the presenter of the Machine Learning Foundation series?

-Dr. John Crone is the presenter of the Machine Learning Foundation series.

What role does Calculus play in machine learning?

-Calculus plays a crucial role in machine learning as it provides the mathematical foundation for computing derivatives, which is essential for learning from training data within most machine learning algorithms, including those used in deep learning.

What is the significance of differentiation in the context of machine learning?

-Differentiation is the basis of learning from training data within most machine learning algorithms. It is used in techniques such as backpropagation and stochastic gradient descent.
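As a rough illustration of how a derivative drives learning from training data, the sketch below fits a single slope parameter with stochastic gradient descent. The toy data, the squared-error loss, the function name `sgd_fit_slope`, and the hyperparameters are all assumptions made for this example; the gradient is derived by hand rather than by a library.

```python
import random


def sgd_fit_slope(xs, ys, learning_rate=0.01, steps=1000, seed=0):
    """Fit y = m * x by stochastic gradient descent on squared error."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    m = 0.0                    # initial guess for the slope
    for _ in range(steps):
        i = rng.randrange(len(xs))       # pick one training example at random
        error = m * xs[i] - ys[i]        # prediction minus target
        gradient = 2.0 * xs[i] * error   # d/dm of (m * x - y)^2
        m -= learning_rate * gradient    # step against the gradient
    return m


# Toy training data generated from y = 2x (hypothetical, noise-free).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x for x in xs]
slope = sgd_fit_slope(xs, ys)
print(slope)
```

Each update uses the derivative of the loss for one example to decide which direction to move the parameter, so on this toy data the estimate moves toward the true slope of 2.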

Can someone start with Calculus 1 without prior knowledge of linear algebra?

-Yes, the subject of Calculus 1 largely stands alone, and one can start their journey into the Machine Learning Foundation series with it even if they are not overly familiar with linear algebra.

What is the mascot of the Machine Learning Foundation series?

-The mascot of the Machine Learning Foundation series is a puppy named Oboe.

How many subjects are there in the Machine Learning Foundation series?

-The Machine Learning Foundation series consists of eight subjects.

What is the relationship between Calculus 1 and the other subjects in the series?

-Calculus 1 is foundational for all of the remaining subjects in the series, including Calculus 2 and the final subject, Optimization, which ties together all preceding subjects.

What are the three thematic segments into which Calculus 1 is divided?

-Calculus 1 is divided into three thematic segments: limits, computing derivatives with differentiation, and automatic differentiation in Python.

What is the method of exhaustion and why is it relevant to calculus?

-The method of exhaustion is a centuries-old technique for calculating areas and volumes by approximating them with smaller and smaller pieces. It is a precursor to modern approaches to calculating limits and is still relevant today.
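One classic use of the method of exhaustion is estimating pi by inscribing polygons with more and more sides in a circle. The sketch below follows that idea with Archimedes-style side doubling in a unit circle; the function name `exhaustion_pi` and the starting hexagon are choices made for this example, and it is not taken from the video.

```python
import math


def exhaustion_pi(doublings):
    """Estimate pi from the perimeter of a polygon inscribed in a unit circle.

    Starts from a regular hexagon (side length 1) and doubles the number of
    sides `doublings` times.
    """
    n = 6
    side = 1.0
    for _ in range(doublings):
        # Side length of the 2n-gon from the n-gon, written in a form that
        # avoids subtracting two nearly equal numbers.
        side = side / math.sqrt(2.0 + math.sqrt(4.0 - side * side))
        n *= 2
    # Half the perimeter approximates half the circumference, which is pi.
    return n * side / 2.0


for k in (0, 1, 5, 10):
    print(k, exhaustion_pi(k))
```

Every doubling "exhausts" more of the gap between polygon and circle, so the estimates increase toward pi without ever exceeding it.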

What programming libraries are assumed to be familiar to the audience in the series?

-The audience is assumed to be familiar with Jupyter notebooks, as well as the NumPy, TensorFlow, and PyTorch libraries.

How does the series approach the teaching of calculus?

-The series primarily uses hands-on code demos to teach calculus, along with critical equations to provide a deep understanding of the subject.

Outlines

📚 Introduction to Machine Learning Foundation Series: Calculus 1

Dr. John Crone introduces the audience to the Machine Learning Foundation series, specifically focusing on Calculus 1: Limits and Derivatives. He emphasizes the subject's importance in understanding machine learning algorithms, particularly those involving differentiation like backpropagation and stochastic gradient descent. The subject is structured to be accessible even to those not well-versed in linear algebra, making it a workable starting point for those new to the field. The content is divided into three segments: an introduction to calculus, computing derivatives with differentiation, and automatic differentiation in Python. The first segment delves into the history of calculus, the method of exhaustion, and modern approaches to calculating limits.
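To give a feel for what automatic differentiation does, here is a minimal sketch using dual numbers in plain Python. This is forward-mode differentiation, chosen because it fits in a few lines; it is not the reverse-mode machinery that libraries such as PyTorch and TensorFlow provide, and the `Dual` class and `derivative` helper are hypothetical names introduced only for this example.

```python
from dataclasses import dataclass


@dataclass
class Dual:
    """A number that carries its value and its derivative together."""
    value: float
    deriv: float = 0.0

    @staticmethod
    def _lift(other):
        # Treat plain numbers as constants, whose derivative is 0.
        return other if isinstance(other, Dual) else Dual(float(other), 0.0)

    def __add__(self, other):
        other = Dual._lift(other)
        # Sum rule: (u + v)' = u' + v'
        return Dual(self.value + other.value, self.deriv + other.deriv)

    __radd__ = __add__

    def __mul__(self, other):
        other = Dual._lift(other)
        # Product rule: (u * v)' = u * v' + u' * v
        return Dual(self.value * other.value,
                    self.value * other.deriv + self.deriv * other.value)

    __rmul__ = __mul__


def derivative(f, x):
    """Evaluate f at x while tracking df/dx through every operation."""
    return f(Dual(x, 1.0)).deriv


# f(x) = x^2 + 3x has derivative 2x + 3, so the slope at x = 2 is 7.
print(derivative(lambda x: x * x + 3 * x, 2.0))
```

The derivative falls out of ordinary arithmetic on `Dual` values, with no symbolic algebra and no finite-difference step size to tune.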

Keywords

💡Calculus

💡Limits

💡Derivatives

💡Differentiation

💡Machine Learning

💡Optimization

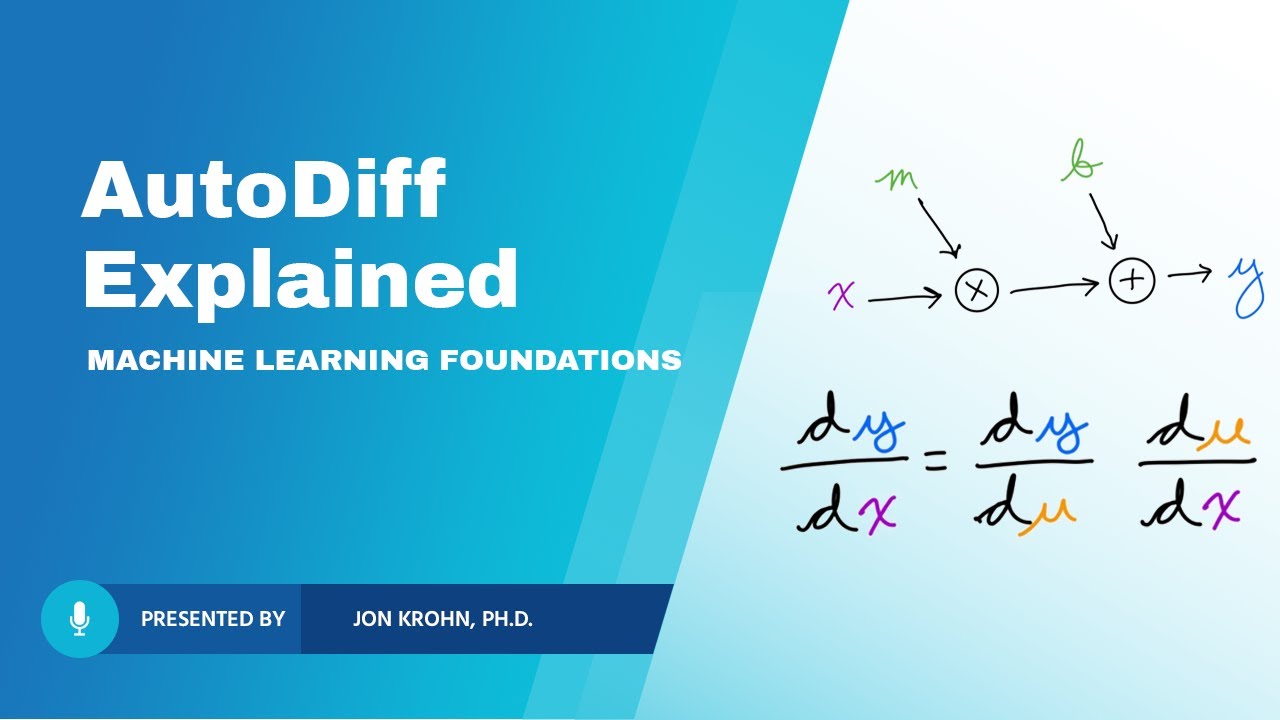

💡Automatic Differentiation

💡Python

💡Tensor

💡Linear Algebra

💡Backpropagation

💡Stochastic Gradient Descent

Highlights

Introduction to the Machine Learning Foundation series with a focus on studying calculus.

Calculus 1, Limits and Derivatives, is the third subject in the series.

Calculus is essential for understanding optimization in machine learning algorithms.

Differentiation is the basis of learning from training data within most machine learning algorithms.

The subject can be studied independently of linear algebra.

The series consists of eight subjects, with Calculus 1 being foundational for the subjects that follow it.

Calculus 1 is particularly important for Calculus 2 and the final subject on optimization.

The content is divided into three thematic segments: limits, computing derivatives, and automatic differentiation in Python.

The first segment provides a brief history of calculus and an introduction to the method of exhaustion.

Modern approaches to calculating limits will be demonstrated.

Differentiation, including powerful automatic differentiation algorithms in Python, is a key focus.

The subject assumes familiarity with Jupyter notebooks and libraries such as NumPy, TensorFlow, and PyTorch.

The series is hosted by Dr. John Crone, featuring his puppy Oboe as the mascot.

The course aims to teach optimization of learning algorithms through the use of differentiation.

Backpropagation and stochastic gradient descent are mentioned as algorithms that utilize differentiation.

The subject matter is applicable to deep learning and other areas of machine learning.

The importance of computing derivatives in the context of machine learning is emphasized.

The course is designed to be accessible for those new to linear algebra.

Tensor-related theory from earlier linear algebra subjects will be referenced.

Transcripts

Browse More Related Video

Calculus II: Partial Derivatives & Integrals — Subject 4 of Machine Learning Foundations

Calculus Applications – Topic 46 of Machine Learning Foundations

Backpropagation — Topic 79 of Machine Learning Foundations

My Favorite Calculus Resources — Topic 92 of Machine Learning Foundations

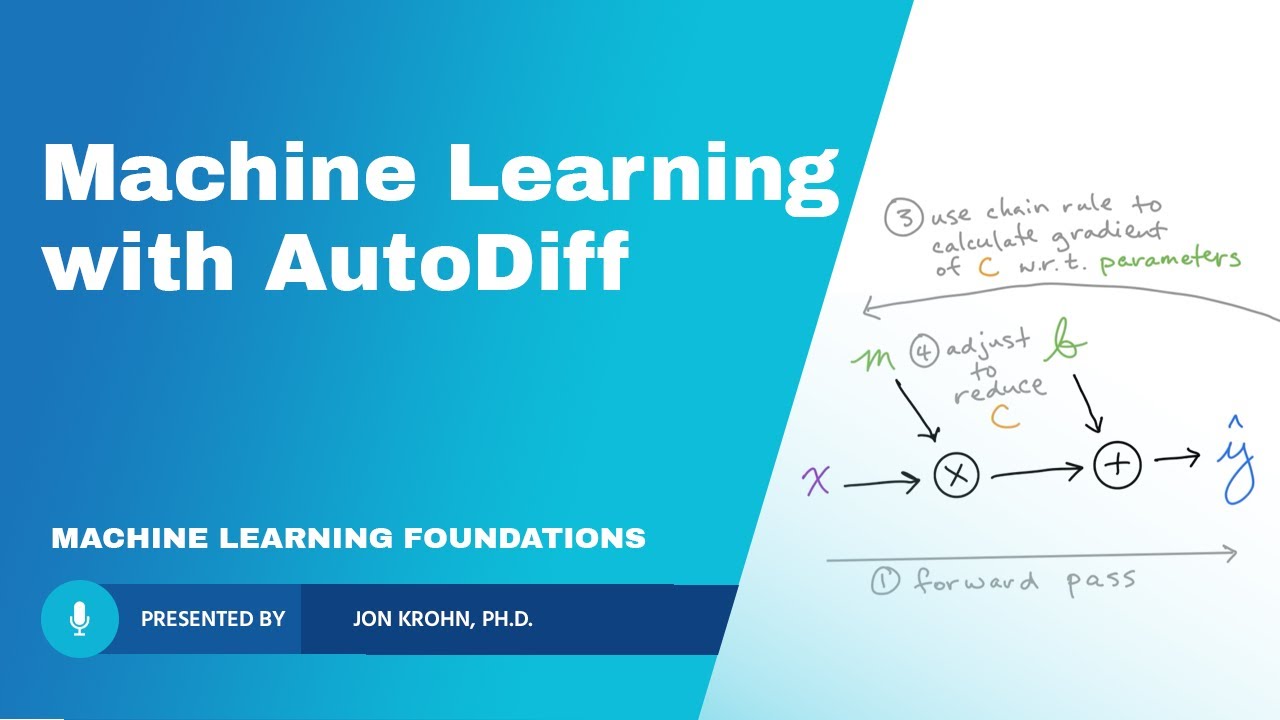

Machine Learning from First Principles, with PyTorch AutoDiff — Topic 66 of ML Foundations

What Automatic Differentiation Is — Topic 62 of Machine Learning Foundations

5.0 / 5 (0 votes)

Thanks for rating: