The Biggest Ideas in the Universe | 20. Entropy and Information

TLDR

The video script delves into the concept of entropy, a fundamental principle in thermodynamics, and its intricate relationship with information theory. Host Sean Carroll explains entropy as a measure of our ignorance about a system, highlighting its significance in the second law of thermodynamics. The script explores various definitions of entropy, including the Boltzmann entropy, defined by the logarithm of the volume a macrostate occupies in phase space, and the Gibbs entropy, defined from a probability distribution. Carroll also touches on the historical debates surrounding entropy, such as the reversibility objection raised by Loschmidt and the recurrence objection raised by Zermelo. The discussion extends to the role of entropy in the universe's arrow of time, the low entropy of the early universe, and the connection between entropy and information pioneered by Claude Shannon. The script concludes with a foray into quantum mechanics, introducing entanglement entropy, or von Neumann entropy, which arises in quantum systems because of entanglement even when the overall state is known exactly. This comprehensive overview aims to give viewers a deeper understanding of how entropy governs the physical world and the flow of information.

Takeaways

- 📈 **Entropy and Information**: Entropy is closely related to information and is a measure of our ignorance about a system's microstate given its macroscopic features.

- ⏳ **Arrow of Time**: The increase of entropy is responsible for the arrow of time, making the past and future distinct in our everyday experiences.

- 🚫 **Second Law of Thermodynamics**: The second law, which can be stated without the concept of entropy, implies that entropy either increases or remains the same in closed systems.

- 🧠 **Laplace's Demon**: We are not Laplace's demon, meaning we cannot know the precise position and velocity of every particle, hence entropy accounts for our lack of knowledge.

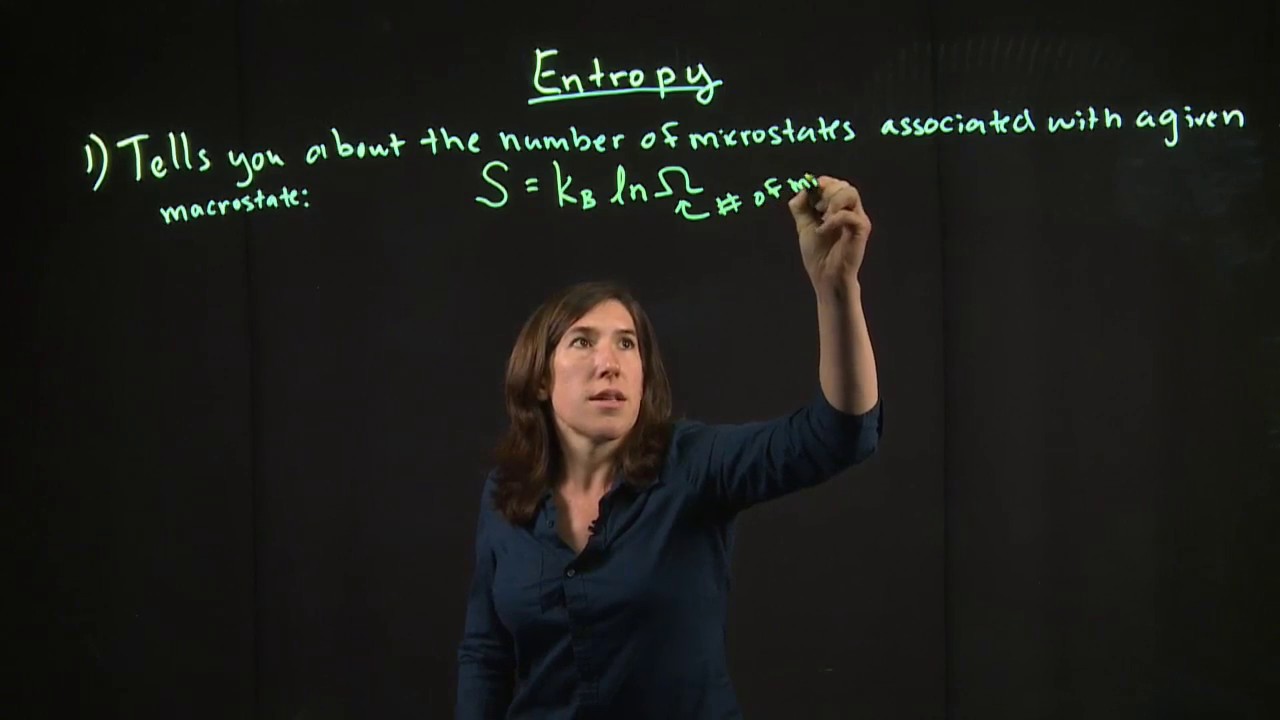

- 📊 **Boltzmann's Definition**: Boltzmann's definition of entropy (S = k log W) involves coarse-graining phase space into macro states based on observables, providing a precise measure of entropy.

- 🎲 **Gibbs Entropy**: Gibbs entropy is defined from a probability distribution over phase space rather than from coarse-grained macrostates. As a result it is tied to what we know about the system, which is how it differs from Boltzmann's approach.

- 🔄 **Reversibility Objection**: The reversibility objection by Loschmidt argues that for every process increasing entropy, there's an equally likely reverse process decreasing it, challenging the second law's inevitability.

- 🌌 **Cosmological Entropy**: The early universe had low entropy. The 'past hypothesis' takes this low-entropy beginning as the starting assumption for explaining why entropy increases.

- ⚙️ **Maxwell's Demon**: Maxwell's thought experiment about a demon sorting molecules to decrease entropy in a box was later resolved by Landauer's principle, which shows information erasure increases entropy.

- ℹ️ **Shannon's Entropy**: Shannon's information theory introduces entropy as a measure of the uncertainty in a set of possible messages, directly relating to the concept of information content.

- 🧬 **Quantum Entropy**: Von Neumann entropy in quantum mechanics captures the entropy of a subsystem that is entangled with the rest, even when the state of the whole system is known exactly.

Q & A

What is the main topic of discussion in the provided transcript?

-The main topic of discussion is entropy, its relationship with information, and its implications in various contexts such as thermodynamics, statistical mechanics, and quantum mechanics.

Why is entropy considered difficult to define?

-Entropy is considered difficult to define because it has many different definitions that serve different purposes and apply to different sets of circumstances. These definitions are not mutually exclusive and are all valid in their respective contexts.

What is the Boltzmann entropy and how is it calculated?

-Boltzmann entropy measures how many microstates correspond to a given macrostate. It is calculated using the formula S = k log W. Here W is the volume of phase space occupied by the macrostate (for a discrete system, the number of its microstates), log is the natural logarithm, and k is Boltzmann's constant.
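
As a minimal Python sketch of S = k log W, assume a discrete system where W simply counts microstates, and take k as the exact SI value of Boltzmann's constant. The helper name `boltzmann_entropy` is hypothetical, chosen for illustration. The logarithm is what makes entropy additive. Two independent systems together have W1 × W2 microstates, so their entropies add.

```python
import math

# Boltzmann's constant in J/K (exact value in the 2019 SI).
K_B = 1.380649e-23


def boltzmann_entropy(w: int, k: float = K_B) -> float:
    """Return S = k ln W for a macrostate containing w microstates (hypothetical helper)."""
    if w < 1:
        raise ValueError("a macrostate must contain at least one microstate")
    return k * math.log(w)


# Two independent subsystems: the combined macrostate has W1 * W2 microstates.
w1, w2 = 1000, 50
s_combined = boltzmann_entropy(w1 * w2)
s_sum = boltzmann_entropy(w1) + boltzmann_entropy(w2)
print(f"S(W1*W2) = {s_combined:.4e} J/K, S(W1) + S(W2) = {s_sum:.4e} J/K")

# In units of k, a macrostate with a single microstate has zero entropy.
print(boltzmann_entropy(1, k=1.0))
```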

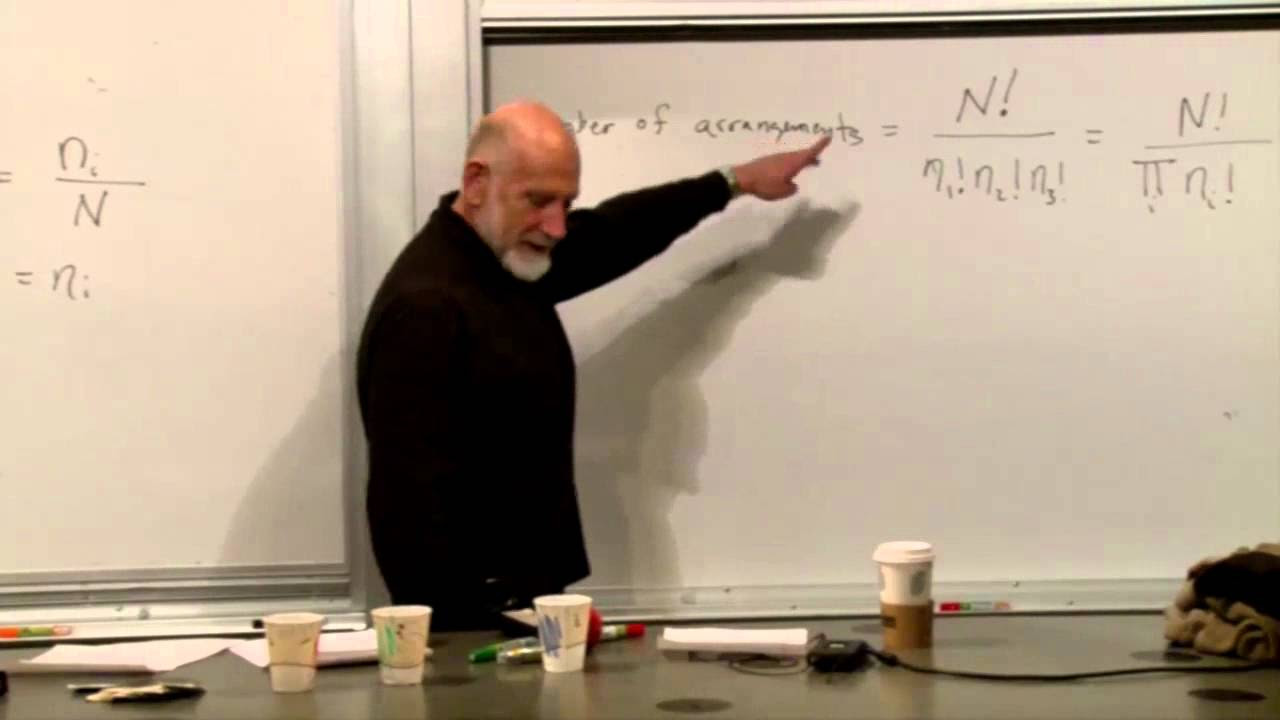

How does the concept of coarse-graining relate to entropy?

-Coarse-graining is a method used in statistical mechanics to define macrostates by grouping together many microstates that appear the same from a macroscopic perspective. This process helps in characterizing entropy as it allows for the calculation of the number of microstates within a macrostate.
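
To make coarse-graining concrete, here is a toy Python sketch. It is our own example, not one taken from the video. The microstate of N coins is the full heads/tails sequence, and the macrostate records only the number of heads. Counting how many microstates fall into each macrostate gives W, and hence S/k = ln W. The half-heads macrostate contains the most microstates, so it has the highest entropy.

```python
import itertools
import math
from collections import Counter

N = 10  # number of coins in the toy system (an illustrative choice)

# Each microstate is a full sequence of N coin faces: 2**N of them in total.
microstates = itertools.product("HT", repeat=N)

# Coarse-grain: we only "observe" the number of heads, which defines the macrostate.
counts = Counter(state.count("H") for state in microstates)

for heads in range(N + 1):
    w = counts[heads]
    print(f"{heads:2d} heads: W = {w:3d}, S/k = ln W = {math.log(w):.3f}")
```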

What is the Gibbs entropy and how does it differ from Boltzmann entropy?

-Gibbs entropy is defined as S = -k ∫ ρ log ρ over phase space, where ρ is the probability distribution over the system's microstates. In words, it is minus k times the integral of the probability density multiplied by its logarithm. It differs from Boltzmann entropy in that it does not rely on coarse-graining. Instead, it reflects the knowledge conveyed by the probability distribution over the system's microstates.
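
A discrete version of the Gibbs formula makes its link to Boltzmann's definition explicit. This Python sketch replaces the integral over phase space with a sum over a finite set of microstates, which is a simplifying assumption, and `gibbs_entropy` is a hypothetical helper. With a uniform distribution over the W microstates of a macrostate, the Gibbs entropy equals Boltzmann's ln W. A sharper distribution, meaning more knowledge about the system, gives lower entropy.

```python
import math


def gibbs_entropy(probs, k=1.0):
    """Discrete Gibbs entropy S = -k * sum(p ln p), in units of k by default.

    Terms with p == 0 contribute nothing, since p ln p -> 0 as p -> 0.
    """
    if not math.isclose(sum(probs), 1.0):
        raise ValueError("probabilities must sum to 1")
    return -k * sum(p * math.log(p) for p in probs if p > 0)


W = 8
uniform = [1 / W] * W               # we only know the macrostate: all W microstates equally likely
peaked = [0.93] + [0.01] * (W - 1)  # we know much more: one microstate is very probable

print(gibbs_entropy(uniform), math.log(W))  # equal: Gibbs reduces to Boltzmann's ln W
print(gibbs_entropy(peaked))                # smaller: more knowledge, less entropy
```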

Why is the second law of thermodynamics important in the context of entropy?

-The second law of thermodynamics states that the entropy of a closed system either increases or remains constant over time. It is important because it dictates the direction of spontaneous processes and underlies the concept of the arrow of time, indicating the progression from order to disorder.
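
The statistical reading of the second law shows up clearly in a toy model. This Python sketch uses the Ehrenfest urn model, which is our choice of illustration and is not described in the video. N particles start in the left half of a box, and at each step one particle chosen at random hops to the other half. The sketch tracks the Boltzmann entropy of the macrostate "n particles on the left". Entropy climbs from zero toward its maximum and then fluctuates near it. The hopping rule has no built-in direction; the increase comes from starting in a low-entropy macrostate. N, the number of steps, and the seed are arbitrary.

```python
import math
import random

N = 100        # number of particles in the box (toy value)
STEPS = 2000   # number of random moves to simulate
rng = random.Random(42)  # fixed seed so the run is reproducible

left = N       # low-entropy start: every particle in the left half
entropies = []
for _ in range(STEPS + 1):
    # Boltzmann entropy (units of k) of the macrostate "left particles on the left".
    entropies.append(math.log(math.comb(N, left)))
    # Pick a particle uniformly at random and move it to the other half.
    if rng.randrange(N) < left:
        left -= 1
    else:
        left += 1

s_max = math.log(math.comb(N, N // 2))
print(f"start S/k = {entropies[0]:.2f}, end S/k = {entropies[-1]:.2f}, max = {s_max:.2f}")
```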

What is the relationship between entropy and information?

-Entropy and information are closely related. High entropy in a system indicates a lack of information about the system's microstate, whereas low entropy suggests a higher level of knowledge or information about the system's specific configuration.

What is the role of the past hypothesis in explaining the arrow of time?

-The past hypothesis, which posits that the universe began in a state of low entropy, is crucial for explaining the arrow of time. It provides a boundary condition that breaks the time-reversal symmetry and allows for the entropy of the universe to increase over time, consistent with our observations.

How does the concept of entropy relate to the existence of life?

-Entropy plays a critical role in the existence of life. Living systems maintain their organization and structure only by continually increasing entropy overall. They take in low-entropy energy from the environment and expel high-entropy waste, so the entropy of the universe goes up even as the organism stays ordered.

What is Maxwell's demon and what problem does it pose in the context of the second law of thermodynamics?

-Maxwell's demon is a thought experiment that involves a hypothetical entity capable of decreasing the entropy of a system without doing work, seemingly violating the second law of thermodynamics. The problem it poses is how such a demon could exist without increasing the overall entropy of the universe.

What is Landauer's principle and how does it relate to the concept of Maxwell's demon?

-Landauer's principle states that erasing information in a physical system has an unavoidable thermodynamic cost. Erasing one bit at temperature T must dissipate at least kT ln 2 of heat into the environment, which raises the environment's entropy by at least k ln 2. This principle resolves the paradox of Maxwell's demon by showing that the act of information erasure, which the demon would need to perform, necessarily increases the entropy of the universe.
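
The size of Landauer's bound is easy to work out. This short Python sketch assumes room temperature of about 300 K, an illustrative choice. At that temperature, erasing one bit must dissipate at least kT ln 2 of heat, which hands the environment at least k ln 2 of entropy. The helper name `landauer_bound` is hypothetical.

```python
import math

K_B = 1.380649e-23  # Boltzmann's constant, J/K


def landauer_bound(temperature_k: float, bits: float = 1.0) -> float:
    """Minimum heat (J) dissipated when erasing `bits` bits at temperature T: bits * k T ln 2."""
    return bits * K_B * temperature_k * math.log(2)


q_min = landauer_bound(300.0)  # room temperature, roughly 300 K
print(f"Erasing one bit at 300 K dissipates at least {q_min:.3e} J")
print(f"Entropy handed to the environment: {q_min / 300.0:.3e} J/K (= k ln 2)")
```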

Outlines

😀 Introduction to Entropy and Information

Sean Carroll introduces the topic of entropy, emphasizing its relationship with information. He mentions that entropy is multifaceted and challenging to define, playing a crucial role in discussions of time's arrow and the second law of thermodynamics. Carroll also highlights the historical context, involving figures like Boltzmann, Carnot, and Clausius, and touches on the statistical nature of entropy.

📚 Defining Entropy in Classical Statistical Mechanics

Carroll delves into two definitions of entropy within classical statistical mechanics. He discusses the concept of coarse-graining, where macroscopic states encompass numerous microscopic arrangements. The Boltzmann entropy is introduced as a measure of ignorance about a system's microscopic state, quantified by the logarithm of the volume of the macro state in phase space.

🔢 Entropy and Probability Distributions

The video explores the connection between entropy and probability distributions. Carroll contrasts Boltzmann's entropy, which relies on coarse-graining, with Gibbs' entropy, which is based on the probability distribution across phase space. The Gibbs entropy is defined as minus the integral over phase space of the probability density multiplied by its logarithm. This leads to a discussion of the relationship between entropy, knowledge, and information.

🤔 Entropy and the Second Law of Thermodynamics

Carroll examines the second law of thermodynamics in relation to the Gibbs and Boltzmann entropies. He explains that the Gibbs entropy of a closed system stays constant as the system evolves. For Gibbs entropy, the second law is therefore more accurately stated for a system coupled to a heat bath: the change in the system's entropy is greater than or equal to the heat it absorbs from the environment divided by the temperature (ΔS ≥ Q/T). In this form, the second law describes how entropy changes in open systems that exchange heat with a heat bath.
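
The Clausius relation ΔS ≥ Q/T, in which heat is divided by temperature, can be checked with simple arithmetic. In this Python sketch the numbers are illustrative and not taken from the video. Heat Q flows from a hot reservoir to a cold one, and each reservoir is assumed large enough that its temperature stays fixed, so each one's entropy changes by exactly Q/T. The cold reservoir gains more entropy than the hot one loses, so the total entropy increases. Reversing the heat flow would make the total negative, which the second law forbids.

```python
def entropy_change_of_reservoir(heat_in_j: float, temperature_k: float) -> float:
    """Entropy change (J/K) of a large reservoir that absorbs heat Q at fixed T: Q / T."""
    return heat_in_j / temperature_k


Q = 100.0                   # joules flowing from hot to cold (illustrative)
T_HOT, T_COLD = 400.0, 300.0

dS_hot = entropy_change_of_reservoir(-Q, T_HOT)   # hot body loses Q
dS_cold = entropy_change_of_reservoir(Q, T_COLD)  # cold body gains Q
print(f"dS_hot = {dS_hot:.4f} J/K, dS_cold = {dS_cold:.4f} J/K, total = {dS_hot + dS_cold:.4f} J/K")
```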

🌌 Entropy and the Early Universe

The discussion shifts to the entropy of the early universe, addressing misconceptions about whether it was high or low. The early universe resembled a hot gas in thermal equilibrium, which in a box would have high entropy. Carroll argues that its entropy was nevertheless low because of gravity: when gravity matters, a smooth, uniform distribution of matter is a low-entropy configuration. He also touches on the maximum entropy the universe could have had and the implications for the past hypothesis, which holds that the universe began in a low-entropy state.
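
Black hole entropy, listed among the video's keywords, is central to how large the universe's entropy could be. This rough Python sketch uses the standard Bekenstein-Hawking formula S/k = 4πGM²/(ħc) for a non-rotating black hole. The formula and the rounded constants are our addition and are not quoted from the video. With them, a single black hole of one solar mass carries an entropy of order 10^77 k.

```python
import math

# Physical constants in SI units (CODATA values; solar mass approximate).
G = 6.67430e-11          # gravitational constant, m^3 kg^-1 s^-2
HBAR = 1.054571817e-34   # reduced Planck constant, J s
C = 2.99792458e8         # speed of light, m/s
M_SUN = 1.989e30         # solar mass, kg


def black_hole_entropy_over_k(mass_kg: float) -> float:
    """Bekenstein-Hawking entropy S/k = 4 pi G M^2 / (hbar c) of a Schwarzschild black hole."""
    return 4 * math.pi * G * mass_kg**2 / (HBAR * C)


print(f"S/k for a one-solar-mass black hole ~ {black_hole_entropy_over_k(M_SUN):.1e}")
```

Because the entropy grows as M², merging black holes increases their total entropy, which is one reason black holes dominate estimates of how much entropy a region could hold.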

🧐 The Puzzle of Low Entropy in the Early Universe

Carroll acknowledges the mystery of why the early universe had a low-entropy state. He mentions his own speculative work with Jennifer Chen, which attempted to provide a dynamical explanation involving baby universes and quantum nucleation. He calls for more research into this area, emphasizing the lack of a widely accepted theory to explain the initial low entropy of the universe.

🤓 Objections to Boltzmann's Formulation

Carroll presents historical objections to Boltzmann's statistical interpretation of the second law, including Loschmidt's reversibility objection and Zermelo's recurrence objection. He explains the content and implications of these objections and Boltzmann's responses, highlighting the philosophical debates surrounding the nature of entropy and the probabilistic nature of physical laws.
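
Both objections can be seen in the Kac ring, a standard textbook toy model used here for illustration; it is not described in the video. Balls sit on a ring of N sites. At each step every ball moves one site clockwise and changes color if it crosses a marked edge. The dynamics is deterministic and reversible, yet a coarse-grained entropy rises from a low-entropy start. Running the dynamics backward returns the entropy to zero, which is Loschmidt's point. After 2N steps the initial state recurs exactly, which is Zermelo's point. The ring size, marker density, and seed are arbitrary choices, and the helper names are hypothetical.

```python
import math
import random


def kac_step(colors, markers, forward=True):
    """Move every ball one site around the ring, flipping its color (0/1) when it
    crosses a marked edge. Edge i joins site i to site i + 1.
    forward=False runs the same dynamics exactly backward in time."""
    n = len(colors)
    new = [0] * n
    for i, c in enumerate(colors):
        if forward:
            new[(i + 1) % n] = c ^ markers[i]
        else:
            new[(i - 1) % n] = c ^ markers[(i - 1) % n]
    return new


def entropy_over_k(colors):
    """Coarse-grained Boltzmann entropy: S/k = ln W, where W counts arrangements
    with the same number of black balls (color 1)."""
    return math.log(math.comb(len(colors), sum(colors)))


N = 200
rng = random.Random(1)
markers = [1 if rng.random() < 0.1 else 0 for _ in range(N)]  # roughly 10% of edges marked
start = [0] * N                                               # low entropy: all balls white

state, entropies = start, [entropy_over_k(start)]
for _ in range(50):
    state = kac_step(state, markers)
    entropies.append(entropy_over_k(state))
print(f"S/k: {entropies[0]:.1f} at t=0, {entropies[-1]:.1f} at t=50")

# Loschmidt: reverse the dynamics and the state (and its entropy) returns to the start.
back = state
for _ in range(50):
    back = kac_step(back, markers, forward=False)
print("reversed 50 steps back to start:", back == start)

# Zermelo: after 2N steps every ball has crossed every marker twice, so the
# initial low-entropy state recurs.
recur = start
for _ in range(2 * N):
    recur = kac_step(recur, markers)
print("recurrence after 2N steps:", recur == start)
```

The recurrence time here is only 2N because the model is so simple. In realistic many-particle systems, Poincaré recurrence times are vastly longer than any observation time.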

🧠 Boltzmann's Brains and the Anthropic Principle

The video addresses Boltzmann's idea that our observable universe could be a rare fluctuation within an infinite universe that is otherwise at equilibrium, and the resulting concept of Boltzmann brains. Carroll explains the flaw in this reasoning, highlighted by Eddington: small fluctuations are far more likely than large ones. It is therefore vastly more likely for a single observer (a brain) to arise from random fluctuations than a complex entity like a whole person or a planet.
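
The logic behind Eddington's objection is that a fluctuation becomes exponentially less likely the more entropy it removes. The probability of fluctuating into a macrostate is proportional to its W = exp(S/k). This Python sketch uses N fair coins as a stand-in for a large equilibrium system; it is a toy model of our own, not a model of brains or universes. It compares the log-probabilities of a modest fluctuation and a large one away from the 50/50 macrostate. The helper name `log_prob_macrostate` is hypothetical.

```python
import math

N = 1000  # coins (or particles in the two halves of a box); a toy model


def log_prob_macrostate(heads: int, n: int = N) -> float:
    """ln P(macrostate) for fair coins: P = W / 2**n = exp(S/k) / 2**n."""
    return math.log(math.comb(n, heads)) - n * math.log(2)


small = log_prob_macrostate(450)   # mild fluctuation away from 500 heads
large = log_prob_macrostate(100)   # far larger fluctuation toward order
print(f"the small fluctuation is about e^{small - large:.0f} times more likely than the large one")
```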

🔄 Entropy and the Arrow of Time

Carroll argues that the thermodynamic arrow of time, as described by the second law of thermodynamics, underlies all other arrows of time, including the psychological, biological, and cosmological arrows. He discusses the past hypothesis and its role in providing a boundary condition that breaks the time-reversal symmetry, explaining the directionality of time and the possibility of free will.

☀️ Entropy and Life

The video connects the concept of entropy to the existence of life on Earth. Carroll explains how life relies on the increase of entropy, as illustrated by the process of photosynthesis and the use of ATP in cells. He emphasizes the importance of the sun providing low-entropy energy that is then expelled in a higher entropy form, allowing life to maintain its integrity.
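
A back-of-the-envelope Python sketch shows the Sun's role in these terms. The temperatures are approximate values assumed for illustration, not figures from the video. Earth radiates away about as much energy as it absorbs, but at a much lower temperature, so the outgoing radiation carries far more entropy. Thermal radiation at temperature T carries entropy S = (4/3) E / T. The factor 4/3 and the energy E therefore cancel in the ratio of outgoing to incoming entropy.

```python
# Rough values, assumed for illustration: effective temperatures in kelvin.
T_SUN = 5800.0    # approximate temperature of sunlight arriving at Earth
T_EARTH = 255.0   # approximate temperature at which Earth radiates to space

# In steady state Earth radiates away as much energy E as it absorbs.
# Radiation entropy is S = (4/3) E / T, so the 4/3 and E cancel in the ratio.
entropy_ratio = T_SUN / T_EARTH

# Typical photon energy scales with T, so the same energy leaves Earth
# carried by roughly this factor more photons.
print(f"Earth emits about {entropy_ratio:.0f} times the entropy it receives from sunlight")
```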

😈 Maxwell's Demon and the Role of Information

Carroll explores Maxwell's thought experiment in which a demon sorts molecules to lower a system's entropy, seemingly contradicting the second law. He discusses the resolution of this paradox proposed by Landauer and Bennett: erasing information inevitably increases entropy, which upholds the second law of thermodynamics.

📈 Entropy, Information, and Claude Shannon

The video then turns to Claude Shannon's work on information theory and its connection to entropy. Carroll explains Shannon's concept of information content, or surprisal, and how it relates to the probability of symbols in a communication system. He highlights the formal similarity between Shannon's entropy (information entropy) and Gibbs' entropy, noting their different interpretations in communication theory and physics.
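
Shannon's definitions translate directly into code. This Python sketch, with hypothetical function names, computes the surprisal −log₂ p of a single symbol and the Shannon entropy as the average surprisal. Measured in bits, the entropy has the same form as the Gibbs entropy. Multiplying by k ln 2 converts bits to physical entropy units.

```python
import math


def surprisal_bits(p: float) -> float:
    """Information content of an outcome with probability p: -log2 p bits."""
    return -math.log2(p)


def shannon_entropy_bits(probs) -> float:
    """Shannon entropy H = -sum p log2 p: the expected surprisal, in bits."""
    return sum(p * surprisal_bits(p) for p in probs if p > 0)


fair_coin = [0.5, 0.5]
biased_coin = [0.9, 0.1]
print(surprisal_bits(0.1))                # a rare symbol is surprising: about 3.32 bits
print(shannon_entropy_bits(fair_coin))    # 1 bit per toss
print(shannon_entropy_bits(biased_coin))  # about 0.47 bits: more predictable, less information
```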

🤖 Quantum Entropy and Von Neumann's Conception

Carroll introduces the concept of quantum entropy, or entanglement entropy, as developed by von Neumann. He explains that even when the complete state of a quantum system is known, entropy can still arise when considering subsystems that are entangled with one another. This entropy is inherent and does not depend on ignorance or coarse-graining, reflecting a fundamental aspect of quantum mechanics.
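
Entanglement entropy can be computed directly for two qubits. This pure-Python sketch uses hypothetical helper names, and the Bell state is a standard example rather than one quoted from the video. The global state is a known pure state, so it has zero von Neumann entropy. Tracing out qubit B, however, leaves qubit A in a mixed state ρ_A. Its entropy S = −Tr(ρ_A ln ρ_A) equals ln 2 for a maximally entangled pair and zero for an unentangled product state.

```python
import math


def reduced_density_matrix_a(amps):
    """For a two-qubit pure state sum_ij amps[i][j] |i>_A |j>_B, return
    rho_A = Tr_B |psi><psi| = C C^dagger as a 2x2 nested list."""
    return [[sum(amps[i][k] * amps[j][k].conjugate() for k in range(2)) for j in range(2)]
            for i in range(2)]


def von_neumann_entropy_2x2(rho):
    """S = -Tr(rho ln rho) for a 2x2 density matrix, via its two eigenvalues."""
    tr = (rho[0][0] + rho[1][1]).real
    det = (rho[0][0] * rho[1][1] - rho[0][1] * rho[1][0]).real
    disc = math.sqrt(max(tr * tr - 4 * det, 0.0))  # clamp tiny negative rounding error
    eigenvalues = [(tr + disc) / 2, (tr - disc) / 2]
    # Zero eigenvalues contribute nothing, since lam ln lam -> 0 as lam -> 0.
    return sum(-lam * math.log(lam) for lam in eigenvalues if lam > 1e-12)


r = 1 / math.sqrt(2)
bell = [[complex(r), 0j], [0j, complex(r)]]  # (|00> + |11>)/sqrt(2): maximally entangled
product = [[1 + 0j, 0j], [0j, 0j]]           # |0>|0>: not entangled

print(von_neumann_entropy_2x2(reduced_density_matrix_a(bell)), math.log(2))  # equal
print(von_neumann_entropy_2x2(reduced_density_matrix_a(product)))           # no entanglement, zero entropy
```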

Keywords

💡Entropy

💡Information

💡Second Law of Thermodynamics

💡Boltzmann's Entropy Formula

💡Coarse Graining

💡Gibbs Entropy

💡Maxwell's Demon

💡Landauer's Principle

💡Claude Shannon

💡Quantum Mechanics

💡Black Hole Entropy

Highlights

Entropy is closely related to information in a subtle and complex way.

The concept of entropy is tied to the arrow of time and the differences between past and future states.

Entropy is a measure of our ignorance about a system, given the macroscopic features we can observe.

Different definitions of entropy exist, all serving a purpose and applying to different circumstances.

The second law of thermodynamics, which involves entropy, was formulated before the term 'entropy' was even coined.

Boltzmann's definition of entropy is based on coarse-graining and the volume of a macro state in phase space.

Gibbs entropy is defined through a probability distribution and is tied to how much we know about the system.

When the second law is formulated using Gibbs entropy, the entropy of a closed system stays constant over time; an increase appears only once the system exchanges heat with its surroundings.

When a system is coupled to a heat bath, its change in entropy is greater than or equal to the heat exchanged with the outside world divided by the temperature (ΔS ≥ Q/T).

The concept of entropy has been contentious due to its seemingly personal and subjective nature.

The early universe's entropy was low even though its matter was close to thermal equilibrium, because gravity makes a smooth distribution of matter a low-entropy configuration.

The entropy of black holes and its relevance to the maximum entropy of the universe is a significant topic in modern cosmology.

The 'Past Hypothesis' suggests that the universe started in a state of low entropy, which is crucial for understanding the arrow of time.

Maxwell's Demon thought experiment challenges the second law of thermodynamics by proposing a scenario where entropy could decrease without external work.

Landauer's Principle establishes a relationship between the erasure of information and the increase in entropy.

Claude Shannon's information theory introduces a different perspective on entropy in the context of communication and data transmission.

Von Neumann entropy, or entanglement entropy, is a concept from quantum mechanics that deals with the entropy of entangled quantum states.
