Crack A/B Testing Problems for Data Science Interviews | Product Sense Interviews

TLDRIn this informative video, Emma dives into the world of A/B testing, a crucial skill for data scientists. She outlines six key topics, including understanding A/B tests, determining test duration, addressing the multiple testing problem, and managing novelty and primary effects. Emma also discusses interference issues between variants and offers strategies to mitigate them. The video is packed with insights, practical examples, and resources for further learning, making it an invaluable guide for anyone looking to master A/B testing.

Takeaways

- 😀 Emma's channel focuses on helping viewers improve their skills in tackling product sense problems, particularly in A/B testing.

- 📊 A/B testing is a controlled experiment widely used in the industry to make informed product launch decisions, comparing a control group with a treatment group.

- 🧐 Data scientists often face A/B testing questions in interviews, which may cover hypothesis development, test design, result evaluation, and decision-making.

- ⏱ The duration of an A/B test is determined by calculating the sample size, which depends on type 2 error (or power), significance level, and the minimum detectable effect.

- 🔢 A rule of thumb for sample size calculation is provided, and Emma offers a separate video for those interested in the derivation of the formula.

- 🤔 Estimating the difference between treatment and control before running an experiment is challenging and often relies on the minimum detectable effect, determined by stakeholders.

- 🚫 The multiple testing problem arises when conducting several tests simultaneously, increasing the risk of false discoveries, and can be addressed using methods like Bonferroni correction or controlling the false discovery rate (FDR).

- 🆕 The novelty effect and primary effect can influence A/B test results, with users initially reacting differently to new changes, which may not be sustainable over time.

- 🔄 Interference between control and treatment groups can lead to unreliable results, especially in social networks and two-sided markets where user behavior can be influenced by others.

- 🛠 To prevent spillover effects in testing, strategies like geo-based randomization, time-based randomization, network clusters, or ego network randomization can be employed.

- 📚 Emma recommends further learning through a free Udacity course on A/B testing fundamentals and a book titled 'Trustworthy Online Control Experiments' for in-depth knowledge and practical insights.

Q & A

What is the main focus of the video by Emma?

-The video focuses on explaining A/B testing, covering six important topics, answering common questions, and providing resources for further learning.

Why are A/B testing problems often asked in data science interviews?

-A/B testing problems are frequently asked because they are a core competence for data scientists and help evaluate a feature with a subset of users to infer its reception by all users.

What are the three parameters needed to calculate the sample size for an A/B test?

-The three parameters needed are type 2 error or power, the significance level, and the minimum detectable effect.

What is the 'Rule of Sum' for calculating sample size in A/B testing?

-The 'Rule of Sum' states that the sample size is approximately equal to 16 times the sample variance divided by the square of delta (the difference between treatment and control).

How does the minimum detectable effect influence the sample size in an A/B test?

-A larger minimum detectable effect requires fewer samples, as it represents the smallest difference that would matter in practice, thus affecting the required sample size for the test.

What is the problem with using the same significance level for multiple tests?

-Using the same significance level for multiple tests can increase the probability of false discoveries, as the chance of observing at least one false positive increases with the number of tests.

What is the Bonferroni correction and how is it used in dealing with multiple testing problems?

-The Bonferroni correction is a method used to adjust the significance level in multiple testing scenarios by dividing the original significance level by the number of tests, thus reducing the chance of false positives.

What are the primary effect and novelty effect in the context of A/B testing?

-The primary effect is the initial resistance to change, while the novelty effect is the initial attraction to a new feature. Both effects can cause the initial test results to be unreliable as they tend not to last long.

How can interference between control and treatment groups affect A/B test results?

-Interference can lead to unreliable results if users in the control group are influenced by those in the treatment group, especially in social networks or two-sided markets where a network effect can cause spillover between groups.

What are some strategies to prevent spillover between control and treatment groups in A/B testing?

-Strategies include geo-based randomization, time-based randomization, creating network clusters, and ego network randomization, which aim to isolate users in each group to prevent interference.

What resources does Emma recommend for further learning about A/B testing?

-Emma recommends a free online course from Udacity and a book titled 'Trustworthy Online Control Experiments' for in-depth knowledge on running A/B tests and dealing with potential pitfalls.

Outlines

🔍 Introduction to A/B Testing

Emma welcomes viewers to her channel, highlighting the popularity of her video on cracking product sense problems. She introduces the topic of A/B testing, which is often combined with metric questions in data science interviews. Emma plans to cover six key topics on A/B testing, provide common questions and answers, and share resources for further learning. She outlines the video's content, encouraging viewers to skip sections they are already familiar with. The first topic is a basic explanation of A/B testing, its importance in the industry, and its frequent appearance in data science interviews.

📊 Designing A/B Tests: Duration and Sample Size

The second topic delves into the specifics of designing an A/B test, focusing on determining the test's duration. To decide this, one must calculate the sample size, which requires understanding type 2 error (or power), significance level, and the minimum detectable effect. Emma explains the 'rule of sum' formula for sample size and discusses how each parameter affects it. She also touches on the challenges of estimating the difference between treatment and control groups before running the experiment and the importance of the minimum detectable effect in practical scenarios.

🚫 Addressing Multiple Testing and Novelty Effects

Emma addresses the multiple testing problem that arises when conducting tests with multiple variants, explaining the increased risk of false discoveries and how it can be mitigated using methods like the Bonferroni correction or controlling the false discovery rate (FDR). She also discusses the primary and novelty effects that can influence user behavior in response to new features, and how these effects can cause initial test results to be misleading. Strategies to analyze and deal with these effects, such as focusing on first-time users or comparing different user groups, are suggested.

🤔 Dealing with Interference in A/B Testing

The fourth topic tackles the issue of interference between control and treatment groups, which can lead to unreliable test results. Emma provides examples from social networks and two-sided markets to illustrate how network effects and shared resources can influence user behavior and distort the treatment effect. She then presents various strategies to prevent such spillover, including geo-based randomization, time-based randomization, network clustering, and ego network randomization, each with its own applications and limitations.

🛠️ Testing Strategy for Two-Sided Markets and Social Networks

In this section, Emma outlines a testing strategy for a new feature aimed at increasing the number of rides by offering coupons, focusing on preventing spillover effects between control and treatment groups. She suggests methods like geo-based randomization for two-sided markets and network clustering or ego network randomization for social networks. The goal is to isolate the effects of the new feature and accurately measure its impact, considering the unique challenges posed by each type of platform.

📚 Resources for Further Learning on A/B Testing

Concluding the video, Emma recommends two resources for further learning on A/B testing: a free online course from Udacity covering the fundamentals and a book titled 'Trustworthy Online Control Experiments' that provides in-depth knowledge on running effective A/B tests, potential pitfalls, and solutions. She also mentions her plan to summarize the book's content in a future video and invites viewers to share any questions they may have.

Mindmap

Keywords

💡A/B Testing

💡Control Group

💡Treatment Group

💡Sample Size

💡Type 2 Error

💡Significance Level

💡Minimum Detectable Effect

💡Multiple Testing Problem

💡Bonferroni Correction

💡False Discovery Rate (FDR)

💡Novelty Effect

💡Primary Effect

💡Interference

💡Network Effect

Highlights

The most popular video on Emma's channel focuses on cracking product sense problems, a common area where viewers seek more help.

Today's video discusses A/B testing, a frequent topic in data science interviews often paired with metric questions.

Emma will cover six important topics of A/B testing, providing insights into commonly asked questions and answers.

A/B testing, also known as controlled experiments, is widely used in the industry for product launch decisions.

A/B testing allows tech companies to evaluate features with subsets of users to infer overall user reception.

The duration of an A/B test is determined by obtaining the sample size and considering type 2 error, power, significance level, and minimum detectable effect.

The 'rule of sum' formula for sample size is discussed, with an offer to learn more through another video.

The impact of each parameter on sample size is explained, including the need for more samples with larger sample variance.

The multiple testing problem is introduced, explaining the increased probability of false discoveries with more variants.

Bonferroni correction and controlling the false discovery rate (FDR) are presented as methods to deal with multiple testing problems.

Primary effect and novelty effect are discussed as common issues in A/B testing that can affect the reliability of results.

Strategies to deal with primary and novelty effects include running tests on first-time users or comparing first-time vs. old users.

Interference between control and treatment groups can lead to unreliable results, especially in social networks and two-sided markets.

Network effect and shared resources in two-sided markets can cause the post-launch effect to differ from the treatment effect.

Designing tests to prevent spillover between control and treatment groups is crucial, with several strategies outlined.

Geo-based randomization, time-based randomization, network clusters, and ego network randomization are suggested as solutions to prevent interference.

Emma recommends two resources for further learning: a free Udacity course and the book 'Trustworthy Online Control Experiments'.

The video concludes with an invitation for viewers to ask questions and a promise of future content summarizing the recommended book.

Transcripts

Browse More Related Video

A/B Testing Mistakes to Avoid in Your Data Science Interview: Tips and Tricks!

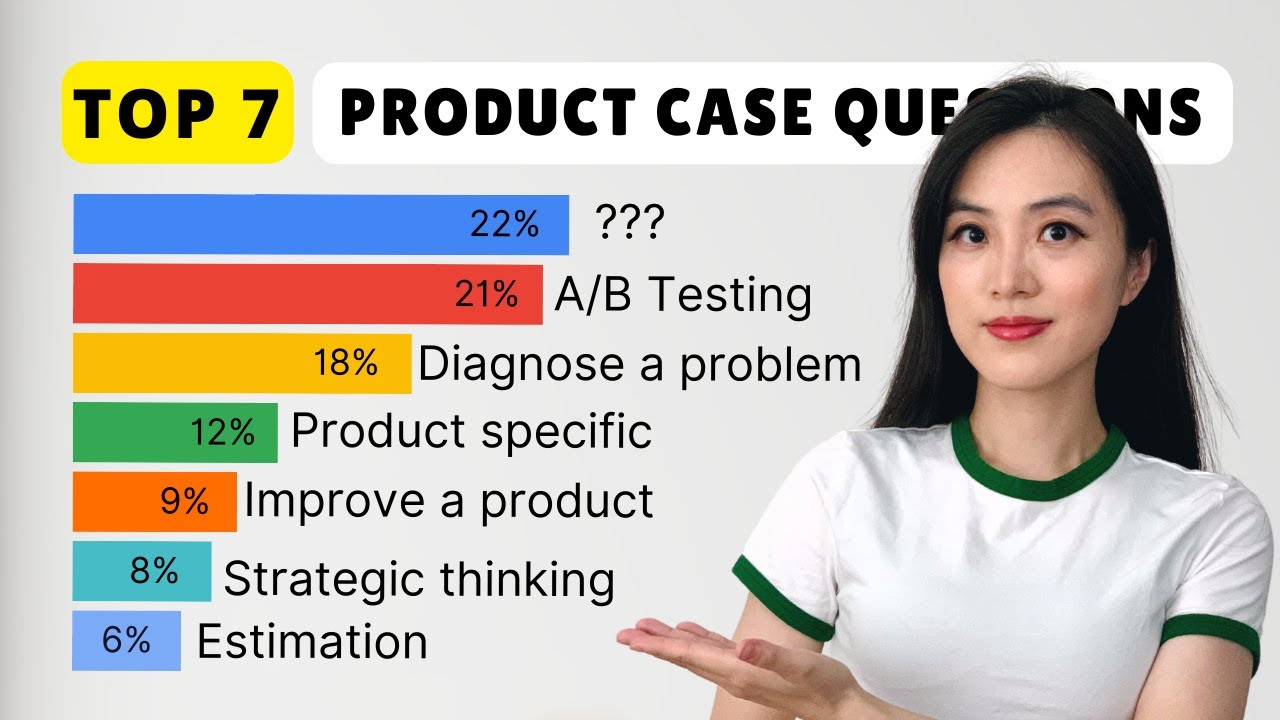

Ace Product/Business Case Interview Questions: A Data-driven Approach for Data Scientists

the truth about UC Davis....

Analyze trends for an online store | Google Digital Marketing & E-commerce Certificate

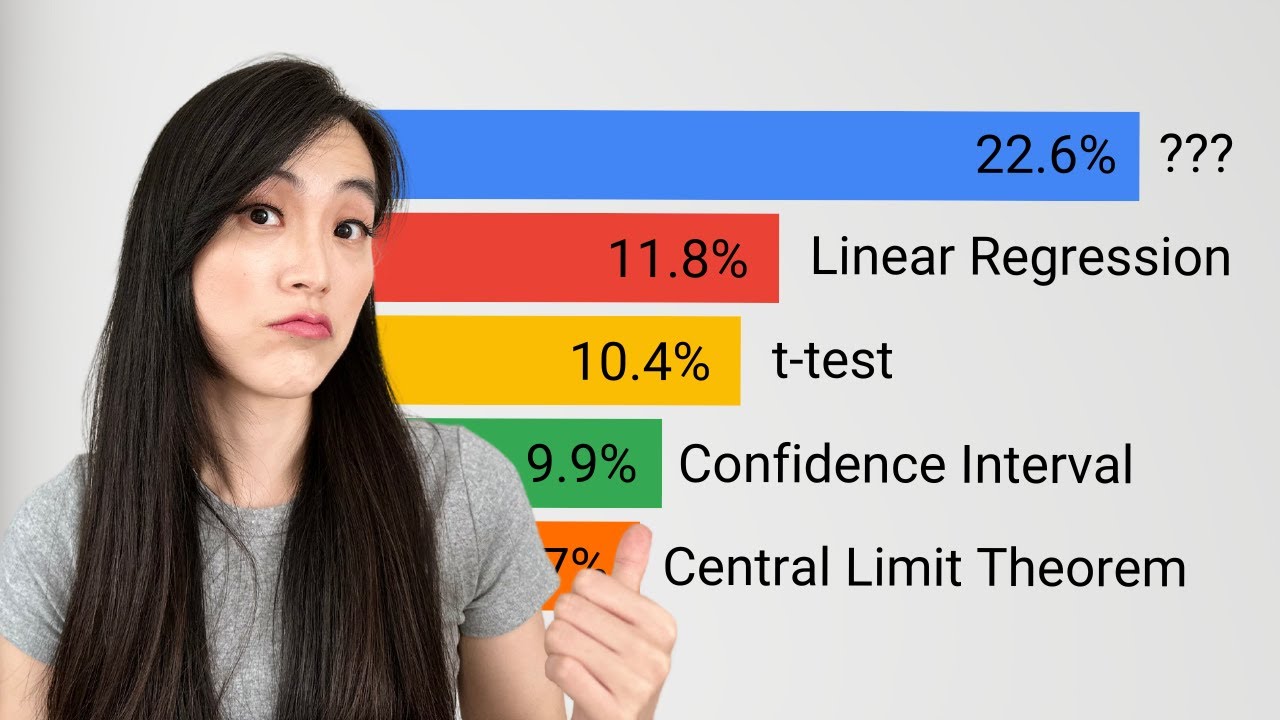

Ace Statistics Interviews: A Data-driven Approach For Data Scientists

A/B Testing Analysis Made Easy: How to Use Hypothesis Testing for Data Science Interviews!

5.0 / 5 (0 votes)

Thanks for rating: