AI experts make predictions for 2040. I was a little surprised. | Science News

TLDR

A recent UK study interviewed 12 software development experts using the Delphi method to predict the state of AI by 2040. Topics include nations, particularly the US and China, cutting corners on AI safety, potential AI-caused megadeaths, the infancy of quantum computing's commercial use, AI-generated disinformation, and the tokenization of internet assets through non-blockchain distributed services. The experts recommend increased AI regulations, safety measures, and interdisciplinary education to address these challenges.

Takeaways

- 🤖 **AI Safety Concerns**: Experts predict that by 2040, corners will be cut on AI safety because of international competition, particularly between the United States and China.

- 💥 **AI-Caused Megadeaths**: Some experts estimate that AI could be responsible for events causing at least a million deaths by 2040, with others disagreeing on the scale but not the possibility.

- 🌐 **Quantum Computing**: There's a consensus that by 2040, quantum computing will only just be in use, with disagreement on its commercial relevance.

- 📰 **Truth and Fiction**: AI will make discerning truth from fiction increasingly difficult across various domains, potentially leading to an arms race between AI creating and identifying fake content.

- 🎭 **Philip K. Dick's World**: The future is likened more to the dystopian worlds of Philip K. Dick's novels, where reality is questioned, rather than George Orwell's totalitarian regime.

- 🖋️ **Digital Ownership (Tokenship)**: By 2040, owning internet assets through tokenship, akin to NFTs, will become common, but not necessarily through blockchain technology.

- 🔍 **Complexity and Accountability**: The increasing complexity of software and AI will make it harder to distinguish between accidents and deliberate manipulation, leading to a need for ambient accountability.

- 📚 **Regulations and Education**: Experts propose stricter AI safety regulations, built-in safety requirements, outcome checks in software development, better education, and social science input to understand the impact of these changes.

- 🚀 **Scientific Publications**: AI's potential to produce and disseminate low-quality papers and fake data is an underestimated issue that could undermine fact-checking and scientific credibility.

- 📖 **Nautilus Magazine**: Nautilus is a well-regarded science magazine covering a broad range of topics, offering in-depth stories and high-quality content from scientists and experts.

- 💡 **Post-Quantum Gravity**: The speaker mentions a new article about Jonathan Oppenheim's theory of post-quantum gravity, highlighting the magazine's coverage of cutting-edge scientific theories.

Q & A

What is the main topic of discussion in the transcript?

-The main topic of discussion is the potential dangers of AI and the findings of a report based on interviews with experts on software development, using the Delphi method.

What is the Delphi method and why was it created?

-The Delphi method is a systematic interview process named after the Oracle of Delphi, created by the RAND Corporation in the 1950s to make better use of experts' knowledge. It involves conducting in-depth interviews, transcribing them and sharing them anonymously with the other participants, who then add opinions and further information, followed by additional rounds of interviews.

According to the experts interviewed, what is a significant concern regarding AI safety by 2040?

-The experts agree that by 2040, corners will be cut in AI safety, not due to company competition but because of competition between nations, particularly the United States and China.

What is the term used in the report to describe events causing at least a million deaths by AI by 2040?

-The term used is 'megadeath,' which refers to events causing at least a million deaths.

What is the experts' consensus on the use of quantum computing by 2040?

-The experts agree that by 2040, quantum computing will only just be coming into use. They disagree about the degree of its application; it currently has no commercial relevance and is not expected to have any by 2040.

What challenges do the experts foresee regarding truth and fiction in the context of AI advancements?

-The experts are concerned that AI will make it increasingly difficult to distinguish truth from fiction in various domains, leading to an arms race between AIs producing fake content and AIs trying to identify such content, potentially misidentifying truth as fake.

How does the participant describe the future world in terms of reality and truth?

-The participant describes the future world as a Philip K. Dick reality, where characters frequently question the nature of reality, implying that it will be challenging to discern what is true.

What does the term 'tokenship' refer to in the context of the experts' predictions?

-Tokenship refers to a digital record, which is associated with NFTs (non-fungible tokens). The experts predict that by 2040, it will become common to buy and own internet assets through tokenship, facilitated not by blockchain technology but through other distributed services.

What is the experts' view on the impact of increasing software complexity on accident versus manipulation differentiation?

-The experts believe that the increasing complexity of software, especially AI, will make it difficult to differentiate between accidents and deliberate manipulation, as no human will be able to fully understand what is happening, likened to a modern-day Kafkaesque scenario.

What solutions do the experts propose to address the issues identified?

-The experts propose regulations on AI safety, built-in safety requirements, outcome checks on software development, better education for people in relevant positions, and more input from the social sciences on the potential impacts of these technological changes.

What is the speaker's concern about AI's impact on scientific publications?

-The speaker is concerned that AI will make it easier to produce low-quality papers and fake data, which could spread globally. This is a special case of fake news and misinformation that could undermine fact-checkers, as they heavily rely on scientific publications.

How does the speaker describe Nautilus magazine and what is its scope?

-The speaker describes Nautilus as a science magazine that covers a wide range of topics, from astronomy to economics, history, neuroscience, philosophy, and physics. It features articles written by scientists who provide inside stories and is known for its high-quality writing and graphic design.

Outlines

🤖 AI Safety Concerns and National Competition

This paragraph discusses the concerns raised by experts about the future of AI safety, emphasizing the role of national competition rather than corporate rivalry. The experts, interviewed using the Delphi method, predict that by 2040, AI development will be rushed, leading to potential megadeath events. They also disagree on the extent of quantum computing's impact by 2040, with some seeing only limited commercial relevance. Additionally, the paragraph touches on the challenges of discerning truth from AI-generated content, leading to a potential 'Philip K. Dick world,' where reality becomes increasingly ambiguous.

📚 The Future of Scientific Publications and AI

The second paragraph focuses on the potential negative impact of AI on scientific publications. It suggests that AI could facilitate the creation and dissemination of low-quality papers and fake data globally, which is a concerning development as fact-checkers rely heavily on scientific publications. The speaker argues that this issue is an underestimated aspect of fake news and misinformation, as the erosion of trust in scientific sources could have far-reaching consequences. The paragraph concludes with a mention of the speaker's recent article in Nautilus magazine, which discusses Jonathan Oppenheim's new theory of post-quantum gravity, and promotes the magazine as a valuable source of science news across various disciplines.

Keywords

💡AI safety

💡Delphi method

💡Quantum computing

💡Fake content

💡Tokenship

💡Software complexity

💡Regulations

💡Scientific publication

💡Fake news

💡Nautilus magazine

💡Post-quantum gravity

Highlights

A new report discusses the dangers of AI and the fears surrounding it, based on interviews with experts.

The study was conducted using the Delphi method, a systematic interview process with experts.

The Delphi method is named after the Oracle of Delphi, which dates back some 2500 years.

Developed by the RAND Corporation, the Delphi method aims to make better use of expert knowledge.

Experts were asked about the state of software development by 2040, leading to 5 main points of agreement.

There is a consensus that corners will be cut on AI safety by 2040 due to national, not corporate, competition.

Some experts predict AI could cause a 'megadeath' event by 2040, with at least a million deaths.

Quantum computing is expected to have limited commercial relevance by 2040, according to the experts.

AI's impact on truth and fiction is a major concern, potentially leading to an arms race in fake content production and detection.

Experts foresee a future similar to Philip K. Dick's dystopian novels, with reality becoming increasingly uncertain.

By 2040, owning internet assets through tokenship, like NFTs, will become common.

Tokenization will likely not rely on blockchain technology but on other distributed services.

The complexity of software and AI will make distinguishing between accidents and deliberate manipulation difficult.

The experts propose increased regulations, built-in safety requirements, and outcome checks for software development.

There is a call for better education and social science input to understand the impact of technological changes.

AI's potential to produce and spread low-quality papers and fake data is a concern, impacting scientific publications and fact-checking.

The discussion emphasizes the importance of addressing these challenges to prevent a dystopian future.

Transcripts

Browse More Related Video

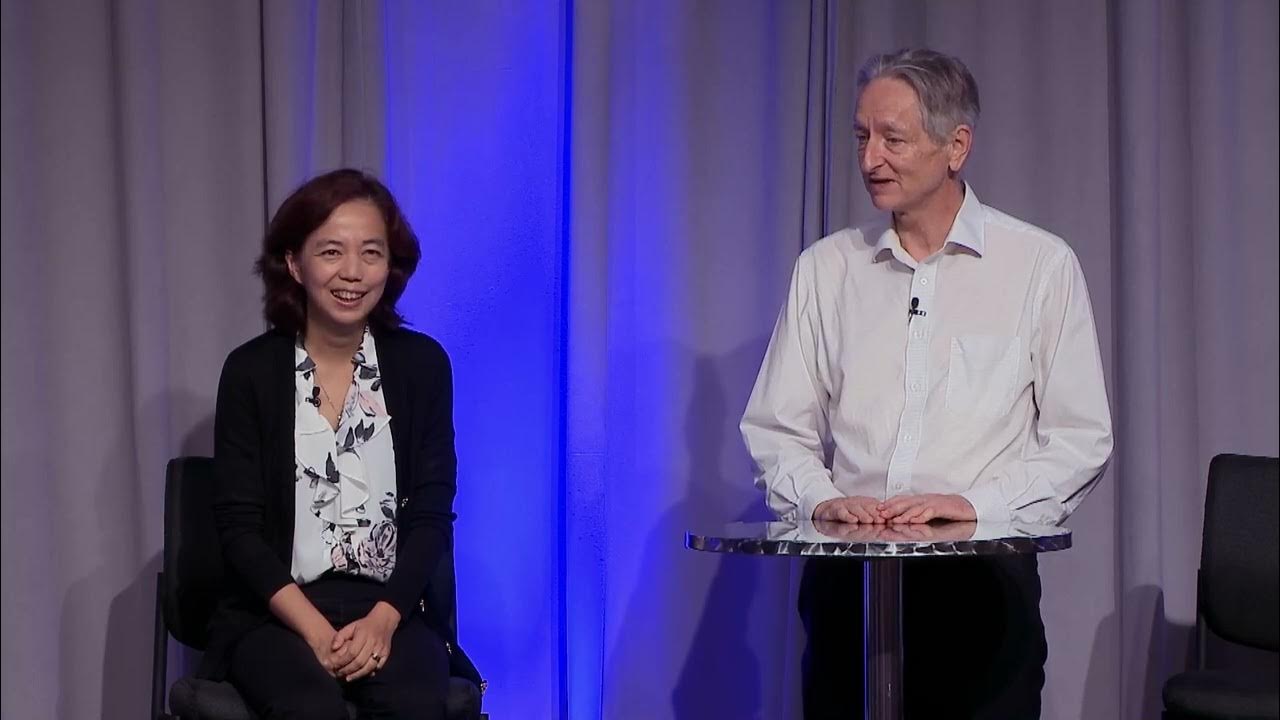

Geoffrey Hinton in conversation with Fei-Fei Li — Responsible AI development

Artificial intelligence and algorithms: pros and cons | DW Documentary (AI documentary)

Rapid AI Progress Surprises Even Experts: Survey just out

The Future of Artificial Intelligence

2024 AI+Education Summit: What do Educators Need from AI?

Michio Kaku Breaks in Tears "Quantum Computer Just Shut Down After It Revealed This"

5.0 / 5 (0 votes)

Thanks for rating: